Having been established 25 months ago, added 5 standards to its game bag, and submitted 4 of them to IEEE for adoption, what is MPAI's next challenge? The answer is that MPAI has more than one challenge in its viewfinder. This post reports on the first of them: the next standards MPAI is working on.

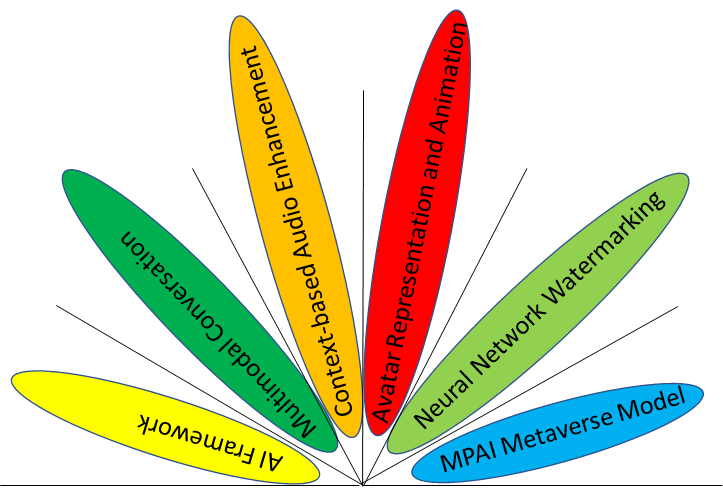

AI Framework (MPAI-AIF). Version 1 (V1) of this standard specifies an environment in which non-monolithic, component-based AI applications are executed. The new Version 2 (V2) adds a set of APIs that let an application developer select a security level or implement a particular security solution.

Context-based Audio Enhancement (MPAI-CAE). One MPAI-CAE V1 use case is Enhanced Audioconference Experience, where the remote end can correctly recreate the sound sources by using the Audio Scene Description produced at the transmitting side. The new (V2) standard targets environments more challenging than a room, such as a human outdoors talking to a vehicle, where the human's speech must be as clean as possible. This requires a more powerful audio scene description.

Multimodal Conversation (MPAI-MMC). One MPAI-MMC V1 use case is a machine that talks to a human and extracts the human's emotional state from their text, speech, and face to improve the quality of the conversation. The new (V2) standard widens the understanding of the human's internal state by introducing Personal Status, which combines emotion, cognitive state, and social attitude. MPAI-MMC applies it to three new use cases: Conversation about a Scene, Virtual Secretary, and Human-Connected Autonomous Vehicles Interaction (which uses the MPAI-CAE V2 technology).

Avatar Animation and Representation (MPAI-ARA). The new (V1) MPAI-ARA standard addresses several areas where the appearance of a human is mapped to an avatar model. One example is the Avatar-Based Videoconference Use Case, where an avatar is expected to faithfully reproduce the appearance of a human participant. Another is a machine conversing with humans that displays itself as an avatar showing a Personal Status consistent with the conversation.

Neural Network Watermarking (MPAI-NNW). The new (V1) MPAI-NNW standard specifies methodologies to evaluate neural network watermarking technologies in the following areas:

- The impact on the performance of a watermarked neural network (and its inference).

- The ability of the detector/decoder to detect/decode a payload when the watermarked neural network has been modified.

- The computational cost of injecting, detecting, or decoding a payload in the watermark.
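The three evaluation areas above can be illustrated with a small sketch. This is not the MPAI-NNW methodology itself: the function names, the sign-based toy watermark, and the Gaussian-noise modification are all assumptions made for illustration, and the standard may define its measures differently.

```python
import random
import time


def performance_impact(baseline_score, watermarked_score):
    """Area 1: drop in task performance after watermarking (positive = worse)."""
    return baseline_score - watermarked_score


def bit_error_rate(payload, decoded):
    """Area 2: fraction of payload bits the decoder recovered incorrectly."""
    if len(payload) != len(decoded):
        raise ValueError("payload and decoded bits must have the same length")
    if not payload:
        raise ValueError("payload must not be empty")
    errors = sum(p != d for p, d in zip(payload, decoded))
    return errors / len(payload)


def timed(fn, *args):
    """Area 3: return (result, elapsed seconds) as a crude cost proxy."""
    start = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - start


# Hypothetical toy watermark (an assumption, not an MPAI technology):
# payload bit i is stored in the sign of weight i.
def embed(weights, payload):
    marked = list(weights)
    for i, bit in enumerate(payload):
        marked[i] = abs(marked[i]) if bit else -abs(marked[i])
    return marked


def decode(weights, n_bits):
    return [1 if w > 0 else 0 for w in weights[:n_bits]]


def add_noise(weights, sigma, rng):
    """Simulated modification of the watermarked network."""
    return [w + rng.gauss(0.0, sigma) for w in weights]


if __name__ == "__main__":
    rng = random.Random(0)
    weights = [rng.uniform(0.5, 1.0) for _ in range(64)]
    payload = [rng.randint(0, 1) for _ in range(32)]

    marked, inject_cost = timed(embed, weights, payload)
    modified = add_noise(marked, 0.5, rng)
    decoded, decode_cost = timed(decode, modified, len(payload))

    print("bit error rate after modification:", bit_error_rate(payload, decoded))
    print("injection cost (s):", inject_cost, "decoding cost (s):", decode_cost)
```

In a real evaluation, the baseline and watermarked scores passed to `performance_impact` would come from running both networks on the same test set, and the modifications would be the ones the evaluation procedure calls for rather than simple additive noise.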

MPAI Metaverse Model (MPAI-MMM). The new (V1) MPAI-MMM Technical Report identifies, organises, defines, and exemplifies functionalities generally considered useful to the metaverse, without assuming that a specific metaverse implementation supports any of them.

These are all ambitious targets, but the work is supported by the submissions received in response to the relevant Calls for Technologies and by MPAI's internal expertise.

This is the first of the current MPAI objectives, and it should be reason enough to join MPAI now. Read all 7 good reasons on the MPAI blog.