Improved Health Services with AI

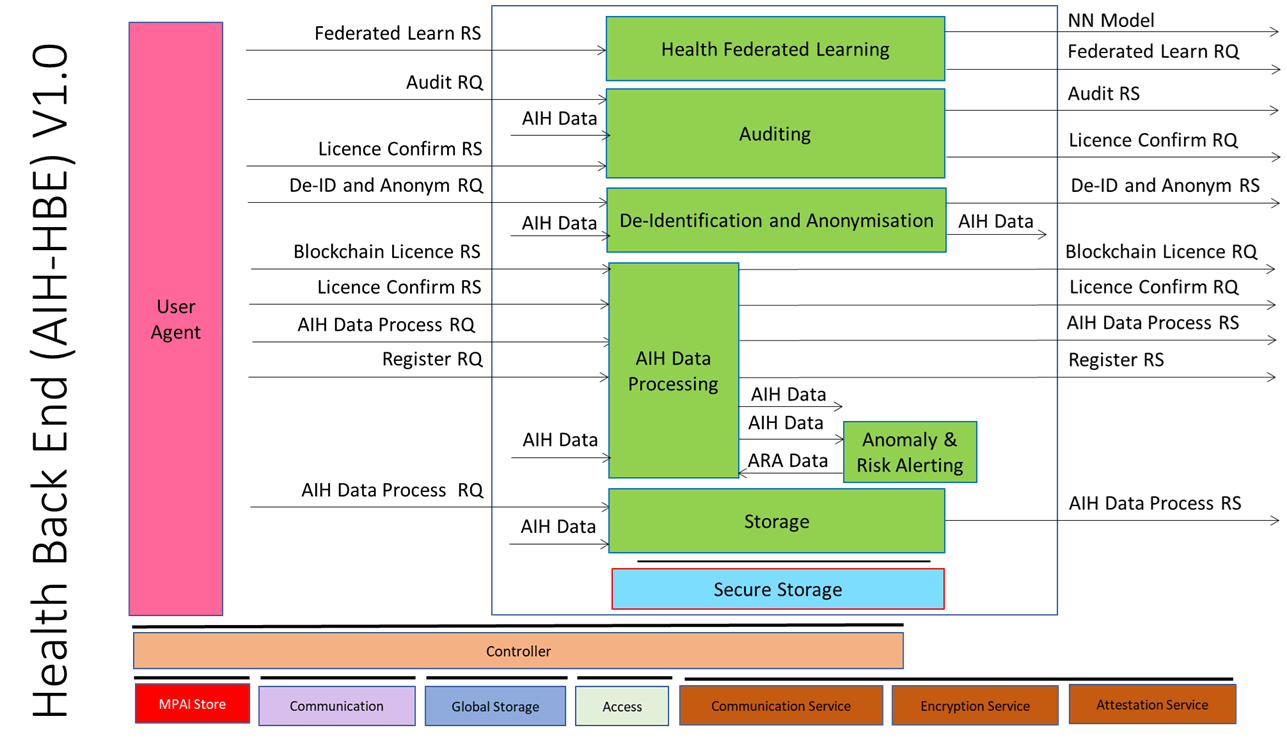

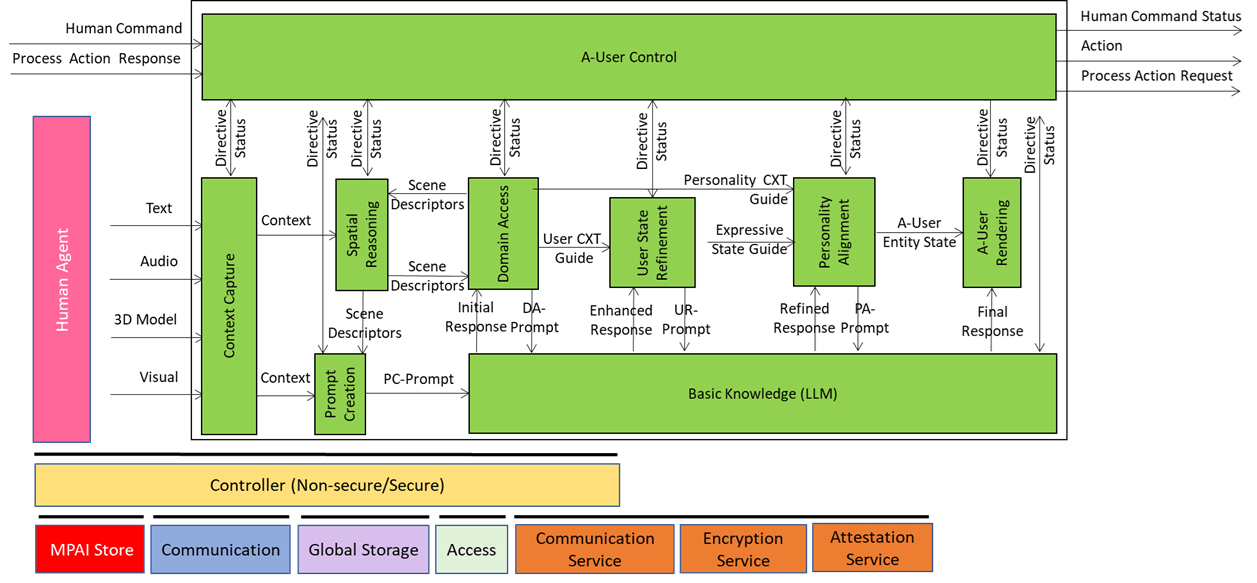

The 64th MPAI General Assembly has approved the publication of Technical Specification: AI for Health (MPAI-AIH) – Health Secure Platform (AIH-HSP) V1.0 with a request for Community Comments, which must reach the MPAI Secretariat by 16 March 2026. This paper introduces the proposed standard to help those who wish to review and comment on AIH-HSP. The Health Secure Platform specifies the architecture of a platform that offers health-related services and enables the following functionalities: End Users use AIH-HSP…