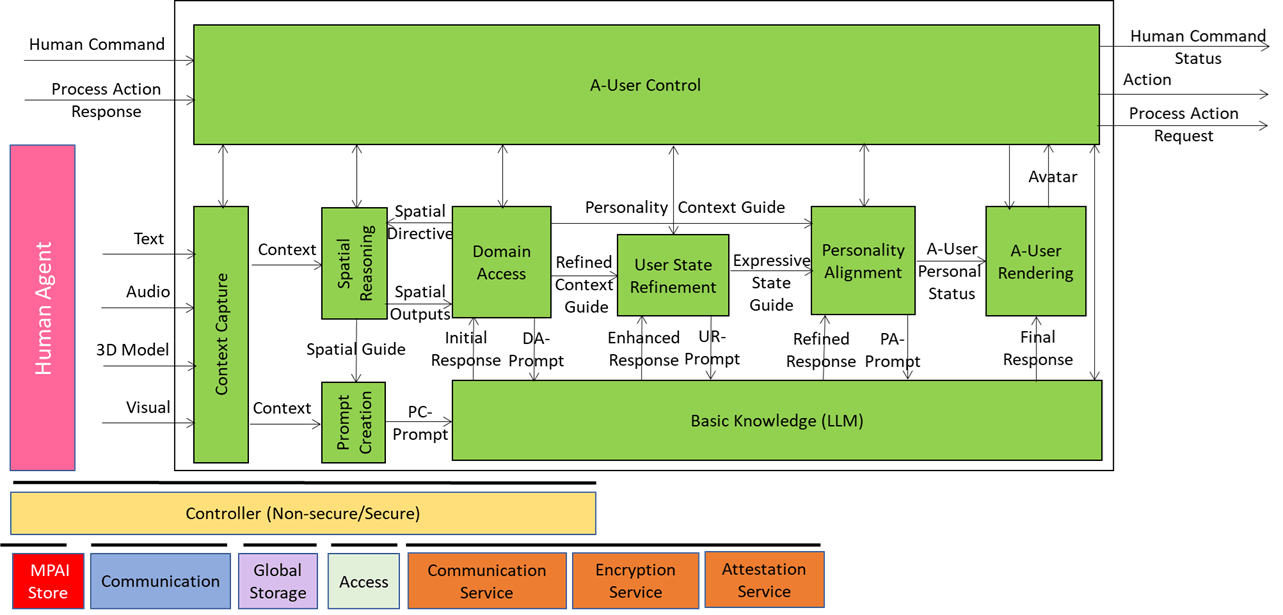

We have already presented the system diagram of the Autonomous User (A-User), an autonomous agent able to move and interact (converse, etc.) with another User in a metaverse. The latter User may also be an A-User or may be under the direct control of a human and is thus called a Human-User (H-User). The A-User acts as a “conversation partner in a metaverse interaction” with the User.

This is the first of a planned sequence of posts that aim to illustrate the architecture of an A-User in more depth and to provide an easy entry point for those who wish to respond to the MPAI Call for Technology on Autonomous User Architecture.

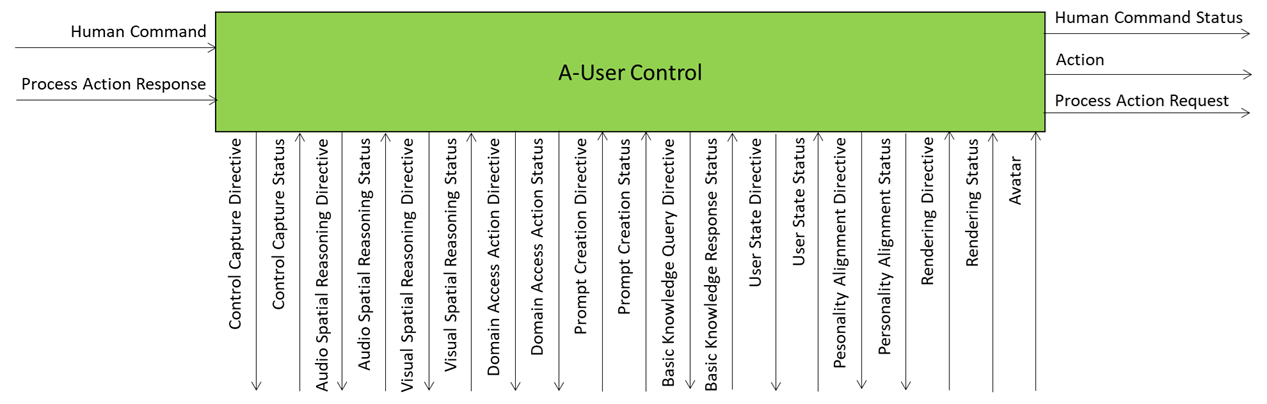

A-User Control is the general commander of the A-User system making sure the Avatar behaves like a coherent digital entity aware of the rights it can exercise in an instance of the MPAI Metaverse Model – Architecture (MMM-TEC) standard. The command is actuated by various signals exchanged with the AI-Modules (AIM) composing the Autonomous User.

At its core, A-User Control decides what the A-User should do, which AIM should do it, and how it should do it – all while respecting the Rights granted to the A-User and the Rules defined by the M-Instance. A-User Control either executes an Action directly or delegates it to another Process in the metaverse to carry it out.
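The decision step described above can be pictured with a minimal Python sketch. It is not the interface specified by MPAI: the class and field names (`Action`, `AUserControl`, `rights`, `rules`, `local_actions`) are hypothetical, Rights and Rules are reduced to plain sets of action names, and the assumption that Rules are checked before Rights is made only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Action:
    """A hypothetical request: what to do and where."""
    name: str    # e.g. "speak" or "move"
    target: str  # e.g. an M-Location identifier


@dataclass
class AUserControl:
    """Toy model of the decision step (illustrative names only)."""
    rights: set[str]   # action names the A-User has been granted
    rules: set[str]    # action names the M-Instance forbids
    local_actions: set[str] = field(default_factory=set)  # executed directly

    def decide(self, action: Action) -> str:
        # Assumption: M-Instance Rules are checked before granted Rights.
        if action.name in self.rules:
            return "rejected: forbidden by M-Instance Rules"
        if action.name not in self.rights:
            return "rejected: no Right granted"
        if action.name in self.local_actions:
            return f"executed directly: {action.name} at {action.target}"
        # Anything permitted but not handled locally goes to another Process.
        return f"delegated to another Process: {action.name} at {action.target}"


control = AUserControl(
    rights={"speak", "trade"},
    rules={"move"},
    local_actions={"speak"},
)
print(control.decide(Action("speak", "lobby")))
print(control.decide(Action("trade", "market")))
```

In this sketch the same check guards both paths, so an Action is either refused, executed by A-User Control itself, or handed to another Process.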

A-User Control is not just about triggering actions. A-User Control also manages the operation of its AIMs, for instance A-User Rendering, which can turn text produced by the Basic Knowledge (LLM) and the Personal Status selected by Personality Alignment into a speaking and gesturing Avatar. A-User Control sends shaping commands to A-User Rendering, ensuring the Avatar’s behaviour aligns with metaverse-generated cues and contextual constraints.

A-User Control is not independent of human influence. The human, i.e., the A-User “owner”, can override, adjust, or steer its behaviour. This makes A-User Control a hybrid system: autonomous by design, but open to human modulation when needed.

Control begins when A-User Control triggers Context Capture to perceive the current M-Location, the spatial zone of the metaverse where the User is active. That snapshot, called Context, includes spatial descriptors and a readout of the human’s cognitive and emotional posture called User State. From there, the two Spatial Reasoning components, Audio and Visual, use Context to analyse the scene and send their outputs to Domain Access and Prompt Creation, which enrich the User’s input and guide the A-User’s understanding.
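The flow from Context Capture to Prompt Creation can be sketched roughly as follows. Everything here is an assumption made for illustration: the function names, the shape of `Context`, and the fixed snapshot returned by `context_capture` are invented, and Domain Access is left out to keep the example short.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Snapshot of an M-Location (field shapes are illustrative assumptions)."""
    spatial: dict    # spatial descriptors, split here into audio and visual
    user_state: str  # readout of the User's cognitive and emotional posture


def context_capture(m_location: str) -> Context:
    # Stand-in for real perception: returns a fixed, made-up snapshot.
    return Context(
        spatial={
            "location": m_location,
            "audio": ["voice ahead"],
            "visual": ["table", "door"],
        },
        user_state="curious",
    )


def audio_spatial_reasoning(ctx: Context) -> list:
    return [f"heard: {s}" for s in ctx.spatial["audio"]]


def visual_spatial_reasoning(ctx: Context) -> list:
    return [f"seen: {o}" for o in ctx.spatial["visual"]]


def prompt_creation(user_input: str, ctx: Context, audio: list, visual: list) -> str:
    # Enrich the User's input with the scene findings and the User State.
    scene = "; ".join(audio + visual)
    return f"[scene: {scene}] [user state: {ctx.user_state}] {user_input}"


def run_once(m_location: str, user_input: str) -> str:
    ctx = context_capture(m_location)
    audio = audio_spatial_reasoning(ctx)
    visual = visual_spatial_reasoning(ctx)
    return prompt_creation(user_input, ctx, audio, visual)


print(run_once("lobby", "Where is the exit?"))
```

The point of the sketch is the ordering: one Context snapshot feeds both Spatial Reasoning components, and their outputs are merged with the User State before the User’s input reaches the language model.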

As reasoning flows through Basic Knowledge, Domain Access, and User State Refinement, A-User Control ensures that every action, rendering, and modulation is aligned with the A-User’s operational logic.

In summary, A-User Control is the executive function of the A-User: part orchestrator, part gatekeeper, part interpreter. It is the reason the Avatar doesn’t just speak: it speaks with purpose, permission, and precision, aware of both the spatial and the User components of the Context.

Stay tuned for more introductions to the world of the Autonomous User Architecture.

Responses to the Call must reach the MPAI Secretariat (secretariat@mpai.community) by 2025/01/21.

To learn more, register to attend the online presentations of the Call on 17 November at 9 UTC (https://tinyurl.com/y4antb8a) and 16 UTC (https://tinyurl.com/yc6wehdv).