Geneva, Switzerland – 19 July 2022. Today the international, non-profit, unaffiliated Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) standards developing organisation has concluded its 22nd General Assembly. Among the outcomes is the publication of three Calls for Technologies supporting the Use Cases and Functional Requirements identified for extensions of two existing standards – AI Framework and Multimodal Conversation – and for a new standard – Neural Network Watermarking.

Each of the three Calls is accompanied by two documents. The first document identifies the Use Cases whose implementation the standard is intended to enable and the Functional Requirements that the proposed data formats and associated technologies are expected to support.

The extended AI Framework standard (MPAI-AIF V2) will retain the functionalities specified by Version 1 and will enable the components of the Framework to access security functionalities.

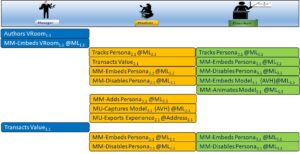

The extended Multimodal Conversation standard (MPAI-MMC V2) will enable a variety of new use cases, such as separation and location of audio-visual objects in a scene (e.g., human beings, their voices and generic objects); the ability of a party in one metaverse (metaverse1) to import an environmental setting and a group of avatars from another (metaverse2); and representation and interpretation of the visual features of a human to extract information about their internal state (e.g., emotion) or to accurately reproduce the human as an avatar.

Neural Network Watermarking (MPAI-NNW) will provide the means to assess whether the insertion of a watermark deteriorates the performance of a neural network; to measure how well a watermark detector can detect the presence of a watermark and how well a watermark decoder can retrieve the payload; and to quantify the computational cost of injecting, detecting, and decoding a payload.

The second document accompanying a Call for Technologies is the Framework Licence for the standard that will be developed from the technologies submitted in response to the Call. The Framework Licence is a licence without critical data such as costs, dates, and rates.

The document packages of the Calls can be found on the MPAI website.

Responses to the Calls should be submitted to the MPAI secretariat by 23:39 UTC on 10 October 2022.

MPAI develops data coding standards for applications that have AI as the core enabling technology. Any legal entity supporting the MPAI mission may join MPAI, if able to contribute to the development of standards for the efficient use of data.

So far, MPAI has developed 5 standards (the first five rows of the table below), is currently engaged in extending 2 of those approved standards (AI Framework and Multimodal Conversation), and is developing another 10 standards (the remaining rows).

| Name of standard | Acronym | Brief description |

| AI Framework | MPAI-AIF | Specifies an infrastructure enabling the execution of implementations and access to the MPAI Store. |

| Context-based Audio Enhancement | MPAI-CAE | Improves the user experience of audio-related applications in a variety of contexts. |

| Compression and Understanding of Industrial Data | MPAI-CUI | Predicts the company’s performance from governance, financial, and risk data. |

| Governance of the MPAI Ecosystem | MPAI-GME | Establishes the rules governing the submission of and access to interoperable implementations. |

| Multimodal Conversation | MPAI-MMC | Enables human-machine conversation emulating human-human conversation. |

| Avatar Representation and Animation | MPAI-ARA | Specifies descriptors of avatars impersonating real humans. |

| Connected Autonomous Vehicles | MPAI-CAV | Specifies components for Environment Sensing, Autonomous Motion, and Motion Actuation. |

| End-to-End Video Coding | MPAI-EEV | Explores the promising area of AI-based “end-to-end” video coding for longer-term applications. |

| AI-Enhanced Video Coding | MPAI-EVC | Improves existing video coding with AI tools for short-to-medium term applications. |

| Integrative Genomic/Sensor Analysis | MPAI-GSA | Compresses high-throughput experiments’ data combining genomic/proteomic and other data. |

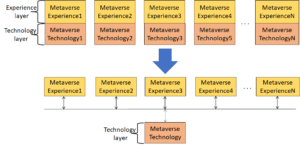

| Mixed-reality Collaborative Spaces | MPAI-MCS | Supports collaboration of humans represented by avatars in virtual-reality spaces. |

| Neural Network Watermarking | MPAI-NNW | Measures the impact of adding ownership and licensing information to models and inferences. |

| Visual Object and Scene Description | MPAI-OSD | Describes objects and their attributes in a scene. |

| Server-based Predictive Multiplayer Gaming | MPAI-SPG | Trains a network to compensate data losses and detects false data in online multiplayer gaming. |

| XR Venues | MPAI-XRV | XR-enabled and AI-enhanced use cases where venues may be both real and virtual. |

Please visit the MPAI website, contact the MPAI secretariat for specific information, subscribe to the MPAI Newsletter and follow MPAI on social media: LinkedIn, Twitter, Facebook, Instagram, and YouTube.

Most importantly: please join MPAI, share the fun, build the future.