Fifteen months ago, MPAI started an investigation into AI-based End-to-End Video Coding (EEV), a new approach to video coding that is not based on traditional architectures. Recently published results show that Version 0.3 of the MPAI-EEV Reference Model (EEV-0.3) generally outperforms the MPEG-HEVC video coding standard when applied to the MPAI set of high-quality drone video sequences.

This has now been superseded by the news that the soon-to-be-released EEV-0.4 subjectively outperforms the MPEG-VVC codec in the low-delay P configuration. Join the online presentation of MPAI EEV-0.4

At 15 UTC on the 1st of March 2023, Place

You will learn about the model, its performance, and its planned public release for both training and testing, as well as how you can participate in the EEV meetings and contribute to achieving the amazing promises of end-to-end video coding.

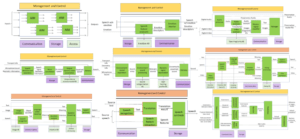

The main features of EEV-0.4 are:

- A new model that decomposes motion into intrinsic and compensatory motion. The former originates from the implicit spatiotemporal context hidden in the reference pictures and does not add to the bit budget; the latter plays a structural refinement and texture enhancement role and requires bits.

- Inter prediction is performed in the deep feature space in the form of progressive temporal transition, conditioned on the decomposed motion.

- The framework is end-to-end optimized together with the residual coding and restoration modules.
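The bit-budget argument in the first feature can be illustrated with a deliberately simple 1-D analogy. This is not the EEV-0.4 network: the function names are hypothetical, and the linear extrapolation used here for the "intrinsic" part is an assumption standing in for the learned spatiotemporal context. The sketch only shows why motion that the decoder can derive from its own reference pictures costs no bits, while the remaining correction must be transmitted.

```python
# Toy 1-D analogy only; NOT the EEV-0.4 model. All names are hypothetical.

def intrinsic_motion(ref_prev2, ref_prev1):
    """Motion that encoder and decoder can both derive from decoded references.

    Assumption: simple linear extrapolation of the last observed displacement.
    """
    return [b - a for a, b in zip(ref_prev2, ref_prev1)]

def encode(true_motion, ref_prev2, ref_prev1):
    """Return only the compensatory part, i.e. what would go into the bitstream."""
    intrinsic = intrinsic_motion(ref_prev2, ref_prev1)
    return [t - i for t, i in zip(true_motion, intrinsic)]

def decode(compensatory, ref_prev2, ref_prev1):
    """Rebuild the full motion from the references plus the transmitted part."""
    intrinsic = intrinsic_motion(ref_prev2, ref_prev1)
    return [i + c for i, c in zip(intrinsic, compensatory)]

# Positions of three tracked points in two already-decoded reference frames.
ref_prev2 = [10, 20, 30]
ref_prev1 = [12, 23, 30]
# Actual displacement from ref_prev1 to the current frame.
true_motion = [2, 4, 0]

compensatory = encode(true_motion, ref_prev2, ref_prev1)
reconstructed = decode(compensatory, ref_prev2, ref_prev1)
```

In this toy case only the compensatory values would be sent, and they are mostly zero because the references already predict most of the motion.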

Extensive experimental results on diverse datasets (MPEG test and non-standard sequences) demonstrate that this model outperforms the VTM-15.2 Reference Model in terms of MS-SSIM.
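For readers unfamiliar with the metric, MS-SSIM (multi-scale structural similarity) is commonly defined as below. The number of scales $M$ and the exponents are parameters of the metric; the values used in the EEV-0.4 experiments are not stated here.

$$
\text{MS-SSIM}(x, y) = \left[l_M(x, y)\right]^{\alpha_M} \prod_{j=1}^{M} \left[c_j(x, y)\right]^{\beta_j} \left[s_j(x, y)\right]^{\gamma_j}
$$

Here $l_M$ is the luminance comparison at the coarsest scale $M$, and $c_j$ and $s_j$ are the contrast and structure comparisons at scale $j$, where scale 1 is the original resolution and each further scale is obtained by low-pass filtering and downsampling by 2. Higher values indicate that the decoded picture $y$ is structurally closer to the original $x$.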

You are invited to attend the MPAI EEV-0.4 presentation.