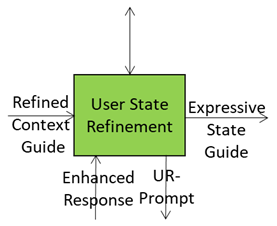

User State Refinement starts from a “blurry photo” of the User in context (the initial User State), which includes location, activity, initial intent, and perhaps a few emotional hints. It then adds to that photo all the information about the User that the workflow has been able to collect.

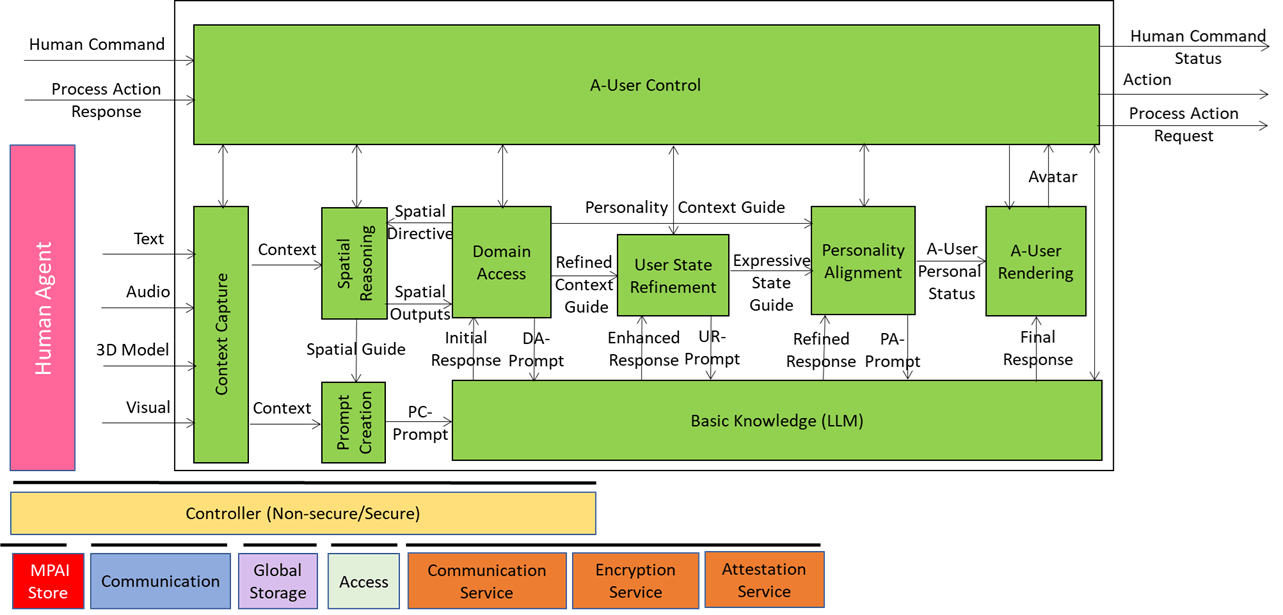

We have already presented the system diagram of the Autonomous User (A-User), an autonomous agent able to move and interact (walk, converse, do things, etc.) with another User in a metaverse. The other User may itself be an A-User, or it may be under the direct control of a human, in which case it is called a Human-User (H-User). The A-User acts as a “conversation partner in a metaverse interaction” with the User.

This is the eighth in a sequence of posts that illustrate the architecture of an A-User in more depth and provide an easy entry point for those who wish to respond to the MPAI Call for Technology on Autonomous User Architecture. The first seven dealt with 1) the control exercised by the A-User Control AI Module over the other components of the A-User; 2) how the A-User captures the external metaverse environment using the Context Capture AI Module; 3) how it listens to, localises, and interprets sound not just as data, but as data having a spatially anchored meaning; 4) how it makes sense of what it sees by understanding objects’ geometry, relationships, and salience; 5) how it takes raw sensory input and the User State and turns them into a well‑formed prompt that Basic Knowledge can actually understand and respond to; 6) how it taps into domain-specific intelligence for a deeper understanding of user utterances and operational context; and 7) the core language model of the Autonomous User – the “knows-a-bit-of-everything” brain, the first responder to a prompt in a sequence of four.

When the A-User begins interacting, it starts with a basic User State captured by Context Capture – location, activity, initial intent, and perhaps a few emotional hints. This initial state is useful, but it’s like a blurry photo: the A-User knows who’s there, but not the details that matter for nuanced interaction.

As the session unfolds, the A-User learns much more thanks to Prompt Creation, Spatial Reasoning, and Domain Access. Suddenly, the A-User understands not just what the User said, but what it meant, the context it operates in, and the reasoning patterns relevant to the domain. This new knowledge is integrated with the initial state so that subsequent steps – especially Personality Alignment and Basic Knowledge – are based on an appropriate understanding of the User State.

Why Update the User State?

Personality Alignment is where the A-User adapts tone, style, and interaction strategy. If it relies only on the first guess of the User State, it risks adopting an incongruent attitude – formal when casual is needed, directive when supportive is expected. Updating the User State ensures:

- Domain-aware precision: incorporating jargon, compliance rules, and reasoning patterns.

- Emotional calibration: adjusting responses to confusion, urgency, or confidence.
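
To make the risk concrete, here is a minimal sketch of how a stance might be chosen from the User State. It is an illustration under stated assumptions, not the MPAI specification: the function `choose_tone`, the emotion keys `confusion` and `urgency`, the 0-to-1 score range, and the 0.6 thresholds are all hypothetical.

```python
def choose_tone(emotions: dict, domain_terms: set) -> dict:
    """Pick an interaction stance from a (hypothetical) User State.

    `emotions` maps an emotion name to a score in [0, 1];
    `domain_terms` is the vocabulary obtained from Domain Access.
    """
    confusion = emotions.get("confusion", 0.0)
    urgency = emotions.get("urgency", 0.0)

    # Illustrative thresholds: a confused user gets support first,
    # an urgent user gets direct guidance, anyone else a neutral stance.
    if confusion >= 0.6:
        stance = "supportive"
    elif urgency >= 0.6:
        stance = "directive"
    else:
        stance = "neutral"

    # Use domain jargon only when domain vocabulary is actually known.
    vocabulary = "domain" if domain_terms else "plain"
    return {"stance": stance, "vocabulary": vocabulary}


# First guess: no emotional hints and no domain knowledge yet.
first_guess = choose_tone({}, set())
# Refined state: confusion detected, domain vocabulary available.
refined = choose_tone({"confusion": 0.7}, {"firmware"})
print(first_guess, refined)
```

With only the first guess, the sketch falls back to a neutral stance and plain vocabulary; with the refined state it switches to a supportive stance and domain vocabulary, which is the kind of adjustment the two bullets above describe.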

The Refinement Process

- Start with Context Snapshot: capture environment, speech, gestures, and basic emotional cues.

- Inject Domain Intelligence: add technical vocabulary, rules, and structured reasoning from Domain Access.

- Merge New Observations: emotional shifts, spatial changes, updated intent.

- Validate Consistency: check that the contributions of the different modules are mutually coherent, so the state is reliable for downstream use.

- Feed Forward: pass the refined state to Personality Alignment and sharper prompts to Basic Knowledge.
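
The five steps above can be sketched as a small pipeline. This is only an illustration under assumptions: the `UserState` fields, the function names, and the validation rules are hypothetical and do not reproduce the data formats of the MPAI specification.

```python
from dataclasses import dataclass, field, replace


@dataclass(frozen=True)
class UserState:
    """Hypothetical User State; field names are illustrative only."""
    location: str
    activity: str
    intent: str
    emotions: dict = field(default_factory=dict)  # emotion name -> score in [0, 1]
    domain_terms: frozenset = frozenset()
    domain_rules: tuple = ()


def inject_domain(state, terms, rules):
    """Step 2: add vocabulary and rules obtained from Domain Access."""
    new_rules = tuple(r for r in rules if r not in state.domain_rules)
    return replace(
        state,
        domain_terms=state.domain_terms | frozenset(terms),
        domain_rules=state.domain_rules + new_rules,
    )


def merge_observations(state, *, location=None, intent=None, emotions=None):
    """Step 3: newer observations override older values; the rest is kept."""
    return replace(
        state,
        location=location or state.location,
        intent=intent or state.intent,
        emotions={**state.emotions, **(emotions or {})},
    )


def validate(state):
    """Step 4: return a list of problems (empty when the state is coherent)."""
    problems = [
        f"emotion {name!r} out of range: {score}"
        for name, score in state.emotions.items()
        if not 0.0 <= score <= 1.0
    ]
    if not state.intent:
        problems.append("intent is empty")
    return problems


def refine(initial, terms, rules, **observations):
    """Steps 1-5: start from the snapshot, enrich, validate, feed forward."""
    state = inject_domain(initial, terms, rules)
    state = merge_observations(state, **observations)
    problems = validate(state)
    if problems:
        raise ValueError("; ".join(problems))
    return state  # handed on to Personality Alignment in this sketch


# Step 1: the "blurry photo" captured by Context Capture.
initial = UserState("lobby", "walking", "ask for help", {"confusion": 0.2})
refined = refine(
    initial,
    terms=["firmware"],
    rules=["never share credentials"],
    intent="reset the device",
    emotions={"confusion": 0.7, "urgency": 0.5},
)
print(refined)
```

The state is immutable in this sketch, so each step returns a new `UserState` and the initial snapshot stays available for comparison. That is a design choice of the example, not a requirement stated in the post.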

Key points to take away about User State Refinement

- Capture initial User State from Context: location, activity, intent, basic emotions.

- Initial state = blurry photo: useful but lacks detail.

- The A-User learns what was said, what was meant, and the reasoning patterns of the domain.

- Merge new insights with the original state for accuracy by injecting domain intelligence and merging emotional/spatial updates.

- Outputs: better prompt for Basic Knowledge and more complete User State.

- Goal: dynamic, nuanced understanding powering adaptive interaction.