One year ago today, MPAI could take stock of 3 months of work: an established organisation with the mission of developing Artificial Intelligence (AI)-based data coding standards, an initial programme of work, progress on several work items and a published Call for Technologies for one of them.

What can MPAI say today? That it has lived through a very intense year and can declare itself satisfied with what it has achieved.

The first achievement is that it has refined its method of work to make it solid, but also capable of overcoming the problems that plague other Standards Developing Organisations (SDO). A standard project goes through 8 stages, and progression to each new stage requires approval by the MPAI General Assembly. Calls for Technologies are issued together with Functional and Commercial Requirements.

The second is that it has developed 3 Technical Specifications (TS) that use AI to help industry accelerate the deployment of AI-based applications. A few words on each of them:

Context-based Audio Enhancement (MPAI-CAE) – supports 4 use cases:

- Emotion-Enhanced Speech (EES) allows a user to give a machine a sentence uttered without emotion and obtain one that is uttered with a given emotion, e.g., happy, sad or cheerful, or with the colour of a specific model utterance.

- Audio Recording Preservation (ARP) allows a user to preserve old audio tapes by providing a high-quality digital version of the tape and a digital version restored using AI, together with a documented set of the irregularities found in the tape.

- Speech Restoration System (SRS) allows a user to automatically recover damaged speech segments using a speech model obtained from the undamaged part of the speech.

- Enhanced Audioconference Experience (EAE) improves a participant’s audioconference experience by capturing speech with a microphone array and extracting the spatial attributes of the speakers relative to the position of the array, so that the speech signals can be represented spatially at the receiver.

Compression and Understanding of Industrial Data (MPAI-CUI) supports one use case: Company Performance Prediction. This gives the financial risk assessment industry new, powerful and extensible means to predict the performance of a company several years into the future in terms of company default probability, business discontinuity probability and the adequacy index of the company’s organisational model.

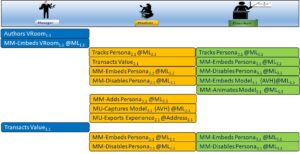

Multimodal Conversation (MPAI-MMC) – supports 5 use cases:

- Conversation with Emotion (CWE) allows a user to have a full conversation with a machine represented by a synthetic voice and an animated face. The machine understands the user’s emotional state, and its speech and face are congruent with that emotional state.

- Multimodal Question Answering (MQA) allows a user to ask a machine, by speech, for information about an object held in their hand and obtain a spoken response from the machine.

- Unidirectional Speech Translation (UST) allows a user to utter a sentence in one language and obtain a spoken translation in another language that preserves the user’s vocal features.

- Bidirectional Speech Translation (BST) allows two users to hold a dialogue, each speaking their own language and hearing the other user’s speech translated and rendered with that user’s native speech features.

- One-to-Many Speech Translation (MST) allows a user to select a set of languages and have their speech translated into the selected languages, with the option of deciding whether or not to preserve their speech features in the translations.

The first MPAI Call for Technologies was issued on 16 December 2020 and concerned the AI Framework (MPAI-AIF) for the creation and execution of AI Workflows (AIW) composed of AI Modules (AIM). AIMs may have been developed in any environment, using any proprietary framework, for any operating system; they may be AI-based or not, and implemented in hardware, in software or in a hybrid hardware and software combination. They can execute on anything from MCUs to HPC systems, in local and distributed environments, and in proximity to other AIFs, irrespective of the AIM provider. The three TSs mentioned above rely on the MPAI-AIF TS for their implementations.

Finally, in 2021 MPAI developed the Governance of the MPAI Ecosystem (MPAI-GME) TS. This lays down the rules governing the MPAI Ecosystem, which is composed of:

- MPAI, which develops standards.

- Implementers, who develop implementations.

- The not-for-profit MPAI Store, established and controlled by MPAI, where implementations are uploaded, checked for security and tested for conformance.

- MPAI-appointed performance assessors, who assess whether implementations are reliable and trustworthy.

- Users, who can access secure implementations of MPAI standards guaranteed for Conformance and Performance.

In 2021, MPAI also developed all four MPAI components (Technical Specification, Reference Software, Conformance Testing and Performance Assessment) for Compression and Understanding of Industrial Data (MPAI-CUI). These, together with the other TSs, are published on the MPAI web site.

These are firm results in the form of standards that industry can take up, but MPAI has also carried out substantial work to prepare for the future:

- MPAI-CAV: Connected Autonomous Vehicles

- MPAI-EEV: AI-based End-to-End Video Coding

- MPAI-EVC: AI-Enhanced Video Coding

- MPAI-MCS: Mixed-reality Collaborative Spaces

- MPAI-SPG: Server-based Predictive Multiplayer Gaming

This considerable body of work has been carried out by a network of technical groups, which MPAI thanks for their efforts and results.

Want to know more? Read “Towards Pervasive and Trustworthy Artificial Intelligence”, the book that illustrates the results achieved by MPAI in its first 15 months of operation and its plans for the next 12 months.

The work has just begun. It is time to become an MPAI member. Join the fun – build the future!