The ridge between two ages

For 30 years, smart use of data processing has reduced the enormous amount of data generated by audio and video to manageable levels. Thirty years on, however, the spirit that produced such uniquely innovative and successful standards as MP3, digital television, audio and video on the internet, DASH and much more has waned.

MPAI (Moving Picture, Audio and Data Coding by Artificial Intelligence) is the organisation that has that spirit and thrust. MPAI uses AI as its enabling technology because coding is not just compression but also understanding, and because coding benefits not only video and audio but many other data types.

Data processing remains a valid alternative to AI in many domains, so data processing is still part of the family…

MPAI is not just like any other organisation…

MPAI wants to be a new and different actor in the world of standards basing its activity on 5 pillars.

Pillar #1: New process improving old shortcomings

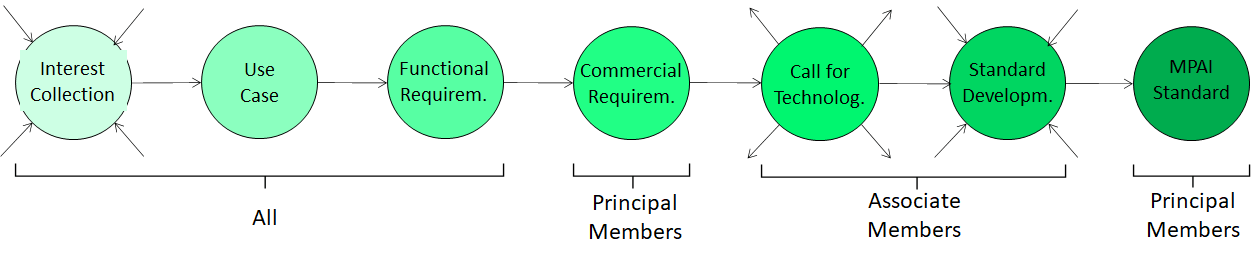

The first pillar is a new standards development process that strives to improve old shortcomings.

- A standard is designed to satisfy needs, whether old needs to be better served or new ones. MPAI collects the interests of those who have serious needs to satisfy by opening its online meetings to them.

- When ideas are mature, a use case is approved.

- Open meetings are then convened to develop the functional requirements that the future standard shall satisfy.

- When the functional requirements are approved, the MPAI Principal Members who intend to contribute technology to the standard develop commercial requirements and approve a document called “Framework Licence”. This is the business model – without values – that will be adopted by patent holders to monetise their Intellectual Property.

- When the Framework Licence is approved, a Call for Technologies is developed and widely published.

- By the deadline set in the Call, MPAI receives responses and starts the standard development. MPAI Associate Members can participate in the development of the standard on par with Principal Members.

- Eventually the standard is approved.

Please note that the General Assembly, MPAI’s supreme body, approves the progression of a standard through each of its seven stages.
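The process above can be read as a simple ordered state machine. The following is a minimal sketch under that reading, not an MPAI artefact: the stage labels, the `StandardProject` class and the `advance` method are hypothetical names chosen for illustration, derived from the seven steps listed above.

```python
from enum import IntEnum


class Stage(IntEnum):
    # Hypothetical labels for the seven steps listed above;
    # they are not official MPAI terminology.
    INTEREST_COLLECTION = 1
    USE_CASE = 2
    FUNCTIONAL_REQUIREMENTS = 3
    COMMERCIAL_REQUIREMENTS = 4  # the Framework Licence is approved here
    CALL_FOR_TECHNOLOGIES = 5
    STANDARD_DEVELOPMENT = 6
    APPROVED_STANDARD = 7


class StandardProject:
    """Tracks one standard as it moves through the stages in order."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.stage = Stage.INTEREST_COLLECTION

    def advance(self, approved_by_general_assembly: bool) -> Stage:
        """Move to the next stage only if the General Assembly approved it."""
        if self.stage is Stage.APPROVED_STANDARD:
            raise ValueError(f"{self.name} is already an approved standard")
        if approved_by_general_assembly:
            self.stage = Stage(self.stage + 1)
        return self.stage


project = StandardProject("Example standard")
project.advance(approved_by_general_assembly=False)  # stays where it is
project.advance(approved_by_general_assembly=True)   # moves to USE_CASE
```

The point of the sketch is only that no stage can be skipped and that each transition is gated by a General Assembly decision.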

Pillar #2: AI modules

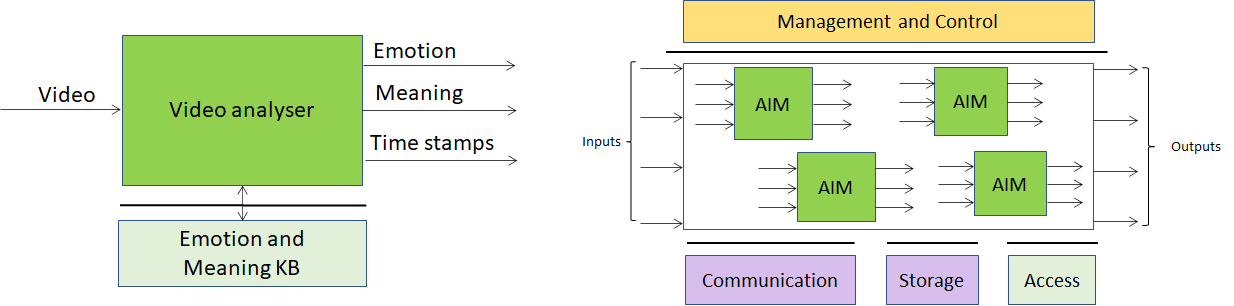

MPAI defines AI Modules (AIM) as the elementary target of standardisation. An AIM performs an identified function, and receives inputs and produces outputs that have standard syntax and semantics. It may use Artificial Intelligence, Machine Learning or data processing technologies, and it can be implemented in hardware, software or mixed technologies.

Pillar #3: AI Framework

Pillar #3 is a standard environment, called AI Framework (AIF), where AI Modules are executed. An MPAI-conforming implementation uses standard AIMs executed in a standard AIF. Importantly, AIMs can be implemented in hardware, software or mixed technologies, and the AIF supports all of these.
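To make the relationship between AIMs and the AIF concrete, here is a minimal sketch in Python. It is an illustration only and does not reflect the actual MPAI-AIF interfaces: `AIModule`, `AIFramework`, `register` and `execute` are hypothetical names, and the two example modules are invented for the demonstration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class AIModule:
    """Hypothetical AIM: a named function with declared input and output names."""
    name: str
    inputs: Tuple[str, ...]
    outputs: Tuple[str, ...]
    run: Callable[..., Dict[str, Any]]  # may wrap AI, ML or plain data processing


@dataclass
class AIFramework:
    """Hypothetical AIF: executes registered AIMs in order over shared data."""
    modules: List[AIModule] = field(default_factory=list)

    def register(self, module: AIModule) -> None:
        self.modules.append(module)

    def execute(self, data: Dict[str, Any]) -> Dict[str, Any]:
        data = dict(data)  # do not mutate the caller's dictionary
        for module in self.modules:
            missing = [n for n in module.inputs if n not in data]
            if missing:
                raise KeyError(f"{module.name} is missing inputs: {missing}")
            result = module.run(**{n: data[n] for n in module.inputs})
            if set(result) != set(module.outputs):
                raise ValueError(f"{module.name} outputs do not match its declaration")
            data.update(result)
        return data


# Two toy modules standing in for AIMs; any implementation with the
# same declared inputs and outputs could replace either of them.
normalise = AIModule(
    "normalise", ("samples",), ("normalised",),
    lambda samples: {"normalised": [s / max(samples) for s in samples]},
)
peak = AIModule(
    "peak", ("normalised",), ("peak_index",),
    lambda normalised: {"peak_index": normalised.index(max(normalised))},
)

aif = AIFramework()
aif.register(normalise)
aif.register(peak)
result = aif.execute({"samples": [1.0, 4.0, 2.0]})
```

Because the framework only relies on the declared inputs and outputs, a module can be swapped for another implementation without touching the rest of the chain, which is the property the two pillars aim for.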

Pillar #4: Framework Licences

Framework Licences are the patent holders’ business models. Patents are the engine of innovation, and indeed they were the driver of the MPEG standards’ success. But today the MPEG IPR machine has jammed. MPAI Framework Licences promise to accelerate the availability of licences after the standard is done.

Pillar #5: Ethical standards

MPAI is convinced of the huge impact AI will have on society and humans and that monolithic and opaque AI systems alienate users from AI.

The combination of MPAI’s Pillars #2 and #3 provides an initial response to the need for explainability, i.e. the need for humans to understand how an AI system has produced an output in response to an input. It is possible to trace how an MPAI-conforming AI system has produced an output from an input.
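One way to picture this kind of traceability is a chain of small modules whose intermediate inputs and outputs are recorded as they run. The sketch below assumes exactly that and nothing more: `run_with_trace` and the two toy modules are hypothetical and are not part of any MPAI specification.

```python
from typing import Any, Callable, Dict, List, Tuple

# A module here is just (name, function); both are hypothetical stand-ins for AIMs.
Module = Tuple[str, Callable[[Any], Any]]


def run_with_trace(modules: List[Module], value: Any) -> Tuple[Any, List[Dict[str, Any]]]:
    """Run modules in sequence, recording each module's input and output."""
    trace: List[Dict[str, Any]] = []
    for name, function in modules:
        output = function(value)
        trace.append({"module": name, "input": value, "output": output})
        value = output
    return value, trace


pipeline: List[Module] = [
    ("tokenise", lambda text: text.lower().split()),
    ("count_words", lambda words: len(words)),
]
result, trace = run_with_trace(pipeline, "Hello MPAI world")
for step in trace:
    print(step["module"], step["input"], "->", step["output"])
```

With a monolithic system only the first input and the last output are visible; with modules that have defined interfaces, every intermediate step can be inspected in this way.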

What standards is MPAI developing?

AI Framework (MPAI-AIF) is a standard environment to implement MPAI-conforming AI systems that supports Artificial Intelligence, Machine Learning and Data Processing life cycles of networked AI Modules (AIM). The standard is planned to be delivered in June 2021.

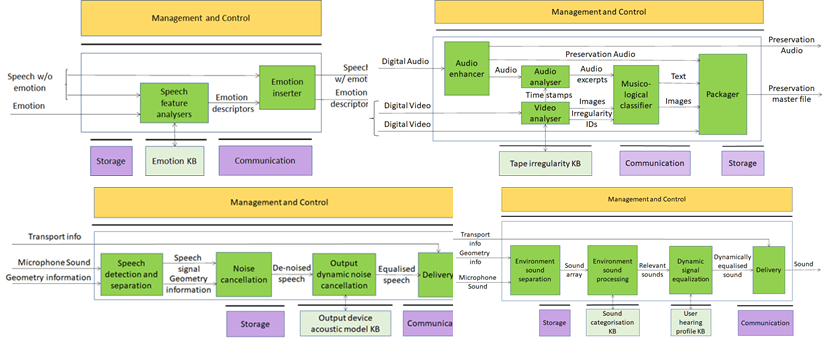

Context-based Audio Enhancement (MPAI-CAE) is an application standard for 4 initial use cases: adding a desired emotion to a speech without emotion, preserving old audio tapes, improving the audioconference experience and removing unwanted sounds while keeping the relevant ones to a user walking in the street.

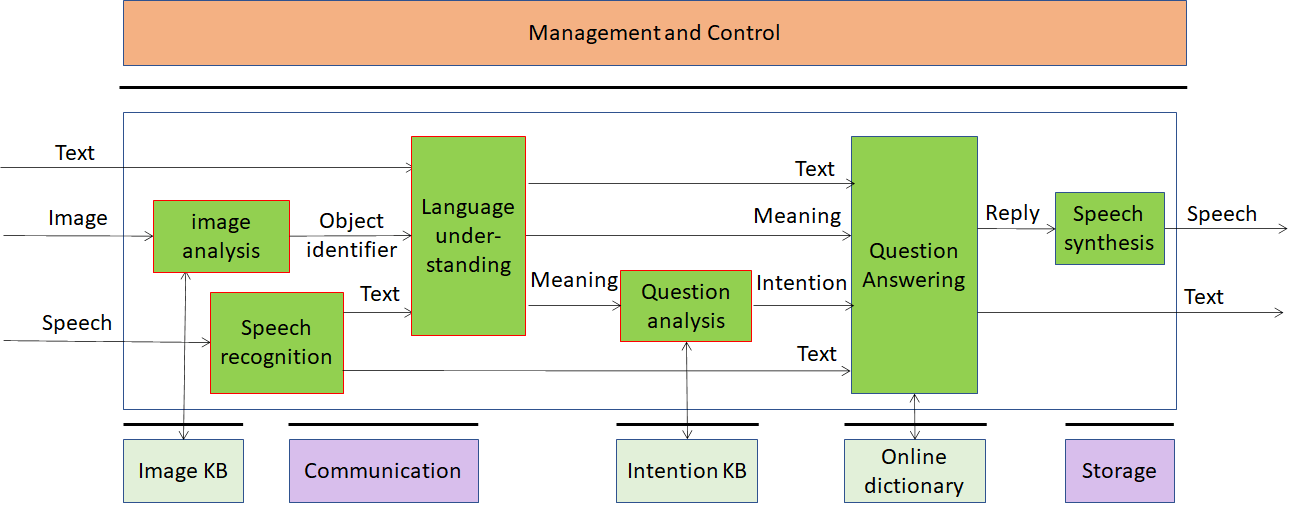

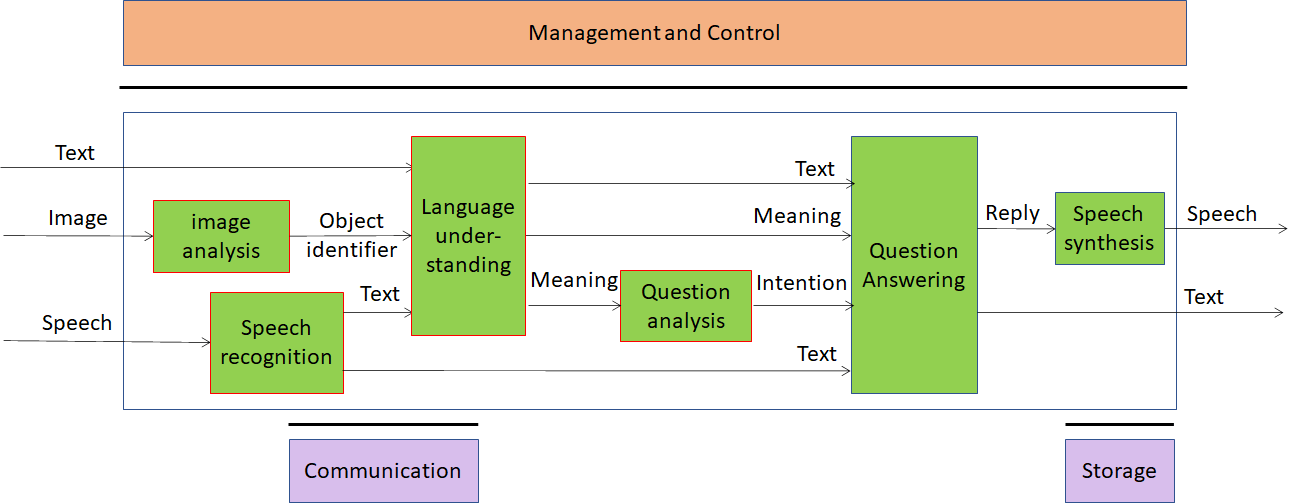

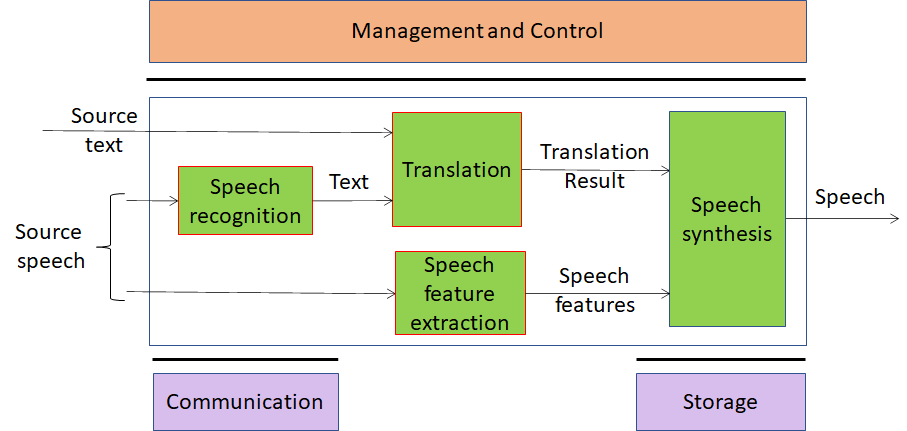

Multimodal Conversation (MPAI-MMC) is an application standard for 3 initial use cases: audio-visual conversation with a machine impersonated by a synthesised voice and an animated face, request for information about a displayed object, translation of a sentence using a synthetic voice that preserves the speech features of the human.

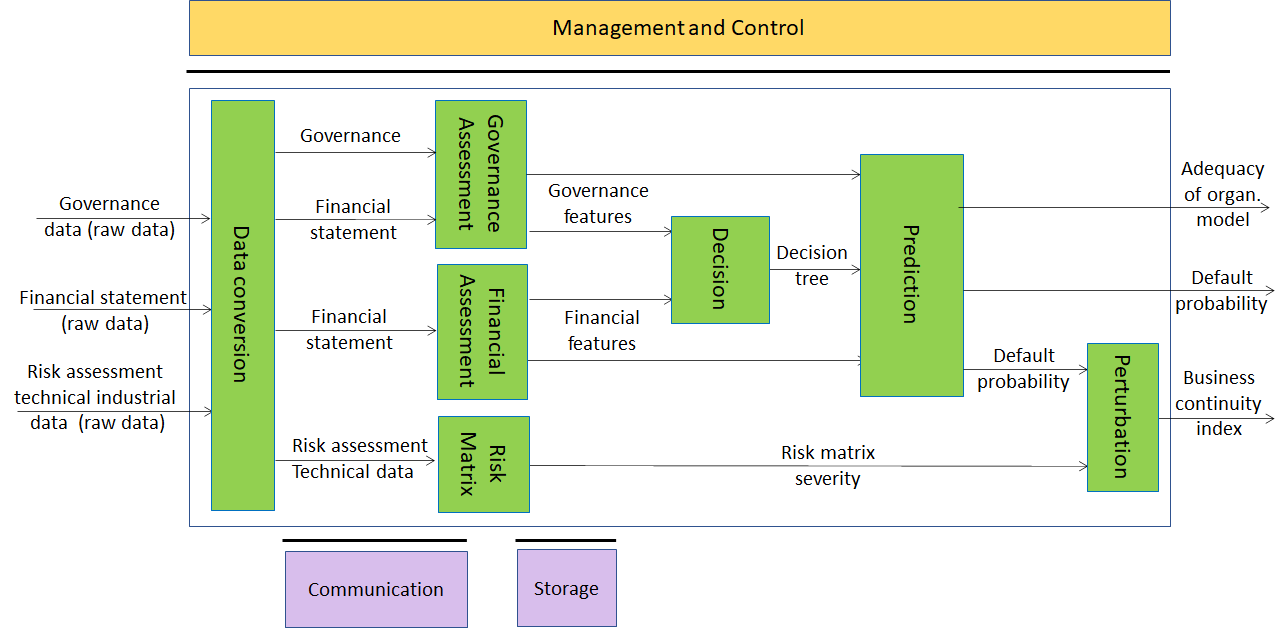

Compression and Understanding of Industrial Data (MPAI-CUI) is an application standard for one initial use case: prediction of performance by filtering and extraction of information from a company’s governance, financial and risk data. Performance is measured with 3 parameters: organisational adequacy, default probability and business continuity index.

Not the end of the story, but the beginning

MPAI is currently developing 4 standards, but more standards are in the pipeline:

- Server-based Predictive Multiplayer Gaming (MPAI-SPG) is an application standard to predict data from game clients that have not reached the server because of network latency and to identify game clients that send altered data (so-called Anti-cheating). It is expected that MPAI-SPG will be implemented as a plug-in to an online game server for training and inference.

- AI-Enhanced Video Coding (MPAI-EVC) is an application standard that improves the coding efficiency of the EVC standard by enhancing or replacing existing data processing tools with AI tools. The standard is developed by a global network of researchers who plug in their AI tools, developed in any framework.

- Integrative Genomic/Sensor Analysis (MPAI-GSA) is a collection of representative use cases to compress and understand the result of high-throughput experiments combining genomic/proteomic and other data, e.g., from video, motion, location, weather, medical sensors.

- Visual Object and Scene Description (MPAI-OSD) is a collection of use cases sharing the goal of describing visual objects and locating them in space. Scene description includes the usual description of objects and their attributes in a scene.