1 Introduction

Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) is an international, unaffiliated, not-for-profit organisation registered in Geneva. MPAI’s mission is to promote the efficient use of Data by:

- developing standards for coding any type of data, especially using new technologies to enable the use of Artificial Intelligence

- integrating data coding components in Information and Communication Technology systems

- bridging the gap between standards and their practical use by means of Framework Licences.

Artificial Intelligence (AI) technologies are used in more and more applications, making AI one of the fastest-growing markets in the data analysis and service sector. To exploit this historic opportunity fully, however, stakeholders must overcome two hurdles: the current framework-based development model, which makes application redeployment difficult, and monolithic, opaque AI applications that generate mistrust in users.

The MPAI Manifesto summarises the key points:

- MPAI considers the AI Module (AIM) and its interfaces to be the AI building block. The syntax and semantics of the interfaces determine what an AIM should perform, not how. AIMs can be implemented in hardware or software, with AI, Machine Learning or legacy Data Processing.

- MPAI’s AI Framework (MPAI-AIF) enables creation, execution, composition and update of AIM-based workflows. It is the cornerstone of MPAI standardisation because it enables building high-complexity AI solutions by interconnecting multi-vendor AIMs that are trained for specific tasks, operate in the standard AI Framework and exchange data in standard formats.

| Figure 1 – An example of AI module | Figure 2 – An example of AI Framework |

- MPAI relies on AI technologies for its standards. However, it is well aware that, in this transitional age, many products and services are still provided by technologies that AI promises to replace. MPAI’s AI Framework is designed to enable coexistence, inter-operation and mutual replacement of AI Modules of different nature: AI, Machine Learning (ML) and legacy Data Processing (DP). The following sections describe technology-neutral solutions.

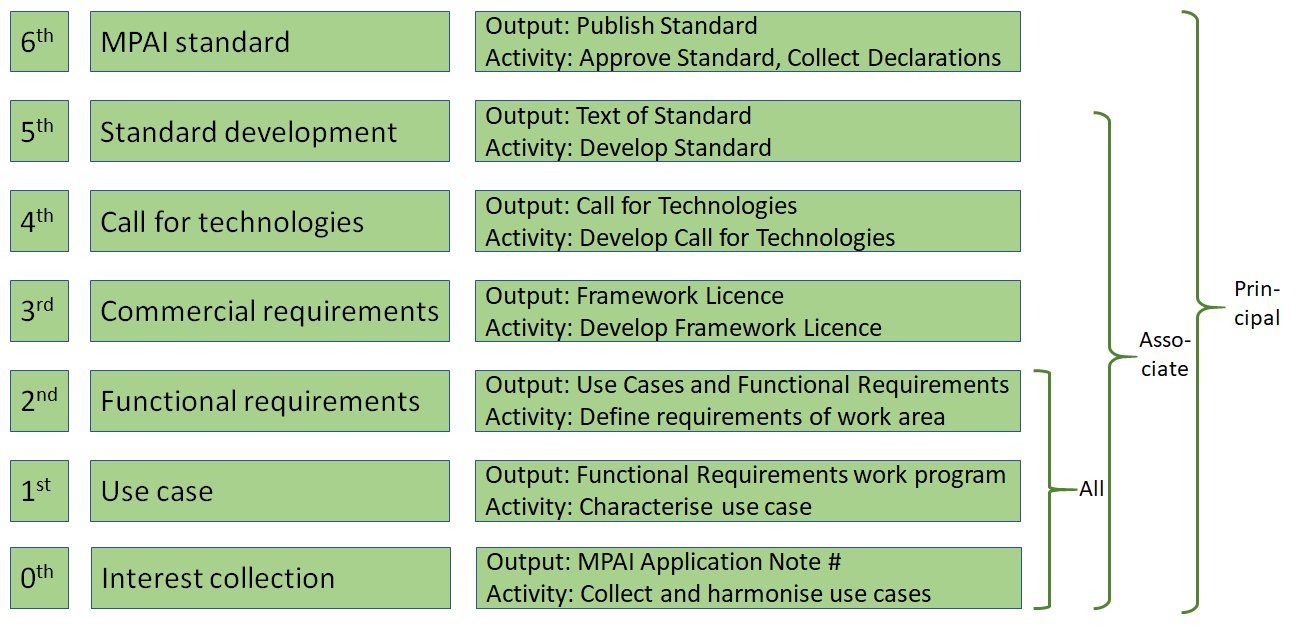

- The MPAI process.

Anybody can participate in

– 0. collection of interest

– 1. use case definition and

– 2. functional requirement identification.

All members participate in

– 3. definition of commercial requirements,

– 4. drafting of call for technologies, and

– 5. developing the standard.

Principal members

– 6. approve the standard.

2 AI Framework

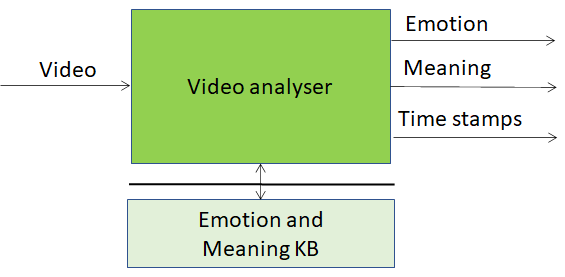

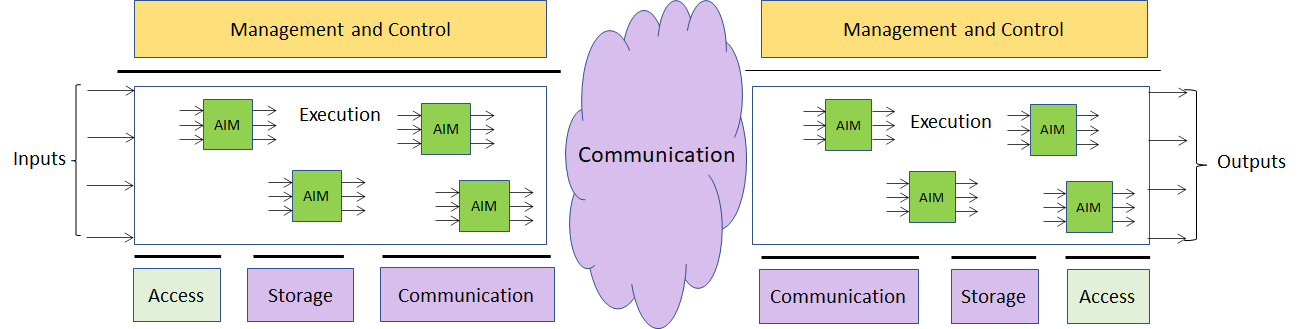

The AI Framework (MPAI-AIF) reference model, shown in Figure 3, extends the conceptual model of Figure 2. MPAI issued a Call for Technologies [2] for MPAI-AIF on 16 December 2020, and responses were received on 14 February 2021. MPAI is now reviewing the responses, which it will use to develop the standard, planned for July 2021.

Figure 3 – The MPAI-AIF Architecture

The scope of the MPAI-AIF standard is described by its requirements [1]:

- Support single AIM life cycle

  - initialise | instantiate-configure-remove | start-suspend-stop AIMs

  - dump/retrieve internal state | enforce resource limits of AIMs

- Support multiple AIMs life cycles

  - initialise | instantiate-remove-configure AIMs

  - manual-automatic-dynamic-adaptive interface configuration of AIMs

- Support AIM machine learning

  - train-retrain-update AIMs | configure/reconfigure ML computational models

  - dynamic update of ML model

  - supervised/unsupervised/reinforcement-based learning paradigms

- Workflows

  - hierarchical execution of workflows | computational graphs, Directed Acyclic Graphs (DAG) as a minimum

  - AIM topologies synchronised according to time base and full ML life cycles

  - 1- & 2-way signal initialisation & control, communication & security policies between AIMs
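The life-cycle and workflow requirements above can be illustrated with a minimal Python sketch. Every name in it (the `AIM` class, the `State` enum, the `TRANSITIONS` table and `run_workflow`) is hypothetical and is not taken from MPAI-AIF, which was still being developed when this was written. The sketch only assumes that an AIM exposes configure, start, suspend and stop operations, that its internal state can be dumped, and that a workflow is a DAG executed in topological order.

```python
from enum import Enum
from graphlib import TopologicalSorter  # standard library, Python 3.9+


class State(Enum):
    INSTANTIATED = "instantiated"
    CONFIGURED = "configured"
    RUNNING = "running"
    SUSPENDED = "suspended"
    STOPPED = "stopped"


# Allowed life-cycle transitions: action -> (states it may start from, resulting state).
# This table is an assumption made for the sketch, not part of any MPAI specification.
TRANSITIONS = {
    "configure": ({State.INSTANTIATED, State.CONFIGURED, State.STOPPED}, State.CONFIGURED),
    "start": ({State.CONFIGURED, State.SUSPENDED}, State.RUNNING),
    "suspend": ({State.RUNNING}, State.SUSPENDED),
    "stop": ({State.RUNNING, State.SUSPENDED}, State.STOPPED),
}


class AIM:
    """Hypothetical AI Module: callers rely on the interface, not on the internals."""

    def __init__(self, name, func):
        self.name = name
        self.func = func  # may wrap an AI, ML or legacy DP implementation
        self.state = State.INSTANTIATED
        self.config = {}

    def _move(self, action):
        allowed, target = TRANSITIONS[action]
        if self.state not in allowed:
            raise RuntimeError(f"{self.name}: cannot {action} from {self.state.value}")
        self.state = target

    def configure(self, **config):
        self._move("configure")
        self.config = dict(config)

    def start(self):
        self._move("start")

    def suspend(self):
        self._move("suspend")

    def stop(self):
        self._move("stop")

    def dump_state(self):
        return {"state": self.state.value, "config": dict(self.config)}

    def process(self, *inputs):
        if self.state is not State.RUNNING:
            raise RuntimeError(f"{self.name} is not running")
        return self.func(*inputs, **self.config)


def run_workflow(aims, edges, source_input):
    """Run AIMs in topological order; `edges` maps an AIM name to its upstream names."""
    results = {}
    for name in TopologicalSorter(edges).static_order():
        upstream = edges.get(name, ())
        # AIMs without upstream AIMs read the workflow input directly.
        inputs = [results[u] for u in upstream] or [source_input]
        results[name] = aims[name].process(*inputs)
    return results


# Usage: two independent AIMs feed a third one.
aims = {
    "scale": AIM("scale", lambda x, factor=1: x * factor),
    "offset": AIM("offset", lambda x, delta=0: x + delta),
    "sum": AIM("sum", lambda a, b: a + b),
}
aims["scale"].configure(factor=3)
aims["offset"].configure(delta=1)
aims["sum"].configure()
for aim in aims.values():
    aim.start()

edges = {"scale": (), "offset": (), "sum": ("scale", "offset")}
results = run_workflow(aims, edges, 2)
print(results["sum"])  # 9
```

Because the workflow only calls `process` on each module, any of the three lambdas could be swapped for a trained model or a legacy routine without touching `run_workflow`, which is the kind of mutual replacement the framework is meant to allow.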

3 Context-based Audio Enhancement

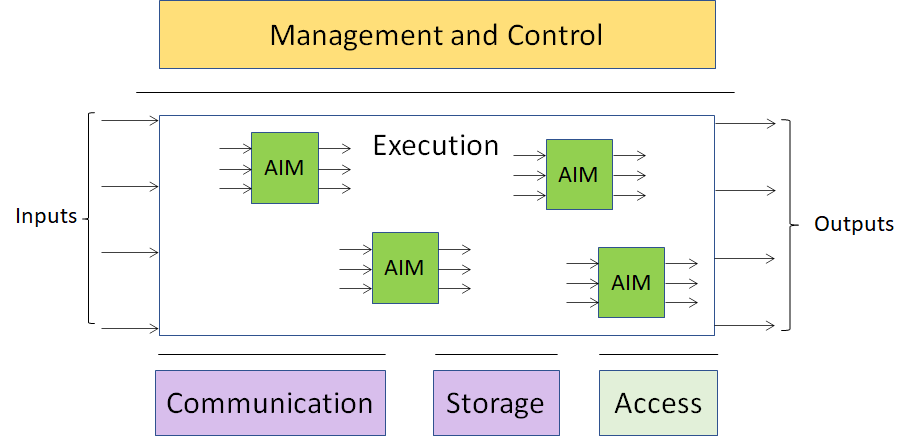

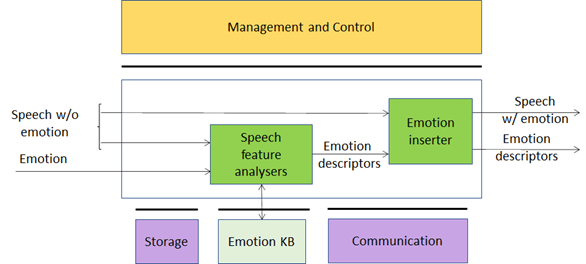

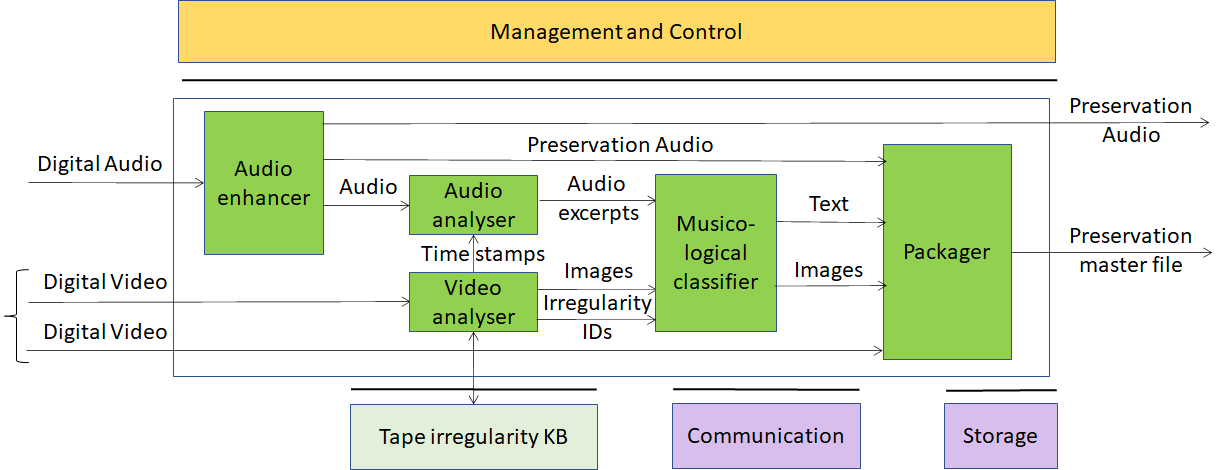

Context-based Audio Enhancement (MPAI-CAE) includes 4 use cases. In Emotion-enhanced speech (Figure 4), emotionless synthesised or natural speech is enhanced with a specified emotion of specified intensity. In Audio recording preservation (Figure 5), sound from an old audio tape is enhanced and a preservation master file is produced using a video camera pointed at the magnetic head.

| Figure 4 – Emotion-enhanced speech | Figure 5 – Audio Recording Preservation |

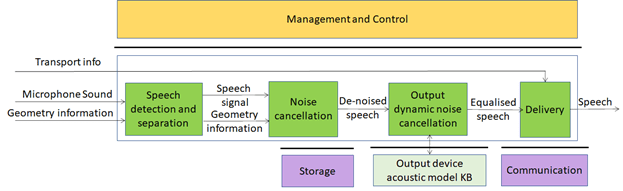

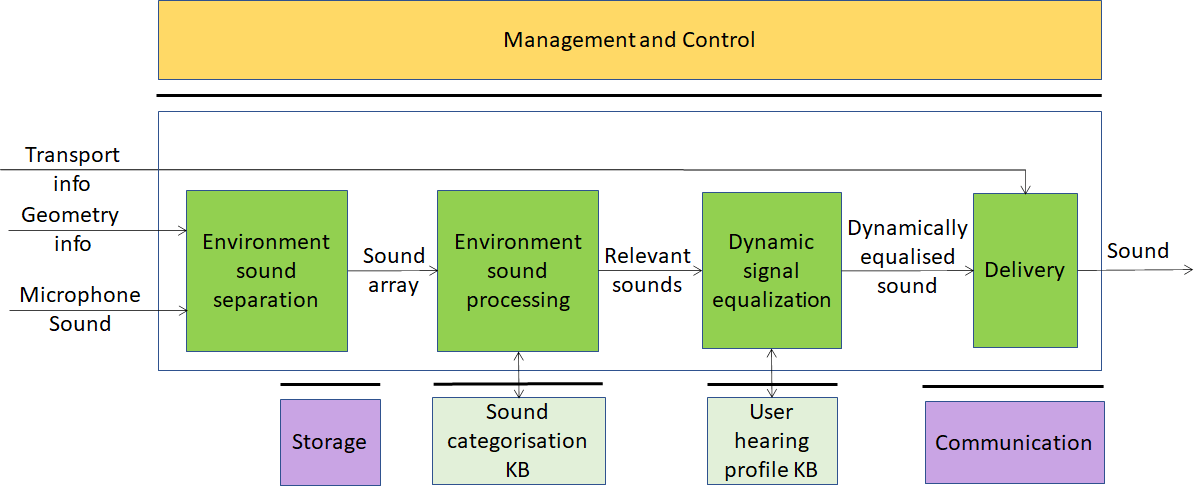

In Enhanced audioconference experience (Figure 6), speech captured in an unsuitable environment (e.g., at home) is cleaned of unwanted sounds. In Audio-on-the-go (Figure 7), the audio experienced by a user in an environment preserves the external sounds that are considered relevant.

| Figure 6 – Enhanced Audioconference Experience | Figure 7 – Audio-on-the-go |

MPAI issued a Call for Technologies for MPAI-CAE [4] on 20 January 2021. Responses are due on 12 April 2021. Some of the requested technologies, documented in [3], are:

- Emotion-enhanced speech use case

  - Emotion

  - Speech features

  - Emotion descriptors

- Audio Recording Preservation use case

  - Tape image features

- Enhanced audioconference experience use case

  - Microphone geometry information

  - Output device acoustic model KB query format

- Audio-on-the-go use case

  - Sound features

  - Sound Categorisation

  - User hearing profile KB query format

4 Multimodal conversation

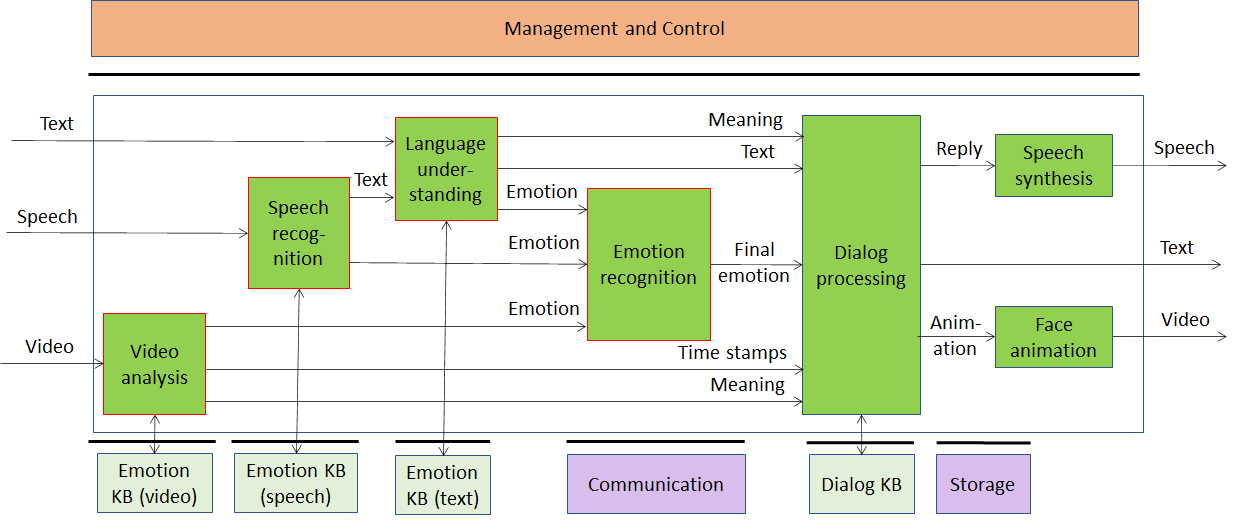

Currently, Multimodal conversation (MPAI-MMC) includes 3 use cases in which a human holds an audio-visual conversation with a machine that emulates human-to-human conversation in completeness and intensity. In Conversation with emotion (Figure 8), the human holds a dialogue using speech, video and possibly text. The machine responds with a synthesised voice and an animated face.

Figure 8 – Conversation with emotion

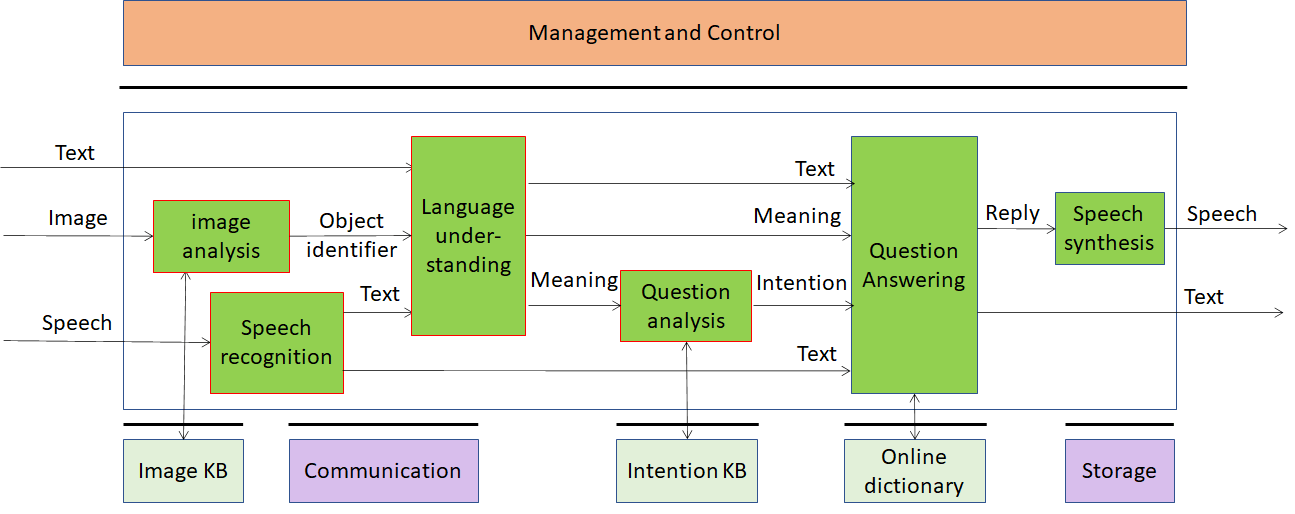

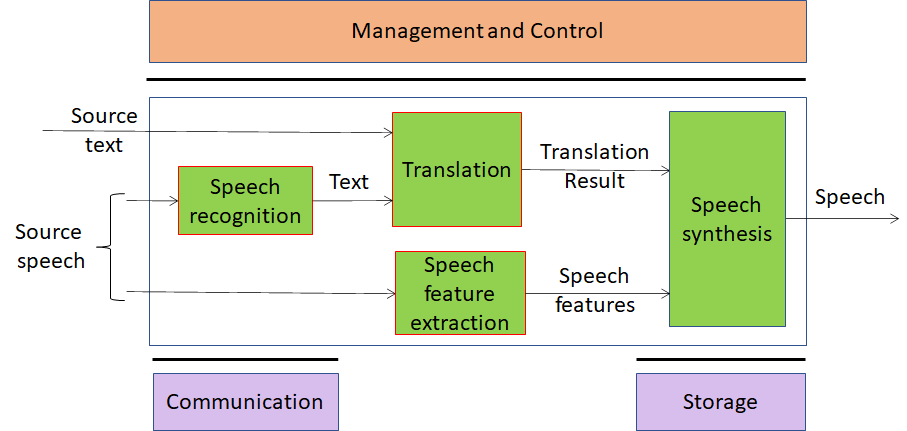

In Multimodal question answering (Figure 9), a human requests information about an object while displaying it. The machine responds with synthesised speech. In Personalised Automatic Speech Translation (Figure 10), a sentence uttered by a human is translated by a machine using a synthesised voice that preserves the speech features of the human.

| Figure 9 – Multimodal Question Answering | Figure 10 – Personalised Automatic Speech Translation |

MPAI issued a Call for Technologies for MPAI-MMC [6] on 20 January 2021. Responses are due on 12 April 2021.

Some of the technologies requested in [5] are:

- Conversation with emotion use case

  - Emotion

  - Text features

  - Speech features

  - Video features

  - Meaning

  - Sentence features

  - Text with emotion

  - Concept with emotion

- Multimodal question answering use case

  - Image features

  - Emotion

  - Intention KB query format

  - Online dictionary query format

- Personalised automatic speech translation use case

  - Speech features

5 Other standards in the pipeline

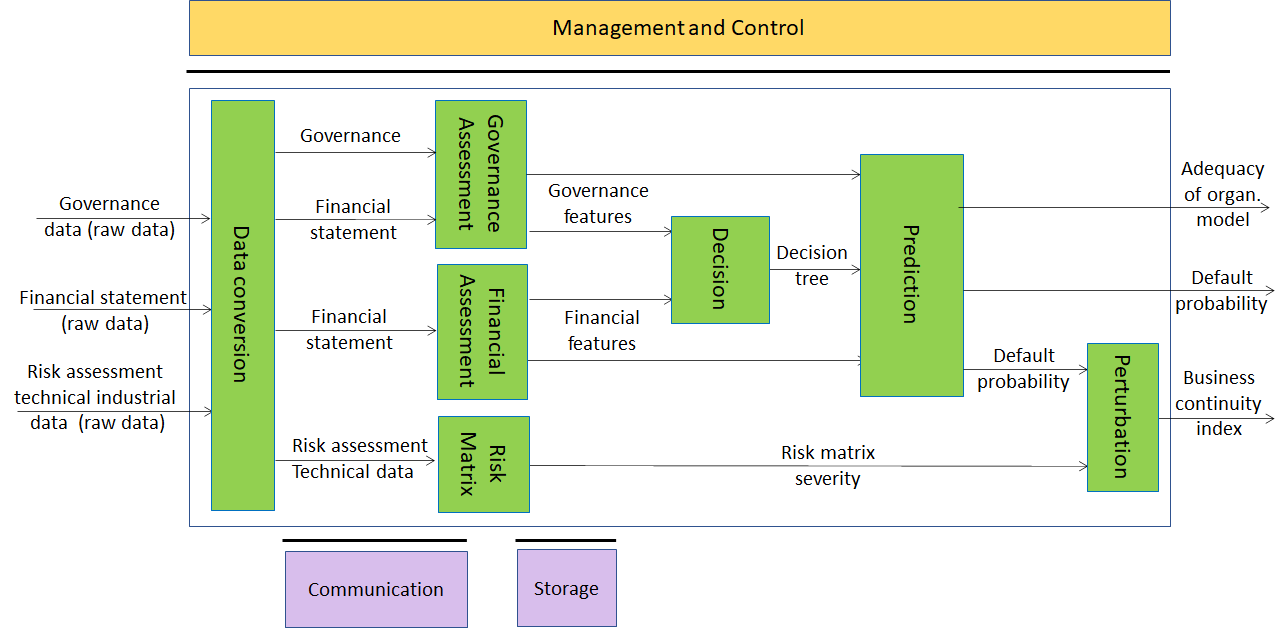

Compression and Understanding of Industrial Data (MPAI-CUI) is depicted in Figure 11. The standard enables AI-based filtering and extraction of key information from the flow of data produced by a company and outside it (e.g., data on vertical risks such as seismic and cyber risks) in order to assess the risks the company faces. MPAI has completed the MPAI-CUI Functional Requirements [7], and MPAI Active Members are now developing the Framework Licence that will facilitate the actual licences, which are to be developed outside of MPAI.

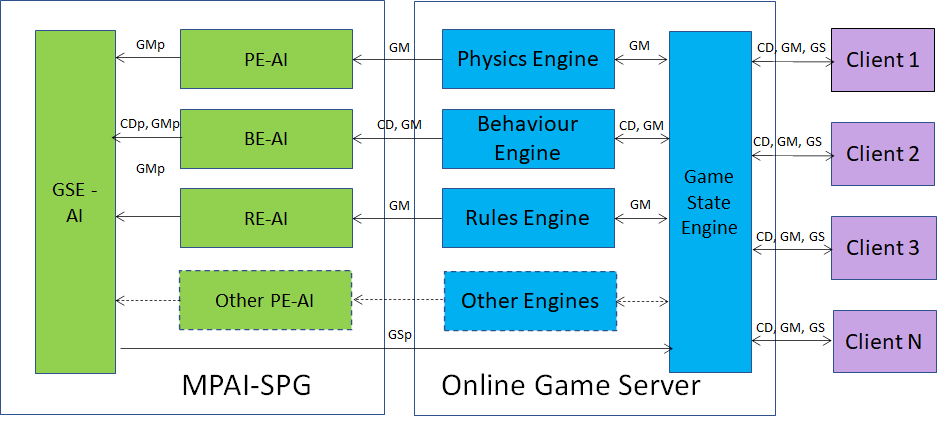

Server-based Predictive Multiplayer Gaming (MPAI-SPG) is depicted in Figure 12. The standard aims to minimise the audio-visual and gameplay discontinuities caused by high latency or packet losses during an online real-time game. In case information from a client is missing, the data collected from the clients involved in a particular game are fed to an AI-based system that predicts the moves of the client whose data are missing. MPAI is finalising the MPAI-SPG Functional Requirements [9].

| Figure 11 – AI-based Performance Prediction | Figure 12 – Server-based predictive Multiplayer Gaming |
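The MPAI-SPG idea of filling in a missing client update with a prediction can be sketched as follows. This is only an illustration under stated assumptions: `GameServer`, `tick` and `constant_velocity` are hypothetical names, positions are simple 2D tuples, and the constant-velocity extrapolation is a plain stand-in for the AI-based predictor, which the requirements do not define here. The predictor receives the history of all clients, as the description suggests, even though this placeholder looks only at the missing client's own track.

```python
from collections import defaultdict, deque


class GameServer:
    """Hypothetical server that replaces missing client updates with predictions."""

    def __init__(self, predictor, history_len=8):
        self.predictor = predictor
        # Recent positions per client, oldest first.
        self.history = defaultdict(lambda: deque(maxlen=history_len))

    def tick(self, updates, clients):
        """updates: {client_id: (x, y)} received this tick; clients: all expected ids."""
        state = {}
        for client_id in clients:
            if client_id in updates:
                position = updates[client_id]
            else:
                # The update was lost or late: ask the predictor instead.
                position = self.predictor(client_id, self.history)
            self.history[client_id].append(position)
            state[client_id] = position
        return state


def constant_velocity(client_id, history):
    """Placeholder for the AI-based predictor: repeat the client's last step."""
    track = history[client_id]
    if not track:
        return (0.0, 0.0)
    if len(track) == 1:
        return track[-1]
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


server = GameServer(constant_velocity)
server.tick({"a": (0.0, 0.0), "b": (5.0, 5.0)}, ["a", "b"])
server.tick({"a": (1.0, 0.5), "b": (5.0, 4.0)}, ["a", "b"])
state = server.tick({"b": (5.0, 3.0)}, ["a", "b"])  # the update from "a" is missing
print(state["a"])  # (2.0, 1.0)
```

Keeping the predictor as a function passed to the server means a trained model could replace the placeholder without changing the game loop.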

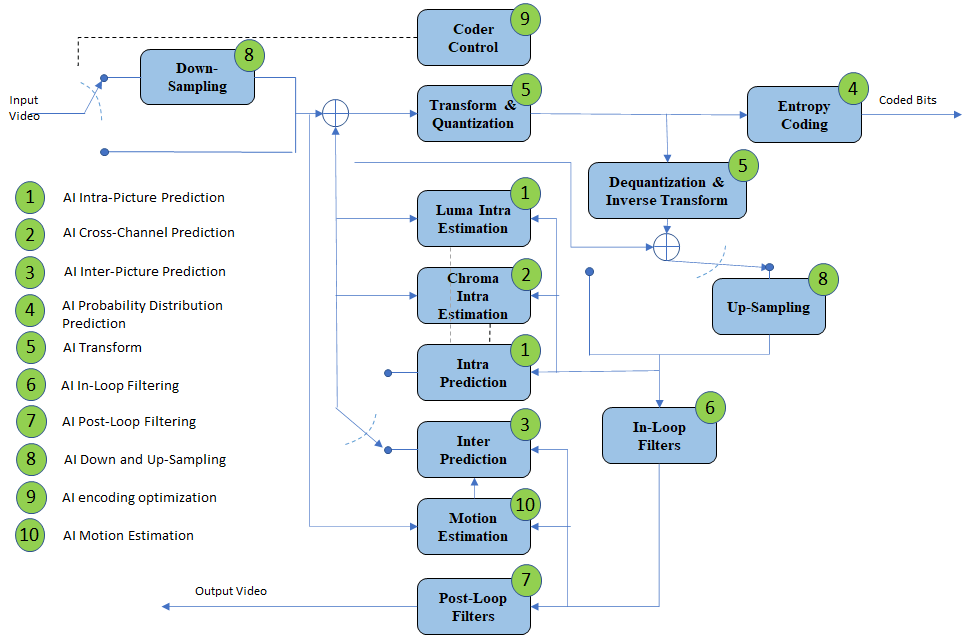

AI tools promise to reduce the bits/pixel further than has been achieved so far. AI-Enhanced Video Coding (MPAI-EVC) builds on the Essential Video Coding (EVC) standard. The EVC Baseline Profile, made of technologies more than 20 years old, has a performance comparable to High Efficiency Video Coding (HEVC). The EVC Main Profile typically reduces the bitrate by approximately 36% compared with HEVC for 4k-resolution High Dynamic Range (HDR) sequences. MPAI uses EVC as the starting point and is replacing the blue blocks in Figure 13, which represent 10 existing EVC tools, with AI-based tools.

Figure 13 – MPAI-EVC Evidence Project

MPAI intends to obtain confirmation of the performance of AI tools used to replace DP-tools and will then issue a Call for Technologies. MPAI has developed stable MPAI-EVC Functional Requirements [10].
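As a rough reading of the bitrate figure quoted above for the EVC Main Profile, a reduction of about 36% means that, at comparable quality, the required bitrate is about 64% of the HEVC bitrate. The symbols below are introduced here for illustration only, and the 20 Mbit/s value is an assumed example, not a measurement from the source.

$$
R_{\text{EVC Main}} \approx (1 - 0.36)\, R_{\text{HEVC}} = 0.64\, R_{\text{HEVC}}
$$

For instance, a sequence that needs an assumed 20 Mbit/s with HEVC would need roughly 12.8 Mbit/s with the EVC Main Profile.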

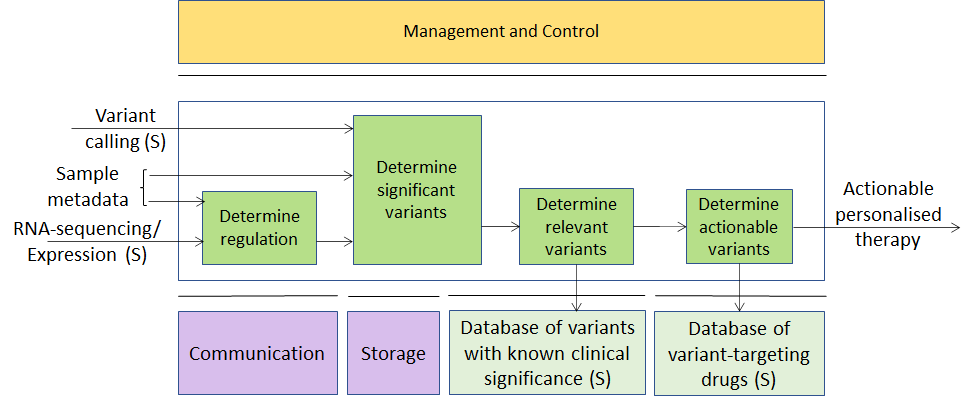

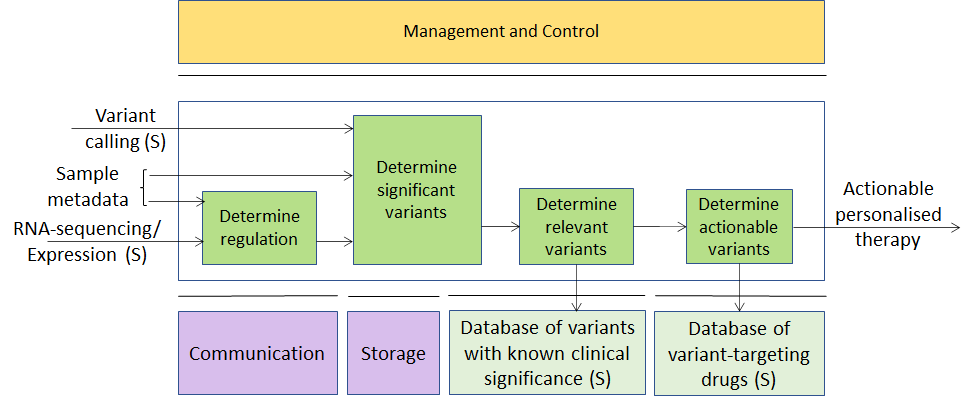

Integrative Genomic/Sensor Analysis (MPAI-GSA) is depicted in Figure 14. The standard uses AI to understand and compress the result of high-throughput experiments combining genomic/proteomic and other data, e.g., from video, motion, location, weather, medical sensors. So far, MPAI-GSA has been found applicable to 4 Use Areas, i.e., collections of homogeneous Use Cases.

In Integrative analysis of ‘omics datasets (Figure 14), molecular techniques (e.g., RNA-sequencing, ChIP-sequencing, HiC) and metadata are correlated and/or combined. An example is correlating gene regulation with an individual’s variants to determine actionable variants and a personalised therapy.

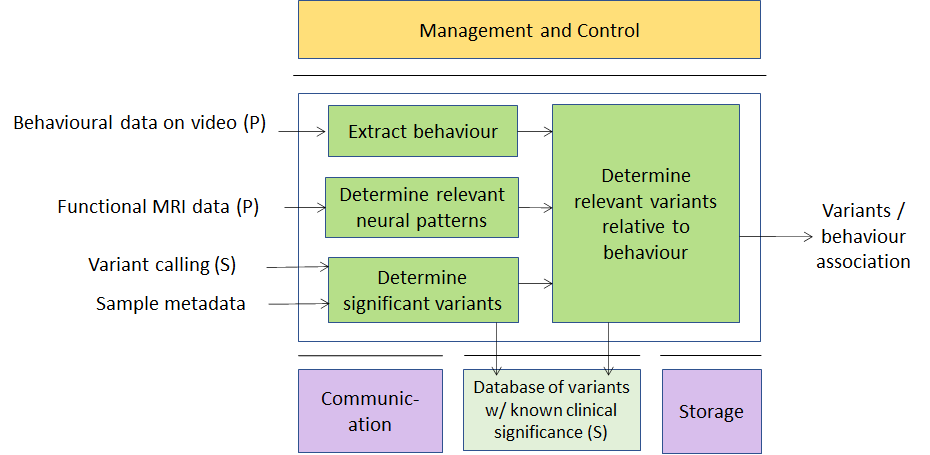

In Genomics and phenotypic/spatial data (Figure 15), molecular techniques (e.g., barcoded RNA-sequencing, metabolomics) and phenotype as determined by 2D/3D images or metadata (e.g., tissue composition, cell lineages) are correlated and/or combined.

| Figure 14 – Integrative analysis of ‘omics datasets | Figure 15 – Genomics and Phenotypic/spatial data |

In Genomics and behaviour (Figure 16), animal behaviour (e.g., lab mice), neural patterns (e.g., from functional MRI-based experiments), genetic profile and reaction to drug administration are correlated and/or combined.

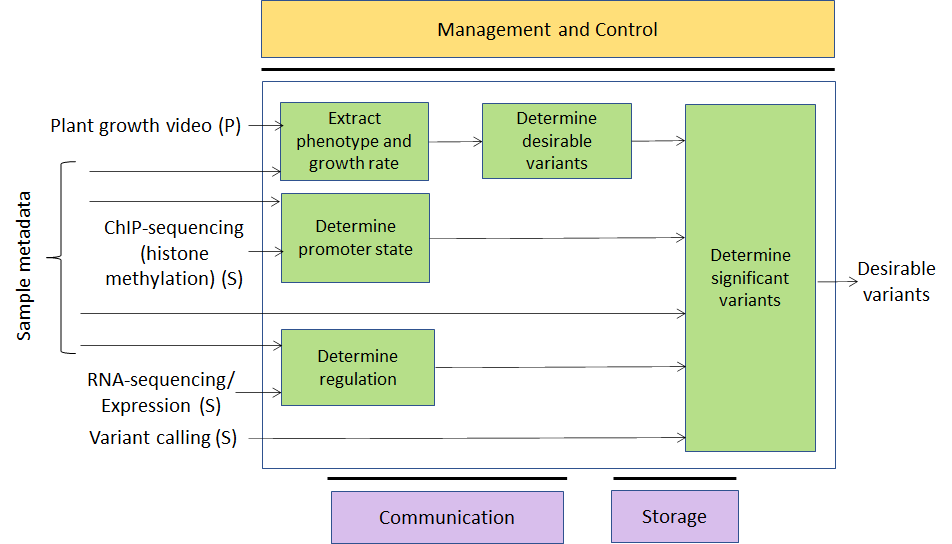

In Smart Farming (Figure 17), molecular techniques (e.g., RNA-sequencing, variants), monitoring images (e.g., growth rate, satellite imagery) and position tracking (e.g., GPS) are correlated and/or combined.

| Figure 16 – Genomics and Behaviour | Figure 17 – Smart Farming |

MPAI is finalising the MPAI-GSA Functional Requirements [8].

6 Conclusions

- MPAI standards address the problems identified in the introduction, bringing benefits to various actors:

  - Technology providers will be able to offer their conforming AIMs to an open market

  - Application developers will find on the open market the AIMs their applications need

  - Consumers will be offered a wider choice of better AI applications by a competitive market

  - Innovation will be fuelled by the demand for novel and better-performing AIMs

  - Society will be able to lift the veil of opacity from large, monolithic AI-based applications.

- AI-based data coding is future-proof as it allows MPAI to take advantage of emerging and future research in representation learning, transfer learning, edge AI, and reproducibility of performance.

- MPAI Framework Licences, where Intellectual Property Right (IPR) holders set out in advance IPR guidelines, alleviate the IPR-related problems which have accompanied high-tech standardisation based on vague and contention-prone Fair, Reasonable and Non-Discriminatory (FRAND) declarations.

- MPAI is aware of AI’s revolutionary impact on the future of human society and pledges to address ethical questions potentially raised by its technical work. The first significant step offered by AIMs and the AIF is to make the inner workings of complex AI systems understandable.

7 References

[1] MPAI-AIF Use Cases and Functional Requirements, N74; https://mpai.community/standards/mpai-aif/#Requirements

[2] MPAI-AIF Call for Technologies, N100; https://mpai.community/standards/mpai-aif/#Technologies

[3] MPAI-CAE Use Cases and Functional Requirements, N151; https://mpai.community/standards/mpai-cae/#UCFR

[4] MPAI-CAE Call for Technologies, N152; https://mpai.community/standards/mpai-cae/#Technologies

[5] MPAI-MMC Use Cases and Functional Requirements, N153; https://mpai.community/standards/mpai-mmc/#Requirements

[6] MPAI-MMC Call for Technologies, N154; https://mpai.community/standards/mpai-mmc/#Technologies

[7] MPAI-CUI Use Cases and Functional Requirement, N155; https://mpai.community/standards/mpai-cui/#Requirements

[8] Draft MPAI-GSA Use Cases and Functional Requirements, N156; https://mpai.community/standards/mpai-gsa/#Requirements

[9] Draft MPAI-SPG Use Cases and Functional Requirements, N157; https://mpai.community/standards/mpai-spg/#UCFR

[10] MPAI-EVC Use Cases and Functional Requirements, N92; https://mpai.community/standards/mpai-evc/#Requirements