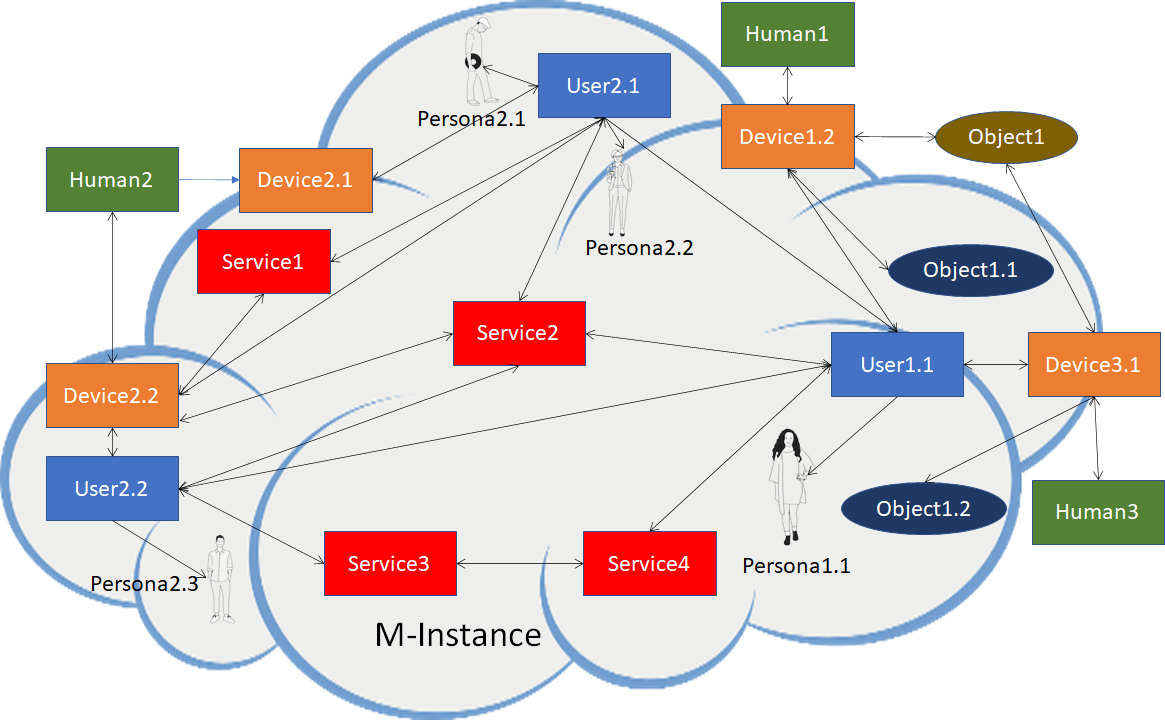

Introduction to MPAI’s Human and Machine Communication (MPAI-HMC) V1.1

One of the new Technical Specifications published by MPAI is Version 1.1 of Human and Machine Communication (MPAI-HMC). The title is intentionally broad: the scope is not meant to be narrow. Indeed, Human and Machine Communication is a vast area where new technologies are constantly introduced, more so today than before, thanks to the rapid progress of Artificial Intelligence. It is also a vast business area where new products, services, and applications are launched by the day,…