“Digital humans” are computer-created digital objects that can be rendered with a human appearance; they are also called Avatars. Because Avatars have mostly been created, animated, and rendered in closed environments, it is no surprise that there has been very little need for standards.

In a communication context, say, in an interoperable metaverse, digital humans may not be constrained to be in a closed environment. Therefore, if a sender requires that a remote receiving client reproduce a digital human as intended by the sender, standards are needed.

Technical Specification: Avatar Representation and Animation is a first response to this need, with the following goals:

- Objective1: to enable a user to reproduce a virtual environment as intended.

- Objective2: to enable a user to reproduce a sender’s avatar and its animation as intended by the sender.

- Objective3: to estimate the personal status of a human or avatar.

- Objective4: to display an avatar with a selected personal status.

Personal Status is a data type standardised by Multimodal Conversation V2 representing the ensemble of the information internal to a person, including Emotion, Cognitive State, and Attitude. See more on Personal Status here.

The MPAI-ARA standard has been designed to provide all the standards that are required to implement the Avatar-Based Videoconference Use Case where Avatars having the visual appearance and uttering the real voice of human participants meet in a virtual environment (Figure 1).

![]()

Figure 1 – Avatar-Based Videoconference

MPAI-ARA assumes that the system is composed of four subsystems, as depicted in Figure 2.

![]()

Figure 2 – Avatar-Based Videoconference System

This is how the system works:

Remotely located Transmitting Clients send to the Server:

- At the beginning:

  - Avatar Model(s) and Language Preferences.

  - Speech Object and Face Object for Authentication.

- Continuously:

  - Avatar Descriptors and Speech.
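
The two phases of the Transmitting Client can be sketched as two message types. This is a minimal illustration, not the MPAI-ARA format: the class names `SessionSetup`, `StreamUpdate`, and `TransmittingClient`, the use of raw `bytes` as payloads, and the `outbox` list standing in for a network connection are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class SessionSetup:
    """Sent once at the beginning (hypothetical container)."""
    avatar_models: List[bytes]
    language_preferences: List[str]
    speech_object: bytes  # used by the Server for authentication
    face_object: bytes    # used by the Server for authentication


@dataclass
class StreamUpdate:
    """Sent continuously during the session (hypothetical container)."""
    avatar_descriptors: bytes
    speech: bytes


@dataclass
class TransmittingClient:
    setup: SessionSetup
    # Stand-in for the connection to the Server (assumption).
    outbox: List[Union[SessionSetup, StreamUpdate]] = field(default_factory=list)

    def start(self) -> None:
        """Send the one-off setup data."""
        self.outbox.append(self.setup)

    def send_update(self, avatar_descriptors: bytes, speech: bytes) -> None:
        """Send one continuous update; requires start() to have run."""
        if not self.outbox:
            raise RuntimeError("start() must be called before send_update()")
        self.outbox.append(StreamUpdate(avatar_descriptors, speech))
```

Keeping the one-off data and the continuous data in separate types mirrors the split in the list above and makes it harder to stream updates before the session has been set up.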

The Server:

- At the beginning:

  - Selects an Environment, e.g., a meeting room.

  - Equips the room with objects, i.e., meeting table and chairs.

  - Places Avatar Models around the table.

  - Distributes Environment, Avatars, and their positions to all receiving Clients.

  - Authenticates Speech and Face Objects.

- Continuously:

  - Translates Speech from participants according to Language Preferences.

  - Sends Avatar Descriptors and Speech to receiving Clients.
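
The Server's continuous translation step can be sketched as a routing function. This is only an illustration under stated assumptions: the function name `route_speech`, the single preferred language per participant, the `translate` callback, and the choice not to send speech back to the speaker are all hypothetical and are not taken from MPAI-ARA.

```python
from typing import Callable, Dict

# Hypothetical signature: (speech, source_language, target_language) -> speech
Translator = Callable[[bytes, str, str], bytes]


def route_speech(
    speaker_id: str,
    speech: bytes,
    preferences: Dict[str, str],
    translate: Translator,
) -> Dict[str, bytes]:
    """Return the speech each receiving client should get.

    `preferences` maps participant id to preferred language (assumption:
    one language per participant). Speech is translated only when the
    receiver's language differs from the speaker's.
    """
    source = preferences[speaker_id]
    routed: Dict[str, bytes] = {}
    for receiver_id, target in preferences.items():
        if receiver_id == speaker_id:
            continue  # assumption: the speaker does not receive their own speech
        if target == source:
            routed[receiver_id] = speech
        else:
            routed[receiver_id] = translate(speech, source, target)
    return routed
```

A real deployment would plug a speech translation module into `translate`; passing it in as a parameter keeps the routing logic independent of any particular translator.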

The Virtual Secretary:

- Receives Text, Speech, and Avatar Descriptors of conference participants.

- Recognises Speech streams.

- Refines Recognised Text and extracts Meaning.

- Extracts Avatars’ Personal Status.

- Produces a Summary.

- Produces Edited Summary using the comments received from participants.

- Produces Text and Personal Status.

- Creates Speech and Avatar Descriptors from Text and Personal Status.

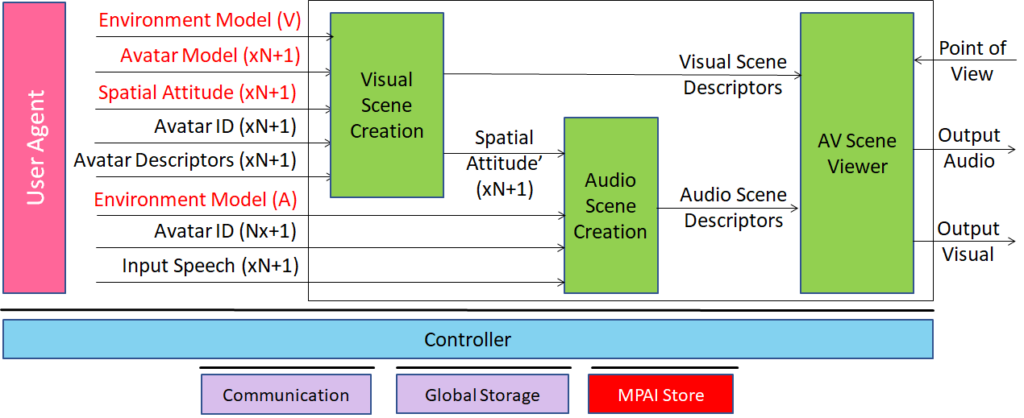

The Receiving Clients:

- At the beginning, receive:

  - Environment Model.

  - Avatar Models.

  - Spatial Attitudes.

- Continuously:

  - Create Audio and Visual Scene Descriptors.

  - Render the Audio-Visual Scene from the Point of View selected by the Participant.

Only the Receiving Client of the Avatar-Based Videoconference is depicted in Figure 3.

Figure 3 – Receiving Client of Avatar-Based Videoconference

The data types used by the Avatar-Based Videoconference use case are given in Table 1.

Table 1 – Data Types used by ARA-ABV

| Name of Data Format | Specified by |
| --- | --- |
| Environment | OSD |
| Body Model | ARA |
| Body Descriptors | ARA |
| Face Model | ARA |
| Face Descriptors | ARA |
| Avatar Model | ARA |
| Avatar Descriptors | ARA |
| Spatial Attitude | OSD |
| Audio Scene Descriptors | CAE |
| Visual Scene Descriptors | OSD |
| Text | MMC |
| Language identifier | MMC |
| Meaning | MMC |
| Personal Status | MMC |
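
Table 1 can also be read as a lookup from data type to specifying standard. The sketch below copies the table into a Python dictionary and groups the entries by standard; the names `SPECIFIED_BY` and `group_by_standard` are illustrative only and are not part of any MPAI specification.

```python
from collections import defaultdict
from typing import Dict, List

# Contents of Table 1: data type -> standard that specifies it.
SPECIFIED_BY: Dict[str, str] = {
    "Environment": "OSD",
    "Body Model": "ARA",
    "Body Descriptors": "ARA",
    "Face Model": "ARA",
    "Face Descriptors": "ARA",
    "Avatar Model": "ARA",
    "Avatar Descriptors": "ARA",
    "Spatial Attitude": "OSD",
    "Audio Scene Descriptors": "CAE",
    "Visual Scene Descriptors": "OSD",
    "Text": "MMC",
    "Language identifier": "MMC",
    "Meaning": "MMC",
    "Personal Status": "MMC",
}


def group_by_standard(mapping: Dict[str, str]) -> Dict[str, List[str]]:
    """Invert the table: standard -> list of data types it specifies."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for data_type, standard in mapping.items():
        groups[standard].append(data_type)
    return dict(groups)


if __name__ == "__main__":
    for standard, data_types in group_by_standard(SPECIFIED_BY).items():
        print(f"{standard}: {', '.join(data_types)}")
```

Grouping the rows this way makes it easy to see which data types ARA specifies itself and which it takes from other standards.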

We note that MPAI-ARA itself specifies only Body Model and Descriptors, Face Model and Descriptors, and Avatar Model and Descriptors. The remaining data types are specified by three other MPAI standards, identified in Table 1 as OSD, CAE, and MMC.

The MPAI-ARA Working Draft (html, pdf) is published with a request for Community Comments. See also the video recordings (YT, WimTV) and the slides of the presentation made on 07 September. Comments should be sent to the MPAI Secretariat by 2023/09/26T23:59 UTC. MPAI will use the Comments received to develop the final draft planned to be published at the 36th General Assembly (29 September 2023).

As we said, this is a first contribution to avatar interoperability. MPAI will continue the development of Reference Software, start the development of Conformance Testing and study extensions of MPAI-ARA (e.g., compression of Avatar Description).