Shanghai, a humongous city of 30 million people, traditionally the commercial heart of China and much more besides, has hosted the Global AI Conference 2023, a cluster of events held at different locations in the city. I was invited to give two keynote speeches in two sessions that had metaverse and AI in their titles. Here I will introduce my second speech, whose content was largely influenced by my perceived need to promote understanding of the role of interoperability in the eventual success of the metaverse.

First the title of my speech: Is the metaverse possible?

I started by saying that I do not know if, how and when the metaverse will “happen”, but I do know why it should happen. That is because the metaverse can be considered the ultimate step of the 100-year-old digitisation process: it will bring new forms of human-human and human-machine interaction and will “remove” some of the daily-life “constraints” of the physical world (provided our bodies stay on the Earth…).

But I am not a blind believer in the metaverse because of the many hurdles lying ahead. In my speech I identified some of the interoperability hurdles that lie between us and a realised metaverse and suggested measures for each of them.

First hurdle: we should make sure that we agree on what we talk about. The lack of an agreed definition of the metaverse betrays a language problem, similar to the Tower of Babel’s. MPAI has a definition that tries to accommodate different needs.

The metaverse is a coordinated set of processes providing some or all of the following functions:

- To sense data from U-Locations.

- To process the sensed data and produce Items.

- To produce one or more environments populated by Items that can be either digitised or virtual, the latter with or without autonomy.

- To process Items from the M-Instance or potentially from other M-Instances to affect U- and/or M-Location using Items in ways that:

  - Are consistent with the goals set for the M-Instance.

  - Are performed within the capabilities of the M-Instance.

  - Comply with the Rules set for the M-Instance.

The first hurdle anticipates the next, namely, the lack of an agreed metaverse terminology. In the MPAI definition, U-/M-Locations indicate real/virtual world locations; Item indicates any type of data (in the metaverse we only have data and processes) that is recognised and supported in an M-Instance (Metaverse Instance).

The next suggestion is a consequence of the above: make sure that we agree on the meaning of words. It is so interesting to observe that everybody agrees on the importance of terminology and that explains why we have so many metaverse terminologies. The words used to indicate notions important for the metaverse are not important per se: the terminology could designate an avatar with the label A4BC. What matters is that a term reflects the metaverse model that people have in mind.

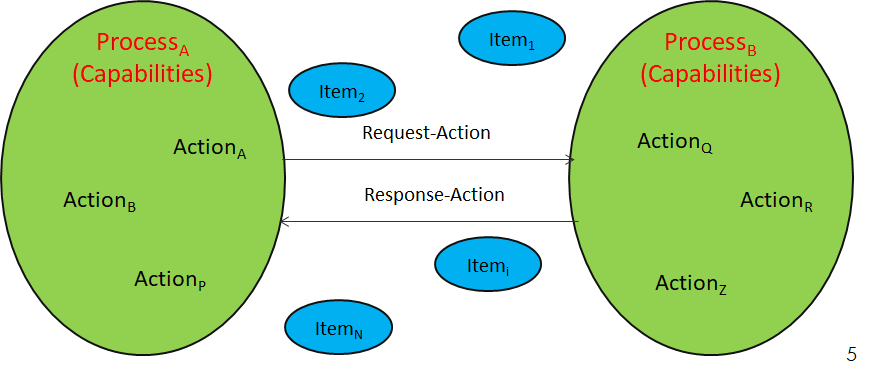

This leads us to the next hurdle: we do not have a common terminology because we do not have a common metaverse model. Hence the next suggestion: when you talk about interoperability make sure that your interlocutor shares the same metaverse model as you. The MPAI Metaverse Model is simple: a metaverse (actually, an M-Instance) is a set of Processes Acting on Items. Well, true, there are a few more details 😉, but the figure below gives an idea and adds another notion, i.e., that Processes not only perform Actions on Items – another non-marginal notion – but may also request other Processes to perform Actions on Items (if they have the Rights to do that).
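The "Processes Acting on Items" idea can be illustrated with a minimal sketch. This is not the MPAI specification: the class layout, the action name `animate` and the process names are hypothetical, and the choice to check Rights on the Process that performs the Action is an assumption made only for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    """Any data recognised and supported in an M-Instance."""
    name: str


@dataclass
class Process:
    """A Process holding Rights to perform some named Actions (hypothetical layout)."""
    name: str
    rights: set = field(default_factory=set)  # names of Actions this Process may perform

    def act(self, action: str, item: Item) -> str:
        """Perform an Action on an Item, but only if this Process has the Rights."""
        if action not in self.rights:
            raise PermissionError(f"{self.name} has no Rights to {action}")
        return f"{self.name} performed {action} on {item.name}"

    def request(self, other: "Process", action: str, item: Item) -> str:
        """Ask another Process to perform the Action on an Item."""
        return other.act(action, item)


avatar = Item("avatar")
animator = Process("animator", rights={"animate"})
user = Process("user")  # holds no Rights of its own

# The user cannot animate the avatar directly, but can ask the animator to do it.
print(user.request(animator, "animate", avatar))
# prints "animator performed animate on avatar"
```

In this toy model an M-Instance would simply be a collection of such Processes and Items; everything else (Rules, goals, capabilities) is left out.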

At this point, I decided to make a brief digression talking about MPAI. Even if you are familiar with MPAI, these six slides will tell you a lot about MPAI.

After identifying hurdles to metaverse interoperability and making suggestions about them, I decided to delve into the topic and defined Metaverse Interoperability as the ability of M-Instance #1 to use data from M-Instance #2 as intended by M-Instance #2.

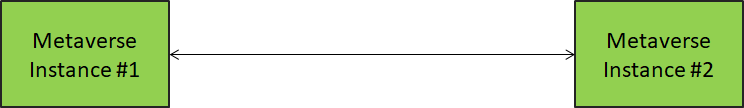

The figure below depicts the simplest form of interoperability, when the two M-Instances use the same data formats.

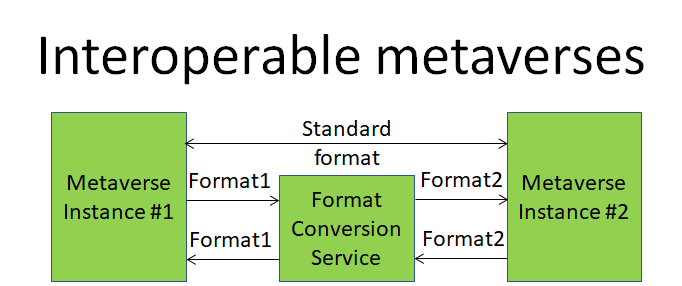

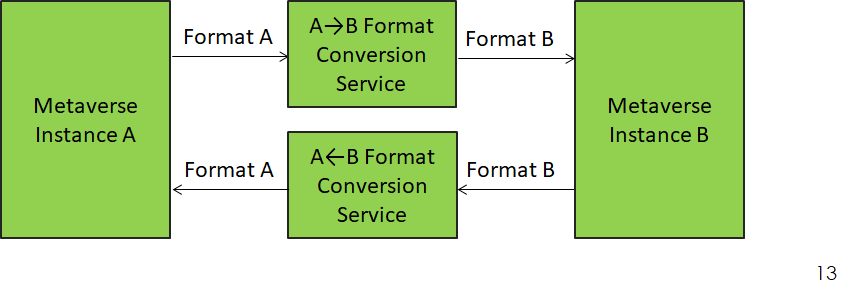

In the general case, however, we are not so lucky because some data formats are the same, but others are different. The figure below depicts how conversion services hopefully solve the problem.

Problem solved? Unfortunately, not. The interoperability ghost is still there haunting us.

If metaverseA and metaverseB use data formatA and data formatB, respectively, then for conversion to be lossless both M-Instances should attach the same meaning (i.e., semantics) to both data formatA and data formatB. If this condition is not satisfied, only part of the data in data formatA can be converted to data in data formatB.
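A small sketch may make the point about partial conversion concrete. The mapping table and the formatB codes (`joy`, `sorrow`, `anger`) are invented for illustration; only values whose meaning both M-Instances share can be converted, and the rest are lost.

```python
# Hypothetical semantic mapping from data formatA labels to data formatB codes.
# Only the meanings that both M-Instances share appear in the table.
A_TO_B = {"Happy": "joy", "Sad": "sorrow", "Angry": "anger"}


def convert(values):
    """Convert formatA values to formatB; return (converted, lost)."""
    converted = [A_TO_B[v] for v in values if v in A_TO_B]
    lost = [v for v in values if v not in A_TO_B]
    return converted, lost


converted, lost = convert(["Happy", "Fearful", "Sad"])
print(converted)  # ['joy', 'sorrow']
print(lost)       # ['Fearful']
```

The conversion service works at the format level, but "Fearful" has no agreed counterpart in formatB, so no amount of reformatting can carry it across.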

Therefore, we should pay attention to the data format but also to the data semantics, and the order is: data semantics first and data formats later. Let’s give an example. I want to transmit the emotional state of my face, quantised to one of Happy, Sad, Fearful, Angry, Surprised, and Disgusted (this is a very rough quantisation; MPAI used ten times more values). The meaning of these words may be more or less understood (because we have a shared semantics from everyday life), so if I say: “I am happy”, my message is more or less understood.

Human-to-human communication of the emotional state of happiness may be settled (probably less rather than more, because happiness has so many facets), but what if I want to tell my avatar in an M-Instance to show “happiness”?

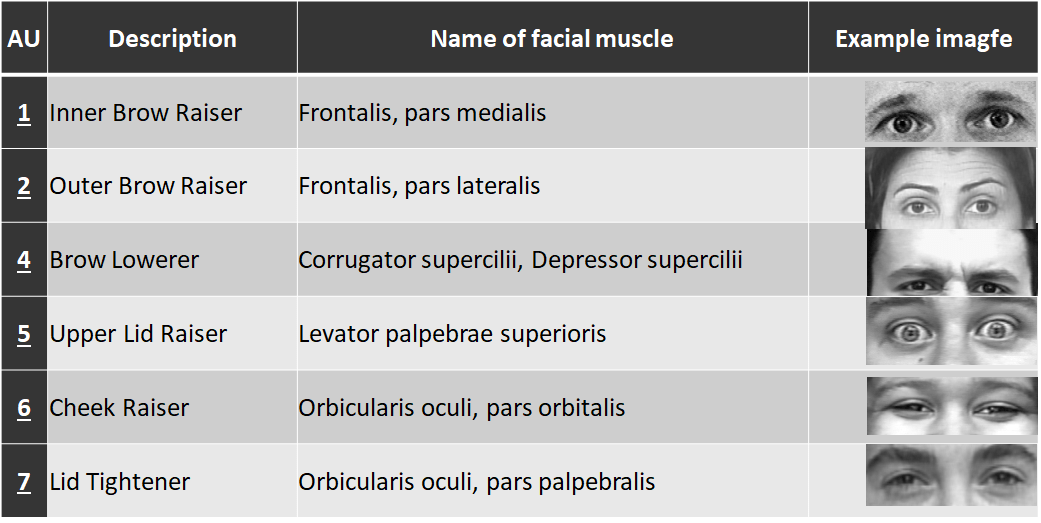

The human-shared semantics is no longer sufficient, but this may not be a problem because it has been a known problem since Paul Ekman (1978) published a seminal paper dealing with a Facial Action Coding System (FACS) connecting the movements of a set of facial muscles to a particular emotion displayed on the face. The figure below shows the name of some Facial Action Units with the anatomical name of the muscle and a figure of the part of the face affected (from here).

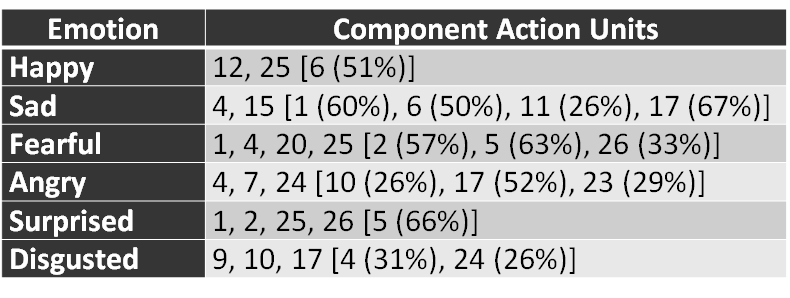

Thanks to lengthy experiments involving human subjects, it was possible to establish the correspondences of the table below (there are more correspondences for more emotions).

The figure above, however, highlights an obvious fact: not everybody expresses happiness in the same way. The first row of the figure says that all subjects tested use Action Units 12 (Lip Corner Puller) and 25 (Lips part) to express happiness, but more than 50% of the subjects also used the Cheek Raiser.
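The happiness row can be written down as data. The sketch below uses only the three Action Units named in the text; treating "used by all subjects" as a required set and "used by more than 50%" as an optional set is an assumption made for illustration, not a rule taken from FACS.

```python
# Action Units reported for happiness in the text above, keyed by name.
HAPPINESS = {
    "all_subjects": {"Lip Corner Puller", "Lips part"},
    "most_subjects": {"Cheek Raiser"},  # used by more than 50% of the subjects
}


def matches_happiness(active_units):
    """Return (is_match, optional_units_seen) for a collection of active Action Units."""
    active = set(active_units)
    is_match = HAPPINESS["all_subjects"] <= active  # every required unit is present
    optional = sorted(active & HAPPINESS["most_subjects"]) if is_match else []
    return is_match, optional


print(matches_happiness(["Lips part", "Lip Corner Puller", "Cheek Raiser"]))
# (True, ['Cheek Raiser'])
print(matches_happiness(["Lips part"]))
# (False, [])
```

Two faces that both "match" can still differ in the optional units, which is exactly why Action Units convey a shared meaning but not an individual's way of smiling.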

Therefore, Action Units provide only a sort of interoperability, because they do not exactly reproduce my way of expressing “happiness” or “anger”. A “better” result could be obtained by training an AI model with my emotions and then driving my avatar with the trained model.

The question, though, is whether this is interoperable. The answer is that it depends on the answer that 8 billion people will give to the next two questions:

- Does the metaverse keep 8 billion models for 8 billion humans?

- Are 8 billion humans willing to entrust their models to the metaverse?

A different approach would be to train my model with my emotions and then ask the model to generate Action Units.

The conclusion is that there are potentially many ways to interoperate. They are mostly driven by business considerations and depend on where you place the interface where data are exchanged.

My final point was that you are either interoperable by design, or you interoperate because the rest of the world has adopted your solution or because you have been forced to adopt someone else’s solution.

Interoperability by design offers a level playing field. The problem is that there are no interoperability specifications. The good news is that the MPAI Metaverse Model – Architecture specification, planned for a September 2023 release, will be a first step on the metaverse interoperability path, offering a specification for the semantics of Actions and Items.

The conclusions of my speech were that I remain a believer in a metaverse where people can fully express themselves with people who can fully express themselves, but I cannot guarantee that this will be possible, because it requires an interoperable approach to the metaverse.

I am also not sure I would like a metaverse world where people live tied to the M*** or A*** of the metaverse.

This work has been inspired by MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence, the international, unaffiliated, non-profit organisation developing standards for AI-based data coding.