Introduction

AI systems are typically trained. Humans, too, are trained. However, in spite of one’s efforts to train a pupil according to strict moral principles, the pupil may turn out to be a libertine in life. An illustrious example, so to speak, is Joseph Stalin who, from an Orthodox seminarian in Georgia, turned into something else.

AI systems typically do not possess the freedom to evolve beyond their training. Trained AI systems therefore carry the imprint of their teacher, i.e., the data set used to train them. If the AI system is meant for an application of wide and general use, however, this can cause problems: a user may be unable to explain certain outputs that the AI system produces in response to certain inputs, or may only be able to conclude that “the training was biased”.

In its Manifesto, MPAI pledges to address ethical questions raised by its technical work and acknowledges that its technical approach to AI system standardisation may provide some early practical responses.

MPAI’s approach to AI system standardisation

MPAI standards target components and systems enabled by data coding technologies, especially, but not necessarily, using AI. MPAI subdivides an AI System into functional components called AI Modules (AIM). An AI system is an aggregation of interconnected AIMs executed inside an AI Framework (AIF).

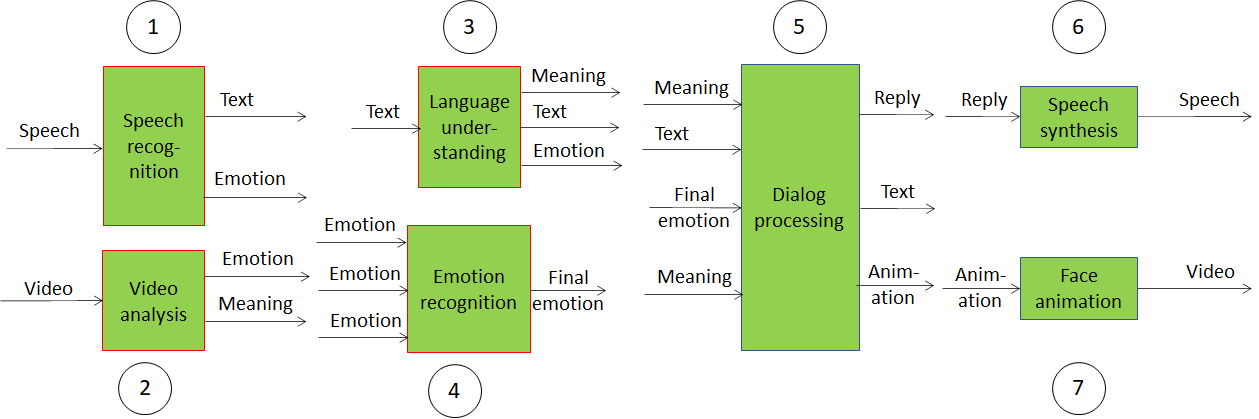

Let’s consider the following set of AI Modules

Figure 1 – A set of MPAI AI Modules (AIM)

The functions of the 7 AIMs are:

- from speech extract text and emotion

- from video extract emotion and the meaning of the sentence

- from text extract emotion and meaning, and pass text

- fuse three emotions into one

- from the text and video meanings, the text and the final emotion, produce vocal reply and video animation, and pass the text

- from reply produce synthetic speech

- from animation produce video animation.

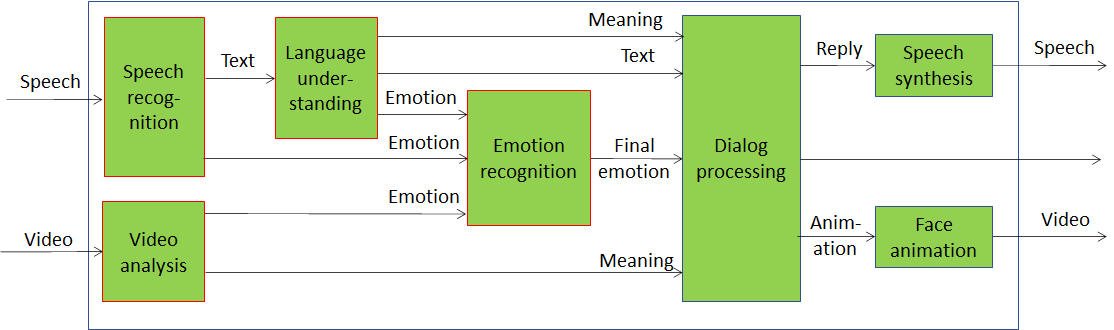

The 7 AIMs can be connected to produce the following AI system

Figure 2 – An implementation of an AI system
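The wiring of the 7 AIMs can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not MPAI-AIF itself: the AIM names, the channel names, and the `run` helper are all hypothetical, and each AIM body is a placeholder that returns canned values. What the sketch tries to show is that the topology (which AIM reads which data) is explicit and separate from the inside of each AIM.

```python
from typing import Callable, Dict, List, Tuple

Data = Dict[str, str]
AIM = Callable[[Data], Data]  # an AIM maps named inputs to named outputs

# Hypothetical stand-ins for the 7 AIMs; the internals are placeholders.
def speech_analysis(d: Data) -> Data:
    return {"text": "hello", "emotion_speech": "happy"}

def video_analysis(d: Data) -> Data:
    return {"emotion_video": "happy", "meaning_video": "greeting"}

def text_analysis(d: Data) -> Data:
    return {"emotion_text": "neutral", "meaning_text": "greeting",
            "text_out": d["text"]}

def emotion_fusion(d: Data) -> Data:
    # Fuse three emotions into one by simple majority (an assumption).
    votes = [d["emotion_speech"], d["emotion_video"], d["emotion_text"]]
    return {"emotion": max(set(votes), key=votes.count)}

def dialogue(d: Data) -> Data:
    return {"reply": f"reply to '{d['text_out']}' ({d['emotion']})",
            "animation": f"face:{d['emotion']}",
            "text_final": d["text_out"]}

def speech_synthesis(d: Data) -> Data:
    return {"synthetic_speech": f"audio<{d['reply']}>"}

def face_animation(d: Data) -> Data:
    return {"animated_face": f"video<{d['animation']}>"}

# Topology: (AIM name, function, input channels), listed in execution order.
TOPOLOGY: List[Tuple[str, AIM, List[str]]] = [
    ("SpeechAnalysis", speech_analysis, ["speech"]),
    ("VideoAnalysis", video_analysis, ["video"]),
    ("TextAnalysis", text_analysis, ["text"]),
    ("EmotionFusion", emotion_fusion,
     ["emotion_speech", "emotion_video", "emotion_text"]),
    ("Dialogue", dialogue,
     ["meaning_text", "meaning_video", "text_out", "emotion"]),
    ("SpeechSynthesis", speech_synthesis, ["reply"]),
    ("FaceAnimation", face_animation, ["animation"]),
]

def run(topology: List[Tuple[str, AIM, List[str]]], inputs: Data) -> Data:
    """Execute the AIMs in order, passing each one only its declared inputs."""
    channels = dict(inputs)
    for _name, aim, needed in topology:
        channels.update(aim({key: channels[key] for key in needed}))
    return channels
```

Calling `run(TOPOLOGY, {"speech": "...", "video": "..."})` returns every intermediate channel as well as the two final outputs, which is what makes the system inspectable.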

Explainability

An implementation of this reference diagram gives considerably more insight into the AI system and goes a long way towards explaining how a set of inputs (speech and video of a face) has produced a set of outputs (synthetic speech and animated face). It is conceivable that, if you have an AI system available, you can attach probes to the inputs and outputs of the AIMs.
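As a rough illustration of what such a probe could look like, the sketch below wraps an AIM so that each call records what went in and what came out. The `probe` helper, the log format, and the toy `emotion_fusion` AIM are assumptions made for this example; they are not part of any MPAI specification.

```python
from typing import Callable, Dict, List

Data = Dict[str, str]
AIM = Callable[[Data], Data]

def probe(name: str, aim: AIM, log: List[dict]) -> AIM:
    """Wrap an AIM so that every call records its inputs and outputs."""
    def probed(inputs: Data) -> Data:
        outputs = aim(inputs)
        log.append({"aim": name,
                    "inputs": dict(inputs),
                    "outputs": dict(outputs)})
        return outputs
    return probed

# Hypothetical AIM: fuses three emotions by simple majority.
def emotion_fusion(d: Data) -> Data:
    votes = [d["speech"], d["video"], d["text"]]
    return {"emotion": max(set(votes), key=votes.count)}

log: List[dict] = []
fusion = probe("EmotionFusion", emotion_fusion, log)
result = fusion({"speech": "angry", "video": "angry", "text": "neutral"})
```

After the call, `log` holds one record showing that the fused emotion came from two "angry" inputs and one "neutral" input, without looking inside the AIM.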

As this, however, is not something a regular consumer is able or willing to do, we can envisage testing laboratories that put the system under test and declare that a particular AI system is above or below a certain level of performance.

Here is the solution:

1. MPAI develops an AI Framework standard for executing aggregations of AIMs (MPAI-AIF)

2. MPAI develops standards that normatively specify

   - the functionality supported by a Use Case of which an AI system is an implementation

   - the functionalities of the AIMs and their input/output data (but not the inside of the AIMs)

   - the topology of the AIMs and their interconnections

3. MPAI develops an identification system of

   - MPAI standards, Use Cases, profiles, versions

   - Implementers

   - AIFs

   - AIMs

4. MPAI develops or approves testing means, i.e., procedures, software, data etc., to be used to test the performance of an AI system that implements an MPAI Use Case

5. MPAI authorises entities (implementers and laboratories) to test AI systems implemented in AI Frameworks and their AIMs.

A rigorous implementation of the above will allow a testing entity to affix a “Seal of Performance” and give consumers a level of confidence in an AI system.
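The identification and testing steps could be combined along the following lines. This is a minimal sketch under assumptions: the `Identification` fields, the accuracy-based `performance` measure, and the pass threshold are illustrative choices, since the text does not say how MPAI measures performance or what a seal contains.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass(frozen=True)
class Identification:
    """Hypothetical record identifying what was tested and who built it."""
    standard: str
    use_case: str
    version: str
    implementer: str

def performance(system: Callable[[str], str],
                test_set: List[Tuple[str, str]]) -> float:
    """Fraction of test inputs for which the system gives the expected output."""
    correct = sum(1 for x, expected in test_set if system(x) == expected)
    return correct / len(test_set)

def assess(ident: Identification,
           system: Callable[[str], str],
           test_set: List[Tuple[str, str]],
           required_level: float) -> dict:
    """Run the approved test data and state whether the level is reached."""
    score = performance(system, test_set)
    return {"id": ident, "score": score, "seal": score >= required_level}

# Toy system under test and toy test data (placeholders, not real MPAI data).
ident = Identification("EXAMPLE-STANDARD", "ExampleUseCase", "1.0", "ExampleCo")
test_set = [("a", "A"), ("b", "B"), ("c", "x"), ("d", "D")]
report = assess(ident, str.upper, test_set, required_level=0.7)
```

Here the toy system gets 3 of 4 test cases right, so it clears a required level of 0.7 but would not clear 0.8. A laboratory could publish such a report alongside the identification record.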

Of course, there will always be implementers who are not interested in consumers’ confidence. They may stop at points 1 and 2 above and disregard identification and testing.

Conclusion

By their choices, consumers will decide whether explainable AI systems are something they are interested in.