AI has generated easy enthusiasm but also fears. The narrative that has developed sees in AI a potentially dystopian, machine-ruled future more than a tool capable of improving the well-being of humanity.

Indeed, some AI technologies have the potential to transform society in disruptive ways, and that potential must be kept in check if we want to avoid serious problems. Just think of video deep fakes, or of the ability of advanced language models such as GPT-3 to generate ethically questionable output.

One problem is that AI is a new technology whose limitations and problems are sometimes difficult to understand and evaluate. This is illustrated by the frequent discovery of training biases or vulnerabilities, which could have disastrous impacts in systems that are mission-critical or make sensitive decisions.

The MPAI Technical Specification called “Governance of the MPAI Ecosystem (MPAI-GME)” deals with these issues. To address a problem, however, you first need to identify it. MPAI does that by defining the Performance of an Implementation of an MPAI standard as a collection of four attributes:

- Reliability: the Implementation performs as specified by the standard, profile and version it refers to, e.g., within the application scope and stated limitations, and for the period of time specified by the Implementer.

- Robustness: the ability of the Implementation to cope with data outside of the stated application scope with an estimated degree of confidence.

- Replicability: the assessment made by an entity can be replicated, within an agreed level, by another entity.

- Fairness: the training set and/or network is open to testing for bias and unanticipated results so that the extent of system applicability can be assessed.
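Of the four attributes, Replicability translates most directly into a check. In the hypothetical sketch below, an entity's assessment is reduced to numeric scores for the other three attributes, and Replicability holds if a second entity's scores fall within an agreed level of the first. The `AssessmentResult` type, the 0 to 1 score scale and the per-attribute absolute tolerance are assumptions made for illustration; MPAI-GME does not define them.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class AssessmentResult:
    """Hypothetical scores one entity assigns to an Implementation (0 to 1 scale assumed)."""
    reliability: float
    robustness: float
    fairness: float


def is_replicated(first: AssessmentResult, second: AssessmentResult,
                  agreed_level: float) -> bool:
    """True if every score from a second entity is within agreed_level of the first."""
    return all(
        abs(getattr(first, f.name) - getattr(second, f.name)) <= agreed_level
        for f in fields(AssessmentResult)
    )


if __name__ == "__main__":
    lab_a = AssessmentResult(reliability=0.92, robustness=0.80, fairness=0.88)
    lab_b = AssessmentResult(reliability=0.90, robustness=0.82, fairness=0.88)
    print(is_replicated(lab_a, lab_b, agreed_level=0.05))  # True
    print(is_replicated(lab_a, lab_b, agreed_level=0.01))  # False
```

A per-attribute tolerance is only one possible reading of “within an agreed level”; an aggregate distance over all scores would be an equally plausible choice.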

MPAI defines the role of “Performance Assessor”: an entity mandated to assess the degree to which an Implementation possesses the Performance attributes.

Who can be a Performance Assessor? A testing laboratory, a qualified company or even an Implementer, although in the last case the Implementer may not assess the Performance of its own Implementations. A Performance Assessor is appointed for a particular domain and for an indefinite duration, and may charge Implementers for its services. However, MPAI can revoke the appointment.
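The appointment rules can be read as a simple eligibility check. The sketch below is hypothetical: `Appointment`, `may_assess` and the string identifiers are illustrative names, not part of MPAI-GME, and the code models only the constraints stated above (domain, revocation, no self-assessment).

```python
from dataclasses import dataclass


@dataclass
class Appointment:
    """Hypothetical record of an MPAI appointment of a Performance Assessor."""
    assessor: str
    domain: str
    revoked: bool = False  # no expiry date: the appointment lasts until revoked


def may_assess(appointment: Appointment, domain: str, implementer: str) -> bool:
    """Return True if the Assessor may assess an Implementation by `implementer` in `domain`."""
    if appointment.revoked:            # MPAI has revoked the appointment
        return False
    if appointment.domain != domain:   # appointed for a different domain
        return False
    # An Implementer acting as Assessor may not assess its own Implementations.
    return appointment.assessor != implementer


if __name__ == "__main__":
    lab = Appointment(assessor="TestLab", domain="MPAI-CUI")
    acme = Appointment(assessor="AcmeAI", domain="MPAI-CUI")
    print(may_assess(lab, "MPAI-CUI", "AcmeAI"))   # True
    print(may_assess(acme, "MPAI-CUI", "AcmeAI"))  # False: self-assessment
```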

In making its assessments, a Performance Assessor is guided by the Performance Assessment Specification (PAS), the fourth component of an MPAI Standard. A PAS specifies the characteristics of the procedure, the tools and the datasets an Assessor uses when assessing the Performance of an Implementation.

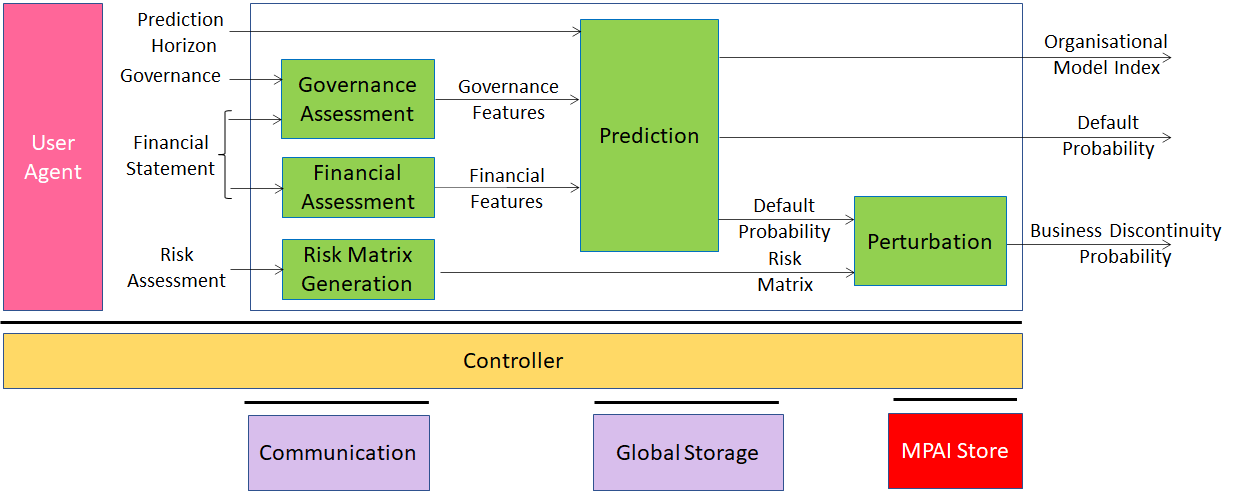

MPAI has developed the PAS of the Compression and Understanding of Industrial Data Standard (MPAI-CUI). Using governance data, financial statement data and assessments of cyber and seismic risk, MPAI-CUI predicts, for a given prediction horizon, a company's default and business discontinuity probabilities and the adequacy of its organisational model. Of course, a company's outlook depends on more risks than cyber and seismic ones, but the standard in its current form takes only these two into account.

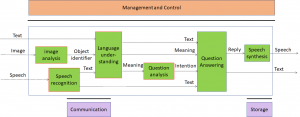

The figure below gives the referencemodelof the standard.

Let’s see what the MPAI-CUI PAS actually says.

A Performance Assessor shall assess the Performance of an Implementation using a dataset satisfying the following requirements:

- The turnover of the companies used to create the dataset shall be between 1 M$ and 50 M$.

- The Financial Statements used shall cover 5 consecutive years.

- The last year of the Financial Statements and Governance data shall be the year the Performance is assessed.

- No Financial Statement, Governance or risk data shall be missing.
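The dataset requirements above lend themselves to a mechanical check. The following sketch is a hypothetical illustration, not part of the MPAI-CUI PAS: the `CompanyRecord` fields, the use of millions of US dollars for turnover, the inclusive turnover bounds and the reading of “5 consecutive years” as exactly five are all assumptions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class CompanyRecord:
    """Hypothetical entry of an assessment dataset (field names are assumptions)."""
    name: str
    turnover_musd: float            # turnover in millions of US dollars
    statement_years: List[int]      # years covered by the Financial Statements
    governance_year: Optional[int]  # last year of Governance data, None if missing
    has_risk_data: bool             # cyber and seismic risk data present


def company_satisfies_pas(record: CompanyRecord, assessment_year: int) -> bool:
    """Check one company against the dataset requirements (assumed readings)."""
    # Turnover between 1 M$ and 50 M$, bounds assumed inclusive.
    if not 1.0 <= record.turnover_musd <= 50.0:
        return False
    # Financial Statements for exactly 5 consecutive years...
    years = sorted(record.statement_years)
    if len(years) != 5 or years != list(range(years[0], years[0] + 5)):
        return False
    # ...whose last year, like that of the Governance data, is the assessment year.
    if years[-1] != assessment_year or record.governance_year != assessment_year:
        return False
    # No risk data may be missing.
    return record.has_risk_data


def dataset_satisfies_pas(records: List[CompanyRecord], assessment_year: int) -> bool:
    """A dataset qualifies only if it is non-empty and every company qualifies."""
    return bool(records) and all(
        company_satisfies_pas(r, assessment_year) for r in records
    )


if __name__ == "__main__":
    alpha = CompanyRecord("Alpha", 12.5, [2017, 2018, 2019, 2020, 2021], 2021, True)
    beta = CompanyRecord("Beta", 75.0, [2017, 2018, 2019, 2020, 2021], 2021, True)
    print(dataset_satisfies_pas([alpha], 2021))        # True
    print(dataset_satisfies_pas([alpha, beta], 2021))  # False: Beta exceeds 50 M$
```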

The assessment process shall then be carried out in three steps:

1. Compute the Default Probability for each company in the dataset that:
   - a. includes geographic location and industry types;
   - b. does not include geographic location and industry types.
2. Compute the Organisational Model Index for each company in the dataset that:
   - a. includes geographic location and industry types;
   - b. does not include geographic location and industry types.
3. Verify that:
   - a. the average Default Probabilities for 1.a and 1.b do not differ by more than 2%;
   - b. the average Organisational Model Indices for 2.a and 2.b do not differ by more than 2%.
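Steps 1 and 2 require running an MPAI-CUI Implementation, so the sketch below assumes their per-company outputs are already available as lists and implements only the comparison in step 3. The function names are hypothetical, and so is the interpretation of “2%”: the text does not say whether it is an absolute difference (2 percentage points) or a relative one, so both readings are offered, with the absolute one as an assumed default.

```python
from statistics import mean
from typing import Sequence


def averages_agree(values_a: Sequence[float], values_b: Sequence[float],
                   tolerance: float = 0.02, relative: bool = False) -> bool:
    """Compare the averages of an 'a' run and a 'b' run.

    By default the difference is absolute (0.02 = 2 percentage points on a
    probability scale); with relative=True it is taken as a fraction of the
    larger average. Which reading the PAS intends is an assumption here.
    """
    avg_a, avg_b = mean(values_a), mean(values_b)
    diff = abs(avg_a - avg_b)
    scale = max(abs(avg_a), abs(avg_b))
    if relative and scale > 0:
        diff /= scale
    return diff <= tolerance


def passes_cui_assessment(dp_a: Sequence[float], dp_b: Sequence[float],
                          omi_a: Sequence[float], omi_b: Sequence[float]) -> bool:
    """Step 3: both the Default Probability (1.a vs 1.b) and the
    Organisational Model Index (2.a vs 2.b) averages must agree."""
    return averages_agree(dp_a, dp_b) and averages_agree(omi_a, omi_b)


if __name__ == "__main__":
    # Hypothetical per-company outputs of steps 1 and 2.
    dp_with, dp_without = [0.10, 0.20, 0.30], [0.11, 0.21, 0.29]
    omi_with, omi_without = [0.70, 0.80], [0.60, 0.70]
    print(averages_agree(dp_with, dp_without))  # True: averages differ by about 0.003
    print(passes_cui_assessment(dp_with, dp_without, omi_with, omi_without))  # False
```

In this example the Default Probability check passes but the Organisational Model Index averages (0.75 and 0.65) differ by 0.10, so the Implementation would fail step 3.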

The MPAI Store will use the result of the Performance Assessment to label an Implementation.

Although specific to one application, the example provided in this article is a sufficient indication that the governance of the MPAI ecosystem has been designed to offer a practical solution to a difficult problem, one that risks depriving humankind of a potentially beneficial technology.

If only we can separate the wheat from the chaff.