(Freepik image)

Global efforts to reduce the impact of human presence on the Earth that hosts us should include Connected Autonomous Vehicles (CAVs). However, the moment when anyone will be able to ask a CAV, in plain language, to take them to a destination, with the CAV essentially performing the functions we perform behind the wheel, is still far away.

One way to bring that moment closer is to develop standards for components with specified functions and interfaces that can be easily integrated into larger systems. At its 34th General Assembly, MPAI issued a Call for Technologies for “Architecture”, the first phase of one of its earliest projects, “Connected Autonomous Vehicle (MPAI-CAV)”. The goal is to use the responses to the Call to finalise the current baseline MPAI-CAV specification, which is already quite mature. This will be followed by a series of standards, each adding more detail and strengthening the interoperability of CAV components.

A disclaimer: MPAI does not intend to include the mechanical parts of a CAV in the planned Technical Specification: Connected Autonomous Vehicle – Architecture. It only intends to refer to the interfaces between the Motion Actuation Subsystem and those mechanical parts.

The MPAI-CAV – Architecture standard targeted by the Call partitions a CAV into subsystems, and subsystems into components. Both are identified by their functions and interfaces, i.e., the data exchanged between MPAI-CAV subsystems and components.

MPAI defines a CAV as a system that:

- Moves in an environment like the one depicted in Figure 1.

- Has the capability to autonomously reach a target destination by:

  - Understanding human utterances, e.g., the human’s request to be taken to a certain location.

  - Planning a Route.

  - Sensing the external Environment and building Representations of it.

  - Exchanging such Representations and other Data with other CAVs and CAV-aware entities, such as Roadside Units and Traffic Lights.

  - Making decisions about how to execute the Route.

  - Acting on the CAV motion actuation to implement the decisions.

Figure 1 – An environment of CAV operation

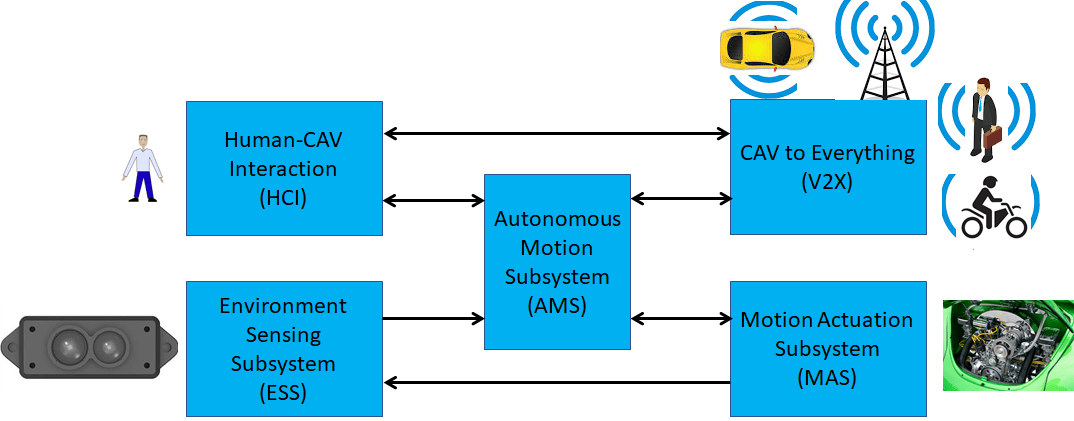

MPAI has found that a CAV can be effectively partitioned into four subsystems as depicted in Figure 2.

The MPAI-CAV – Architecture standard assumes that each of the four CAV Subsystems is an implementation of Version 2 of the MPAI AI Framework (MPAI-AIF) standard, an environment securely executing AI Workflows (AIW) composed of AI Modules (AIM).

The MPAI-CAV – Architecture standard will specify the following elements for each subsystem:

- The Function of the Subsystem.

- The input/output data of the Subsystem.

- The topology of the Components (AI Modules) of the Subsystem.

- For each AI Module of the Subsystem:

  - The Function.

  - The input/output data.
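The elements listed above map naturally onto a small data model. The Python sketch below is only an illustration under our own assumptions: the classes `AIModule` and `Subsystem`, the helper `unconnected_data`, and the toy AIM names are hypothetical and do not come from the MPAI-CAV specification. The helper checks one property a Subsystem topology should have: every AIM input is fed by a Subsystem input or by another AIM, and every Subsystem output is produced by some AIM.

```python
from dataclasses import dataclass, field


@dataclass
class AIModule:
    # Hypothetical record: an AIM's Function and its input/output data names.
    name: str
    function: str
    inputs: list[str]
    outputs: list[str]


@dataclass
class Subsystem:
    # Hypothetical record: a Subsystem's Function, I/O data and AIM topology.
    name: str
    function: str
    inputs: list[str]
    outputs: list[str]
    aims: list[AIModule] = field(default_factory=list)


def unconnected_data(sub: Subsystem) -> list[str]:
    """List data names the topology cannot supply.

    An AIM input must come from a Subsystem input or another AIM's output;
    a Subsystem output must be produced by some AIM.
    """
    produced = {o for aim in sub.aims for o in aim.outputs}
    available = set(sub.inputs) | produced
    missing = [i for aim in sub.aims for i in aim.inputs if i not in available]
    missing += [o for o in sub.outputs if o not in produced]
    return missing


# Toy two-AIM topology (names invented for illustration only).
ess = Subsystem(
    name="Environment Sensing",
    function="Produce the Basic Environment Representation",
    inputs=["RADAR Data", "LiDAR Data"],
    outputs=["Basic Environment Representation"],
    aims=[
        AIModule("Scene Description", "Describe each sensor scene",
                 ["RADAR Data", "LiDAR Data"], ["Scene Descriptors"]),
        AIModule("Fusion", "Merge scene descriptors",
                 ["Scene Descriptors"], ["Basic Environment Representation"]),
    ],
)
print(unconnected_data(ess))  # prints []
```

Keeping the specification as data rather than prose makes consistency checks like this one mechanical.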

The four subsystems are briefly described below.

Human-CAV Interaction (HCI)

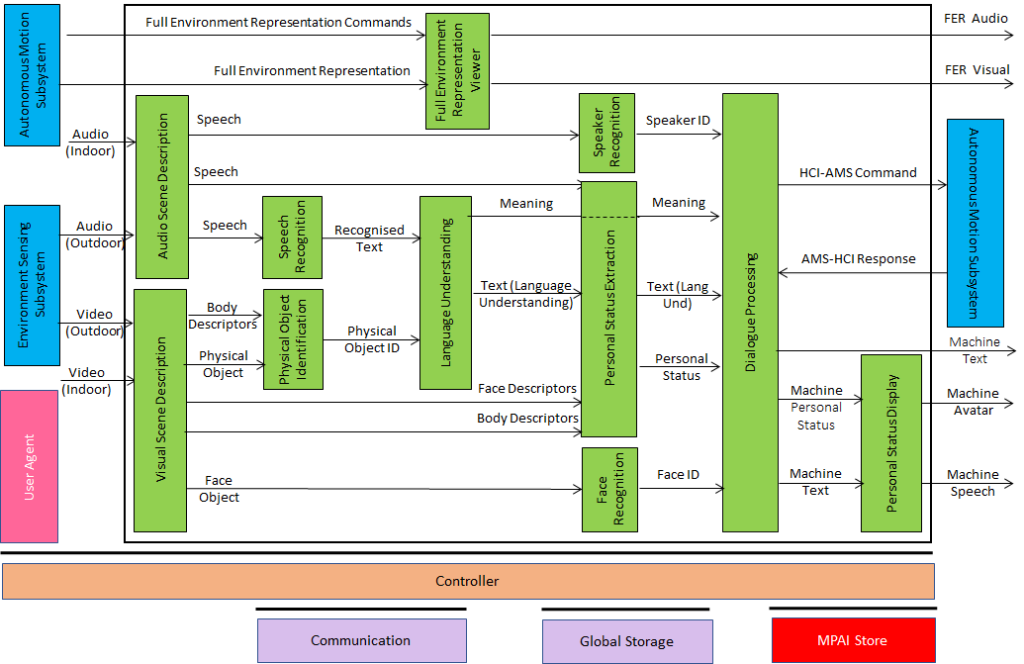

Figure 3 depicts the architecture of the HCI Subsystem.

Figure 3 – Human-CAV Interaction Reference Model

The HCI Subsystem comprises two AIMs that capture the audio and visual scenes, recognise the audio components (speech), and separate the visual components into generic objects and the face and body descriptors of humans. This information is used to 1) understand what the speaking human is saying, 2) extract the so-called Personal Status (a combination of emotion, cognitive state, and social attitude), and 3) identify the speaker from speech and face. The HCI can then process this information and respond to the human, manifesting itself as a speaking avatar that expresses a Personal Status. The HCI may also act as the intermediary between the human and the Autonomous Motion Subsystem, e.g., when the human tells the CAV their intended destination. A CAV passenger may also want to look into the CAV’s Environment Representation.

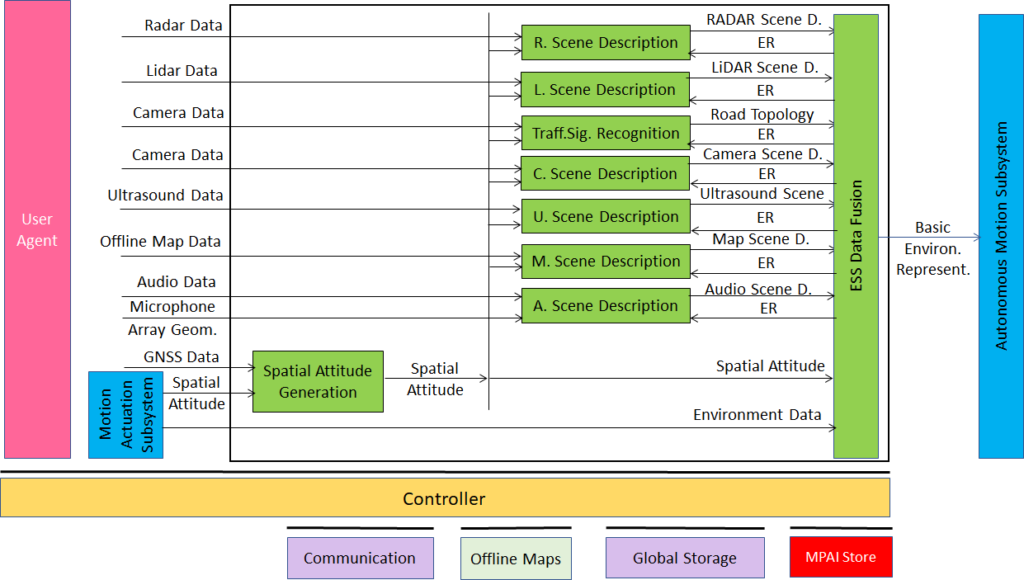
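As an illustration of the HCI data described above, the following sketch models Personal Status as its three factors and shows one simple way to combine speech-based and face-based identification. The class `PersonalStatus`, the function `identify_speaker`, the 0..1 score scale, the score averaging and the 0.5 threshold are all assumptions made for this example, not the method of the standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PersonalStatus:
    # The three factors named in the text; free-text labels are an assumption.
    emotion: str
    cognitive_state: str
    social_attitude: str


def identify_speaker(speech_scores: dict[str, float],
                     face_scores: dict[str, float],
                     threshold: float = 0.5) -> Optional[str]:
    """Fuse per-candidate match scores (0..1) from speech and face recognition.

    A candidate missing from one modality scores 0.0 there. The candidate with
    the highest average score is returned if it reaches the threshold.
    """
    candidates = set(speech_scores) | set(face_scores)
    if not candidates:
        return None
    fused = {c: (speech_scores.get(c, 0.0) + face_scores.get(c, 0.0)) / 2
             for c in candidates}
    best = max(fused, key=lambda c: fused[c])
    return best if fused[best] >= threshold else None


status = PersonalStatus("calm", "attentive", "cooperative")
print(identify_speaker({"alice": 0.9, "bob": 0.4}, {"alice": 0.7, "bob": 0.8}))
# prints alice
```

Requiring agreement across both modalities means a strong match in only one of them (e.g., a voice heard but no face seen) is not enough on its own.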

Environment Sensing Subsystem (ESS)

The goal of the Environment Sensing Subsystem (ESS), depicted in Figure 4, is to acquire the best possible understanding of the external environment, called the Basic Environment Representation, using the sensors carried by the CAV, and to pass it to the Autonomous Motion Subsystem. Figure 4 shows RADAR, LiDAR, Cameras, Ultrasound, Offline maps, and Audio as sensors the CAV may carry. The data from the different Environment Sensing Technologies are processed to produce the Basic Environment Representation.

Figure 4 – Environment Sensing Subsystem Reference Model

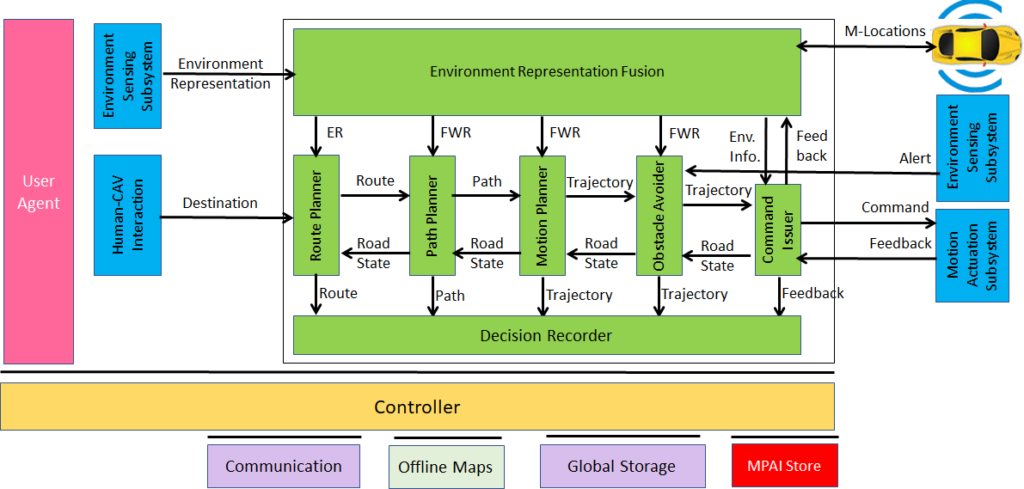
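A minimal sketch of how per-sensor data could be merged into a Basic Environment Representation follows. It assumes, purely for illustration, that every sensor already reports detections with shared object IDs, positions in the Ego CAV frame and a confidence value; `Detection` and `build_ber` are hypothetical names, and the "keep the most confident reading" rule is a deliberately simple stand-in for real sensor fusion.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    object_id: str                 # assumes all sensors share object IDs
    position: tuple[float, float]  # (x, y) in metres, Ego CAV frame (assumed)
    confidence: float              # 0..1


def build_ber(per_sensor: dict[str, list[Detection]]) -> dict[str, dict]:
    """Merge per-sensor detections into one entry per object.

    Keeps the most confident position and records which sensors saw it.
    """
    ber: dict[str, dict] = {}
    for sensor, detections in per_sensor.items():
        for d in detections:
            entry = ber.setdefault(d.object_id, {"position": d.position,
                                                 "confidence": d.confidence,
                                                 "sensors": []})
            entry["sensors"].append(sensor)
            if d.confidence > entry["confidence"]:
                entry["position"] = d.position
                entry["confidence"] = d.confidence
    return ber


ber = build_ber({
    "RADAR": [Detection("car1", (10.0, 2.0), 0.7)],
    "LiDAR": [Detection("car1", (10.2, 2.1), 0.9), Detection("ped1", (5.0, -1.0), 0.8)],
    "Camera": [Detection("ped1", (5.1, -1.0), 0.6)],
})
print(ber["car1"])
```

Recording which sensors contributed to each object lets later stages judge how well corroborated an object is.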

Autonomous Motion Subsystem (AMS)

The Autonomous Motion Subsystem, depicted in Figure 5, is where information is acted upon. Specifically, it acts on instructions received from the human via the HCI Subsystem, produces the Full Environment Representation by enhancing the Basic Environment Representation with information exchanged with CAVs in range, and issues commands to the Motion Actuation Subsystem to reach the intended destination.

Figure 5 – Autonomous Motion Subsystem Reference Model

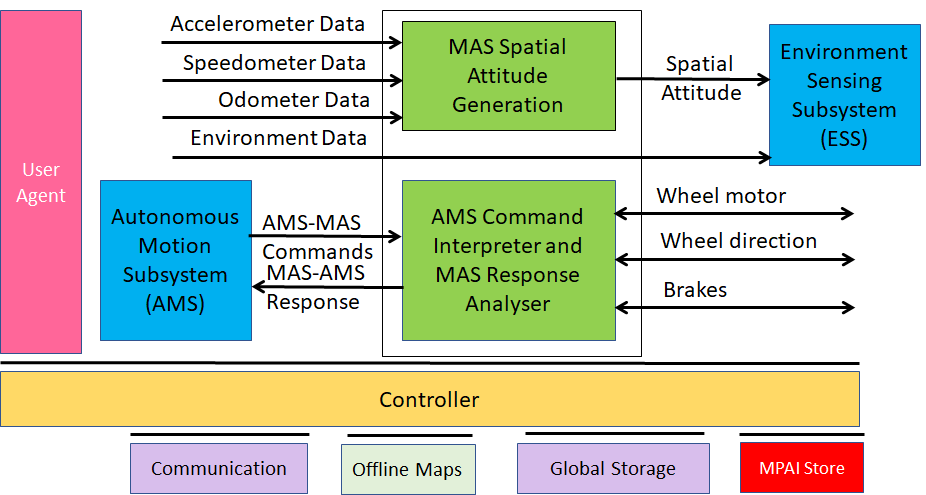
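The step from Basic to Full Environment Representation can be sketched in the same spirit. In this hypothetical `build_fer` function, each representation is a plain dictionary of object entries; positions are assumed to already be in a common reference frame, and the Ego CAV's own readings are assumed to take precedence, so data from CAVs in range only adds objects the Ego CAV could not sense. A real AMS would need coordinate transforms, timing alignment and trust checks that this sketch omits.

```python
def build_fer(local_ber: dict[str, dict],
              remote_bers: dict[str, dict[str, dict]]) -> dict[str, dict]:
    """Combine the Ego CAV's BER with BERs received from CAVs in range.

    Assumptions: all positions already share one reference frame, and the
    Ego CAV's own readings take precedence; remote data only adds objects
    the Ego CAV did not sense (e.g., occluded ones).
    """
    # Copy local entries so the input BER is not modified.
    fer = {oid: {**entry, "source": "ego"} for oid, entry in local_ber.items()}
    for cav_id, ber in remote_bers.items():
        for oid, entry in ber.items():
            # Only adds objects not already present (first sender wins).
            fer.setdefault(oid, {**entry, "source": cav_id})
    return fer


local = {"car1": {"position": (10.2, 2.1), "confidence": 0.9}}
remote = {"cav-B": {"car1": {"position": (10.0, 2.0), "confidence": 0.95},
                    "ped9": {"position": (30.0, 4.0), "confidence": 0.8}}}
fer = build_fer(local, remote)
print(sorted((oid, e["source"]) for oid, e in fer.items()))
# prints [('car1', 'ego'), ('ped9', 'cav-B')]
```

Tagging each entry with its source keeps remote information distinguishable from what the Ego CAV sensed itself.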

Motion Actuation Subsystem (MAS)

The Motion Actuation Subsystem, depicted in Figure 6, provides spatial and other environment information to the ESS, implements the commands received from the AMS, and reports back to the AMS on their execution.

Figure 6 – Motion Actuation Subsystem Reference Model
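The command/feedback loop between the AMS and the MAS can be illustrated with a toy example. Everything here is hypothetical: the `MotionCommand` fields, the actuator limits and the `execute` function are invented for illustration, since the standard does not cover the mechanical parts and this sketch does not reflect the MPAI-CAV data formats. The MAS clamps a command to its limits and reports back what it actually applied.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MotionCommand:
    speed_mps: float     # requested speed in m/s (hypothetical field)
    steering_deg: float  # requested steering angle in degrees (hypothetical field)


@dataclass(frozen=True)
class ExecutionReport:
    applied: MotionCommand  # what the MAS actually applied
    fully_executed: bool    # True if nothing had to be clamped


def execute(cmd: MotionCommand,
            max_speed_mps: float = 30.0,
            max_steering_deg: float = 35.0) -> ExecutionReport:
    """Clamp a command to (assumed) actuator limits and report the result to the AMS."""
    speed = min(max(cmd.speed_mps, 0.0), max_speed_mps)
    steering = min(max(cmd.steering_deg, -max_steering_deg), max_steering_deg)
    applied = MotionCommand(speed, steering)
    return ExecutionReport(applied, applied == cmd)


report = execute(MotionCommand(speed_mps=40.0, steering_deg=-50.0))
print(report.applied, report.fully_executed)
# prints MotionCommand(speed_mps=30.0, steering_deg=-35.0) False
```

Returning the applied values, not just a success flag, gives the AMS what it needs to re-plan when a command could not be followed exactly.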

Finally, CAV-to-Everything (V2X) is the CAV component that allows the CAV Subsystems to communicate with entities external to the Ego CAV. For instance, the HCI of a CAV may send information to, or request it from, the HCI of another CAV (e.g., about road conditions affecting the passengers), or an AMS may send its Basic Environment Representation to, or request one from, the AMS of another CAV.
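The peer-to-peer pattern described above, where a Subsystem of the Ego CAV exchanges data with the same Subsystem of another CAV, can be sketched as a simple request/response bus. `V2XMessage`, `V2XBus`, and routing by (CAV, Subsystem) pair are assumptions made for illustration; the sketch ignores radio transport, range, and security entirely.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class V2XMessage:
    sender_cav: str
    subsystem: str  # peer Subsystem addressed, e.g. "HCI" or "AMS"
    payload: Any


class V2XBus:
    """Hypothetical in-memory stand-in for V2X (no radio, range or security)."""

    def __init__(self) -> None:
        self._handlers: dict[tuple[str, str], Callable[[V2XMessage], Any]] = {}

    def register(self, cav_id: str, subsystem: str,
                 handler: Callable[[V2XMessage], Any]) -> None:
        # A CAV exposes one handler per Subsystem it lets others reach.
        self._handlers[(cav_id, subsystem)] = handler

    def send(self, target_cav: str, msg: V2XMessage) -> Any:
        # Deliver to the same Subsystem on the target CAV and return its reply.
        handler = self._handlers.get((target_cav, msg.subsystem))
        if handler is None:
            raise LookupError(f"{target_cav} exposes no {msg.subsystem} endpoint")
        return handler(msg)


bus = V2XBus()
# cav-B's AMS answers requests with a toy Basic Environment Representation.
bus.register("cav-B", "AMS", lambda msg: {"ped9": {"position": (30.0, 4.0)}})
reply = bus.send("cav-B", V2XMessage("cav-A", "AMS", "request BER"))
print(reply)  # prints {'ped9': {'position': (30.0, 4.0)}}
```

Addressing messages by Subsystem keeps HCI-to-HCI and AMS-to-AMS traffic separate, mirroring the examples in the text.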

The Call for Technologies specifically requests comments on, modifications of, and additions to the MPAI-CAV Reference Model (see Figure 2), the Terminology (which extends over 6 pages), the functions of the subsystems, the input and output data of the subsystems, the functions of the AI Modules, and the input and output data of the AI Modules.

Submissions should be sent to the MPAI Secretariat by 15 August 2023.

Register for the online presentations of the Call for Technologies for the MPAI Connected Autonomous Vehicle – Architecture project, which seeks to promote the development of the CAV industry. The presentations will be held on 2023/07/26 at 8 UTC (https://l1nq.com/HXDow) and 15 UTC (https://l1nq.com/fAL4J).