MPAI is about Data Coding by Artificial Intelligence (AI), and this is not a statement of intention. Indeed, the first fully developed standard is MPAI-CUI – Compression and Understanding of Industrial Data. If you feed in financial statements, governance data, risk data (e.g., cyber and seismic) and a time horizon, an implementation of the standard gives you an estimate of the probability that the company will default, an assessment of the adequacy of its governance model, and the probability that the company will suffer a serious business interruption.

This is not the only case of data coding in MPAI. Work is ongoing on MPAI-SPG – Server-based Predictive Multiplayer Gaming, whose goal is to train a network to predict the game state of an online game server when client data are missing or a client is cheating.

Media are very important, and so is their data coding. MPAI has several audio- and speech-related activities, and one of them has already produced a standard: MPAI-12 approved the Technical Specification of MPAI-MMC, where MMC stands for Multimodal Conversation. The MMC Use Cases address audio-visual conversation with a machine impersonated by a synthetic voice and an animated face; requesting and receiving information via speech about a displayed object; and interpreting speech into one, two or many languages using a synthetic voice that preserves the features of the human speech.

The MPAI-CAE – Context-based Audio Enhancement Use Cases add a desired emotion to an emotionless speech segment, preserve old audio tapes, restore audio segments and improve the audio conference experience. In all of them, AI offers excellent solutions.

Audio is clearly important, but Video is important too, if in a different way. You don’t say “hearing is believing”, but you do say “seeing is believing”. Audio talks to your heart, but Video talks to your mind or, at least, to the left-hand side of your brain.

From its early days, MPAI has addressed AI-based video coding. Forty-five years of data processing have reduced the bitrate of digital video by 3 orders of magnitude. Thousands of researchers have achieved this impressive result by painstakingly analysing the results of different algorithms and discovering astute tricks to remove redundant information from the processed data. I am sure that more generations of researchers could continue inventing new tricks and saving bits, but that may not be the best strategy.

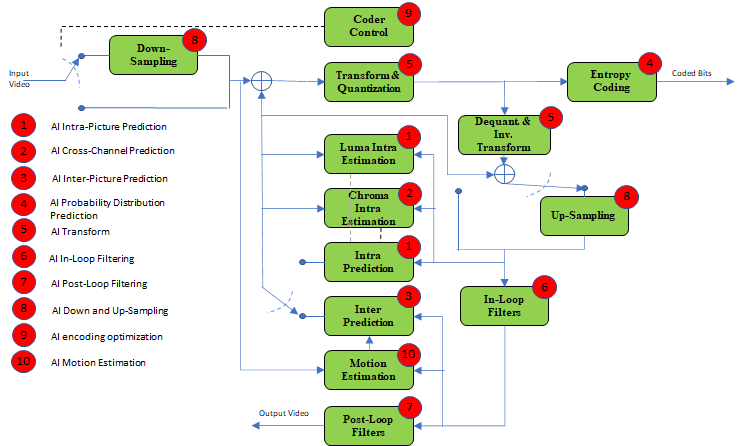

By training neural networks with real data, AI can discover patterns that humans might take ages to discover, or might never discover at all. The data produced by the algorithms that the MPEG-5 EVC standard applies to video are well suited to processing by specially designed neural networks.

The MPAI-EVC AI-Enhanced Video Coding project does exactly that.

MPAI-EVC takes the coding tools developed for MPEG-5 EVC and applies neural networks to them. At the moment, MPAI-EVC participants are working on Intraframe Prediction (improving intraframe coding), SuperResolution (applying smart interpolation to pictures outside the coding loop) and In-Loop Filter (doing the same with pictures inside the coding loop), and are already showing gains.

Starting from the “known knowns”, MPAI-EVC was the fastest way to obtain video coding gains, as the results already obtained prove.

My life has not been devoid of experiences of creating groups with a mission. It is always a great moment when you see people of high intellectual capacity buying into the idea of producing something immaterial, to which everybody brings an element without which the idea would not take shape.

MPAI-EEV – AI-based End-to-End Video Coding is another great example of creating a group. MPAI-EVC builds on 45 years of video coding, and we know that AI can do better. MPAI-EVC piggy-backs on an “alien” data-processing technology layer, but MPAI-EEV starts from the problem of a stream of visual data containing “snakes”, i.e., moving objects that we recognise when we “slice the snakes”, e.g., when we take a picture. Intuitively, we know that AI’s ability to build models will help reduce the information over and beyond the “simple” model of taking frames, computing block-wise motion vectors and using these to predict the next frame.
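For readers less familiar with that “simple” model, the Python sketch below shows textbook full-search block matching: each block of the current frame is compared with displaced blocks of the previous frame, the displacement with the smallest sum of absolute differences (SAD) becomes the block’s motion vector, and the vectors are then used to build a prediction of the frame. The block size (8), the search range (±4 pixels), the SAD criterion and the function names are illustrative assumptions; this is neither the MPEG-5 EVC motion estimation process nor anything MPAI-EEV has specified.

```python
import numpy as np


def estimate_motion(prev, curr, block=8, search=4):
    """Full-search block matching (illustrative assumption, not EVC's method).

    For each block of `curr`, find the displacement (dy, dx) within
    +/- `search` pixels that minimises the SAD against `prev`.
    Returns a dict mapping each block's top-left corner to its vector.
    """
    h, w = curr.shape
    vectors = {}
    for by in range(0, h - block + 1, block):
        for bx in range(0, w - block + 1, block):
            target = curr[by:by + block, bx:bx + block].astype(np.int32)
            best, best_sad = (0, 0), None
            for dy in range(-search, search + 1):
                for dx in range(-search, search + 1):
                    y, x = by + dy, bx + dx
                    # Skip candidates that fall outside the reference frame.
                    if y < 0 or x < 0 or y + block > h or x + block > w:
                        continue
                    cand = prev[y:y + block, x:x + block].astype(np.int32)
                    sad = int(np.abs(target - cand).sum())
                    if best_sad is None or sad < best_sad:
                        best, best_sad = (dy, dx), sad
            vectors[(by, bx)] = best
    return vectors


def predict(prev, vectors, block=8):
    """Motion-compensated prediction: copy each block from its matched
    position in `prev`. Pixels not covered by any block stay zero."""
    pred = np.zeros_like(prev)
    for (by, bx), (dy, dx) in vectors.items():
        pred[by:by + block, bx:bx + block] = \
            prev[by + dy:by + dy + block, bx + dx:bx + dx + block]
    return pred
```

Every step here is hand-designed (fixed blocks, translational motion, a SAD cost); the premise of MPAI-EEV is that trained models can capture the moving objects more efficiently than this.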

The good news is that MPAI is not alone in facing this “known unknown”. There is already literature reporting interesting results. The traditional approach is to let individual researchers explore a field and then organise opportunities, or “challenges”, where results are exchanged.

MPAI is adopting a closer collaboration approach. At the second MPAI-EEV meeting, three end-to-end video coding models published in the literature were presented and discussed. The same is planned for the upcoming third meeting. The goal is to identify promising approaches, build a reference model, carry out research, compare results and then proceed with another iteration.

MPAI is a standards developing organisation that pays particular attention to the involvement of stakeholders. In this exploratory phase, anybody can join the work. When results are obtained and MPAI feels that it is ready to move to the standard development phase, participation in the work will be limited to MPAI members.

But that is not going to be tomorrow 😉

For information, please contact the MPAI Secretariat (mailto:secretariat@mpai.community).