Artificial Intelligence (AI) offers great advantages to humans because an appropriately designed AI system can perform tasks that humans may not wish, or may not be able, to do, in ways that resemble to some degree what humans do. Advances in AI promise to keep improving the performance of AI systems.

In the early 1940s, science fiction writer Isaac Asimov devised the three laws a robot must obey: 1. do not injure a human or allow a human to be injured; 2. obey a human unless the order conflicts with law #1; and 3. protect yourself unless doing so conflicts with law #1 or #2. These may or may not be useful in a future world where humans share their lives with robots, but I do not know whether the field in which MPAI is currently interested in developing standards (processing data and producing information with AI) will need laws.

What I do know, however, is that, laws or no laws, I would like any AI system I use to satisfy some requirements. One of them is that the AI system be able to explain how it reached the result it provided. If I use an information service to get a recommendation on something, I would like to know how the AI system came up with that recommendation. I don’t think I would like to rely on an AI system whose only answer is “believe me, this is the best answer you can find”. Mutatis mutandis, this is the user experience when I use a web search engine. But this is another story.

I do not think this is an outlandish requirement because it is satisfied by the companies selling market studies. They describe how they got the data they used, but they are wary of sharing the methodology they used to produce the data.

The good news is that MPAI’s work methodology will allow it to develop standards that, if implemented in a conforming way, will satisfy the requirements that I have laid down. I interpret this to be what, in the AI lexicon, is called explainability.

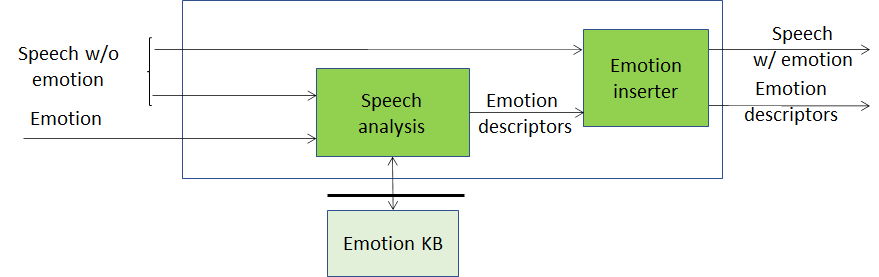

To explain why a conforming implementation of an MPAI standard is explainable (pun intended), let me start with a simple example: how MPAI approaches its emerging standard called “Emotion Enhanced Speech”, depicted in Figure 1.

Figure 1 – Model of Emotion Enhanced Speech (legacy data processing)

Here “plain” speech (i.e., neutral in terms of emotions) enters a box together with the emotion information (happiness, anger, etc.) that the box should add to the plain speech. MPAI addresses this problem by splitting the inside of the box into two boxes:

- Box #1 extracts features from the input speech and queries the Emotion Knowledge Base (KB) with the features and the input emotion to obtain “Emotion descriptors”.

- Box #2 uses the Emotion descriptors to convert the neutral input speech into speech with the desired type and grade of emotion.
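The two-box split can be sketched in a few lines of Python. This is only a toy illustration under my own assumptions: the `Speech` type, the contents of `EMOTION_KB`, the descriptor fields and the name `emotion_insertion` for Box #2 are all invented here and are not taken from the MPAI specification.

```python
from dataclasses import dataclass

# Hypothetical Emotion KB: maps (emotion, coarse pitch class) to descriptors.
# The entries and descriptor fields are invented for illustration.
EMOTION_KB = {
    ("happiness", "low"): {"pitch_scale": 1.3, "energy_scale": 1.2},
    ("happiness", "high"): {"pitch_scale": 1.1, "energy_scale": 1.2},
    ("anger", "low"): {"pitch_scale": 1.1, "energy_scale": 1.5},
    ("anger", "high"): {"pitch_scale": 0.9, "energy_scale": 1.5},
}


@dataclass
class Speech:
    """Toy stand-in for a real speech signal."""
    pitch_hz: float
    energy: float


def speech_analysis(speech: Speech, emotion: str) -> dict:
    """Box #1: extract a feature and query the Emotion KB with it."""
    pitch_class = "low" if speech.pitch_hz < 165.0 else "high"
    return EMOTION_KB[(emotion, pitch_class)]


def emotion_insertion(speech: Speech, descriptors: dict) -> Speech:
    """Box #2: apply the Emotion descriptors to the neutral speech."""
    return Speech(
        pitch_hz=speech.pitch_hz * descriptors["pitch_scale"],
        energy=speech.energy * descriptors["energy_scale"],
    )


def emotion_enhanced_speech(speech: Speech, emotion: str):
    """Chain the two boxes and expose the intermediate descriptors."""
    descriptors = speech_analysis(speech, emotion)
    return emotion_insertion(speech, descriptors), descriptors
```

Note that the function returns the Emotion descriptors along with the enhanced speech: the data passed between the two boxes stays visible to whoever calls the system.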

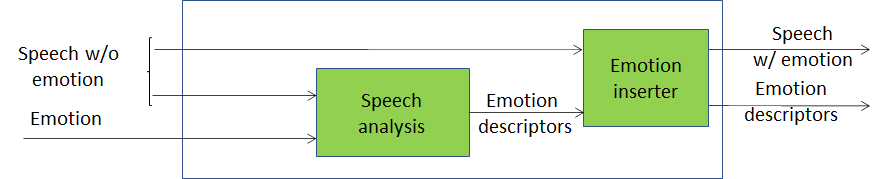

Figure 1 is an example of an Emotion Enhanced Speech implementation that uses legacy data processing technologies. The corresponding AI-based implementation is depicted in Figure 2.

Figure 2 – Model of Emotion Enhanced Speech (AI based)

The Speech analysis in Figure 2 is now a neural network that has been sufficiently trained to do what Speech analysis in Figure 1 does by accessing the Emotion KB. We expect that it will perform better.

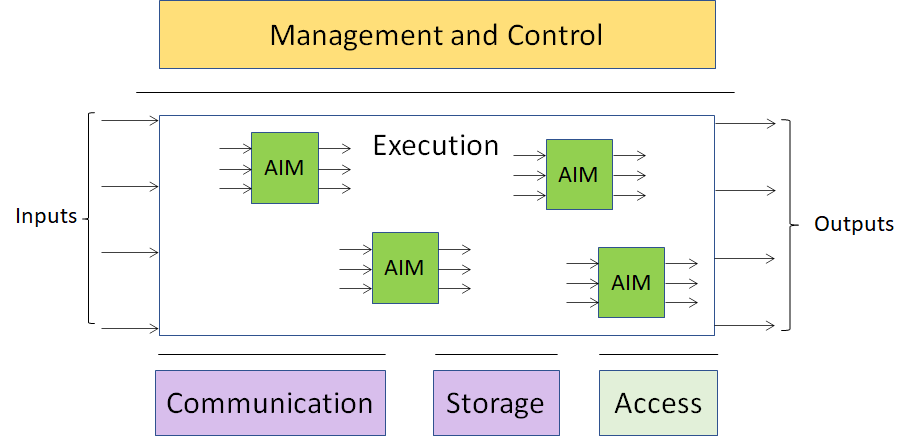

One pillar on which the MPAI standardisation rests is the notion of AI Module (AIM), e.g. the Speech Analysis AIM depicted in Figure 3.

Figure 3 – The Speech analysis AI Module (AIM)

MPAI standardises the I/O data of an AIM. However, MPAI is silent on the AIM internals. Those who develop better AIMs will be able to sell them at a higher price, at least until a competitor comes up with an even better AIM.
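In programming terms, this resembles fixing an interface while leaving the implementation free. The sketch below is my own illustration, not an MPAI API: the `SpeechAnalysisAIM` protocol, its signature and both implementations are hypothetical, and the "learned" variant is a fixed toy formula standing in for a trained network.

```python
from typing import Protocol


class SpeechAnalysisAIM(Protocol):
    """Hypothetical stand-in for the standardised I/O of an AIM."""

    def __call__(self, features: list[float], emotion: str) -> dict[str, float]:
        ...


def kb_speech_analysis(features: list[float], emotion: str) -> dict[str, float]:
    """Legacy-style internals: a table lookup (toy values)."""
    table = {"happiness": 1.2, "anger": 1.5}
    return {"energy_scale": table[emotion]}


def learned_speech_analysis(features: list[float], emotion: str) -> dict[str, float]:
    """Stand-in for a trained network: a toy formula, not a real model."""
    bias = {"happiness": 0.2, "anger": 0.5}[emotion]
    mean = sum(features) / len(features)
    return {"energy_scale": 1.0 + bias * mean}


def run(aim: SpeechAnalysisAIM, features: list[float], emotion: str) -> dict[str, float]:
    # The caller depends only on the I/O contract, never on the internals,
    # so one AIM can be swapped for a competitor's without other changes.
    return aim(features, emotion)
```

Either function can be passed to `run`, because both accept and return the same data. That is all the caller needs to know.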

The second MPAI pillar is the AI Framework (AIF), depicted in Figure 4.

Figure 4 – The AI Framework (AIF) architecture

An MPAI AI Framework is an environment where workflows of AIMs (e.g. those in Figure 1 and Figure 2) can be created and executed (actually, it does much more than this; see, e.g., here).
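To give a feel for what "creating and executing a workflow of AIMs" could mean, here is a deliberately minimal sketch. The `Workflow` class, its methods and the two lambda AIMs are hypothetical and of my own making. The real AIF architecture in Figure 4 does much more than this.

```python
from typing import Callable


class Workflow:
    """Toy stand-in for an AIF workflow: named AIMs wired by named data."""

    def __init__(self):
        self.steps = []  # each step: (name, aim, input keys, output key)

    def add(self, name: str, aim: Callable, inputs: list[str], output: str):
        self.steps.append((name, aim, inputs, output))
        return self  # allow chained calls

    def execute(self, **data):
        # Run the AIMs in the order they were added; each one reads and
        # writes named data items in a shared dictionary.
        for name, aim, inputs, output in self.steps:
            data[output] = aim(*(data[key] for key in inputs))
        return data


workflow = (
    Workflow()
    .add("speech_analysis",
         lambda speech, emotion: {"gain": 2.0 if emotion == "anger" else 1.5},
         ["speech", "emotion"], "descriptors")
    .add("conversion",
         lambda speech, descriptors: [s * descriptors["gain"] for s in speech],
         ["speech", "descriptors"], "output")
)

result = workflow.execute(speech=[0.1, 0.2], emotion="anger")
```

After execution, `result` holds the inputs, the intermediate descriptors and the final output under their own names.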

The answer to the obvious question “what are the advantages for MPAI of relying on these two pillars, instead of standardising end-to-end systems?” is given in the five points below:

- AIMs allow the reduction of a large problem to a set of smaller problems. Training and retraining AIMs is less onerous than doing the same for a much larger and more complex network.

- AIMs can be provided by independent developers to an open market, where the supply of new and better-performing solutions accelerates the improvement of larger AI systems.

- An application developer can build sophisticated and complex systems with potentially limited knowledge of the details of the technologies required by the system. In fact, AIM developers will typically not disclose their technologies for competitive reasons.

- MPAI systems allow for competitive comparisons of functionally equivalent AIMs.

- An MPAI system has an inherent explainability.

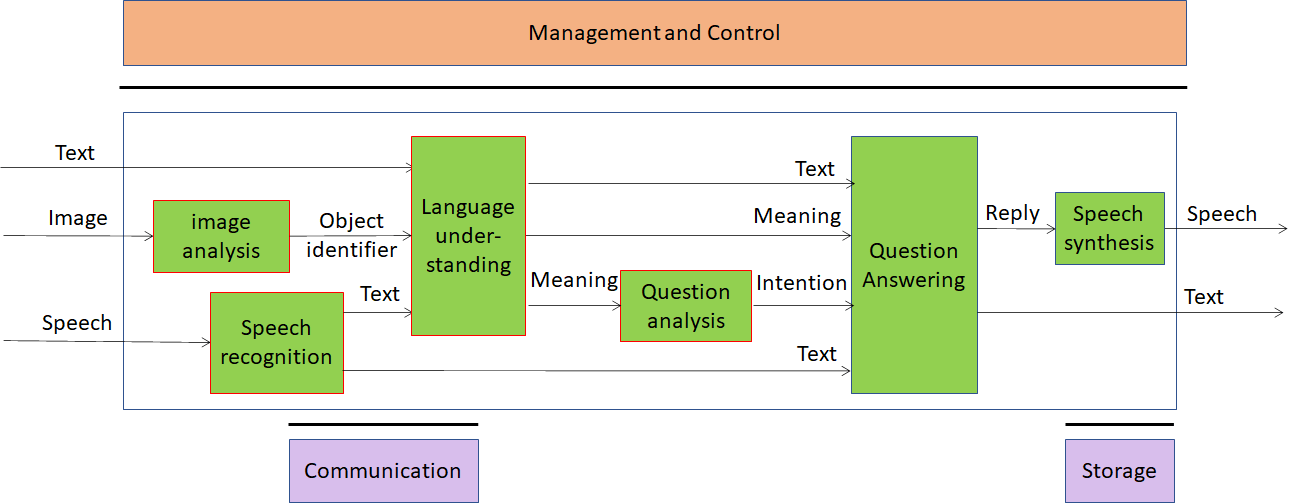

With the last point, I can finally explain in more detail why conforming implementations of an MPAI standard are explainable. As the two-AIM example introduced at the beginning of this article is probably not sufficiently explanatory, let me take a more sophisticated Use Case depicted in Figure 5.

Figure 5 – Multimodal Question Answering

This Use Case is about a user showing a picture and asking “where can I find this object?” Note that, so far, use cases like this one have been implemented as services where everything is in the cloud. But the “edge age” is coming. User devices will embed more and more intelligence, and users will expect their devices to provide answers they can rely on because they have a level of control over them.

This control is something MPAI standards will offer. If the AI system the user is using is based on the emerging MPAI Multimodal Conversation standard (MPAI-MMC), of which the Multimodal Question Answering Use Case is part, the user will be able to trace the flow and content of the information in and out of the AIMs. To return to the market studies example I used before, the inputs to the AIMs correspond to the description of the input data used for a market study, and the AIMs to the methodology used.
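Tracing the information in and out of the AIMs could look like the sketch below. Everything in it is an assumption of mine: the `traced` decorator, the three AIM names and their trivial bodies are hypothetical and are not the AIMs of Figure 5. The point is only that each AIM stays a black box while its inputs and outputs are recorded.

```python
import functools


def traced(name, trace):
    """Wrap an AIM so that every call records its inputs and its output."""
    def decorator(aim):
        @functools.wraps(aim)
        def wrapper(*args):
            result = aim(*args)
            trace.append({"aim": name, "inputs": args, "output": result})
            return result
        return wrapper
    return decorator


trace = []


@traced("object_identification", trace)
def identify_object(picture):
    return "teapot"  # a real AIM would analyse the picture


@traced("question_analysis", trace)
def analyse_question(text, object_name):
    return text.replace("this object", f"a {object_name}")


@traced("question_answering", trace)
def answer(question):
    return f"Searching shops for: {question}"


reply = answer(
    analyse_question("where can I find this object?",
                     identify_object("picture.png"))
)

for entry in trace:
    print(entry["aim"], entry["inputs"], "->", entry["output"])
```

The printed trace lists the AIMs in the order they ran, with what each one received and what it produced. That is the path a user could follow from the question to the answer.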

Legislating on a technology like AI is a hair-raising enterprise. I suggest that relying on competition between opaque and transparent AI systems is a more relaxed and reliable approach. I may be naïve, but I know what my choice will be between a system which says “believe me, this is the best you can find” and another which gives me the option to trace the path that has led the system to its conclusion.