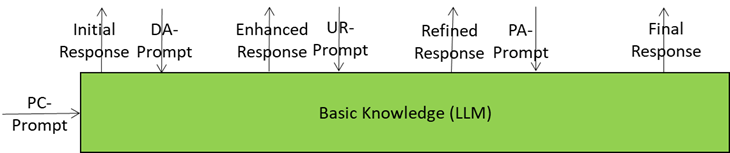

Basic Knowledge: The Generalist Engine Getting Sharper with Every Prompt

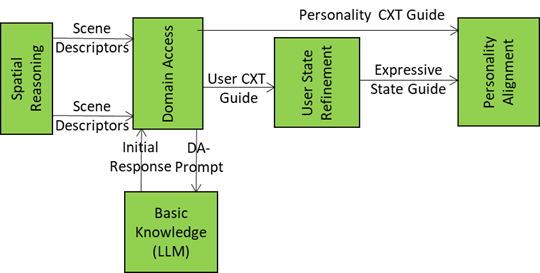

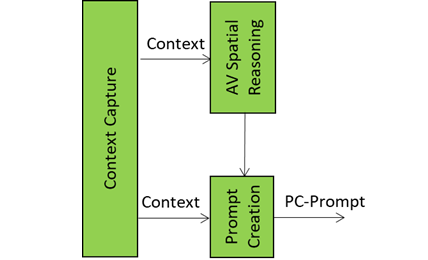

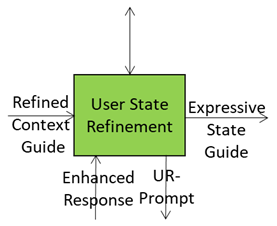

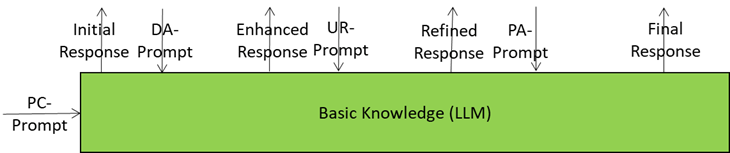

Basic Knowledge is the core language model of the Autonomous User, the “knows-a-bit-of-everything” brain. It provides the first response to a prompt, but the Autonomous User doesn't fire off just one answer: it produces four of them in a progressive refinement loop, with each response smarter and more context-aware than the last as the prompt is refined. We have already presented the system diagram of the Autonomous User (A-User), an autonomous agent able to move and interact (walk, converse, do things, etc.) with…
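The four-answer refinement loop can be pictured with a minimal sketch. It assumes that each round feeds the previous answer plus one new piece of context back into the prompt. The function name `progressive_refinement`, the `context_steps` parameter, and the toy model are hypothetical illustrations; the text does not say how the A-User actually refines its prompts.

```python
from typing import Callable, List

# Hypothetical stand-in for the Basic Knowledge model: any function that
# maps a prompt string to an answer string.
Model = Callable[[str], str]


def progressive_refinement(
    model: Model,
    prompt: str,
    context_steps: List[str],
    rounds: int = 4,
) -> List[str]:
    """Return one answer per round, refining the prompt between rounds.

    Assumption: round i > 0 rebuilds the prompt from the original question,
    the previous answer, and the (i-1)-th context snippet, if one exists.
    """
    answers: List[str] = []
    current_prompt = prompt
    for i in range(rounds):
        if i > 0:
            # Hypothetical refinement step: fold the last answer and the next
            # context snippet into the prompt for this round.
            extra = context_steps[i - 1] if i - 1 < len(context_steps) else ""
            current_prompt = (
                f"{prompt}\nPrevious answer: {answers[-1]}\n"
                f"Additional context: {extra}"
            )
        answers.append(model(current_prompt))
    return answers


if __name__ == "__main__":
    # Toy model that only reports how long the prompt it received was,
    # so the growing, refined prompt is visible in the output.
    def toy_model(p: str) -> str:
        return f"answer to a {len(p)}-char prompt"

    steps = ["scene layout", "nearby objects", "conversation history"]
    for n, a in enumerate(progressive_refinement(toy_model, "Where is the door?", steps), 1):
        print(n, a)
```

The first round answers the bare prompt, which matches the role of Basic Knowledge as the provider of the first response; later rounds see progressively richer prompts.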