- Introduction

Many people use the word metaverse, but it is unlikely that they all mean the same thing. In general, a metaverse instance is a rather complex communication and interaction environment with features such as synchronous and persistent experiences, and virtual reality features such as avatars that may or may not be controlled by humans or objects of the real world.

MPAI Metaverse Model (MPAI-MMM) is the MPAI project developing technical documents, so far Technical Reports, that can be applied to as many kinds of metaverse instances as possible and enable varied metaverse implementations to interoperate.

The first document, Technical Report – MPAI Metaverse Model – Functionalities, collects the functionalities that potential metaverse users expect a metaverse instance to provide, rather than trying to define what the metaverse is. It includes definitions, assumptions guiding the project, potential sources of functionalities, an organised list of commented functionalities, and an analysis of some of the main technology areas underpinning the development of the metaverse.

MPAI-MMM builds on the notion of Profiles and Levels, which digital media standardisation has successfully employed for three decades to cope with the wide variety of expected application domains. As some metaverse technologies are not yet available, the second document, Technical Report – MPAI Metaverse Model – Functionality Profiles, develops Functionality Profiles, a new notion in standardisation that defines profiles by what they do (“functionalities”) rather than by how they do it (“technologies”).

The second document reaches another important milestone by:

- Extending the existing collection of definitions.

- Developing a functional metaverse operation model based on Sources requesting Destinations to perform Actions on Items both containing Data Types.

- Specifying the Actions that Sources request Destinations to perform on Items and the responses of Destinations.

- Specifying the Metadata of the Items but not their Data Formats, in line with the Functionality approach.

- Developing nine Use Cases to test the suitability of Actions and Items.

- Developing four Functionality Profiles.

- MPAI-MMM Functional Operation Model

Because the document defines many terms, this overview relies on the common meaning of the words. Defined terms start with a capital letter; when in doubt about the meaning of one, please use the search window.

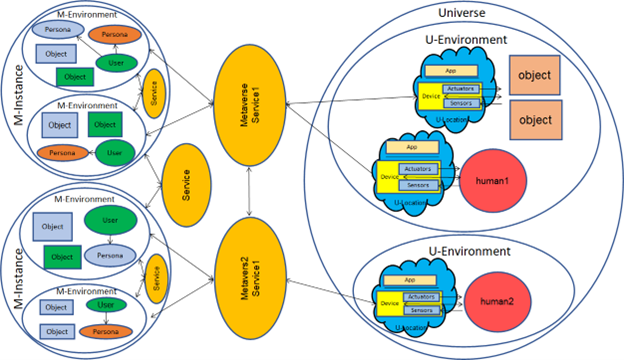

Figure 1 shows a simple example of the connection between the real world (right-hand side, called Universe) and the representations of U-Environments in M-Instances (left-hand side). Green indicates that a User or Object represents a real-world human or object. Users are visualised as Personae: light blue indicates that a Persona or Object is driven by an autonomous agent, and brick red that the Persona moves according to its real twin’s movements.

Figure 1 – An example of Metaverse Scenario

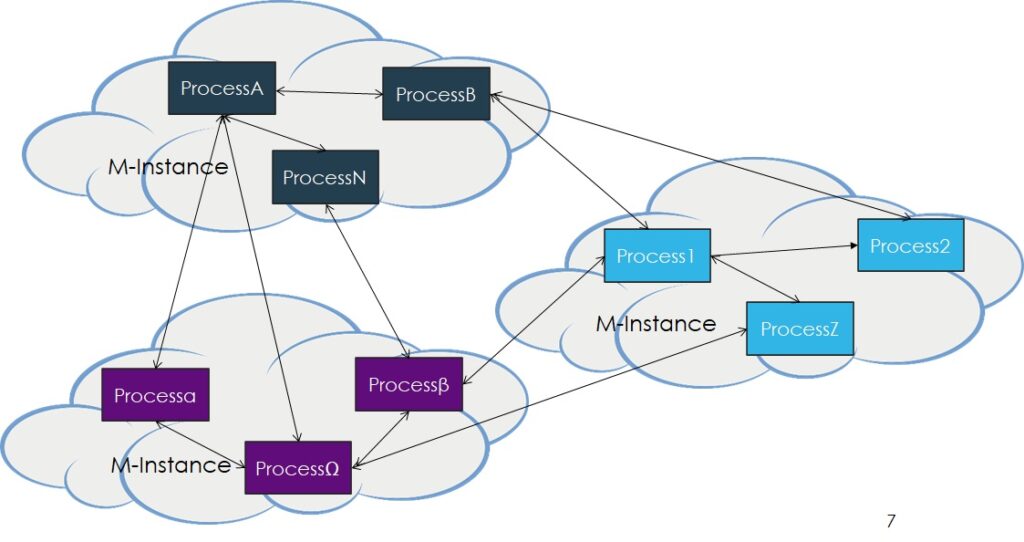

An M-Instance is populated by Processes, e.g., a real or virtual Persona is driven by a Process. A Process may request another Process to perform an Action by sending it a Request-Action and receiving a Response-Action. The Request-Action is an Item, i.e., Data and Metadata, possibly with Rights. The Item contains the Time the request was issued, the Source Process, the Destination Process, the Action requested, the InItems provided as input and their InLocations, the OutLocations of the output Items, and the requested OutRights to Act on the produced Items.

Figure 2 – Processes interacting within and without M-Instances
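The Request-Action fields listed in the paragraph above can be pictured as a small data structure. The following Python sketch is illustrative only: the class names, field names, string identifiers, and the `handle` function are assumptions made for this example and are not taken from the MPAI-MMM documents, which specify Metadata rather than Data Formats.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RequestAction:
    """Hypothetical container mirroring the fields the text lists."""
    time: datetime       # Time the request was issued
    source: str          # Source Process (hypothetical string ID)
    destination: str     # Destination Process (hypothetical string ID)
    action: str          # Action requested, e.g. "MM-Embed"
    in_items: list[str] = field(default_factory=list)
    in_locations: list[str] = field(default_factory=list)
    out_locations: list[str] = field(default_factory=list)
    out_rights: list[str] = field(default_factory=list)


@dataclass
class ResponseAction:
    """Hypothetical reply returned by the Destination Process."""
    request: RequestAction
    success: bool
    out_items: list[str] = field(default_factory=list)


def handle(request: RequestAction) -> ResponseAction:
    """Toy Destination Process: accepts every request and returns one
    placeholder Item name per requested OutLocation."""
    produced = [f"{request.action}@{loc}" for loc in request.out_locations]
    return ResponseAction(request=request, success=True, out_items=produced)


request = RequestAction(
    time=datetime.now(timezone.utc),
    source="user-1",
    destination="service-1",
    action="MM-Embed",
    in_items=["persona-1"],
    in_locations=["home"],
    out_locations=["classroom"],
    out_rights=["UM-Animate"],
)
response = handle(request)
print(response.success, response.out_items)  # True ['MM-Embed@classroom']
```

A real Destination would check the Source's Rights before acting; the toy handler skips that step to keep the request and response shapes in focus.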

So far, the following elements have been identified and specified:

- 4 Processes: App, Device, Service, and User.

- 27 Actions, such as Authenticate an Entity (an Item that can be perceived), Discover (request a Service to find Items responding to certain criteria), MM-Embed (place and make perceptible an Entity at an M-Location), UM-Animate (animate an Entity with data from the real world), etc.

- 33 Items such as Account, Asset (an Item that can be Transacted), Map (a list of connections between U-Locations and M-Locations), Model (a representation of an object ready to be UM-Animated by a Stream or to be MM-Animated by an autonomous agent), Rights (a description of what Actions can be done on an Item), etc.

- 13 Data Types, such as Currency, Emotion, Spatial Attitude, Time, etc.
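To make a few of these elements concrete, here is a minimal Python sketch of the four Processes, the four Actions named above, and two of the Items (Rights and Map). It is an assumption-laden illustration: the class layout, the representation of Rights as a set of Actions, the representation of a Map as a list of pairs, and all location names are hypothetical, not the representation defined by MPAI.

```python
from dataclasses import dataclass, field
from enum import Enum


class ProcessKind(Enum):
    APP = "App"
    DEVICE = "Device"
    SERVICE = "Service"
    USER = "User"


class Action(Enum):
    # Only the four Actions named in the text; the full list has 27.
    AUTHENTICATE = "Authenticate"
    DISCOVER = "Discover"
    MM_EMBED = "MM-Embed"
    UM_ANIMATE = "UM-Animate"


@dataclass
class Rights:
    """What Actions can be done on an Item (simplified here to a set)."""
    allowed: set[Action] = field(default_factory=set)

    def permits(self, action: Action) -> bool:
        return action in self.allowed


@dataclass
class Map:
    """A list of connections between U-Locations and M-Locations."""
    connections: list[tuple[str, str]] = field(default_factory=list)

    def m_locations_for(self, u_location: str) -> list[str]:
        # Return every M-Location connected to the given U-Location.
        return [m for u, m in self.connections if u == u_location]


# Hypothetical example data.
model_rights = Rights(allowed={Action.MM_EMBED, Action.UM_ANIMATE})
campus_map = Map(connections=[
    ("lecture-hall", "virtual-classroom"),
    ("lecture-hall", "virtual-lobby"),
    ("office", "virtual-office"),
])

print(model_rights.permits(Action.UM_ANIMATE))      # True
print(model_rights.permits(Action.DISCOVER))        # False
print(campus_map.m_locations_for("lecture-hall"))   # ['virtual-classroom', 'virtual-lobby']
```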

- Use Cases

Nine use cases have been developed. Here is a simple use case showing the descriptive capabilities of the MPAI-MMM scene description language.

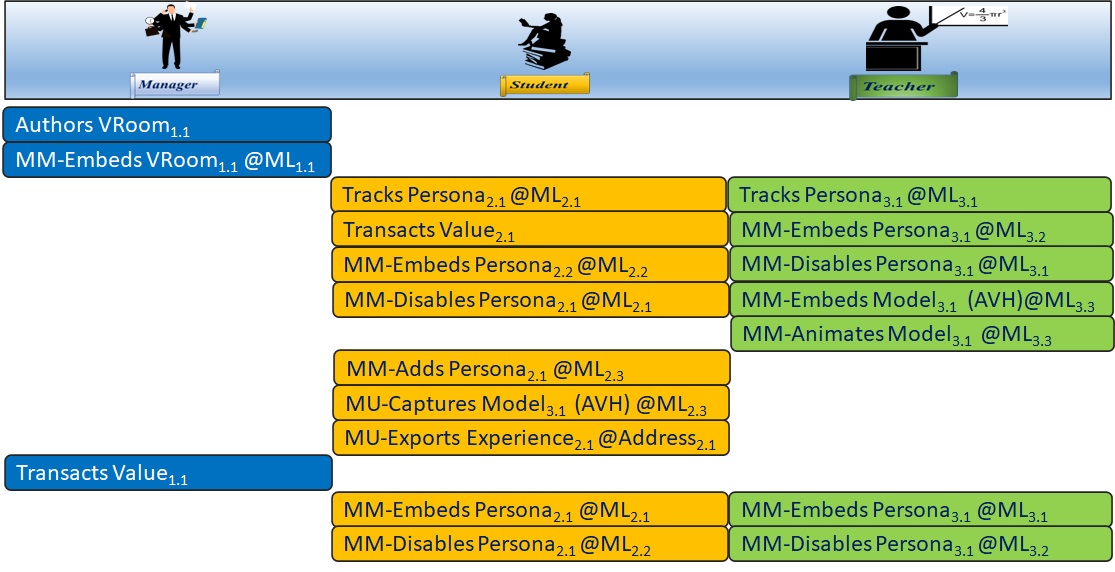

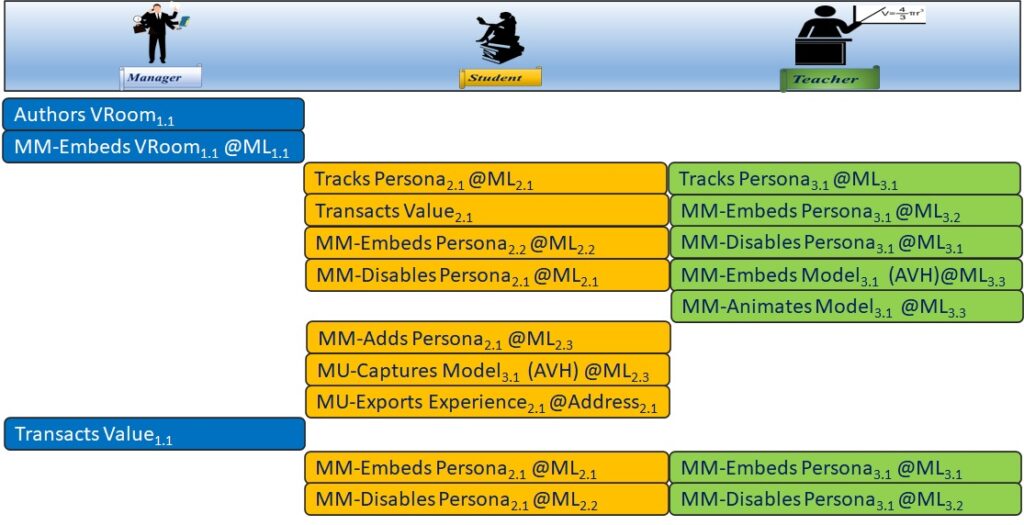

Figure 3 – The Virtual lecture use case

Here is a description of the workflow.

- The meeting manager authors and embeds a virtual classroom.

- The student:

  - Connects its place in the M-Instance (“home”) with the place where the human is.

  - Pays for the right to attend the lecture and save the Experience of the lecture.

  - Places its Persona in the virtual classroom and stops the rendering of the Persona at home.

- The teacher:

  - Does likewise (but does not pay).

  - Places a 3D Model used in the lecture and animates it.

- The student:

  - Moves close to the teacher’s desk, without changing the display of its Persona, to feel the audio, visual, and haptic components of the 3D Model.

  - Saves the lecture as they experienced it.

- The meeting manager pays lecture fees to the teacher.

- Both student and teacher go back home.
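The workflow can also be written down as an ordered list of steps, which makes it easy to check who does what. The sketch below is a plain Python restatement of the bullets above; the `Step` type and the verbs are informal labels chosen for this example and are not MPAI-MMM Action names.

```python
from typing import NamedTuple


class Step(NamedTuple):
    actor: str
    verb: str     # informal verb from the workflow text, not an MPAI Action
    target: str


LECTURE_WORKFLOW = [
    Step("meeting manager", "author and embed", "virtual classroom"),
    Step("student", "connect", "home with the human's place"),
    Step("student", "pay", "right to attend and save the Experience"),
    Step("student", "place", "Persona in the virtual classroom"),
    Step("student", "stop rendering", "Persona at home"),
    Step("teacher", "connect", "home with the human's place"),
    Step("teacher", "place", "Persona in the virtual classroom"),
    Step("teacher", "stop rendering", "Persona at home"),
    Step("teacher", "place", "3D Model used in the lecture"),
    Step("teacher", "animate", "3D Model used in the lecture"),
    Step("student", "move", "close to the teacher's desk"),
    Step("student", "save", "lecture as experienced"),
    Step("meeting manager", "pay", "lecture fees to the teacher"),
    Step("student", "go", "back home"),
    Step("teacher", "go", "back home"),
]


def steps_for(actor: str) -> list[Step]:
    """Return the steps performed by one actor, in workflow order."""
    return [step for step in LECTURE_WORKFLOW if step.actor == actor]


payers = [step.actor for step in LECTURE_WORKFLOW if step.verb == "pay"]
print(payers)                      # ['student', 'meeting manager']
print(len(steps_for("teacher")))   # 6
```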

The other use cases are: Virtual Meeting, Hybrid Working, eSports Tournament, Virtual Performance, AR Tourist Guide, Virtual Dance, Virtual Car Showroom, and Drive a Connected Autonomous Vehicle.

- Functionality Profiles

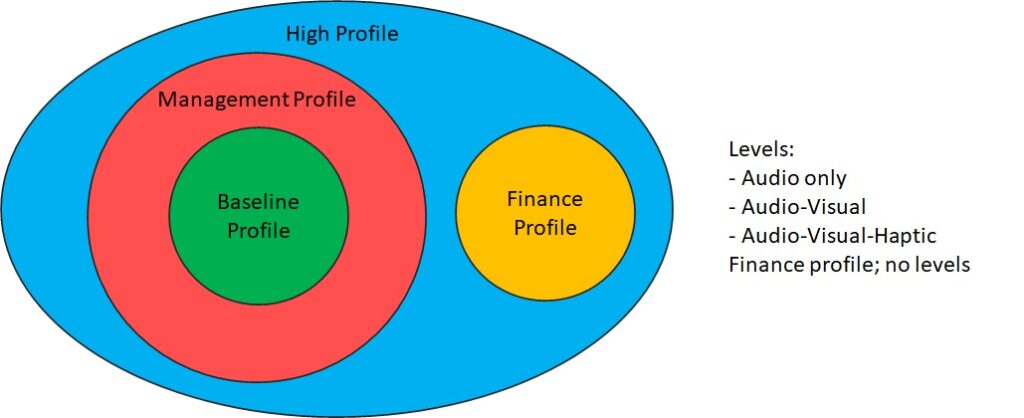

The structure of the Metaverse Functionality Profiles is derived from the Use Cases and includes hierarchical Profiles and independent Profiles. Profiles may have Levels. As depicted in Figure 4, the currently identified Profiles are Baseline, Management, Finance, and High. The currently identified Levels for the Baseline, Management, and High Profiles are Audio only, Audio-Visual, and Audio-Visual-Haptic. The Finance Profile does not have Levels.

Figure 4 – The currently identified Functionality Profiles
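The relationship between Profiles and Levels can be sketched as a small validity check. This Python example encodes only what the text states (four Profiles, three Levels, no Levels for Finance). Two things are assumptions of the sketch: the function names, and the idea that Levels are cumulative, so that a higher Level covers a lower one. The hierarchy among Profiles is not modelled because the text does not spell it out.

```python
from enum import Enum, IntEnum
from typing import Optional


class Profile(Enum):
    BASELINE = "Baseline"
    MANAGEMENT = "Management"
    FINANCE = "Finance"
    HIGH = "High"


class Level(IntEnum):
    # Ordering is an assumption: each Level is taken to include the previous one.
    AUDIO_ONLY = 1
    AUDIO_VISUAL = 2
    AUDIO_VISUAL_HAPTIC = 3


# Per the text, the Finance Profile does not have Levels.
PROFILES_WITH_LEVELS = {Profile.BASELINE, Profile.MANAGEMENT, Profile.HIGH}


def is_valid(profile: Profile, level: Optional[Level]) -> bool:
    """A Profile with Levels needs one; a Profile without Levels must have none."""
    if profile in PROFILES_WITH_LEVELS:
        return level is not None
    return level is None


def level_satisfies(offered: Level, required: Level) -> bool:
    """Under the cumulative assumption, a higher Level covers a lower one."""
    return offered >= required


print(is_valid(Profile.BASELINE, Level.AUDIO_VISUAL))                       # True
print(is_valid(Profile.FINANCE, Level.AUDIO_ONLY))                          # False
print(level_satisfies(Level.AUDIO_VISUAL_HAPTIC, Level.AUDIO_VISUAL))       # True
```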

- What is next

MPAI has now laid down the basic elements and can start developing the Technical Specification – Metaverse Architecture. This will specify the main components of an M-Instance, their interconnections, and the types of data exchanged. It will also contain the APIs called by the Processes to enable implementation of M-Instances.