XR Venues is an MPAI project addressing contexts enabled by Extended Reality (XR) – any combination of Augmented Reality (AR), Virtual Reality (VR) and Mixed Reality (MR) technologies – and enhanced by Artificial Intelligence (AI) technologies. The word “Venue” is used as a synonym for Real and Virtual Environments.

MPAI believes that the Live Theatrical Stage Performance use case fits well with a current trend: theatrical stage performances such as Broadway shows, musicals, dramas, operas, and other performing arts increasingly use video scrims, backdrops, and projection mapping to create digital sets instead of constructing physical ones. Digital sets allow animated backdrops and reduce the cost of mounting shows.

The use of immersion domes – especially LED volumes – promises to surround audiences with virtual environments that the live performers can inhabit and interact with. In addition, Live Theatrical Stage Performance can extend into the metaverse as a digital twin. Elements of the Virtual Environment experience can be projected in the Real Environment and elements of the Real Environment experience can be rendered in the Virtual Environment (metaverse).

The purpose of the planned MPAI-XRV – Live Theatrical Stage Performance Technical Specification is to specify AI Modules that perform functions facilitating live multisensory immersive stage performances, which ordinarily require extensive on-site show control staff to operate. Using AI Modules organised in AI Workflows, as enabled by the MPAI-XRV – LTSP Technical Specification, will allow more direct and precise, yet spontaneous, implementation and control of the show, realising the show director’s vision. It will also free staff from repetitive technical tasks, allowing them to amplify their artistic and creative skills.

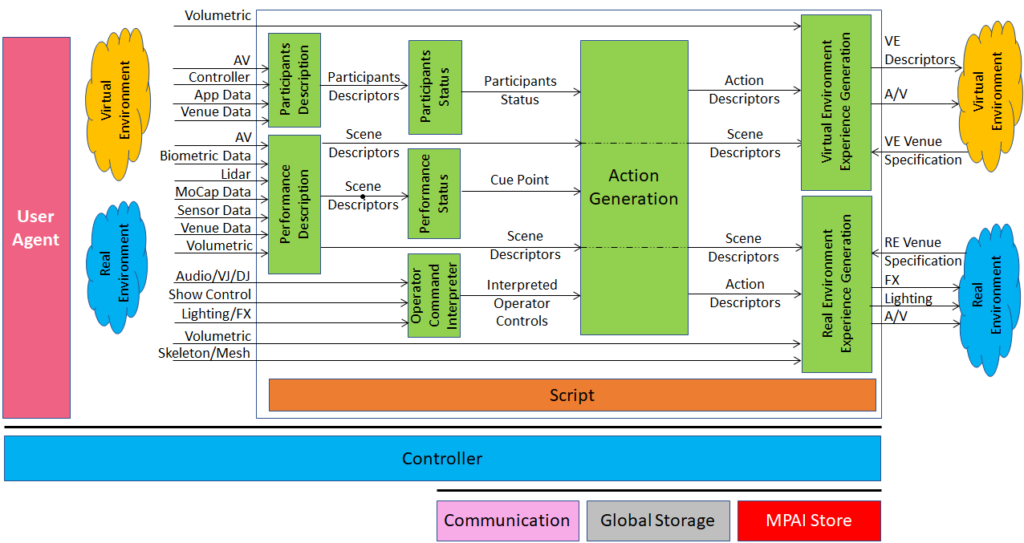

Figure 1 provides the Reference Model of the Live Theatrical Stage Performance Use Case incorporating AI Modules (AIMs). In this diagram, data extracted from the Real and Virtual Environments (on the left) are processed and injected into the same Real and Virtual Environments (on the right).

Data are collected from both the Real and Virtual Environments, including audio, video, volumetric or motion capture (mocap) data from stage performers, signals from control surfaces, and more. One or more AIMs extract features from participants and performers and output them as Participant and Scene Descriptors. The Performance and Participant Status AIMs then interpret these Descriptors to determine the Cue Point in the show (according to the Script) and the Participant Status (in general, an assessment of the audience’s reactions).

Figure 1 – Live theatrical stage performance architecture (AI Modules shown in green)
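The Descriptor interpretation step (Scene and Participant Descriptors in, Cue Point and Participant Status out) can be illustrated with a minimal Python sketch. It assumes that a Scene Descriptor carries a line recognised from the performers’ audio, that the Script is an ordered list of cues each reached when a given line is spoken, and that each Participant Descriptor carries an excitement score normalised to [0, 1]. All class and function names, the exact-match rule and the mood thresholds are hypothetical illustrations, not part of the MPAI-XRV specification.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean


@dataclass
class SceneDescriptor:
    # Hypothetical: what a feature-extraction AIM reports about the performers
    timestamp: float
    recognised_line: str | None = None  # line recognised from performer audio, if any


@dataclass
class ParticipantDescriptor:
    # Hypothetical: one participant's reaction, normalised to [0, 1]
    participant_id: str
    excitement: float


@dataclass
class Cue:
    cue_id: str
    trigger_line: str  # hypothetical: the Script line that reaches this cue


def normalise(text: str) -> str:
    # Lower-case and collapse whitespace so minor recognition noise still matches
    return " ".join(text.lower().split())


def find_cue_point(script: list[Cue], scene: SceneDescriptor, current: int) -> int:
    """Return the index of the Cue Point reached; -1 means no cue yet.

    Only cues after the current one are searched, so the show never jumps back.
    """
    if scene.recognised_line is None:
        return current
    heard = normalise(scene.recognised_line)
    for i in range(current + 1, len(script)):
        if normalise(script[i].trigger_line) == heard:
            return i
    return current


def participant_status(descriptors: list[ParticipantDescriptor]) -> dict[str, object]:
    """Aggregate individual reactions into a coarse audience-level status."""
    if not descriptors:
        return {"mean_excitement": 0.0, "mood": "unknown"}
    avg = mean(d.excitement for d in descriptors)
    if avg >= 0.6:  # hypothetical thresholds
        mood = "engaged"
    elif avg >= 0.3:
        mood = "neutral"
    else:
        mood = "disengaged"
    return {"mean_excitement": avg, "mood": mood}


if __name__ == "__main__":
    script = [Cue("C1", "Lights, please"), Cue("C2", "The rest is silence")]
    cue = find_cue_point(script, SceneDescriptor(12.0, "lights,  please"), -1)
    print(script[cue].cue_id)  # C1
```

A production system would use fuzzy or model-based matching rather than exact text comparison; the forward-only search is one simple way to keep the Cue Point consistent with the Script order.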

Likewise, if needed, the Operator Command Interpreter AIM interprets data from the Show Control computer or control surface and from the audio, DJ, VJ, lighting and FX consoles, which are typically commanded by operators. The Action Generation AIM accepts the Participant Status, Cue Point and Interpreted Operator Commands and uses them to direct action in both the Real and Virtual Environments via Scene and Action Descriptors. These general Descriptors are converted into the actionable commands required by each Real and Virtual Environment, according to its Venue Specification, to enable multisensory Experience Generation in both. In this manner, the desired experience can be automatically adapted to a variety of real and virtual venue configurations.
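The Action Generation and venue-conversion steps can likewise be sketched in Python. The sketch assumes venue-independent Action Descriptors whose value is normalised to [0, 1], a hypothetical policy in which an engaged audience boosts effects by 10%, Interpreted Operator Commands that override automatic values, and a Venue Specification modelled as a mapping from (target, parameter) to a device address and raw value range. All names, addresses and policies are illustrative assumptions, not part of MPAI-XRV.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ActionDescriptor:
    # Venue-independent action; value is normalised to [0, 1]
    target: str      # e.g. "stage_wash" (hypothetical)
    parameter: str   # e.g. "intensity" (hypothetical)
    value: float


@dataclass(frozen=True)
class DeviceMapping:
    # Hypothetical entry of a Venue Specification
    address: str
    min_value: int
    max_value: int


def generate_actions(
    cue_actions: list[ActionDescriptor],
    mood: str,
    operator_commands: dict[tuple[str, str], float],
) -> list[ActionDescriptor]:
    """Combine the cue's default actions, Participant Status and operator input."""
    actions = []
    for a in cue_actions:
        value = a.value
        if mood == "engaged":
            value = min(1.0, value * 1.1)  # hypothetical policy: boost by 10%
        # Interpreted Operator Commands have the final say
        value = operator_commands.get((a.target, a.parameter), value)
        actions.append(ActionDescriptor(a.target, a.parameter, value))
    return actions


def to_venue_commands(
    actions: list[ActionDescriptor],
    venue: dict[tuple[str, str], DeviceMapping],
) -> list[tuple[str, int]]:
    """Translate venue-independent actions into (address, raw value) commands."""
    commands = []
    for a in actions:
        mapping = venue.get((a.target, a.parameter))
        if mapping is None:
            continue  # this venue cannot render the action, so skip it
        clamped = max(0.0, min(1.0, a.value))
        span = mapping.max_value - mapping.min_value
        commands.append((mapping.address, round(mapping.min_value + clamped * span)))
    return commands


if __name__ == "__main__":
    cue = [ActionDescriptor("stage_wash", "intensity", 0.4),
           ActionDescriptor("fog", "level", 0.5)]
    actions = generate_actions(cue, "neutral", {("fog", "level"): 0.2})
    real_venue = {("stage_wash", "intensity"): DeviceMapping("console/ch12", 0, 255)}
    virtual_venue = {
        ("stage_wash", "intensity"): DeviceMapping("scene/wash", 0, 100),
        ("fog", "level"): DeviceMapping("scene/fog", 0, 100),
    }
    print(to_venue_commands(actions, real_venue))     # [('console/ch12', 102)]
    print(to_venue_commands(actions, virtual_venue))  # [('scene/wash', 40), ('scene/fog', 20)]
```

Because the Action Descriptors carry no venue details, the same list can be passed to both a real and a virtual Venue Specification, which mirrors the adaptation to different venue configurations described above. In this sketch, actions that a venue cannot render (here, fog in the real venue) are simply skipped.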

MPAI is seeking proposals for technologies that enable the implementation of standard components (AI Modules) to realise the vision described above. Those intending to submit a response should familiarise themselves with the following published documents:

| Document | Formats |
|---|---|
| Call for Technologies | html, pdf |
| Use Cases and Functional Requirements | html, pdf |
| Framework Licence | html, pdf |
| Template for responses | html, docx |

An online presentation of the Call for Technologies, the Use Cases and Functional Requirements, and the Framework Licence will be held on September 12 at 07:00 and 17:00 UTC. Register here for the 07:00 UTC presentation and here for the 17:00 UTC presentation.