A new brick for the MPAI architecture

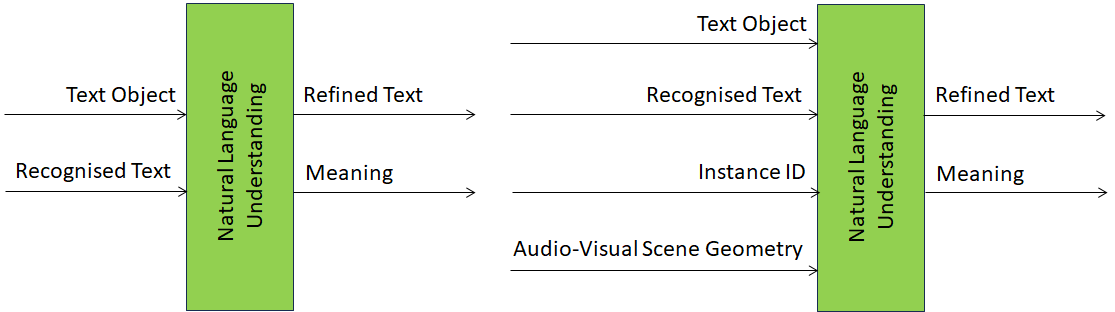

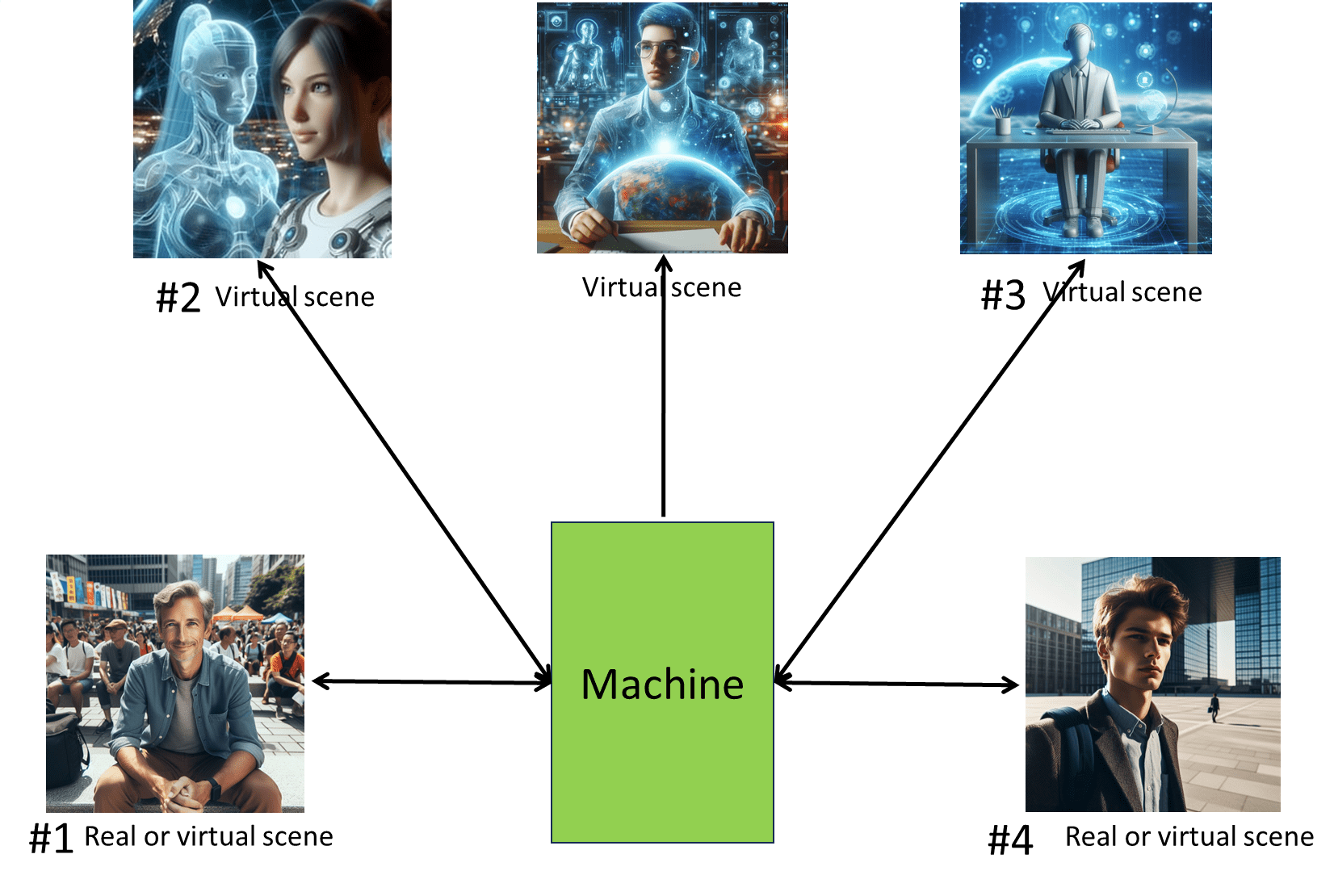

At its 43rd General Assembly on 17 April 2024, MPAI approved the publication of the draft AI Module Profiles (MPAI-PRF) standard with a request for Community Comments. The scope of MPAI-PRF is to provide a means of identifying AI Modules (AIMs) that have the same functionality but different features. First, a few words about AIMs. MPAI develops application-oriented standards for applications that it calls AI Workflows (AIW), which can be broken down into components called AI Modules. AIWs are specified by what they…