An introduction to the Neural Network Watermarking Call for Technologies

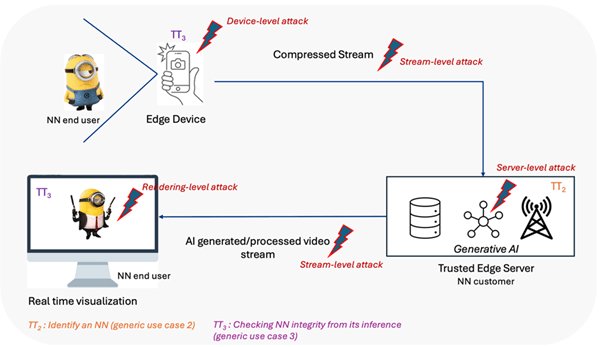

Introduction

During the last decade, Neural Networks have been deployed in an increasing variety of domains, and producing them has become costly in terms of both resources (GPUs, CPUs, memory) and time. Moreover, users of Neural Network-based services increasingly demand a certified quality of service. NN Traceability offers solutions that satisfy both needs by ensuring that a deployed Neural Network is traceable and that any tampering with it is detected. Inherited from the multimedia realm, watermarking assembles a…