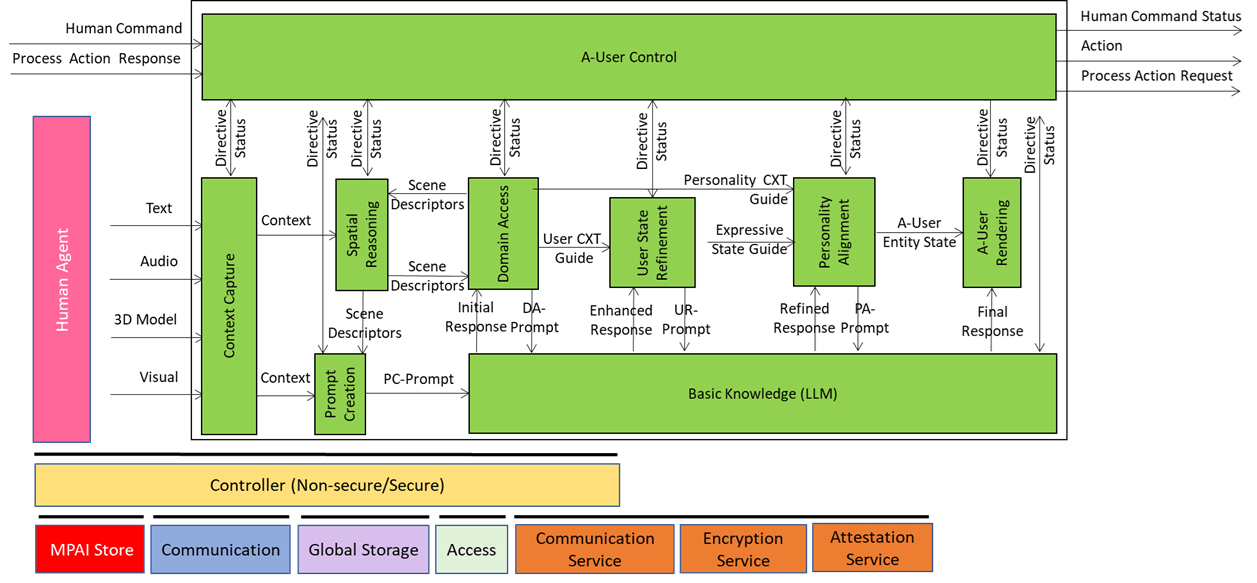

A Walk inside the Autonomous User

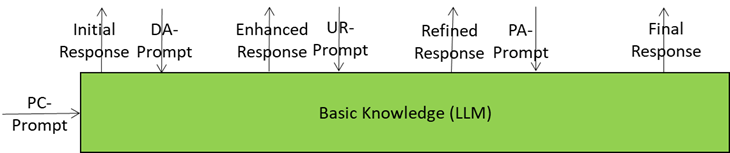

Table of Contents

- A standard for the Autonomous User Architecture
- A-User Control: The Autonomous Agent’s Brain
- Context Capture: The A-User’s First Glimpse of the World
- Audio Spatial Reasoning: The Sound-Aware Interpreter
- Visual Spatial Reasoning: The Vision-Aware Interpreter
- Prompt Creation: Where Words Meet Context
- Domain Access: The Specialist Brain Plug-in for the Autonomous User
- Basic Knowledge: The Generalist Engine Getting Sharper with Every Prompt
- User State Refinement: Turning a Snapshot into a Full Profile
- Personality Alignment: The Style Engine of A-User…