One of the new Technical Specifications published by MPAI is Version 1.1 of Human and Machine Communication (MPAI-HMC).

The title is deliberately broad: the scope is not intended to be narrow. Indeed, Human and Machine Communication is a vast area where new technologies are constantly introduced, more so today than before thanks to the rapid progress of Artificial Intelligence. It is also a vast business area where new products, services, and applications are launched every day, but many of them are conceived and deployed to operate in isolation.

So, what is MPAI trying to achieve with MPAI-HMC? Part of the answer is contained in the letter “C” of the HMC acronym. If two entities communicate, they must share a common basis, and standards are a great way to provide it. Another part of the answer is less “visible”, but no less important. Enabling a machine to “understand” a textual or vocal message requires sophisticated AI technologies. Teaching a machine to provide sensible responses to what it has “understood” is an additional challenge. Standards can facilitate this too.

Satisfactory communication between humans involves more than a literal understanding of the message. Many other elements come into play: the context in which the communication takes place, the parts of the message conveyed by the sender’s face and body, the unintentional or deliberate mapping of a communicating party’s internal state or cultural environment into the message, and more.

Is it possible to fully realise such a communication system? Some initial elements exist, but a full-fledged system will take more time to materialise. MPAI believes that successfully addressing such a complex system requires breaking it into smaller pieces, developing solutions for the individual pieces, and finally integrating the developed pieces.

MPAI-HMC aims to achieve these goals by breaking down monolithic systems into smaller components with known functions and interfaces. This enables independent components to be developed with well-studied technologies by people focusing on specific parts of the system. The components of the system communicate through data types that have human-understandable semantics.

Currently, MPAI-HMC specifies the “AI Workflow” (AIW) called Communicating Entities in Context (HMC-CEC). Like other MPAI AIWs, HMC-CEC is composed of interconnected “AI Modules” (AIMs) exchanging data of specific Data Types. AIMs are the components that can be integrated into a complete system implementing the AIW.

The Communicating Entities in Context AI Workflow enables Machines to communicate with Entities in different Contexts where:

- Machine is software embedded in a device that implements the HMC-CEC specification.

- Entity refers to one of:

  - A human in a real audio-visual scene.

  - A human in a real scene represented as a Digitised Human in an Audio-Visual Scene.

  - A Machine represented as a Virtual Human in an Audio-Visual Scene.

  - A Digital Human in an Audio-Visual Scene rendered as a real audio-visual scene.

- Context is information describing attributes of an Entity, such as language, culture, etc.

- Digital Human is either a Digitised Human, i.e., the digital representation of a human, or a Virtual Human, i.e., a Machine. Digital Humans can be rendered for human perception as humanoids.

- A word beginning with a lowercase letter represents an object in the real world; a word beginning with a capital letter represents an Object in the Virtual World.

Entities communicate in one of the following ways when communicating with:

- humans:

  - Use the Entities’ body, speech, context, and the audio-visual scene that the Entities are immersed in.

  - Use HMC-CEC-enabled Machines emitting Communication Items.

- Machines:

  - Render Entities as speaking humanoids in audio-visual scenes, as appropriate.

  - Emit Communication Items.

Communication Items are implementations of the MPAI-standardised Portable Avatar, a data type providing information on an Avatar and its context and enabling a receiver to render an Avatar as intended by the sender. The standard specifying this is not unexpectedly called Portable Avatar Format (MPAI-PAF). Figure 1 depicts a Portable Avatar.


Figure 1 – Reference Model of the Portable Avatar

The data that may be included in a Portable Avatar is:

- The ID of the Avatar.

- The Space and Time information related to the Avatar.

- The Language of the Avatar.

- A segment of Speech in that Language.

- A Text.

- A Speech Model to synthesise Text into Speech.

- The internal status of the Avatar that guides the synthesis of the Text.

- Space and Time information for an Audio-Visual Scene where the Avatar can be embedded.

- The Descriptors of that Audio-Visual Scene.
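To make the list concrete, the Python sketch below models a Portable Avatar as a record in which every field is optional, reflecting that these data *may* be included. It is only an illustration under stated assumptions: the class names, field names, and types are hypothetical placeholders, and the normative format is the one defined by MPAI-PAF, which this sketch does not reproduce.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SpaceTime:
    """Hypothetical Space and Time information: a position and a timestamp."""
    position: tuple[float, float, float] = (0.0, 0.0, 0.0)
    time_s: float = 0.0


@dataclass
class PortableAvatar:
    """Illustrative container for the data a Portable Avatar may carry.

    All fields are optional; the types are placeholders, not MPAI-PAF Data Types.
    """
    avatar_id: Optional[str] = None
    avatar_space_time: Optional[SpaceTime] = None
    language: Optional[str] = None
    speech: Optional[bytes] = None           # a segment of Speech in that Language
    text: Optional[str] = None
    speech_model: Optional[bytes] = None     # used to synthesise Text into Speech
    personal_status: Optional[dict] = None   # internal status guiding the synthesis
    scene_space_time: Optional[SpaceTime] = None
    scene_descriptors: Optional[dict] = None  # Descriptors of the Audio-Visual Scene

    def present_fields(self) -> list[str]:
        """Names of the fields actually carried by this instance, in declaration order."""
        return [name for name, value in vars(self).items() if value is not None]


# A minimal text-only Communication Item (hypothetical values).
item = PortableAvatar(avatar_id="avatar-001", language="en", text="Hello!")
print(item.present_fields())  # ['avatar_id', 'language', 'text']
```

Making every field optional lets the same record describe anything from a bare Text message to a fully specified Avatar embedded in a described scene.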

MPAI has standardised the representation of the internal status of an Avatar or of a human as “Personal Status”.

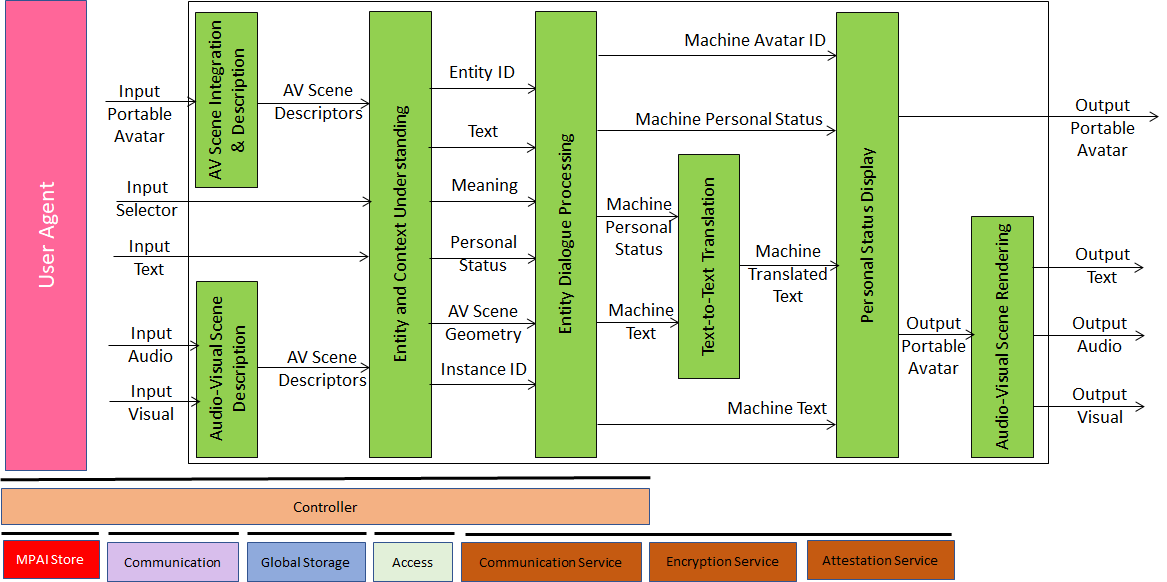

Figure 2 represents the reference model of HMC-CEC.

Figure 2 – Reference Model of Communication Entities in Context (HMC-CEC)

The components of the figure that are not in green belong to the infrastructure that enables dynamic configuration, initialisation, and control of AI Workflows in a standard AI Framework. The operation of HMC-CEC is as follows:

- HMC-CEC may receive a Communication Item, i.e., a Portable Avatar. The AV Scene Integration and Description AIM converts the Portable Avatar into AV Scene Descriptors.

- HMC-CEC may also receive a real scene and can digitally represent it as AV Scene Descriptors.

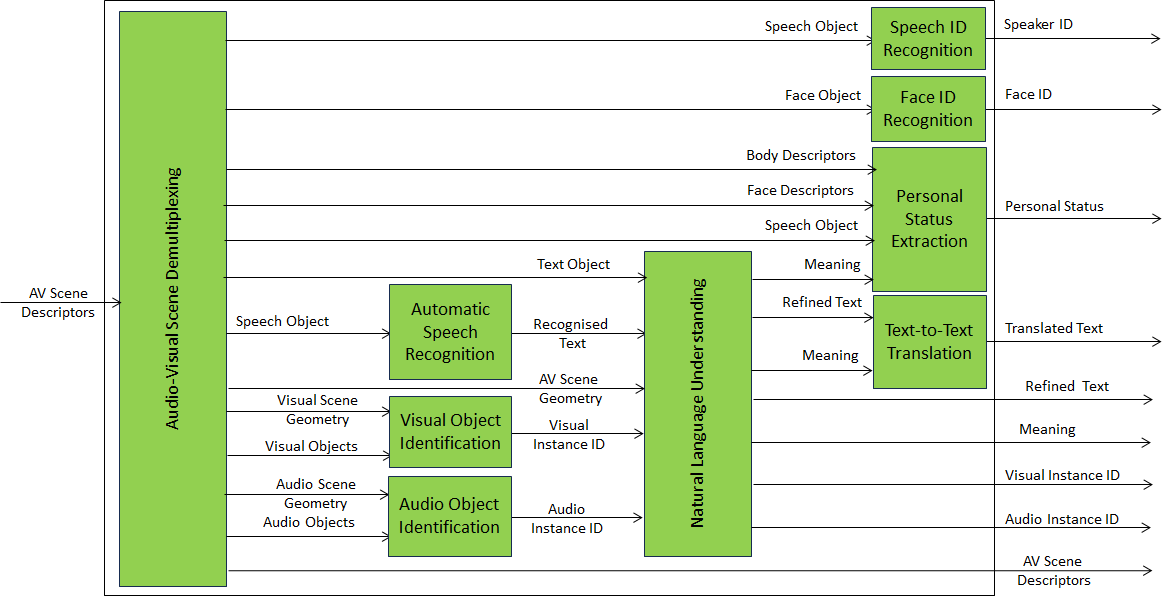

- Therefore, Entity and Context Understanding always deals with AV Scene Descriptors independently of the source – real space or Virtual Space – of the information. The HMC-ECU is a Composite AIM demultiplexing the information streams inside the AV Scene Descriptors. Speech and Face are identified. Audio and Visual Object are identified. Speech is recognised, understood and possibly translated. The Personal Status conveyed by Text, Speech, Face, and Gesture.

Figure 3 – The Reference Model of the Entity Context Understanding (HMC-ECU)

- The Entity Dialogue Processing AIM has the necessary information to generate a (textual) response but can also generate the Personal Status to be embedded in the response.

- The Text-to-Text Translation AIM translates the Machine Text into Machine Translated Text.

- Text, Speech, Face, and Gesture, each possibly conveying the Machine’s Personal Status, are the “Modalities” that the Machine can use in its response. The response is represented as a Portable Avatar that can be transmitted as is to the party the Machine communicates with, or rendered in audible/visible form.
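The data flow described above can be summarised as a chain of functions. The Python sketch below is only a structural outline under stated assumptions: each function is a hypothetical stand-in for an AIM (the names are not MPAI identifiers), and the “processing” is trivial placeholder logic. What it illustrates is that Entity and Context Understanding always receives AV Scene Descriptors, whichever input path was taken.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AVSceneDescriptors:
    """Hypothetical, heavily simplified AV Scene Descriptors."""
    text: Optional[str] = None
    speech: Optional[str] = None  # stands in for an audio segment
    face: Optional[str] = None
    gesture: Optional[str] = None


def describe_portable_avatar(pa: dict) -> AVSceneDescriptors:
    """Stand-in for AV Scene Integration and Description (Portable Avatar input)."""
    return AVSceneDescriptors(text=pa.get("text"), speech=pa.get("speech"))


def describe_real_scene(scene: dict) -> AVSceneDescriptors:
    """Stand-in for the digital representation of a real scene."""
    return AVSceneDescriptors(speech=scene.get("speech"), face=scene.get("face"),
                              gesture=scene.get("gesture"))


def understand(d: AVSceneDescriptors) -> dict:
    """Stand-in for Entity and Context Understanding: demultiplex the streams."""
    meaning = d.text or d.speech or ""  # placeholder "recognition": Text, else Speech
    present = [m for m in ("text", "speech", "face", "gesture") if getattr(d, m)]
    return {"meaning": meaning, "modalities": present}


def dialogue(understood: dict) -> tuple[str, str]:
    """Stand-in for Entity Dialogue Processing: Machine Text plus a placeholder Personal Status."""
    return f"You said: {understood['meaning']}", "neutral"


def translate(text: str, target_language: str) -> str:
    """Stand-in for Text-to-Text Translation (only tags the text, no real translation)."""
    return f"[{target_language}] {text}"


def respond(source: str, payload: dict, target_language: str) -> dict:
    """Run one turn and return the response as a Portable-Avatar-like dict."""
    if source == "portable_avatar":
        descriptors = describe_portable_avatar(payload)
    else:
        descriptors = describe_real_scene(payload)
    machine_text, personal_status = dialogue(understand(descriptors))
    return {"text": translate(machine_text, target_language),
            "personal_status": personal_status}


print(respond("portable_avatar", {"text": "Hello"}, "it"))
# {'text': '[it] You said: Hello', 'personal_status': 'neutral'}
```

Replacing the placeholder bodies with real AIMs would not change the shape of `respond()`, which is the benefit of splitting a system into components with known functions and interfaces.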

This paper started by saying that MPAI-HMC addresses complex systems, and this is certainly true of HMC-CEC today. How, then, can two HMC-CEC machines be made aware of each other’s capabilities?

MPAI-HMC’s answer is based on Profiles. This well-established notion includes Attributes and Sub-Attributes obtained by categorising the HMC-CEC features into groups of capabilities that an HMC-CEC machine might use to process a Communication Item. The six categories of capabilities are:

| Category | Capability |
|---|---|
| Receive | Communication Items from a machine or Audio-Visual Scenes from a real space. |
| Extract | Personal Status from the Modalities (Text, Speech, Face, or Gesture) in the Communication Item received. |
| Understand | The Communication Item from the Modalities and the extracted Personal Status, with or without use of the spatial information embedded in the Communication Item. |
| Translate | Using the set of Modalities available to the machine. |
| Generate | Response. |
| Display | The response using the Modalities available to the HMC-CEC machine. |

Table 1 defines the Attributes and Sub-Attributes of the HMC-CEC Profiles. Each Sub-Attribute is expressed with three characters: in most codes, the first two characters identify the type of object and the third is O, standing for Object. The Sub-Attributes of:

- Audio-Visual Scene are Text (TXO), Speech (SPO), Audio (AUO), Visual (VIO), and Portable Avatar (PAF).

- Personal Status, Understanding, Translation, and Display Response are Text (TXO), Speech (SPO), Face (FCO), and Gesture (GSO).

Table 1 – Attribute and Sub-Attribute Codes of HMC-CEC

| Attribute | Code | Sub-Attribute Codes | | | | |
|---|---|---|---|---|---|---|
| Audio-Visual Scene | AVS | TXO | SPO | AUO | VIO | PAF |
| Personal Status | EPS | TXO | SPO | FCO | GSO | |
| Understanding | UND | TXO | SPO | FCO | GSO | SPC |
| Translation | TRN | TXO | SPO | FCO | GSO | |
| Display Response | RES | TXO | SPO | FCO | GSO | |

The SPC Sub-Attribute of Understanding represents Spatial Information (SPaCe), i.e., the additional capability of an HMC-CEC implementation to use Spatial Information to understand a Communication Item.
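Table 1 lends itself to a simple machine-readable encoding. The Python sketch below assumes, purely for illustration, that a Profile is a set of hypothetical “Attribute.Sub-Attribute” strings (such as `UND.SPC`) and that two HMC-CEC machines learn what they can do together by intersecting their Profiles. Both the string notation and the intersection rule are assumptions of this example, not the MPAI-HMC Profile syntax or procedure; only the codes themselves come from Table 1.

```python
# Attribute and Sub-Attribute codes transcribed from Table 1.
TABLE_1: dict[str, set[str]] = {
    "AVS": {"TXO", "SPO", "AUO", "VIO", "PAF"},  # Audio-Visual Scene
    "EPS": {"TXO", "SPO", "FCO", "GSO"},         # Personal Status
    "UND": {"TXO", "SPO", "FCO", "GSO", "SPC"},  # Understanding
    "TRN": {"TXO", "SPO", "FCO", "GSO"},         # Translation
    "RES": {"TXO", "SPO", "FCO", "GSO"},         # Display Response
}


def make_profile(*codes: str) -> frozenset[str]:
    """Build a Profile from hypothetical 'ATTR.SUB' strings, rejecting pairs not in Table 1."""
    profile = set()
    for code in codes:
        attribute, _, sub_attribute = code.partition(".")
        if sub_attribute not in TABLE_1.get(attribute, set()):
            raise ValueError(f"unknown Attribute/Sub-Attribute pair: {code}")
        profile.add(code)
    return frozenset(profile)


def shared_capabilities(a: frozenset[str], b: frozenset[str]) -> frozenset[str]:
    """Capabilities declared by both machines (assumed intersection rule)."""
    return a & b


# Machine A handles Text and Speech and can use Spatial Information to understand.
machine_a = make_profile("AVS.TXO", "AVS.SPO", "UND.TXO", "UND.SPO", "UND.SPC", "RES.SPO")
# Machine B can also understand Face, but cannot use Spatial Information.
machine_b = make_profile("AVS.TXO", "AVS.SPO", "UND.TXO", "UND.SPO", "UND.FCO", "RES.SPO")

print(sorted(shared_capabilities(machine_a, machine_b)))
# ['AVS.SPO', 'AVS.TXO', 'RES.SPO', 'UND.SPO', 'UND.TXO']
```

Under this assumption, `UND.SPC` drops out of the shared set, so neither machine should rely on Spatial Information when understanding the other’s Communication Items.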

Some 20 years ago, standards were published on paper. Today, standards are typically published as computer files. MPAI standards are also available as PDF files, but they are primarily published online: not only the chapters but also the specifications of individual AIWs, AIMs, and Data Types are published as web pages. Most AIMs and Data Types are reused by other MPAI standards. HMC-CEC is an extreme case of this: it specifies only two AIMs and not a single new Data Type. It reuses Data Types defined by other MPAI standards, yet the resulting architecture is completely different from those of the standards it borrows from.

Reusability of standard technologies is not just a slogan. In MPAI, it is our practice!