A definite answer to such a question is not going to come anytime soon, yet many have attempted to develop algorithms that provide one.

MPAI could not miss the opportunity to give its own answer and has published the MPAI-CUI standard, or Compression and Understanding of Industrial Data. It contains one use case: Company Performance Prediction (CPP).

What does CUI-CPP offer? Imagine that you have a company and would like to know the probability that it defaults in the next, say, 5 years. The future is not written, but it certainly depends on how your company has performed in the last few years and on the solidity of your company’s governance.

Another thing you would like to know is the probability that your company suspends its operations because of an unexpected event such as a cyber attack or an earthquake. Finally, you would probably also want to know how adequate your company’s organisation is (but many don’t want to be told ;-).

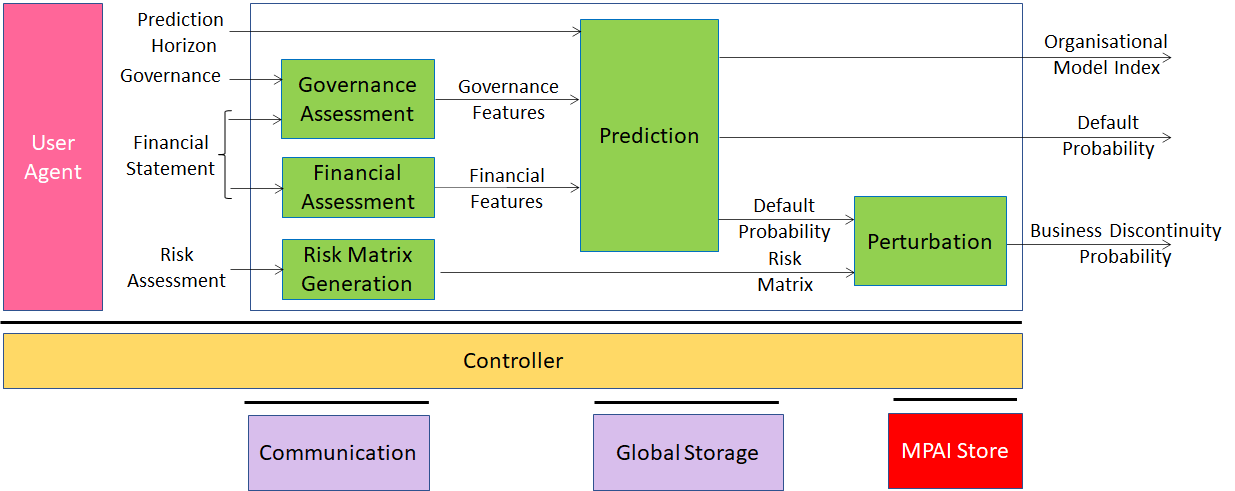

CUI-CPP needs financial statements, governance data, and risk data as input. The first thing the standard does is compute the financial and governance features from the financial statements and governance data. These features are fed to a neural network that has been trained on data from many companies and provides the default probability and the organisational model adequacy index (0=inadequate, 1=adequate).

Then CUI-CPP computes a risk matrix based on cyber and seismic risks and uses this to perturb the default probability and obtain the business discontinuity probability.

The full process is described in Figure 1.

Figure 1 – Reference Model of Company Performance Prediction
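The data flow of the reference model can be sketched in a few lines of Python. This is only an illustration of how the stages connect: the function and field names are hypothetical, and each stage is passed in as a callable because the way features, risk matrix, and perturbation are actually computed is defined by the standard, not by this sketch.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Prediction:
    """Output of the prediction stage (field names are hypothetical)."""
    default_probability: float                  # expected in [0, 1]
    organisational_model_adequacy_index: float  # 0 = inadequate, 1 = adequate


def predict_company_performance(
    financial_statements: dict,
    governance_data: dict,
    risk_data: dict,
    compute_features: Callable[[dict, dict], Sequence[float]],
    prediction_model: Callable[[Sequence[float]], Prediction],
    compute_risk_matrix: Callable[[dict], Sequence[float]],
    perturb: Callable[[float, Sequence[float]], float],
) -> dict:
    """Chain the stages described in the text; every stage is an assumption."""
    # 1. Financial and governance features from statements and governance data.
    features = compute_features(financial_statements, governance_data)
    # 2. The trained model yields default probability and adequacy index.
    prediction = prediction_model(features)
    # 3. Risk matrix from cyber and seismic risk data.
    risk_matrix = compute_risk_matrix(risk_data)
    # 4. The risk matrix perturbs the default probability.
    discontinuity = perturb(prediction.default_probability, risk_matrix)
    return {
        "default_probability": prediction.default_probability,
        "organisational_model_adequacy_index":
            prediction.organisational_model_adequacy_index,
        "business_discontinuity_probability": discontinuity,
    }
```

Passing the stages as parameters mirrors the idea that only the inputs and outputs of each block are fixed, while the internals can be swapped.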

Many think that once the technical specification is developed, the work is over. Well, that would be like saying that, after you have produced a law, society can develop. That is not the case, because society needs tribunals to assess whether a deed performed by an individual conforms with the law.

Therefore, MPAI standards do not just contain the technical specification, i.e., “the minimum you must know to make an implementation of the standard”, but 4 components in total, of which the technical specification is the first.

The second is the reference software specification, a text describing how to use a software implementation that lets you understand “how the standard works”. Reference software is an ingredient of many standards and many require that the reference software be open source.

MPAI is in an unusual position in the sense that it does not specify the internals of the AI Modules (AIM), but only their function, interfaces, and connections with the other AIMs that make up an AI Workflow (AIW) with a specified function. Therefore, MPAI does not oblige the developers of an MPAI standard to provide the source code of an AIM; they may provide a compiled AIM instead (of course, they are welcome to provide the source, all the more so if the AIM has a high performance).

In the case of CUI-CPP, all AIMs are provided as open-source software, including the neural network called Prediction. Of course, you should expect that the reference software “demonstrates” how the standard works, not that it makes very accurate predictions for a particular company.

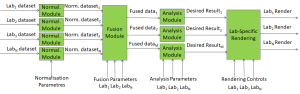

The third component is conformance testing. Many standards do not distinguish between “how to make a thing” (the technical specification, i.e., the law) and “how to test that a thing is correctly implemented” (the conformance testing specification, i.e., the tribunal). MPAI provides a Conformance Testing Specification that specifies the “Means”, i.e.:

- The Conformance Testing Datasets and/or the methods to generate them,

- The Tools, and

- The Procedures

to verify that the AIMs and/or the AIW of a Use Case of a Technical Specification:

- Produce data whose semantics and format conform with the Normative clauses of the selected Use Case of the Technical Specification, and

- Provide a user experience level equal to or greater than the level specified by the Conformance Testing Specification.

In the case of CUI-CPP, MPAI provides test vectors, and the Technical Specification specifies the tolerance of the output vectors produced by an implementation of the AIMs or AIW when fed with the test vectors.
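A tolerance check of this kind could look like the sketch below. It assumes an element-wise absolute tolerance and a hypothetical function name `conforms`; the actual tolerance values and comparison rule are those given in the specification, not the ones shown here.

```python
from typing import Sequence


def conforms(reference: Sequence[float],
             produced: Sequence[float],
             tolerance: float) -> bool:
    """Return True if every produced value is within `tolerance` of the
    corresponding reference value (absolute difference, an assumption)."""
    if len(reference) != len(produced):
        # A vector of the wrong length cannot conform.
        return False
    return all(abs(r - p) <= tolerance for r, p in zip(reference, produced))
```

An implementation would be run on each test vector and its output compared with the expected output in this way.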

The fourth component is the performance assessment to verify that an implementation is not just “technically correct”, but also “reliable, robust, fair and replicable”. Essentially, this is about assessing that an implementation has been correctly trained, i.e., it is not biased.

The performance assessment specification provides the Means, i.e.:

- The methods to generate the Performance Testing Datasets,

- The Tools, and

- The Procedures

to verify that the training of the Prediction AIM (the only one that it makes sense to implement with a neural network) is not biased against some geographic locations and industry types (service, public, commerce, and manufacturing).

The CUI-CPP performance assessment specification assumes that there are two performance assessment datasets:

- Dataset #1 not containing geographic location and industry type information.

- Dataset #2 containing geographic location and industry type information.

The performance of an implementation is assessed by applying the following procedure:

1. For each company, compute:

   a. The Default Probabilities of all records in Dataset #1 and in Dataset #2.

   b. The Organisational Model Index of all records in Dataset #1 and in Dataset #2.

2. Verify that the average of the differences, between Dataset #1 and Dataset #2, of all:

   a. Default Probabilities in 1.a is < 2%.

   b. Organisational Model Indices in 1.b is < 2%.

If both 2.a and 2.b are verified, the implementation passes the performance assessment.
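The procedure above can be sketched as follows. The sketch assumes that the records of the two datasets are paired in the same order, that "difference" means absolute difference, and that 2% corresponds to 0.02 on a 0 to 1 scale; the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence

THRESHOLD = 0.02  # the 2% limit, read here as 0.02 on a 0..1 scale (assumption)


def mean_abs_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """Average absolute difference between paired values of two datasets."""
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("datasets must be non-empty and of equal length")
    return mean(abs(x - y) for x, y in zip(a, b))


def passes_performance_assessment(
    default_prob_1: Sequence[float],   # Default Probabilities on Dataset #1
    default_prob_2: Sequence[float],   # Default Probabilities on Dataset #2
    org_index_1: Sequence[float],      # Organisational Model Indices on Dataset #1
    org_index_2: Sequence[float],      # Organisational Model Indices on Dataset #2
    threshold: float = THRESHOLD,
) -> bool:
    """Both averages must stay below the threshold for the implementation to pass."""
    return (mean_abs_difference(default_prob_1, default_prob_2) < threshold
            and mean_abs_difference(org_index_1, org_index_2) < threshold)
```

The idea is that adding geographic location and industry type information should barely move the outputs of an unbiased implementation.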