For about a year, MPAI has been developing Use Cases and Functional Requirements for the Connected Autonomous Vehicles (MPAI-CAV) project, and the document has now reached a good level of maturity. MPAI is publishing the results achieved so far on this and other websites, on social networks, and in newsletters.

Moving Picture, Audio and Data Coding by Artificial Intelligence (MPAI) is an international, unaffiliated, non-profit organisation whose mission is to develop Artificial Intelligence (AI) enabled data coding specifications with clear Intellectual Property Rights (IPR) licensing frameworks. The scope of data coding includes any instance in which digital data needs to be converted to a different format to suit a specific application. Notable examples are compression and feature extraction.

MPAI-CAV is an MPAI project seeking to standardise the components required to implement a Connected Autonomous Vehicle (CAV). MPAI defines a CAV as a mechanical system capable of executing commands to move itself autonomously (save for the exceptional intervention of a human) based on the analysis of the data produced by a range of sensors exploring the environment and of the information transmitted by other sources in range, e.g., other CAVs and roadside units (RSU).

This is the first of several articles reporting on the progress achieved and the developments planned in the MPAI-CAV project.

The current focus is the development of Use Cases and Functional Requirements (MPAI-CAV UCFR), the first step towards publishing a Call for Technologies. Responses to the Call will then enable MPAI to actually develop the MPAI-CAV standard.

There are three ways for you to get involved in the MPAI-CAV project:

- Monitor the progress of the MPAI-CAV project.

- Participate in MPAI-CAV activities (conference call meetings) as a non-member.

- Join MPAI as a member.

MPAI invites professionals and researchers to contribute to further developing the MPAI-CAV UCFR.

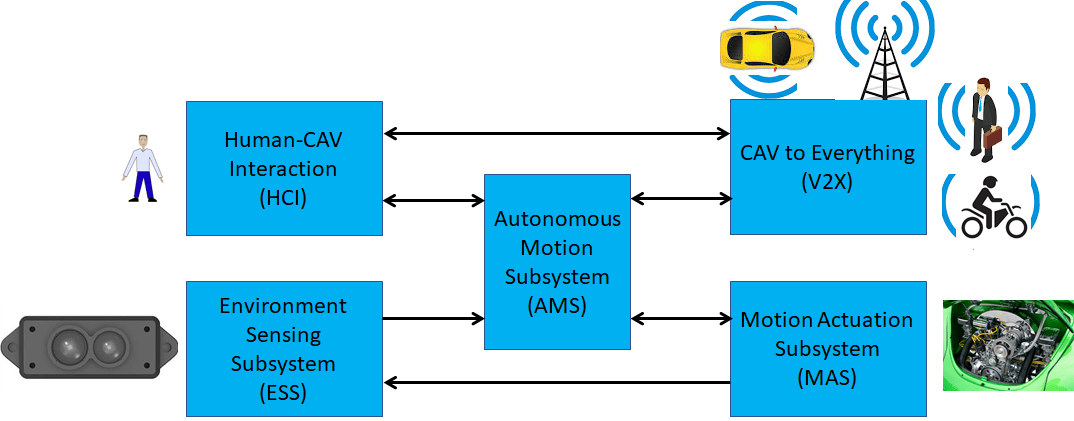

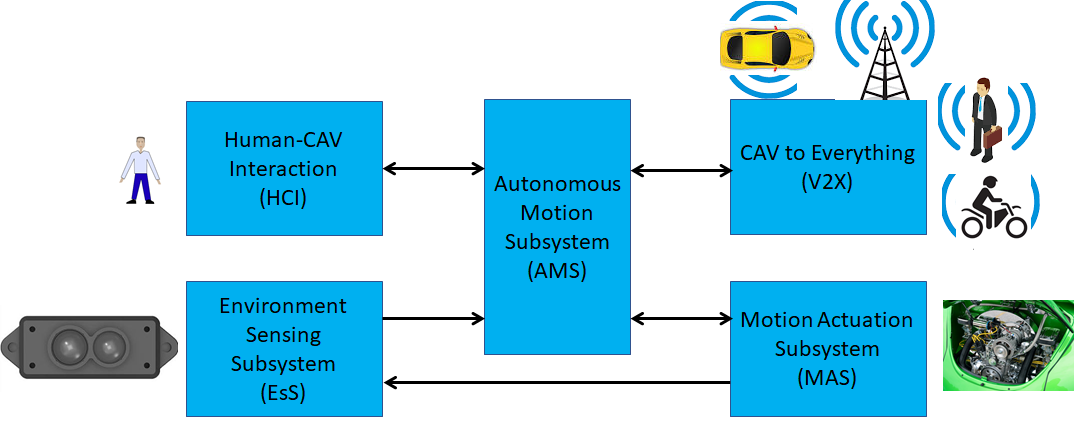

MPAI-CAV has identified five main subsystems of a Connected Autonomous Vehicle, as depicted in Figure 1.

Figure 1 – The 5 MPAI-CAV subsystems

The functions of the individual subsystems can be described as follows:

- Human-CAV interaction (HCI) recognises the human CAV rights holder, responds to humans’ commands and queries, provides an extended representation of the environment (Full World Representation) for humans to use, senses human activities during the journey, and may activate other Subsystems as requested by humans.

- Environment Sensing Subsystem (ESS) acquires information from the environment via a variety of sensors and produces a representation of the environment (Basic World Representation), its best guess given the available sensory data.

- Autonomous Motion Subsystem (AMS) computes the Route to the destination, uses different sources of information (CAV sensors, other CAVs and transmitting units) to produce a Full World Representation, and issues commands that drive the CAV to the intended destination.

- CAV to Everything Subsystem (V2X) sends/receives information to/from external sources, including other CAVs, other CAV-capable vehicles, and Roadside Units (RSU).

- Motion Actuation Subsystem (MAS) provides non-electromagnetic information about the environment, and receives and actuates motion commands in the environment.
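To make the division of labour between the subsystems more concrete, the Python sketch below wires them into one illustrative processing cycle: ESS builds a Basic World Representation, V2X brings in external information, AMS merges both into a Full World Representation and issues commands, MAS actuates them, and HCI reports to the humans on board. It is only a sketch under stated assumptions: every function name, data field and message string is hypothetical and not an interface defined by MPAI-CAV, and the MAS's non-electromagnetic environment information is left out for brevity.

```python
from dataclasses import dataclass
from typing import List, Tuple

# NOTE: all names and fields below are hypothetical illustrations,
# not data formats or interfaces defined by MPAI-CAV.


@dataclass
class BasicWorldRepresentation:
    """ESS output: best guess of the environment from the CAV's own sensors."""
    objects: List[str]


@dataclass
class FullWorldRepresentation:
    """AMS output: Basic World Representation enriched with external information."""
    objects: List[str]


def ess_sense(detections: List[str]) -> BasicWorldRepresentation:
    # Environment Sensing Subsystem: merge duplicate raw detections.
    return BasicWorldRepresentation(objects=sorted(set(detections)))


def v2x_receive(messages: List[str]) -> List[str]:
    # CAV to Everything: information received from other CAVs and Roadside Units.
    return list(messages)


def ams_plan(bwr: BasicWorldRepresentation, external: List[str],
             destination: str) -> Tuple[FullWorldRepresentation, List[str]]:
    # Autonomous Motion Subsystem: merge own and external information,
    # then issue motion commands towards the destination.
    fwr = FullWorldRepresentation(objects=sorted(set(bwr.objects) | set(external)))
    commands = [f"move towards {destination}"]
    return fwr, commands


def mas_actuate(commands: List[str]) -> List[str]:
    # Motion Actuation Subsystem: actuate commands (here only acknowledged).
    return [f"done: {c}" for c in commands]


def hci_report(fwr: FullWorldRepresentation) -> str:
    # Human-CAV interaction: present the Full World Representation to humans.
    return f"{len(fwr.objects)} objects known: {', '.join(fwr.objects)}"


# One illustrative processing cycle.
bwr = ess_sense(["pedestrian", "car", "car"])
external = v2x_receive(["roadworks ahead"])
fwr, commands = ams_plan(bwr, external, "Main Street")
print(mas_actuate(commands))  # ['done: move towards Main Street']
print(hci_report(fwr))        # 3 objects known: car, pedestrian, roadworks ahead
```

Keeping each subsystem behind its own function mirrors the idea of separately specified components: any one of them could be replaced by a different implementation as long as it consumes and produces the same data.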

The next publications will deal with the following topics:

- Why an MPAI-CAV standard?

- Introduction to MPAI-CAV Subsystems

- Human-CAV interaction

- Environment Sensing Subsystem

- CAV to Everything

- Autonomous Motion Subsystem

- Motion Actuation Subsystem

For any communication or intention to join MPAI-CAV activities, or any other MPAI standards development activities, send an email to the Secretariat (secretariat@mpai.community).