Introduction

In the week of the 13th of January, the Free University of Brussels hosted the 129th MPEG meeting. Two days (11-12) were dedicated to some 15 ad hoc group meetings and 6 days (7-12) to meetings of JVET, the joint MPEG-SG 16 group tasked with developing the VVC standard.

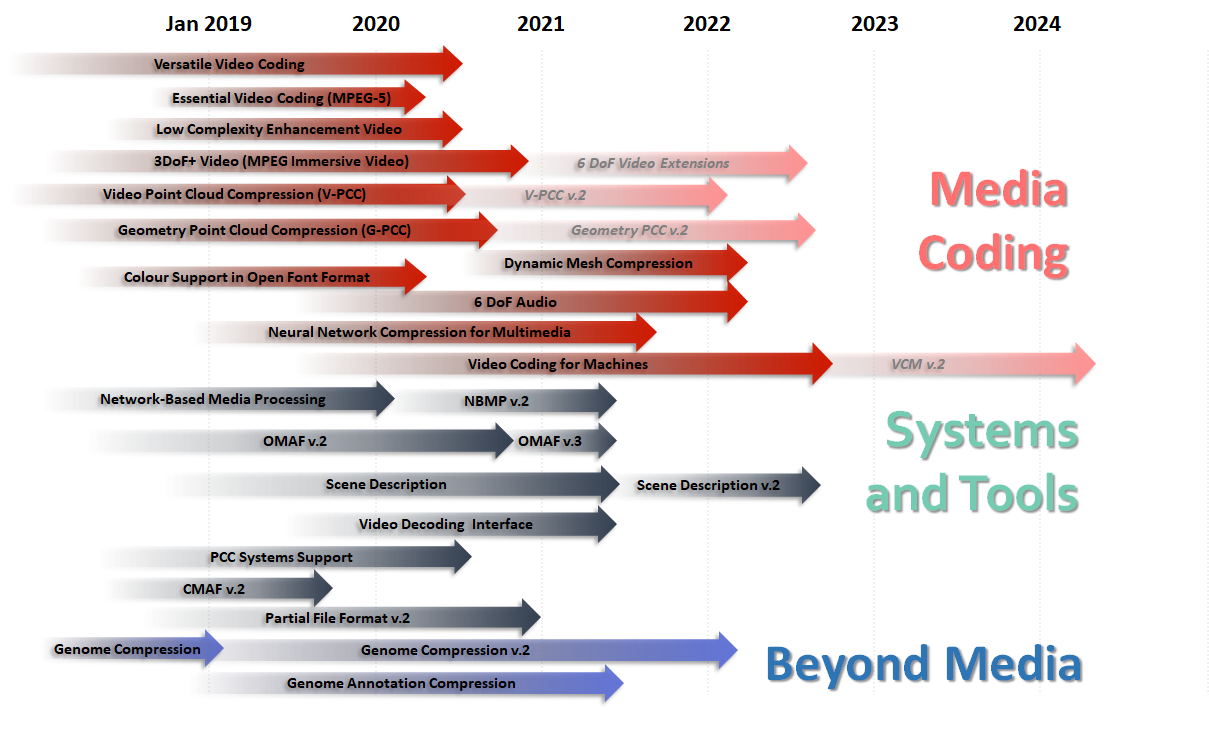

In this status report I will highlight some of the most relevant topics on which progress was made. The figure below captures the essence of the MPEG work plan as it resulted from the meeting.

Versatile Video Coding (VVC)

VVC (part 3 of MPEG-I) is being balloted and the ballot results are expected to be received at the July meeting so that MPEG can approve VVC as FDIS.

MPEG is now working on two related standards that are important for practical deployment: Carriage in MPEG-2 TS (Amendment 2 of MPEG-2 Systems) and Carriage in ISOBMFF (Amendment 2 of MPEG-4 part 15), both expected to be approved in January 2021.

Another activity around VVC is called Multi-Decoder Video Interface for Immersive Media (part 13 of MPEG-I). This aims to support the flexible use of media decoders, for example decoding only a subset of a single elementary stream. This feature is required for processing immersive media composed of a large number of elementary streams.

Essential Video Coding (EVC)

EVC (part 1 of MPEG-5) addresses the needs that have become apparent in some use cases, such as video streaming, where existing ISO video coding standards have not been as widely adopted as might be expected from their purely technical characteristics. EVC is still under ballot and results are expected to become available at the April 2020 meeting (MPEG 130).

The group in charge of EVC has started considering Carriage of EVC in MPEG Systems.

Low Complexity Enhancement Video Coding (LCEVC)

LCEVC will provide a standardised video coding solution that leverages other video codecs in a manner that improves video compression efficiency while maintaining or lowering the overall encoding and decoding complexity. LCEVC will reach DIS in April 2020.
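The layering idea can be illustrated with a toy one-dimensional sketch. It is not the LCEVC algorithm. It assumes a design in which a base codec works on a lower-resolution signal and an enhancement layer carries the residuals; the "base codec" here is a stand-in (2:1 downsampling plus coarse quantization), and all function names are hypothetical.

```python
# Toy illustration of enhancement layering; NOT the LCEVC algorithm.
# Assumption: the "base codec" is 2:1 downsampling plus coarse quantization.

def base_encode(samples, step=16):
    """Hypothetical base layer: average sample pairs, then quantize coarsely."""
    low = [(samples[i] + samples[i + 1]) // 2 for i in range(0, len(samples), 2)]
    return [v // step for v in low]

def base_decode(codes, step=16):
    """Dequantize and upsample by sample repetition."""
    out = []
    for c in codes:
        v = c * step
        out.extend([v, v])
    return out

def enhancement_residuals(samples, step=16):
    """Residuals between the source and the upsampled base reconstruction."""
    recon = base_decode(base_encode(samples, step), step)
    return [s - r for s, r in zip(samples, recon)]

def reconstruct(codes, residuals, step=16):
    """Add the enhancement residuals on top of the decoded base layer."""
    return [r + e for r, e in zip(base_decode(codes, step), residuals)]

# The input length must be even for the pairwise downsampling above.
signal = [100, 104, 120, 130, 90, 80, 60, 62]
codes = base_encode(signal)
residuals = enhancement_residuals(signal)
restored = reconstruct(codes, residuals)
```

In this sketch the residuals are kept exactly, so the reconstruction is lossless; a practical scheme would also compress the residuals.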

MPEG Immersive Video (MIV) and Video-based Point Cloud Compression (V-PCC)

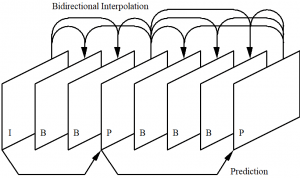

Part 12 of MPEG-I, Immersive Video (MIV), shares with Part 5 of MPEG-I, Video-based Point Cloud Compression (V-PCC), the notion of projecting a 3D scene onto a series of planes, compressing the 2D visual information on the planes with off-the-shelf video compression standards, and providing a means to communicate how a 3D renderer can use the information contained in the atlases (in the case of MIV) and the patches (in the case of V-PCC). A remarkable degree of convergence between the two approaches has been reached.
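The projection step can be pictured with a minimal sketch: an orthographic projection of a small point cloud onto a single plane, producing a depth map and an occupancy map that an ordinary video codec could then compress. This is an illustration under simplifying assumptions (integer coordinates, one plane, nearest point wins), not the procedure defined by either standard, and the function names are hypothetical.

```python
# Toy orthographic projection of a point cloud onto the XY plane.
# Illustrative only; the grid size and nearest-point rule are assumptions.

def project_to_plane(points, width, height):
    """Return (depth, occupancy) maps for integer points (x, y, z)."""
    depth = [[0] * width for _ in range(height)]
    occupancy = [[0] * width for _ in range(height)]
    for x, y, z in points:
        if not (0 <= x < width and 0 <= y < height):
            continue  # outside the projection window
        if occupancy[y][x] == 0 or z < depth[y][x]:
            depth[y][x] = z  # keep the point nearest to the plane
        occupancy[y][x] = 1
    return depth, occupancy

def unproject(depth, occupancy):
    """Rebuild the visible 3D points from the two 2D maps."""
    return sorted(
        (x, y, depth[y][x])
        for y in range(len(depth))
        for x in range(len(depth[0]))
        if occupancy[y][x]
    )

cloud = [(0, 0, 5), (1, 0, 7), (1, 0, 3), (2, 1, 9)]
depth_map, occupancy_map = project_to_plane(cloud, width=3, height=2)
visible = unproject(depth_map, occupancy_map)
```

A point hidden behind another along the projection direction is lost here, which is one reason a series of planes is needed rather than a single one.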

V-PCC will reach FDIS in April 2020 and MIV in January 2021. Both will have extensions, the latter to enable the ambitious but needed 6 degrees of freedom (6DoF), where the user can translate along and rotate about three axes.

The MPEG-4 File Format is being extended to include V-PCC and G-PCC data.

Video Coding for Machines (VCM)

VCM is an exploration of a new type of video coding designed to provide an efficient representation of video information where the user is not a human but a machine, with possible support for viewing by humans. Possible use cases for VCM are video surveillance, intelligent transportation, autonomous driving and smart cities.

MPEG has produced a Draft Call for Evidence designed to acquire information on the feasibility of a Video Coding for Machines standard. For this purpose MPEG has published a Call for Test Data for Video Coding for Machines. Test data will be used to assess the responses to the Call for Evidence.

Neural Network-based Audio-Visual Compression

VVC and EVC will support the media industry by providing more compression for transmission and storage. They are both the current endpoints of a compression scheme that dates back to the mid-1980s. Similarly, MPEG-H 3D Audio is the current endpoint of the compression scheme initiated in 1997 with MPEG-2 AAC.

Today, as a result of the demonstration provided in recent years that neural networks can outperform other “traditional” algorithms in selected areas, many laboratories are carrying out significant research on the use of neural networks for coding of audio and visual signals as well as point clouds.

MPEG is calling on its members to provide information on this new area of endeavour.

MPEG Immersive Audio

MPEG has produced a Draft CfP for Immersive Audio. The actual CfP will be issued in April 2020 and submissions are requested for July 2020. FDIS is planned for January 2022.

Neural Network Compression for Multimedia

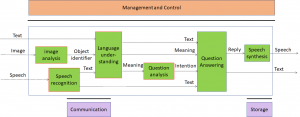

Neural networks are used for multimedia applications such as speech understanding and image recognition. Industry, however, is coming to the conclusion that the IT infrastructure may well be unable to cope with the growth in users and that in many cases intelligence is best distributed to the edge. As the size of some of these networks is hundreds of GBytes or even TBytes, compression of neural networks can support the distribution of intelligence to potentially millions of devices. See the figure below for a view of NBMP.

MPEG is progressing its work on the Compression of neural networks for multimedia content description and analysis standard. This is expected to reach CD status in January 2021.
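To give a feel for why compression matters at these sizes, here is a minimal sketch of one generic technique, uniform quantization of weights to 8 bits. It is an assumption chosen for illustration, not the method of the MPEG standard, and the function names are hypothetical.

```python
# Toy uniform quantization of weights; not the method of the MPEG standard.

def quantize(weights, bits=8):
    """Map floats to signed integers with a single shared scale."""
    levels = 2 ** (bits - 1) - 1          # 127 for 8 bits
    peak = max(abs(w) for w in weights) or 1.0
    scale = peak / levels
    return [round(w / scale) for w in weights], scale

def dequantize(codes, scale):
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]

weights = [0.5, -1.0, 0.25, 0.0]
codes, scale = quantize(weights)
approx = dequantize(codes, scale)

# Storing 8-bit codes instead of 32-bit floats is a 4x size reduction
# before any entropy coding is applied.
ratio = 32 / 8
```

Each recovered weight differs from the original by at most half a quantization step, which is the price paid for the smaller representation.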

Network-Based Media Processing (NBMP)

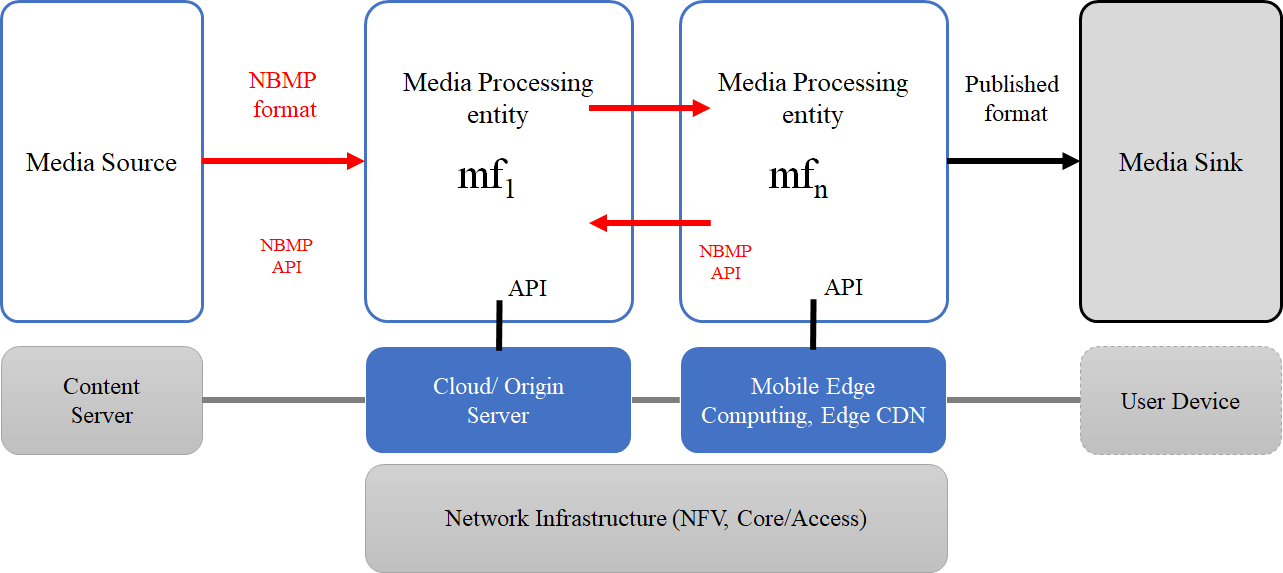

NBMP reached FDIS in January 2020. The standard defines a framework for content and service providers to describe, deploy, and control media processing. The framework includes an abstraction layer deployable on top of existing commercial cloud platforms and able to be integrated with 5G core and edge computing. The NBMP workflow manager enables composition of multiple media processing tasks to process incoming media and metadata from a media source and to produce processed media streams and metadata that are ready for distribution to media sinks.
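The composition of tasks into a workflow can be sketched as a simple chain of functions. This is a hypothetical illustration of the idea only: it does not use the NBMP API or its description formats, and the task names and the dictionary used to represent media and metadata are assumptions.

```python
# Hypothetical sketch of task composition; not the NBMP API or its formats.
from typing import Callable, Dict, List

Media = Dict[str, object]          # stand-in for media plus metadata
Task = Callable[[Media], Media]    # one media processing task

def scale(media: Media) -> Media:
    """Pretend to rescale the video."""
    return {**media, "height": 720}

def transcode(media: Media) -> Media:
    """Pretend to re-encode the video."""
    return {**media, "codec": "hypothetical-codec"}

def package(media: Media) -> Media:
    """Pretend to package the stream for distribution."""
    return {**media, "container": "segments"}

def run_workflow(source: Media, tasks: List[Task]) -> Media:
    """Apply the tasks in order, passing each output to the next task."""
    current = source
    for task in tasks:
        current = task(current)
    return current

source = {"height": 2160, "codec": "raw"}
result = run_workflow(source, [scale, transcode, package])
```

In a real deployment the tasks would run on cloud or edge resources rather than in one process; the sketch only shows the source-to-sink chaining.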

MPEG is exploring how NBMP can become an instance of the Big Media reference model developed by SC 42 Artificial Intelligence.

Compression of Genomic Annotations

At the January 2020 meeting MPEG received 7 submissions in response to the joint Call for Proposals issued by MPEG and ISO TC 276/WG 5 on the efficient representation of annotations to sequencing data resulting from analysis pipelines. MPEG has started working on a set of core experiments with the goal of integrating the proposed technologies into a single standard specification capable of satisfying all identified requirements and supporting a rich variety of queries.

FDIS is expected to be reached in January 2021.

MPEG and 5G

MPEG compression standards are mostly designed to represent information in an abstract way. However, the great success of MPEG standards is also due to the effort MPEG spent in providing the means to convey compressed information. 5G is being deployed and MPEG is investigating if and how its standards can be affected by 5G.

MPEG-21 Contracts to Smart Contracts

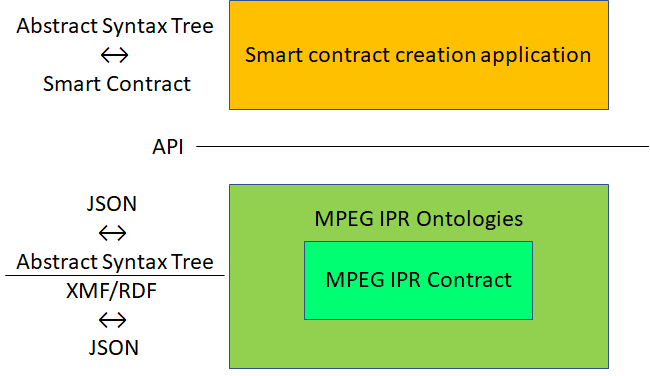

Blockchains offer an attractive way to execute electronic contracts. The problem is that there are many blockchains, each with its own way of expressing the terms of a contract. MPEG considers that MPEG-21 can be the intermediate language in which smart contracts for different blockchains can be expressed.

One application is the following use case. There is no way to deduce from a smart contract the clauses that it contains. Publishing the human-readable contract alleviates the concern, but does not ensure that its clauses correspond to the clauses of the smart contract.

The figure below describes how the other party to the smart contract can know the clauses of the smart contract in a human-readable form.
