Thirty-four years ago, the first idea of an “independent” video coding group, later called MPEG, popped up. In a few years, the idea expanded to cover all media for all industries. I have already explained how the very technical and market success of the idea, combined with the absence of internal competition, led to its demise.

This is summarised by two facts:

- Close to 9 years after publication of HEVC:

  - Decoders are installed in most TV sets and widely used

  - Codecs are installed in mobile handsets but hardly used

  - Internet use is limited to 12% (according to the last published statistics).

- VVC is competing with codecs based on different licensing models.

This situation can hardly be described as positive. Still, some groups continue adding technologies to existing standards. The prospects for practical use of standards based on this approach are anybody’s guess.

Taking a more sober stance, MPAI is addressing AI-based video coding with a different approach. Rather than starting from a scheme where traditional coding tools, each yielding minor improvements, are overloaded by IP problems, it starts from a traditional “clean sheet” video coding scheme: MPEG-5 EVC. Here, “clean” means:

- A baseline MPEG-5 EVC profile containing 20-year-old tools

- A main profile with a performance a few percentage points below that of VVC and a licence promised by 3 major companies within a matter of months.

The MPAI-EVC Evidence Project is replacing and/or improving existing MPEG-5 EVC tools with AI tools. When a gain of 25% is reached, MPAI will issue a Call for Technologies, which will be licensed according to the Framework Licence to be developed before the Call.

MPAI-EVC will cover medium-term coding needs, but is that the final solution?

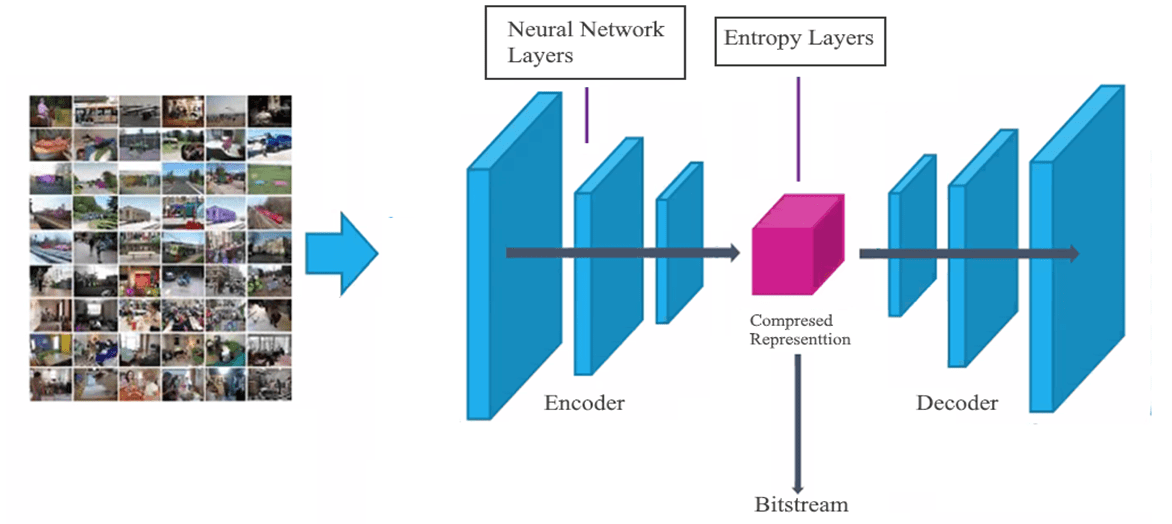

Research seems to answer no. The “end-to-end” deep video approach has been shown to offer better compression performance. This is just the start, and much more can be expected because AI is a burgeoning field of research.

In the extreme case, “end-to-end” implies a single neural network trained in such a way that the data it outputs are compressed and decodable by another network at the receiver. This is depicted in Figure 1, where a single neural network is trained to compress any video source and is capable of learning and managing the trade-off between bitrate and final quality.

Figure 1 – A general E2E architecture

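The bitrate/quality trade-off mentioned above is commonly expressed in the learned-compression literature as a Lagrangian cost J = R + λD, where R is the bitrate, D the distortion and λ sets their relative weight. The Python sketch below is a deliberately tiny stand-in, not a neural network and not MPAI's method: assuming a uniform scalar quantiser whose step size is the only "learnable" parameter, it approximates the rate by the empirical entropy of the quantised symbols and the distortion by the mean squared error. All function names (`quantise`, `rd_cost`, `best_step`, etc.) and the test signal are hypothetical, chosen for illustration.

```python
import math
from collections import Counter


def quantise(samples, step):
    """Toy encoder: map each sample to an integer index with a uniform quantiser."""
    return [round(x / step) for x in samples]


def dequantise(indices, step):
    """Toy decoder: reconstruct sample values from the indices."""
    return [i * step for i in indices]


def rate_bits_per_sample(indices):
    """Empirical entropy of the indices in bits/sample, a proxy for bitrate."""
    n = len(indices)
    return -sum((c / n) * math.log2(c / n) for c in Counter(indices).values())


def distortion_mse(original, reconstructed):
    """Mean squared error between original and reconstructed samples."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)


def rd_cost(samples, step, lam):
    """Lagrangian rate-distortion cost J = R + lam * D for a given step size."""
    indices = quantise(samples, step)
    reconstructed = dequantise(indices, step)
    return rate_bits_per_sample(indices) + lam * distortion_mse(samples, reconstructed)


def best_step(samples, steps, lam):
    """Stand-in for training: pick the candidate step that minimises J for this lam."""
    return min(steps, key=lambda s: rd_cost(samples, s, lam))


if __name__ == "__main__":
    # Hypothetical test signal: a sampled sine wave with amplitude 10.
    signal = [10 * math.sin(0.1 * k) for k in range(1000)]
    candidate_steps = [0.25, 0.5, 1, 2, 4, 8]
    for lam in (0.01, 1, 100):
        step = best_step(signal, candidate_steps, lam)
        print(f"lambda={lam}: step={step}, J={rd_cost(signal, step, lam):.3f}")
```

A small λ favours a coarse step (fewer bits, more distortion), a large λ a fine step (more bits, less distortion). In an actual end-to-end codec, the transforms and the entropy model are neural networks and gradient descent plays the role of `best_step`, with the quantiser typically replaced by a differentiable approximation during training.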

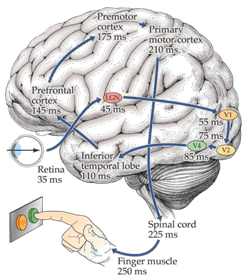

If there is anything to learn from how information is processed in biological systems, this may not be, at least for the time being, the way to go. Indeed, if we follow the flow of processing from the human retina (the blue line in Figure 2), we see that information is not processed by unstructured neurons.

Figure 2 – Visual information flow in the human brain

This is a sequential description of an otherwise highly interconnected process:

- The retina carries out a significant amount of processing, judging from the fact that the amount of information flowing through the optic nerves is much smaller than the amount of information impinging on the eye.

- The two optic nerves end at the respective Lateral Geniculate Nuclei (LGN) in the thalamus.

- The primary visual cortex (V1) is connected to the LGNs. It is organised in cortical columns that are more capable of processing edge information than spatial information. Binocular information is merged in V1.

- The secondary visual cortex (V2) has a 4-quadrant structure that provides a complete map of the visual world.

- The third visual cortex (V3), subdivided into dorsal and ventral parts (and possibly more subfunctions), contains neurons with different capabilities for responding to the structure of the information.

- The fourth visual cortex (V4) has an internal structure tuned for orientation, spatial frequency, and colour.

- The Middle Temporal Visual Area (V5) is tuned to the perception of motion.

In general, the ventral part of the cortex recognises the nature of objects, while the dorsal part localises them.

The above is not meant to be an accurate description of an extremely broad, complex and unfortunately immature area of investigation, but a description of how evolution has found it convenient to process visual information in variously interconnected “modules” and “submodules” each having different functions.

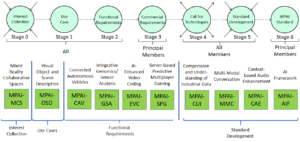

MPAI’s 12th General Assembly (MPAI-12) has confirmed MPAI’s strategic interest in video coding standards and identified two needs: a short/medium-term one served by the already running AI-Enhanced Video Coding project (MPAI-EVC) and a new long-term one served by the AI-based End-to-End Video Coding project (MPAI-EEV).

This decision answers the needs of the many who want not only environments where academic knowledge is promoted but also a body that develops a common understanding, models and, eventually, standards-oriented End-to-End video coding. Currently, no standards developing organisation is running a project comparable to MPAI-EEV.

Preparations for the ambitious MPAI-EEV project are under way. A meeting will be called soon to discuss the matter and, as this is at a very early stage (Interest Collection in the MPAI process lingo), non-members are welcome to join. Please keep an eye on https://mpai.community/standards/mpai-eev/.