The birth of an MPEG standard idea

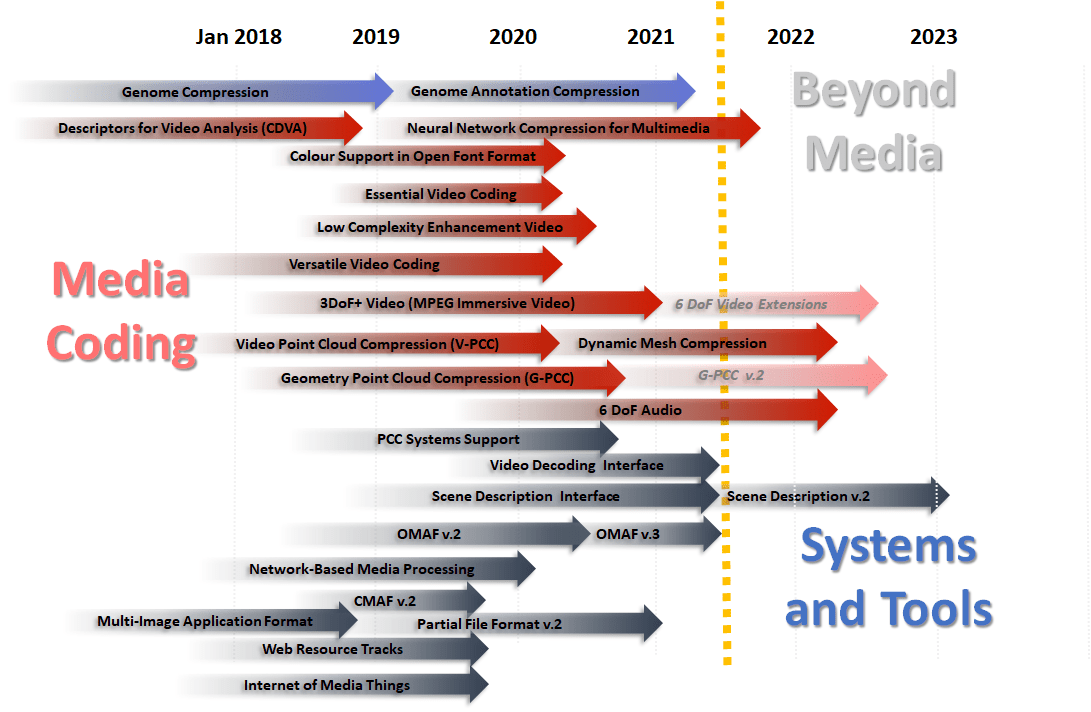

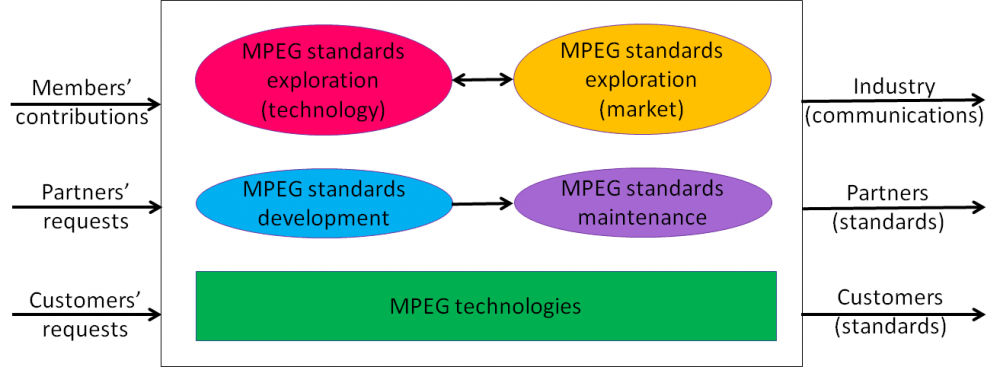

From what I have published so far on this blog, it should be clear that MPEG is an unusual ISO working group (WG). To mention a few of the ways in which it stands out: duration (31 years), early use of ICT (online document management system in use since 1995), size (1500 experts registered and 500 attending), organisation (the way 500 experts work on multiple projects), number of standards produced (more than any other JTC 1 subcommittee), impact on the industry (1 T$ in devices…