Quality, more quality and more more quality

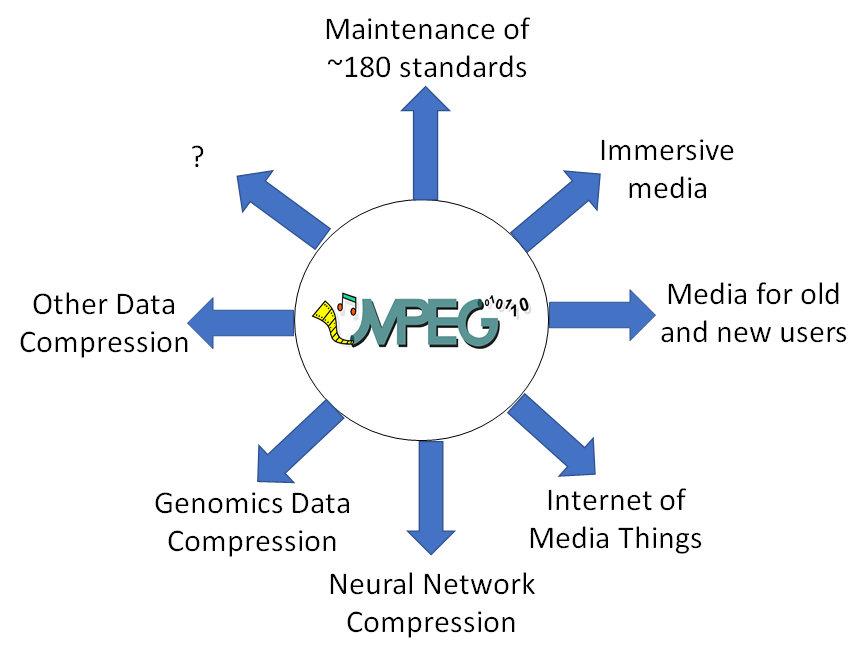

Quality measurement is an essential ingredient of the MPEG business model, which targets the development of the best-performing standards that satisfy given requirements. MPEG was certainly not the first to discover the importance of media quality assessment. Decades ago, when it was still called the Comité Consultatif International des Radiocommunications (CCIR), ITU-R developed Recommendation 500 - “Methodologies for the subjective assessment of the quality of television images”. This recommendation guided the work of television labs for decades. It was not possible, however,…