Table of contents

- Introduction

- A little bit of history

- Video and audio generate a lot of bits

- More challenges for the future

- Even ancient digital media need compression

- There are no limits to the use of compression

- A bright future, or maybe not

- Acknowledgements

Introduction

People say that ours is the digital age. Indeed, most of the information around us is in digital form and we can expect that what is not digital now will soon be converted to that form.

But is being digital what matters? In this paper I will show that being digital does not make information more accessible or easier to handle. Actually being digital may very well mean being less able to do things. Media information becomes accessible (even liquid) and processable only if it is compressed.

Compression is the enabler of the evolving media rich communication society that we value.

A little bit of history

In the early 1960s the telco industry felt ready to enter the digital world. They decided to digitise the speech signal by sampling it at 8 kHz with nonlinear 8-bit quantisation. Digital telephony exists in the network, but it is no surprise that few people know about it, because this digital speech was hardly ever compressed.

In the early 1980s Philips and Sony developed the compact disc (CD). Stereo audio was digitised by sampling the audio waveforms at 44.1 kHz with 16-bit linear quantisation and stored on a laser disc. This was a revolution in the audio industry because consumers could have an audio quality that did not deteriorate with time (until, I mean, the CD stopped playing altogether). Did the user experience change? Definitely. For the better? Some people, even today, disagree.

In 1980 ITU-T defined the Group 3 facsimile standard. In the following decades hundreds of millions of Group 3 digital facsimile devices were installed worldwide. Why? Because it cut the transmission time of an A4 sheet from 6 min (Group 1) or 3 min (Group 2) to about 1 min. If digital fax had not used compression, transmission with a 9.6 kbit/s modem (a typical value of that time) would have taken longer than Group 1 analogue facsimile.

A digital uncompressed photo of, say, 1 Mpixel would take half an hour on a 9.6 kbit/s modem (and it was probably never used in this form), but with a compression of 60x it would take half a minute. An uncompressed 3 min CD track on the same modem would take in excess of 7 h, but compressed at 96 kbit/s would take about 30 min. That was the revolution brought about by the MP3 audio compression that changed music for ever.
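
The transfer times quoted above can be checked with a few lines of arithmetic. This is only a rough sketch: the 16 bits/pixel figure for the photo is an assumption (the text does not state the pixel depth), and the helper name `transfer_seconds` is purely illustrative.

```python
MODEM_BPS = 9_600  # 9.6 kbit/s modem, as in the text


def transfer_seconds(size_bits: float, link_bps: float = MODEM_BPS) -> float:
    """Time needed to push size_bits through a link of link_bps."""
    return size_bits / link_bps


# Photo: 1 Mpixel; 16 bits/pixel is an ASSUMPTION, not stated in the text
photo_bits = 1_000_000 * 16
photo_raw_s = transfer_seconds(photo_bits)        # roughly half an hour
photo_comp_s = transfer_seconds(photo_bits / 60)  # 60x compression

# CD track: 3 min of stereo audio at 44.1 kHz, 16 bits per sample
cd_bps = 44_100 * 16 * 2
track_raw_s = transfer_seconds(cd_bps * 180)      # more than 7 hours
track_mp3_s = transfer_seconds(96_000 * 180)      # compressed at 96 kbit/s

print(f"photo: {photo_raw_s / 60:.0f} min raw, {photo_comp_s:.0f} s compressed")
print(f"track: {track_raw_s / 3600:.2f} h raw, {track_mp3_s / 60:.0f} min compressed")
```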

Digital television was specified by ITU-R in 1980 with luminance and the two colour difference signals sampled at 13.5 and 6.75 MHz, respectively, at 8 bits per sample. The result was an exceedingly high bitrate of 216 Mbit/s. As such it never left the studio, except on bulky magnetic tapes. However, when MPEG developed the MPEG-2 standard, capable of yielding studio-quality video compressed at a bitrate of 6 Mbit/s, it became possible to pack 4 TV programs (or more) where there was just one analogue program, and TV was no longer the same.
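
The 216 Mbit/s figure follows directly from the sampling parameters given above: one luminance signal plus two colour difference signals, each at 8 bits per sample. A minimal sketch (the function name is illustrative only):

```python
def sampled_bitrate(sample_rates_hz, bits_per_sample=8):
    """Total bitrate of several components sampled at the given rates."""
    return sum(sample_rates_hz) * bits_per_sample


# Luminance at 13.5 MHz, two colour difference signals at 6.75 MHz each
studio_bps = sampled_bitrate([13.5e6, 6.75e6, 6.75e6])

# MPEG-2 at the 6 Mbit/s quoted in the text
compression_ratio = studio_bps / 6e6

print(f"{studio_bps / 1e6:.0f} Mbit/s uncompressed, ratio {compression_ratio:.0f}:1")
```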

In 1987 several European countries adopted the GSM specification. This time speech was digitised and compressed at 13 kbit/s and GSM spread all over the world.

The bottom line is that being digital is good, but in practice being compressed digital is so much better.

Video and audio generate a lot of bits

Digital video, in the uncompressed formats used so far, generates a lot of bits and new formats will continue doing so. Table 1 gives the parameters of some of the most important video formats, assuming 8 bits/sample. High Definition, with an uncompressed bitrate of ~829 Mbit/s, is now well established and Ultra High Definition, with an uncompressed bitrate of 6.6 Gbit/s, is fast advancing. So-called 8k, with a bitrate of 26.5 Gbit/s, seems to be the preferred choice for Virtual Reality. Higher resolutions are still distant, but may be with us before we are even aware of them.

Table 1: Parameters of video formats

| Format | # Lines | # Pixels/line | Frame frequency (Hz) | Bitrate (Gbit/s) |
|---|---|---|---|---|
| “VHS” | 288 | 360 | 25 | 0.031 |
| Standard definition | 576 | 720 | 25 | 0.166 |
| High definition | 1080 | 1920 | 25 | 0.829 |
| Ultra high definition | 2160 | 3840 | 50 | 6.636 |
| 8k (e.g. for VR) | 4320 | 7680 | 50 | 26.542 |
| 16k (e.g. for VR) | 8640 | 15360 | 100 | 212.336 |
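
The bitrates in Table 1 can be reproduced from the other columns. The sketch below rests on an assumption that is not stated in the text: the number of samples per pixel. Taking 1.5 samples/pixel (4:2:0-like) for “VHS” and 2 samples/pixel (4:2:2-like) for the other formats happens to match the table; these values were chosen only because they fit.

```python
# (name, lines, pixels per line, frames/s, samples per pixel [ASSUMED])
FORMATS = [
    ("VHS", 288, 360, 25, 1.5),
    ("Standard definition", 576, 720, 25, 2.0),
    ("High definition", 1080, 1920, 25, 2.0),
    ("Ultra high definition", 2160, 3840, 50, 2.0),
    ("8k", 4320, 7680, 50, 2.0),
    ("16k", 8640, 15360, 100, 2.0),
]


def raw_gbps(lines, pixels, fps, samples_per_pixel, bits_per_sample=8):
    """Uncompressed bitrate in Gbit/s."""
    return lines * pixels * fps * samples_per_pixel * bits_per_sample / 1e9


table1 = {name: raw_gbps(l, p, f, s) for name, l, p, f, s in FORMATS}

for name, gbps in table1.items():
    print(f"{name:22s} {gbps:8.3f} Gbit/s")
```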

Compression has been and will continue to be the enabler of the practical use of all these video formats. The first format in Table 1 was the target of MPEG-1 compression, which reduced the uncompressed 31 Mbit/s to 1.2 Mbit/s at a quality comparable to video distribution of that time (VHS). Today it may be possible to send 31 Mbit/s through the network, but no one would do it for a VHS type of video. They would possibly do it for compressed UHD video.

In its 30 years of existence MPEG has constantly pushed forward the limits of video compression. This is shown in the third column of Table 2 where it is possible to see the progress of compression over the years: going down in the column, every cell gives the additional compression provided by a new “generation” of video compression compared to the previous one.

Table 2: Improvement in video compression (MPEG standards)

| Std | Name | Base | Scalable | Stereo | Depth | Selectable viewpoint | Date |
|---|---|---|---|---|---|---|---|
| 1 | Video | VHS | – | – | – | – | 92/11 |
| 2 | Video | SD | -10% | -15% | – | – | 94/11 |
| 4 | Visual | -25% | -10% | -15% | – | – | 98/10 |
| 4 | AVC | -30% | -25% | -25% | -20% | 5/10% | 03/03 |
| H | HEVC | -60% | -25% | -25% | -20% | 5/10% | 13/01 |
| I | Immersive Video | ? | ? | ? | ? | ? | 20/10 |

The fourth column in Table 2 gives the additional saving (compared to the third column) offered by scalable bitstreams compared to simulcasting two bitstreams of the same original video at different bitrates (scalable bitstreams contain, in the same bitstream, two or more bitstreams of the same scene at different rates). The fifth column gives the additional saving offered by stereo coding tools compared to independent coding of two cameras pointing to the same scene. The sixth column gives the additional saving (compared to the fifth column) obtained by using depth information and the seventh column gives the additional cost (compared to the sixth column) of giving the viewer the possibility to select the viewpoint. The last column gives the date MPEG approved the standard and the last row refers to the next video coding standard under development of which 2020/10 is the expected time of approval.
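
Because each entry in the Base column is a saving relative to the previous generation, the savings multiply rather than add. The sketch below chains the three Base percentages of Table 2, taking MPEG-2 Video as the starting point; it is an illustration of the arithmetic, not a statement about any particular content or bitrate.

```python
# Base-column savings from Table 2, each relative to the previous generation
BASE_SAVINGS = [
    ("MPEG-4 Visual", 0.25),
    ("MPEG-4 AVC", 0.30),
    ("MPEG-H HEVC", 0.60),
]


def cumulative_fraction(savings):
    """Fraction of the starting bitrate still needed after each generation."""
    remaining = 1.0
    result = []
    for name, saving in savings:
        remaining *= 1.0 - saving
        result.append((name, remaining))
    return result


generations = cumulative_fraction(BASE_SAVINGS)

for name, fraction in generations:
    print(f"{name:14s} needs {fraction:.1%} of the MPEG-2 Video bitrate")
```

Under this reading, HEVC needs roughly a fifth of the bits MPEG-2 Video needs for the same base quality.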

Table 3 gives the reduction in the number of bits required to represent a pixel in a compressed bitstream across 3 video compression standards applied to different resolutions (TV, HDTV, UHDTV) at typical bitrates (4, 8 and 16 Mbit/s).

Table 3: Reduction in bit/pixel as new standards appear

| Std | Name | Application | Mbit/s | Bit/pixel |
|---|---|---|---|---|
| 2 | Video | TV | 4 | 0.40 |
| 4 | AVC | HDTV | 8 | 0.15 |
| H | HEVC | UHDTV | 16 | 0.04 |
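
The Bit/pixel column of Table 3 is the compressed bitrate divided by the pixel rate. The sketch below assumes the frame sizes and frame rates of Table 1 for TV, HDTV and UHDTV (the text does not say which were used), and reproduces the table values to within rounding.

```python
def bits_per_pixel(bitrate_mbps, width, height, fps):
    """Compressed bits spent per displayed pixel."""
    return bitrate_mbps * 1e6 / (width * height * fps)


# Frame sizes and rates are ASSUMED to be those of Table 1
tv = bits_per_pixel(4, 720, 576, 25)         # MPEG-2 Video
hdtv = bits_per_pixel(8, 1920, 1080, 25)     # AVC
uhdtv = bits_per_pixel(16, 3840, 2160, 50)   # HEVC

print(f"TV {tv:.3f}  HDTV {hdtv:.3f}  UHDTV {uhdtv:.3f} bit/pixel")
```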

MPEG has pushed the limits of audio compression as well. The first columns of Table 4 give the sound format (written as full channels.effects channels, e.g. 5.1) and the sampling frequency. The next columns give the MPEG standard, the target bitrate of the standard and the performance (as subjective audio quality) achieved by the standard at the target bitrate(s).

Table 4: Audio systems

| Format | Sampling freq. (kHz) | MPEG Std | kbit/s | Performance |
|---|---|---|---|---|
| Speech | 8 | – | 64 | Toll Quality |
| CD | 44.1 | – | 1,411 | CD Quality |
| Stereo | 48 | 1-2-4 | 128 → 32 | Excellent to Good |
| 5.1 surround | 48 | 2-4 | 384 → 128 | Excellent to Good |
| 11.1 immersive | 48 | H | 384 | Excellent |
| 22.2 immersive | 48 | H | 1,500 | Broadcast Quality |

More challenges for the future

Many think that the future of entertainment is more digital data generated by sampling the electromagnetic and acoustic fields that we call light and sound, respectively. Therefore MPEG is investigating the digital representation of the former by acting in 3 directions.

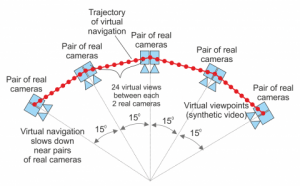

The first direction of investigation uses “sparse sensing elements” capable of capturing both the colour of a point and the distance (depth) of the point from the sensor. Figure 1 shows 5 pairs of physical cameras shooting a scene and a red line of points at which sophisticated algorithms can synthesise the output of virtual cameras.

Figure 1: Real and virtual cameras

Figure 2 shows the output of a virtual camera moving along the red line of Figure 1.

Figure 2: Free navigation example – fencing

(courtesy of Poznan University of Technology, Chair of Multimedia Telecommunications and Microelectronics)

The second direction of investigation uses “dense sensing elements” and comes in two variants. In the first variant each sensing element captures light coming from a direction perpendicular to the sensor plane and, in the second illustrated by Figure 3, each “sensor” is a collection of sensors capturing light from different directions (plenoptic camera).

Figure 3: Plenoptic camera

The second variant of this investigation tries to reduce to manageable levels the amount of information generated by sensors that capture the light field.

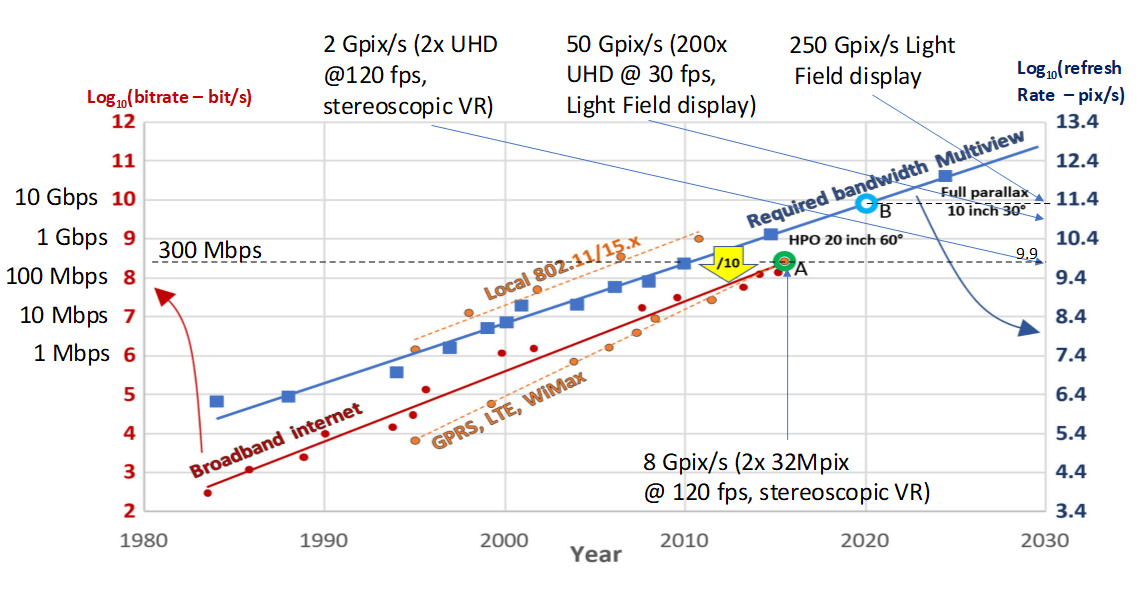

Figure 4 shows the expected evolution of

- The pixel refresh rate of light field displays (right axis [1]) and the bitrate (left axis) required for transmission when HEVC compression is applied (blue line)

- The available broadband and high-speed local networks bitrates (red/orange lines and left axis [2], [3]).

Figure 4: Sensor capabilities, broadband internet and compression

(courtesy of Gauthier Lafruit and Marek Domanski)

The curves remain substantially parallel and separated by a factor of 10, i.e. one unit in log10 (yellow arrow). More compression is needed and MPEG is indeed working to provide it with its MPEG-I project. Actually, today’s bandwidth of 300 Mbit/s (green dot A) is barely enough to transmit video at 8 Gpix/s (10^9.9 on the right axis) for high-quality stereoscopic 8k VR displays at 120 frames/s compressed with HEVC.
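
The 8 Gpix/s figure and its position on the logarithmic axis can be verified with a short calculation. The sketch assumes an 8k frame of 7680 x 4320 pixels (as in Table 1) and two views for stereoscopy; dividing the 300 Mbit/s bandwidth by the pixel rate gives the bit budget per pixel, which can be compared with the HEVC figure in Table 3.

```python
import math


def pixel_rate(width, height, fps, views=1):
    """Pixels per second for a display with the given number of views."""
    return width * height * fps * views


# Stereoscopic (2 views) 8k at 120 frames/s; frame size ASSUMED from Table 1
rate = pixel_rate(7680, 4320, 120, views=2)
log_rate = math.log10(rate)

# Bits available per pixel on a 300 Mbit/s link
budget_bits_per_pixel = 300e6 / rate

print(f"{rate / 1e9:.2f} Gpix/s = 10^{log_rate:.2f} pix/s")
print(f"{budget_bits_per_pixel:.3f} bit/pixel available at 300 Mbit/s")
```

The budget comes out just under the roughly 0.04 bit/pixel that Table 3 gives for HEVC, which is consistent with "barely enough".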

In 2020 we expect light field displays that project hundreds of high-resolution images in various directions for glasses-free VR to reach point B on the blue line. Again, HEVC compression is not sufficient to transmit all data over consumer-level broadband networks. However, this will be possible over local networks, since the blue line stays below the top orange line in 2020.

The third direction of investigation uses “point clouds”. These are unordered sets of points in a 3D space, used in several industries, and typically captured using various setups of multiple cameras, depth sensors, LiDAR scanners, etc., or synthetically generated. Point clouds have recently emerged as representations of the real world enabling immersive forms of interaction, navigation, and communication.

Point clouds are typically represented by extremely large amounts of data, which is a significant barrier for mass market applications. Point cloud data not only includes spatial location (X,Y,Z) but also colour (R,G,B or YCbCr), reflectance, normals, and other data depending on the needs of the volumetric application domain. MPEG is developing a standard capable of compressing a point cloud to levels that are compatible with today’s networks reaching consumers. This emerging standard compresses 3D data by leveraging decades of 2D video coding technology development and combining 2D and 3D compression technologies. This approach allows industry to take advantage of existing hardware and software infrastructures for rapid deployment of new devices for immersive experiences.

Targeted applications for point cloud compression include immersive real-time communication, six Degrees of Freedom (6 DoF) where the user can walk in a virtual space, augmented/mixed reality, dynamic mapping for autonomous driving, and cultural heritage applications.

The first video (Figure 5) shows a dynamic point cloud representing a lady that a viewer can see from any viewpoint.

Figure 5: Video representing a dynamic point cloud (courtesy of i8)

The second video by Replay Technology shows how a basketball player, represented by a point cloud as in Figure 5, could be introduced in a 360° video and how viewers could create their own viewpoint or view path of the player.

The third video (Figure 6) represents a non-entertainment application where cars equipped with Lidars could capture the environment they navigate as point clouds for autonomous driving or recording purposes.

Figure 6: Point cloud for automotive (courtesy of Mitsubishi)

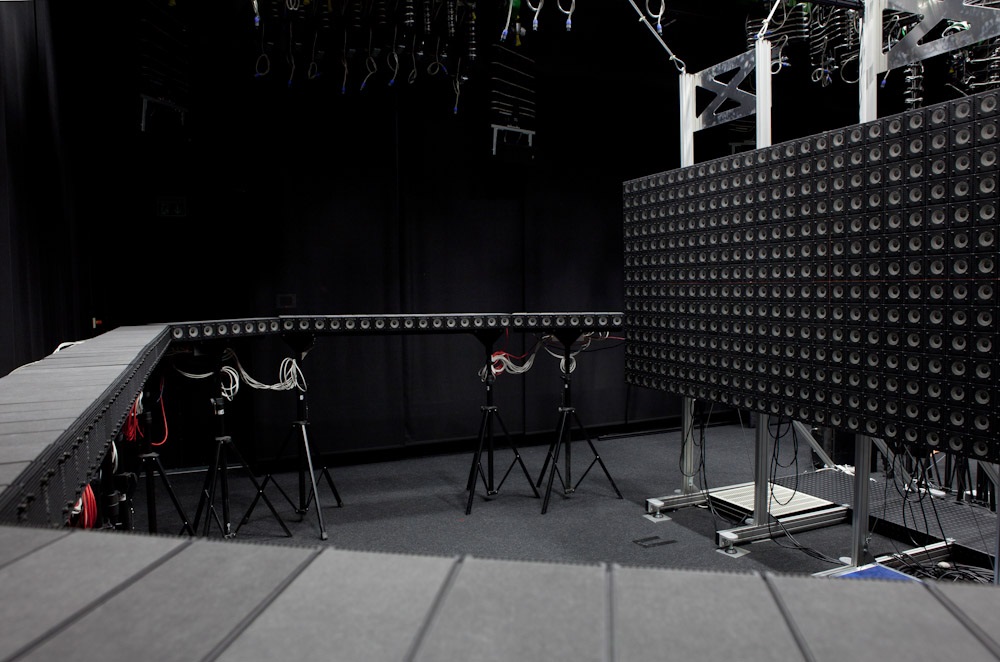

Dense sensing elements are also applicable to audio capture and coding. Wave Field Synthesis is a technique in which a grid or array of sensors (microphones) is spaced at least as closely as one-half the shortest wavelength in the sound signal (the wavelength is about 1.7 cm at 20 kHz). Such an array can be used to capture the performance of a symphony orchestra, where the array is placed between the orchestra and the audience in the concert hall. When that captured signal is played out to an identically placed set of loudspeakers, the acoustic wave field at every seat in the concert hall can be correctly reproduced (subject to the effects of finite array size). Hence, with this technique, every seat in the “reproduction” hall has exactly the same experience as at the live performance. Figure 7 represents a Wave Field Synthesis laboratory installation.
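
The spacing requirement can be made concrete with the relation wavelength = speed of sound / frequency. The sketch below assumes a speed of sound of 343 m/s (air at about 20 °C), a value not given in the text.

```python
SPEED_OF_SOUND_M_S = 343.0  # ASSUMED: air at about 20 degrees C


def wavelength_m(freq_hz, c=SPEED_OF_SOUND_M_S):
    """Acoustic wavelength in metres at the given frequency."""
    return c / freq_hz


def max_spacing_m(max_freq_hz, c=SPEED_OF_SOUND_M_S):
    """Half the shortest wavelength, i.e. the largest allowed sensor spacing."""
    return wavelength_m(max_freq_hz, c) / 2


print(f"wavelength at 20 kHz: {wavelength_m(20_000) * 100:.2f} cm")
print(f"maximum spacing:      {max_spacing_m(20_000) * 100:.2f} cm")
```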

Figure 7: Wave Field Synthesis equipment (courtesy of Fraunhofer IDMT)

Even ancient digital media need compression

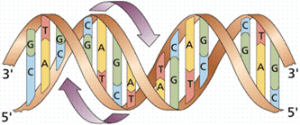

So far we have been talking of media which are intrinsically analogue but can be converted to a digital representation. The roots of nature, however, are digital and so are some of its products, such as the genome, which can be considered as a special type of “program” designed to “run” on a special type of “computer” – the cell. The program is “written” with an alphabet of 4 symbols (A, T, G and C) and physically carried as four types of nucleobases called adenine, thymine, guanine and cytosine on a double helix created by the bonding of adenine and thymine, and cytosine and guanine (see Figure 8).

Figure 8: The double helix of a genome

Each of the cells (~37 trillion for a human weighing 70 kg) carries a hopefully intact copy of the “program”, pieces of which it runs to synthesise proteins for its specific needs (trouble arises when the original “program” lacks the instructions to create some vital protein or when copies of the “program” have changed some vital instructions).

The genome is the oldest – and naturally made – instance of digital media. The length of the human “program” is significant: ~ 3.2 billion base pairs, equivalent to ~800 MBytes.
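
The ~800 MBytes figure follows from the 4-symbol alphabet: each base needs 2 bits, so four bases fit in one byte. The sketch below shows one possible 2-bit packing; the particular symbol-to-code mapping and the function names are arbitrary choices made for illustration.

```python
CODE = {"A": 0, "C": 1, "G": 2, "T": 3}  # arbitrary 2-bit mapping
BASES = "ACGT"


def pack(seq: str) -> bytes:
    """Pack a string of A/C/G/T into 2 bits per base, 4 bases per byte."""
    out = bytearray()
    for i in range(0, len(seq), 4):
        chunk = seq[i:i + 4]
        byte = 0
        for base in chunk:
            byte = (byte << 2) | CODE[base]
        byte <<= 2 * (4 - len(chunk))  # left-align a partial final chunk
        out.append(byte)
    return bytes(out)


def unpack(data: bytes, n_bases: int) -> str:
    """Recover the first n_bases bases from packed data."""
    seq = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            seq.append(BASES[(byte >> shift) & 3])
    return "".join(seq[:n_bases])


# Size of a human genome at 2 bits per base pair
genome_bytes = 3.2e9 * 2 / 8
print(f"{genome_bytes / 1e6:.0f} MBytes")
```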

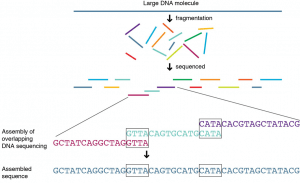

While a seemingly simple entity like a cell can transcribe and execute the program contained in the genome or parts of it, humans have a hard time even reading it. Devices called “sequencing machines” do the job, but rather lousily, because they are capable of reading only random fragments of the DNA and of outputting the values of these fragments in a random order.

Figure 9 depicts a simplified description of the process, which also shows how these “reads” must be aligned, using a computer program, against a “reference” DNA sequence to eventually produce a re-assembled DNA sequence.

Figure 9: Reading, aligning and reconstructing a genome

To add complexity to this already complex task, sequencing machines also generate a number (the quality score) that corresponds to the quality of each base call. Therefore, sequencing experiments are typically configured to try to provide many reads (e.g. ~200) for each base pair. This means that reading a human genome might well generate ~1.5 TBytes.

Transporting, processing and managing this amount of data is very costly. Some ASCII formats exist for these genomic data: FASTQ, a container of non-aligned reads, and Sequence Alignment/Map (SAM), a container of aligned/mapped reads. Zip compression is applied to reduce the size of both FASTQ and SAM, generating zipped FASTQ blocks and BAM (the binary version of SAM) with a compression ratio of about 5. However, compression performance is poor, data access is awkward and maintenance of the formats is a problem.

MPEG was made aware of this situation and, in collaboration with ISO TC 276 Biotechnology, is developing the MPEG-G standard for genomic information representation (including compression) that it plans to approve in January 2019 as FDIS. The target of the standard is not compression of the genome per se, but compression of the many reads related to a DNA sample as depicted in Figure 9. Of course the standard provides lossless compression.

Unlike the BAM format where data with different statistical properties are put together and then zip-compressed, MPEG uses a different and more effective approach:

- Genomic data are represented with statistically homogeneous descriptors

- Metadata associated to classified reads are represented with specific descriptors

- Individual descriptors sub-sets are compressed achieving a compression in the range of 100x

- Descriptors sub-sets are stored in Access Units for selective access via standard API

- Compressed data are packetised thus facilitating the development of streaming applications

- Enhanced data protection mechanisms are supported.
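
The idea behind the first items of the list above, namely separating data into homogeneous streams that are compressed and accessed independently, can be sketched as follows. This is not the MPEG-G algorithm: zlib stands in for the actual entropy coders, the three stream names are hypothetical, and the toy record layout (identifier, bases, quality string) only loosely mirrors a FASTQ record.

```python
import zlib


def split_streams(records):
    """Split (read_id, bases, qualities) records into one stream per field."""
    return {
        "ids": "\n".join(r[0] for r in records).encode(),
        "bases": "\n".join(r[1] for r in records).encode(),
        "quals": "\n".join(r[2] for r in records).encode(),
    }


def compress_streams(streams):
    """Compress each homogeneous stream on its own (zlib as a stand-in)."""
    return {name: zlib.compress(data, 9) for name, data in streams.items()}


def decompress_streams(blobs):
    return {name: zlib.decompress(blob) for name, blob in blobs.items()}


def join_streams(streams):
    """Rebuild the original records from the three streams (lossless)."""
    fields = [streams[k].decode().split("\n") for k in ("ids", "bases", "quals")]
    return list(zip(*fields))


records = [("read1", "ACGTACGT", "IIIIFFFF"), ("read2", "GGCATTAC", "FFII##II")]
blobs = compress_streams(split_streams(records))

# Selective access: only the bases are decoded, the other streams stay untouched
bases_only = zlib.decompress(blobs["bases"]).decode().split("\n")
```

Keeping the streams separate is what allows an application to read, say, only the bases without decoding identifiers or quality scores.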

Therefore MPEG-G not only saves storage space and transmission time (the 1.5 TBytes mentioned above could be compressed down to 15 GBytes) but also makes genomic information processing more efficient, with data access times estimated to improve by a factor of around 100. Additionally MPEG-G promises, as with other MPEG standards, to provide more efficient technologies in the future using a controlled process.

It may be time to update the current MPEG logo from what it has been so far

to a new logo

There are no limits to the use of compression

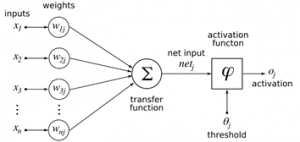

There is another type of “program” called “neural network” that is acquiring more and more importance. As a DNA sample contains simple instructions to create a complex organism, so a neural network is composed of very simple elements assembled to solve complex problems. The technology is more than 50 years old but it has recently received a new impetus and even appears – as Artificial Intelligence – in ads directed to the mass market.

Like a human neuron, the neural network element of Figure 10 collects inputs from different elements, processes them and, if the result is above a threshold, generates an activation signal.
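
A minimal sketch of such an element, assuming the processing is a weighted sum followed by a hard threshold (the simplest possible choice; practical networks typically use smoother activation functions). The weights and threshold in the example are made up for illustration.

```python
def neuron(inputs, weights, bias=0.0, threshold=0.0):
    """Weighted sum of the inputs; fires (returns 1) if above the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > threshold else 0


# With these illustrative weights the element behaves like a logical AND
both_on = neuron([1, 1], [0.6, 0.6], threshold=1.0)
one_on = neuron([1, 0], [0.6, 0.6], threshold=1.0)
```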

Figure 10: An element of a neural network

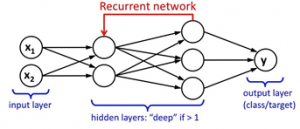

As in the human brain it is also possible to feed back the output signal to the source (see Figure 11).

Figure 11: Recurrency in neural networks

Today, neural networks for image understanding consist of complex configurations and several million weights (see Figure 10) and it is already possible to execute specific neural-network based tasks on recent mobile devices. Why should we then not be able to download to a mobile device a neural network that best solves a particular problem that can range from image understanding to automatic translation to gaming?

There is no reason why not but, if the number of neural network-based applications increases, if more users want to download them to their devices and if applications grow in size to solve ever more complex problems, compression will be needed and will play the role of enabler of a new age of mobile computing.

MPEG is currently investigating if and how it can use its compression expertise to develop technologies that efficiently represent neural networks of several million neurons used in some of its standards under development. Unlike MPEG-G, compression of neural networks can be lossy, if the user is willing to trade algorithm efficiency for compression rate.
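
One simple way to see what lossy compression of a neural network can mean is uniform quantisation of its weights. The sketch below is a generic illustration, not the technology MPEG is investigating: it maps each weight to an 8-bit code, which would be a 4x reduction if the weights were originally stored as 32-bit floats (an assumption), at the price of a bounded reconstruction error.

```python
def quantize(weights, bits=8):
    """Map each weight to an integer code on a uniform grid."""
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Approximate reconstruction of the original weights."""
    return [lo + c * scale for c in codes]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # made-up example values
codes, lo, scale = quantize(weights, bits=8)
restored = dequantize(codes, lo, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Using fewer bits raises the compression rate and the error together, which is the trade-off referred to above.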

A bright future, or maybe not

Implicitly or explicitly, all big social phenomena like MPEG are based on a “social contract”. Who are the parties to the “MPEG social contract” and what is it about?

When MPEG started, close to 30 years ago, it was clear that there was no hope of developing decently performing audio and video compression standards free of patented technology, after so many companies and universities had invested for decades in compression research. So, instead of engaging in the common exercise of dodging patents, MPEG decided to aim for the best performing standards, irrespective of the IPR involved. The deal offered to all parties was a global market of digital media products, services and applications offering interoperability to billions of people and generating hefty royalties for patent holders.

MPEG did keep its part of the deal. Today MPEG-based digital media enable major global industries, allow billions of people to create and enjoy digital media interoperably and provide royalties worth billions of USD (NB: ISO/IEC and MPEG have no role in royalties or in the agreements leading to them).

Unfortunately some parties have decided to break the MPEG social contract. The HEVC standard, the latest MPEG standard targeting unrestricted video compression, was approved in January 2013. Close to 5 years later, there is no easy way to get a licence to practice that standard.

What I am going to say is not meant to have legal meaning and should not be interpreted in a legal sense. Nevertheless I am terribly serious about it. Whatever the rights granted to patent holders by the laws, isn’t depriving billions of people, thousands of companies and hundreds of patent holders of the benefits of a standard like HEVC and, presumably, other future MPEG standards, a crime against humankind?

Acknowledgements

I would like to thank the MPEG subgroup chairs, activity chairs and the entire membership (thousands of people over the years) for making MPEG what it is recognised for – the source of digital media standards that have changed the world for the better.

Hopefully they will have a chance to continue doing so in the future as well.

References

[1] “Future trends of Holographic 3D display,” http://www-oid.ip.titech.ac.jp/holo/holography_when.html

[2] “Nielsen’s law of internet bandwidth,” https://www.nngroup.com/articles/law-of-bandwidth/

[3] “Bandwidth growth: nearly what one would expect from Moore’s law,” https://ipcarrier.blogspot.be/2014/02/bandwidth-growth-nearly-what-one-would.html
