So far MPEG has developed, is completing or is planning to develop 22 standards for a total of 201 specifications. For those not in MPEG, and even for some active in MPEG, there is a natural question: what is the purpose of all these standards? Assuming that the answer to this question is given, a second one pops up: is there a logic in all these MPEG standards?

Depending on the amount of understanding of the MPEG phenomenon, you can receive different answers ranging from

“There is no logic. MPEG started its first standard with a vision of giving the telco and CE industries a single format. Later it exploited the opportunities that its growing expertise allowed.”

to

“There is a logic. The driver of MPEG work was to extend its vision to more industries leveraging its assets while covering more functionalities.”

I will leave it to the reader to decide where they stand on this continuum of possibilities after reading this article, which deals only with the first 5 standards.

MPEG-1

The goal of MPEG-1 was to leverage the manufacturing power of the Consumer Electronics (CE) industry to develop the basic audio and video compression technology for an application that was considered particularly attractive when MPEG was established (1988), namely interactive audio and video on CD-ROM. This was the logic of the telco industry who thought that their future would be “real time audio-visual communication” but did not have a friendly industry to ask to develop the terminal equipment.

The bitrate of 1.5 Mbit/s mentioned in the official title of MPEG-1, Coding of moving pictures and associated audio at up to about 1,5 Mbit/s, was an excellent common point for the telecom industry, whose first-generation ADSL technology targeted that bitrate, and for the CE industry, whose Compact Disc had a throughput of about 1.41 Mbit/s (1.2 for the CD-ROM). With that bitrate, compression technology of the late 1980s could only deal with a rather low, but still acceptable, resolution (1/2 the horizontal and 1/2 the vertical resolution obtained by subsampling every other field, so that the input video is progressive). Considering that audio had to be impeccable (that is what humans want), at least 200 kbit/s had to be assigned to audio.
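The bitrate budget described above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the 1.5 Mbit/s total and the 200 kbit/s audio share come from the text, the Compact Disc parameters (44.1 kHz, 16 bits, stereo) are the usual audio CD figures and give roughly 1.41 Mbit/s, and all variable names are invented for this example. Systems overhead is ignored.

```python
# Back-of-the-envelope MPEG-1 bitrate budget (illustrative only).

# Audio CD: 44.1 kHz sampling, 16 bits per sample, 2 channels.
cd_audio_bps = 44_100 * 16 * 2   # raw audio throughput of a Compact Disc

# Figures taken from the text above.
total_bps = 1_500_000            # "up to about 1,5 Mbit/s"
audio_bps = 200_000              # "at least 200 kbit/s" assigned to audio

# What is not given to audio is the ceiling for video
# (assumption: multiplexing overhead is ignored).
video_bps = total_bps - audio_bps

print(f"CD audio throughput: {cd_audio_bps / 1e6:.2f} Mbit/s")
print(f"Video budget:        {video_bps / 1e6:.2f} Mbit/s")
```

With these inputs, video is left with about 1.3 Mbit/s, which is why only a reduced resolution was feasible.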

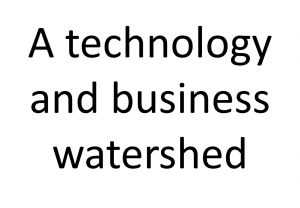

Figure 1 depicts the model of an MPEG-1 decoder.

Figure 1 – Model of the MPEG-1 standard

The structure adopted for MPEG-1 set the pattern for most MPEG standards:

- Part 1 – Systems specifies how to combine one or more audio and video data streams with timing information to form a single stream (link)

- Part 2 – Video specifies the video coding algorithm applied to so-called SIF video of ¼ the standard definition TV (link)

- Part 3 – Audio specifies the audio compression. Audio is stereo and can be compressed with 3 different performance “layers”: layer 1 is for entry-level digital audio, layer 2 for digital broadcasting and layer 3, aka MP3, for digital music. The MPEG-1 Audio layers were the predecessors of the profiles of MPEG-2 (and of most subsequent MPEG standards) (link)

- Part 4 – Compliance testing (link)

- Part 5 – Software simulation (link).

MPEG-2

MPEG-2 was a more complex beast to deal with. A digitised TV channel can yield 20-24 Mbit/s, depending on the delivery system (terrestrial/satellite broadcasting or cable TV). Digital stereo audio can take 0.2 Mbit/s and standard resolution video 4 Mbit/s (say a little less with more compression). Audio could be multichannel (say, 5.1) and hopefully consume less bitrate, for a total bitrate of a TV program of 4 Mbit/s. Hence the bandwidth taken by an analogue TV program can be used for 5-6 digital TV programs.
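The "5-6 programs" figure follows directly from the numbers in the paragraph above. A minimal sketch of the arithmetic, using only the values quoted in the text (the variable names are invented for this example):

```python
# How many digital TV programs fit in one analogue TV channel (illustrative).

channel_mbps_low, channel_mbps_high = 20, 24  # digitised channel capacity, from the text
program_mbps = 4                              # total bitrate of one TV program, from the text

programs_low = channel_mbps_low // program_mbps
programs_high = channel_mbps_high // program_mbps

print(f"{programs_low}-{programs_high} programs per channel")  # prints "5-6 programs per channel"
```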

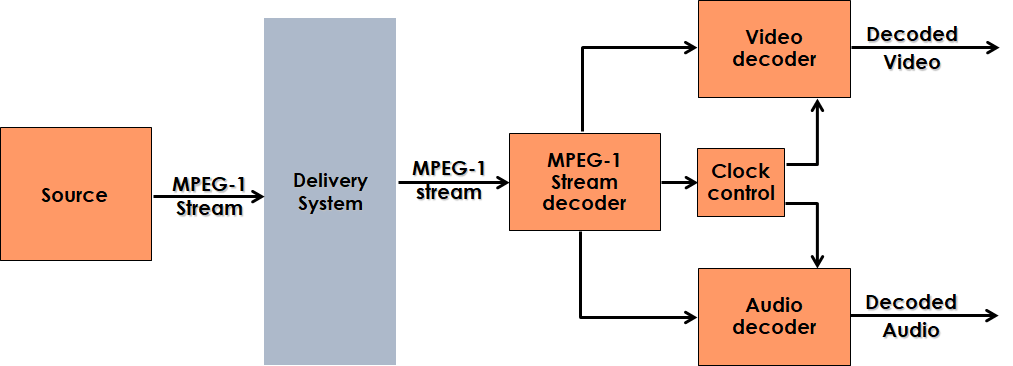

The fact that digital TV programs that are part of a multiplex may come from independent sources, and that digital channels in the real world are subject to errors, forced the design of an entirely different Systems layer for MPEG-2. The fact that users need to access other data sent in a carousel, that in an interactive scenario (with a return channel) there is a need for session management, and that a user may interact with a server forced MPEG to add a new stream for user-to-network and user-to-user protocols.

In conclusion, the MPEG-2 model is a natural extension of the MPEG-1 model (superficially, the only addition is the DSM-CC line, but the impact is more pervasive).

Figure 2 – Model of the MPEG-2 standard

The official title of MPEG-2 is Generic coding of moving pictures and associated audio information. It was originally intended for coding of standard definition television (MPEG-3 was expected to deal with coding of High Definition Television). As the work progressed, however, it became clear that a single format for both standard and high definition was not only desirable but possible. Therefore the MPEG-3 project never took off.

The standard is not specific to a video resolution (this was already the case for MPEG-1 Video) but rationalises the notions of profiles, i.e. assemblies of coding tools, and levels, a notion that applies to, say, resolution, bitrate etc. Profiles and levels have subsequently been adopted in most MPEG standardisation areas.
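One way to picture the two notions is as a pair of independent constraints on a decoder: the profile says which coding tools it must implement, the level caps parameters such as resolution and bitrate. The sketch below is a hypothetical model, not an excerpt from the standard: the class names, tool names and numeric limits are all placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    tools: frozenset  # coding tools a decoder of this profile implements


@dataclass(frozen=True)
class Level:
    name: str
    max_width: int
    max_height: int
    max_bitrate_mbps: float


@dataclass(frozen=True)
class Stream:
    tools_used: frozenset
    width: int
    height: int
    bitrate_mbps: float


def can_decode(profile: Profile, level: Level, stream: Stream) -> bool:
    """True if the stream uses only the profile's tools and stays within the level's limits."""
    return (
        stream.tools_used <= profile.tools
        and stream.width <= level.max_width
        and stream.height <= level.max_height
        and stream.bitrate_mbps <= level.max_bitrate_mbps
    )


# Placeholder values, NOT taken from the standard.
basic = Profile("basic", frozenset({"intra", "forward_prediction"}))
full = Profile("full", basic.tools | {"bidirectional_prediction"})
sd = Level("sd", max_width=720, max_height=576, max_bitrate_mbps=15.0)

stream = Stream(
    tools_used=frozenset({"intra", "forward_prediction", "bidirectional_prediction"}),
    width=720,
    height=576,
    bitrate_mbps=6.0,
)

print(can_decode(basic, sd, stream))  # False: the stream uses a tool the profile lacks
print(can_decode(full, sd, stream))   # True
```

Separating the two axes lets a simple device implement a small tool set at a low level while a high-end one implements more tools at a higher level, all within one standard.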

The standard is composed of 10 parts, some of which are

- Part 1 – Systems specifies the Systems layer to enable the transport of a multichannel digital TV stream on a variety of delivery media (link)

- Part 2 – Video specifies the video coding algorithm. Video is interlaced and may have a wide range of resolutions, with support for scalability and multiview in appropriate profiles (link)

- Part 3 – Audio specifies a multichannel audio coding algorithm that is backward compatible with MPEG-1 Audio. This means that an MPEG-1 Audio decoder is capable of extracting and decoding an MPEG-1 Audio bitstream from an MPEG-2 Audio bitstream (link)

- Part 6 – Extensions for DSM-CC specifies User-to-User and User-to-Network protocols for both broadcasting and interactive applications. For instance DSM-CC can be used to enable such functionalities as carousel or session set up (link)

- Part 7 – Advanced Audio Coding (AAC) specifies a non-backward-compatible multichannel audio coding algorithm. This was done because backward compatibility imposes too big a penalty for some applications, e.g. those that do not need backward compatibility (link). This was the first time MPEG was forced to develop two standards for apparently the same applications.

MPEG-4

MPEG-4 had the ambition of bringing interactive 3D spaces to every home. Media objects such as audio, video, 2D graphics were an enticing notion in the mid-1990’s. The WWW had shown that it was possible to implement interactivity inexpensively and the extension to media interactivity looked like it would be the next step. Hence the official title of MPEG-4 Coding of audio-visual objects.

This vision did not come true, and one could say that even today it is not entirely clear what interactivity is and what interactive media experience a user is seeking, assuming that just one exists.

Is this a signal that MPEG-4 was a failure?

- Yes, it was a failure, and so what? MPEG operates like a company. Its “audio-visual objects” product looked like a great idea, but the market thought differently.

- No, it was a success, because 6 years after MPEG-2, MPEG-4 Visual yielded some 30% improvement in terms of compression.

- Yes, it was a failure because a patent pool dealt a fatal blow with their “content fee” (i.e. “you pay royalties by the amount of time you stream”).

- No, it was a success because MPEG-4 has 34 parts, the largest number ever achieved by MPEG in a standard, which include some of the most foundational and successful standards such as the AAC audio coding format, the MP4 File Format, the Open Font Format and, of course, the still ubiquitous Advanced Video Coding (AVC) format, whose success was dictated not so much by the 20% more compression that it delivers compared to MPEG-4 Visual (always nice to have) as by the industry-friendly licence released by a patent pool. Most important, the development of most MPEG standards is driven by a vision. Therefore, users have a packaged solution available, but they can also take just the pieces that they need.

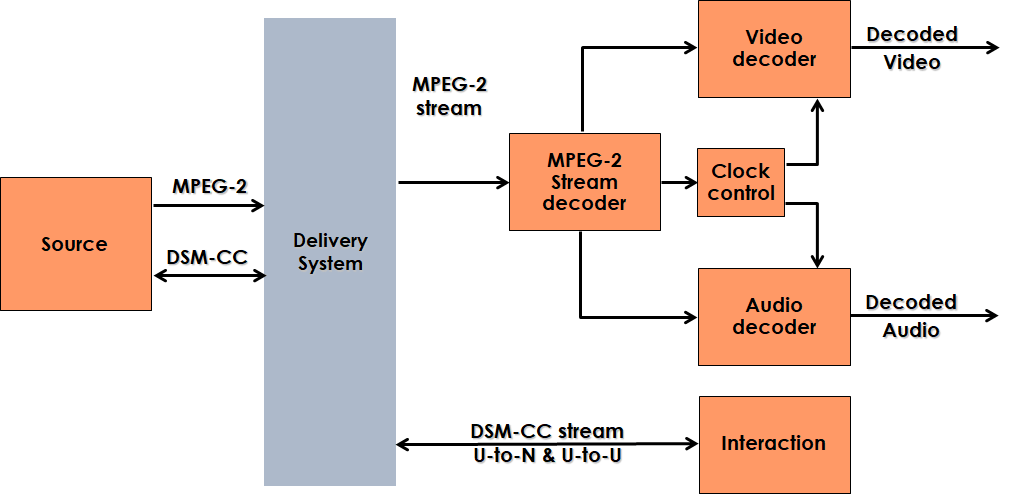

Figure 3 – Model of the MPEG-4 standard

An overview of the entire MPEG-4 standard is available here. The standard is composed of 34 parts, some of which are

- Part 1 – Systems specifies the means to interactively and synchronously represent and deliver audio-visual content composed of various objects (link)

- Part 2 – Visual specifies the coded representation of visual information in the form of natural objects (video sequences of rectangular or arbitrarily shaped pictures) and synthetic visual objects (moving 2D meshes, animated 3D face and body models, and texture) (link)

- Part 3 – Audio specifies a multi-channel perceptual audio coder with transparent quality compression of Compact Disc music coded at 128 kb/s that made it the standard of choice for many streaming and downloading applications (link)

- Part 6 – Delivery Multimedia Integration Framework (DMIF) specifies interfaces to virtualise the network

- Part 9 – Reference hardware description specifies the VHDL representation of MPEG-4 Visual (link)

- Part 10 – Advanced Video Coding adds another 20% of performance to part 2 (link)

- Part 11 – Scene description and application engine provides a time dependent interactive 3D environment building on VRML (link)

- Part 12 – ISO base media file format specifies a file format that has been enriched with many functionalities over the years to satisfy the needs of the multiple MPEG client industries (link)

- Part 16 – Animation Framework eXtension (AFX) specifies a range of 3D Graphics technologies, including 3D mesh compression (link)

- Part 22 – Open Font Format (OFF) is the result of the MPEG effort that took over an industry initiative (OpenType font format specification), brought it under the folds of international standardisation and expanded/maintained it in response to evolving industry needs (link)

- Part 29 – Web video coding (WebVC) specifies the Constrained Baseline Profile of AVC in a separate document

- Part 30 – Timed text and other visual overlays in ISO base media file format supports applications that need to overlay other media to video (link)

- Part 31 – Video coding for browsers (VCB) specifies a video compression format (unpublished)

- Part 33 – Internet Video Coding (IVC) specifies a video compression format (link).

Parts 29, 31 and 33 are the results of 3 attempts made by MPEG to develop Option 1 Video Coding standards with a good performance. None reached the goal because ISO rules allow a company to make a patent declaration without specifying which patented technology it alleges to be affected by a standard. The patented technologies could not be removed because MPEG did not have a clue about which were the allegedly infringing technologies.

MPEG-7

In the late 1990s the industry had been captured by the vision of “500 channels” and telcos thought they could offer interactive media services. With MPEG-1 and MPEG-2 then being deployed, and with MPEG-4 under development, MPEG expected that users would have zillions of media items.

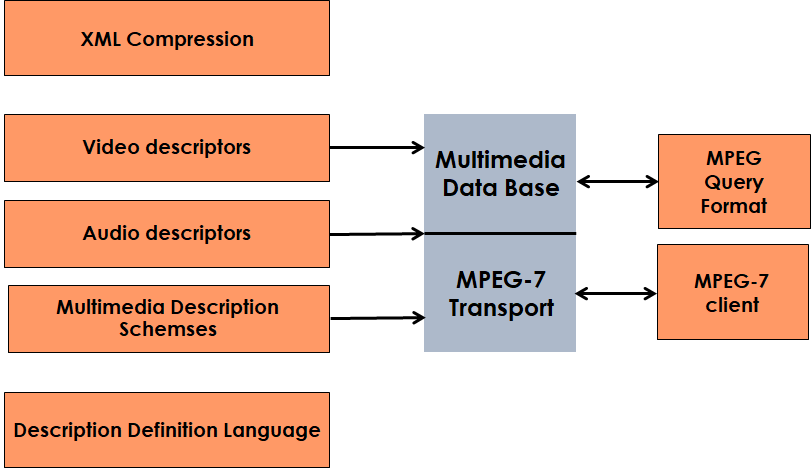

MPEG-7 started with the idea of providing a standard that would enable users to find the media content of their interest in a sea of media content. MPEG-7 definitely deviates from the logic of the previous two standards, and the technologies used reflect that, because it provides formats for data (called metadata) extracted from multimedia content to facilitate searching in multimedia items. As shown in the figure, metadata can be classified as Descriptions (metadata extracted from the media items, especially audio and video) and Description Schemes (compositions of descriptions). The figure also shows two additional key MPEG-7 technologies. The first is the Description Definition Language (DDL) used to define new Descriptors and the second is XML Compression. With Descriptions and Description Schemes represented in verbose XML, it is clear that MPEG needed a technology to effectively compress XML.

Figure 4 – Components of the MPEG-7 standard
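To get a feel for why verbose XML calls for compression, the sketch below builds a small metadata document and compresses it. Everything here is an assumption made for illustration: the element names are invented and are not the MPEG-7 schema, and the general-purpose zlib compressor is used only as a stand-in, not as the XML compression method that MPEG-7 specifies.

```python
import zlib

# Hypothetical, non-normative XML: element names are invented for this example.
item = (
    "<MediaItem>"
    "<Title>Clip {i}</Title>"
    '<DominantColour r="120" g="64" b="32"/>'
    "<DurationSeconds>{d}</DurationSeconds>"
    "</MediaItem>"
)
document = (
    "<Collection>"
    + "".join(item.format(i=i, d=10 + i) for i in range(200))
    + "</Collection>"
)

raw = document.encode("utf-8")
packed = zlib.compress(raw, 9)  # generic compressor as a stand-in (assumption)

print(f"XML size:        {len(raw)} bytes")
print(f"Compressed size: {len(packed)} bytes")
print(f"Lossless:        {zlib.decompress(packed) == raw}")
```

Most of the bytes in such a document are repeated tag names, which is why even a generic compressor shrinks it considerably; a scheme that knows the schema can exploit that structure further.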

An overview of the entire MPEG-7 standard is available here. The official title of MPEG-7 is Multimedia content description interface and the standard is composed of 16 parts, some of which are:

- Part 1 – Systems has functions similar to those of Part 1 of previous standards. In addition, it specifies a compression method for XML schemas used to represent MPEG-7 Descriptions and Description Schemes.

- Part 2 – Description definition language breaks the traditional Systems-Video-Audio sequence of previous standards to provide a language to describe descriptions (link)

- Part 3 – Visual specifies a wide variety of visual descriptors such as colour, texture, shape, motion etc. (link)

- Part 4 – Audio specifies a wide variety of audio descriptors such as signature, instrument timbre, melody description, spoken content description etc. (link)

- Part 5 – Multimedia description schemes specifies description tools that are not visual and audio ones, i.e., generic and multimedia description tools such as description of the content structural aspects (link)

- Part 8 – Extraction and use of MPEG-7 descriptions explains how MPEG-7 descriptions can be practically extracted and used

- Part 12 – Query format defines a format to query multimedia repositories (link)

- Part 13 – Compact descriptors for visual search specifies a format that can be used to search images (link)

- Part 15 – Compact descriptors for video analysis specifies a format that can be used to analyse video clips (link).

MPEG-21

In 1999 MPEG understood that its technologies were having a disruptive impact on the media business. MPEG thought that the industry should not fend off a new threat with old repressive tools. The industry should convert the threat into an opportunity, but there were no standard tools to do that.

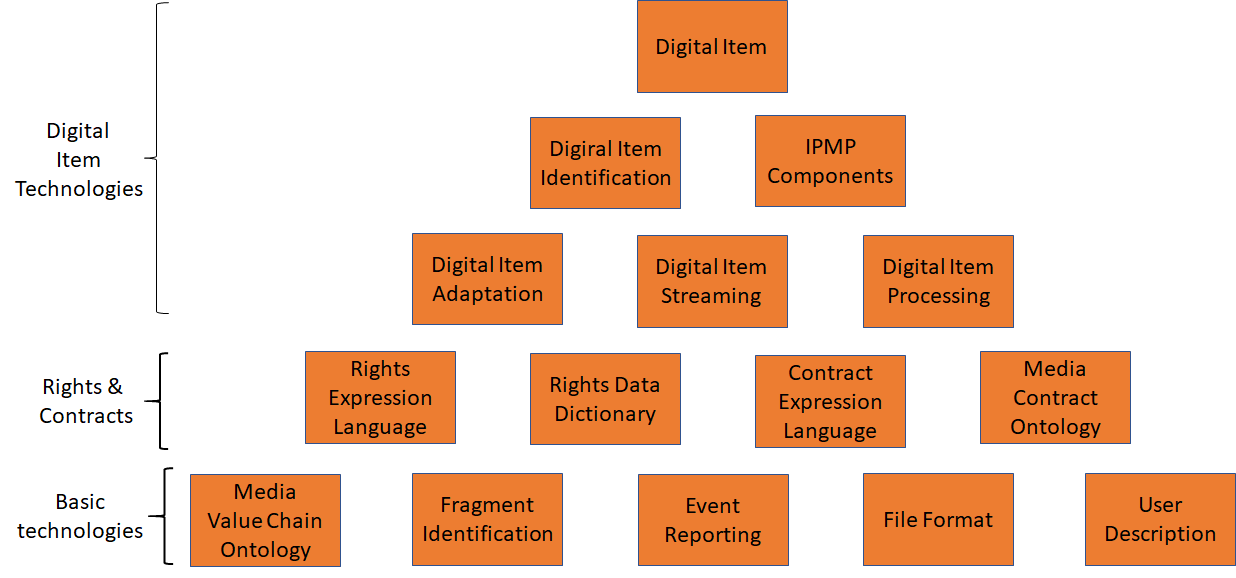

MPEG-21 is the standard resulting from the effort by MPEG to create a framework that would facilitate electronic commerce of digital media. It is a suite of specifications for end-to-end multimedia creation, delivery and consumption that can be used to enable open media markets.

This is represented in the figure below. The basic MPEG-21 element is the Digital Item, a structured digital object with a standard representation, identification and metadata, around which a number of specifications were developed. MPEG-21 also includes specifications of Rights and Contracts and basic technologies such as the file format.

Figure 5 – Components of the MPEG-21 standard

An overview of the entire MPEG-21 standard, whose official title is Multimedia Framework, is available here. Some of the 21 MPEG-21 parts are briefly described below:

- Part 2 – Digital Item Declaration specifies the Digital Item (link)

- Part 3 – Digital Item Identification specifies identification methods for Digital Items and their components (link)

- Part 4 – Intellectual Property Management and Protection (IPMP) Components specifies how to include management and protection information and protected parts in a Digital Item (link)

- Part 5 – Rights Expression Language specifies a language to express rights (link)

- Part 6 – Rights Data Dictionary specifies a dictionary of rights-related data (link)

- Part 7 – Digital Item Adaptation specifies description tools to enable optimised adaptation of multimedia content (link)

- Part 15 – Event Reporting specifies a format to report events (link)

- Part 17 – Fragment Identification of MPEG Resources specifies a syntax for URI Fragment Identifiers (link)

- Part 19 – Media Value Chain Ontology specifies an ontology for Media Value Chains (link)

- Part 20 – Contract Expression Language specifies a language to express digital contracts (link)

- Part 21 – Media Contract Ontology specifies an ontology for media-related digital contracts (link).

Conclusions

The standards from MPEG-1 to MPEG-21 contain 86 specifications covering the entire 30 years of MPEG activity. They should give a rough idea of how MPEG started from the vision of single standards for all industries belonging to what we can call today the “media industry” and has kept on adapting – without disowning – its vision. The original vision has been a seed that has grown – and continues to grow – into a tree. MPEG keeps track of the evolution of technologies, to provide more efficient standards, and of the needs of the industry, which it serves with refurbished old standards and brand-new ones.
