Introduction

Last week I published the Executive summary of my book A vision made real – Past, present and future of MPEG as an article on this blog. This time I publish the first chapter of the book, which covers four aspects of the media distribution business and their enabling technologies:

- Analogue media distribution describes the vertical businesses of analogue media distribution;

- Digitised media describes media digitisation and why it was largely irrelevant to distribution;

- Compressed digital media describes how industry tried to use compression for distribution;

- Digital technologies for media distribution describes the potential structural impact of compressed digital media for distribution.

Analogue media distribution

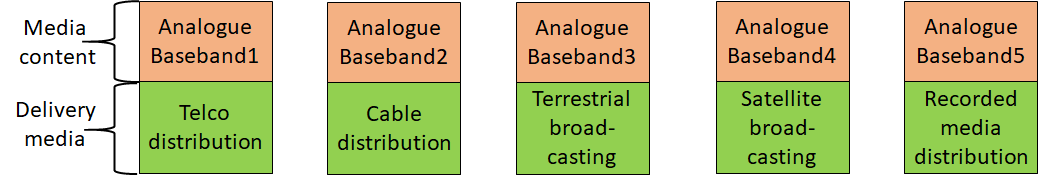

In the 1980s media were analogue, the sole exception being music on compact disc (CD). Different industries were engaged in the business of distributing media: telecommunication companies distributed music, cable operators distributed television via cable, terrestrial and satellite broadcasters did the same via terrestrial and satellite networks, and different types of businesses distributed all sorts of recorded media on physical supports (film, laser discs, compact cassette, VHS/Betamax cassette, etc.).

Even if the media content was exactly the same, say a movie, the baseband signals that represented it were all different and specific to the delivery medium: film for theatrical projection, television for the terrestrial or satellite network or for cable, and a different format for video cassette. On top of these technological differences caused by the physical nature of the delivery media, there were often substantial differences that depended on countries or manufacturers.

Figure 1 depicts the vertical businesses of the analogue world, when media distribution was a collection of industry-dependent distribution systems, each using its own technologies for the baseband signal. The figure is simplified because it does not take into account the country- or region-based differences within each industry.

Figure 1 – Analogue media distribution

Digitised media

Since the 1930s the telecom industry had investigated digitisation of signals (speech at that time). In the 1960s technology could support digitisation and ITU created G.711, the standard for digital speech, i.e. analogue speech sampled at 8 kHz with nonlinear 8-bit quantisation. For several decades digital speech only existed in the (fixed) network, but few were aware of it because the speech did not leave the network as bits.

It was necessary to wait until 1982 for Philips and Sony to develop the Compact Disc (CD), which carried digital stereo audio as specified in the “Red Book”: analogue stereo audio sampled at 44.1 kHz with 16-bit linear quantisation. It was a revolution because consumers could have an audio quality that did not deteriorate with time.

In 1980 a digital video standard was issued by ITU-R. The luminance and the two colour-difference signals were sampled at 13.5 and 6.75 MHz, respectively, at 8 bits per sample, yielding an exceedingly high bitrate of 216 Mbit/s. It was a major achievement, but digital television never left the studio except on bulky magnetic tapes.

The network could carry the 64 kbit/s of digital speech, but no consumer-level delivery medium of that time could carry the 1.41 Mbit/s of digital audio, much less the 216 Mbit/s of digital video. Therefore, in the 1960s studies on compression of digitised media began in earnest.
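The three bitrates quoted above follow directly from the sampling parameters given in this section. The short Python sketch below reproduces the arithmetic; the function and variable names are illustrative only and do not come from any of the standards mentioned.

```python
def pcm_bitrate(sample_rate_hz: int, bits_per_sample: int, channels: int = 1) -> int:
    """Uncompressed bitrate in bit/s: samples per second x bits per sample x channels."""
    return sample_rate_hz * bits_per_sample * channels


# Digital speech (G.711): 8 kHz sampling, 8 bits per sample, one channel.
speech_bps = pcm_bitrate(8_000, 8)

# CD audio ("Red Book"): 44.1 kHz sampling, 16 bits per sample, two channels.
cd_audio_bps = pcm_bitrate(44_100, 16, channels=2)

# Digital video: luminance at 13.5 MHz plus two colour-difference
# signals at 6.75 MHz each, all at 8 bits per sample.
video_bps = pcm_bitrate(13_500_000, 8) + pcm_bitrate(6_750_000, 8, channels=2)

print(f"speech:   {speech_bps / 1e3:.0f} kbit/s")
print(f"CD audio: {cd_audio_bps / 1e6:.2f} Mbit/s")
print(f"video:    {video_bps / 1e6:.0f} Mbit/s")
```

The gap between the first figure and the other two is what made compression a precondition for delivering digital audio and video to consumers.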

Compressed digital media

In the 1980s compression research yielded its first fruits:

- In 1980 ITU approved Recommendation T.4: Standardization of Group 3 facsimile terminals for document transmission. In the following decades hundreds of millions of Group 3 facsimile devices were installed worldwide because, thanks to compression, the transmission time of an A4 sheet was cut from 6 min (Group 1 facsimile) or 3 min (Group 2 facsimile) to about 1 min.

- In 1982 ITU approved H.100 (11/88) Visual telephone systems for transmission of videoconference at 1.5/2 Mbit/s. Analogue videoconferencing was not unknown at that time because several companies had run trials, but many hoped that H.100 would enable widespread business communication.

- In 1984 ITU started the standardisation activity that would give rise to Recommendation H.261: Video codec for audio-visual services at p × 64 kbit/s, approved in 1988.

- In the mid-1980s several CE laboratories were studying digital video recording for magnetic tapes. One example was the European Digital Video Recorder (DVS) project, which people expected would provide a higher-quality alternative to the analogue VHS or Betamax videocassette recorder, much as CDs were supposed to be a higher-quality alternative to LP records.

- Still in the area of recording, but for a radically new type of application – interactive video on compact disc – Philips and RCA were independently studying methods to encode video signals at bitrates of 1.41 Mbit/s (the output bitrate of CD).

- In the same years CMTT, a special Group of the ITU dealing with transmission of radio and television programs on telecommunication networks, had started working on a standard for transmission of digital television for “primary contribution” (i.e. transmission between studios).

- In 1987 the Advisory Committee on Advanced Television Service was formed to devise a plan to introduce HDTV in the USA, and Europe was doing the same with its HD-MAC project.

- At the end of the 1980s RAI and Telettra had developed an HDTV codec for satellite broadcasting that was used for demonstrations during the Soccer World Cup in 1990, and General Instrument had shown its Digicipher II system for terrestrial HDTV broadcasting in the 6 MHz bandwidth used by American terrestrial television.

Digital technologies for media distribution

The above shows how companies, industries and standards committees were jockeying for position in the upcoming digital world. These disparate and often uncorrelated initiatives betrayed the mindset that guided them: future distribution of digital media would be arranged much like the analogue distribution sketched in Figure 1. The “baseband signal” of each delivery medium would be digital, and thus use new technology, but it would be different for each industry and possibly for each country/region.

In the analogue world these scattered roles and responsibilities were not particularly harmful because the delivery media and the baseband signals were so different that unification had never been attempted. But in the digital world unification made a lot of sense.

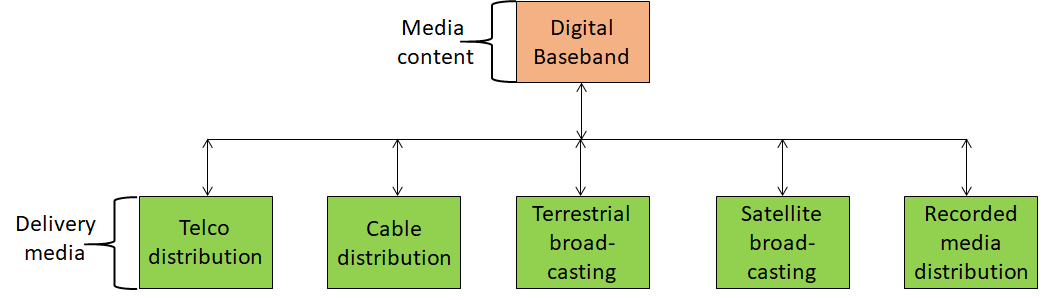

MPEG was conceived as the organisation that would achieve unification and provide generic, i.e. domain-independent, digital media compression. In other words, MPEG envisaged the completely different setup depicted in Figure 2.

Figure 2 – Digital media distribution (à la MPEG)

In retrospect that was a daunting task. Had its magnitude been realised, the work would probably never have started.
