Introduction

In my post Compression – the technology for the digital age, I called data compression “the enabler of the evolving media-rich communication society that we value”. Indeed, data compression has freed the potential of digital technologies in facsimile, speech, photography, music, television, video on the web, on mobile, and more.

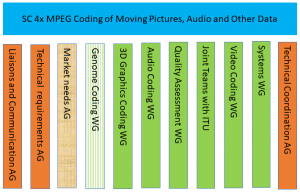

MPEG has been the main contributor to the stellar performance of the digital media industries: content, services and devices – hardware and software. Something new is brewing in MPEG: it is applying its compression toolkit to non-media data such as point clouds and DNA reads from high-speed sequencing machines, and it plans to do the same for neural networks.

Recently UNI – the Italian ISO member body – has submitted to ISO the proposal to create a Technical Committee on Data Compression Technologies (DCT, in the following) with the mandate to develop standards for data compression formats and related technologies to enable compact storage as well as inter-operable and efficient data interchange among users, applications and systems. MPEG activities, standards and brand should be transferred to DCT.

With its track record, MPEG has proved that it is possible to provide standard data compression technologies that are the best in their class at a given time to serve the needs of the digital media industries. The DCT proposal aims to extend the successful MPEG “horizontal standards” model to the “data industries” at large, while of course retaining the media industries.

Giving all industries the means to benefit from accessing and using more data, by systematically applying standard data compression to all data types, is not an option but a necessity, as Forbes shows: estimates indicate that by 2025 the world will produce 163 Zettabytes of data. What will we do with those data, when today only 1% of the data created is actually processed?

Why Data Compression is important to all

Handling data is important for all industries: in some cases it is their raison d’être, in other cases it is crucial to achieve the goal and in still others data is the oil lubricating the gears.

Data appear in manifold scenarios: in some cases a few sources create huge amounts of continuous data, in other cases many sources create large amounts of data and in still others each of a very large number of sources creates small discontinuous chunks of data.

Common to all scenarios is the need to store, process and transmit data. For some industries, such as telecommunication and broadcasting, early adopters of digitisation, the need was apparent from the very beginning. For others the need is gradually becoming apparent now.

Let’s look at some representative examples of why industries need data compression.

Telecommunication. Because of the nature of their business, telecommunication operators (telcos) were the first to feel the need to reduce the size of digital data in order to improve existing services and/or offer attractive new ones. Today telcos are eager to make their networks available to new sources of data.

Broadcasting. Because of the constraints posed by the finite wireless spectrum on their ability to expand their quality and range of services, broadcasters have always welcomed more data compression. They have moved from Standard Definition to High Definition, then to Ultra High Definition and beyond (“8k”), and also to Virtual Reality. For each quantum step in the quality of service delivered, they have introduced new compression technology. More engaging future user experiences will require the ability to transmit or receive ever more data, and ever more types of data.

Public security. MPEG standards are already universally used to capture audio and video information for security or monitoring purposes. However, technology progress enables users to embed more capabilities in (audio and video) sensors, e.g. face recognition and counting of people and vehicles, and to share that information across a network of increasingly intelligent sensors that drive actuators. New standard data compression technologies are needed to support the evolution of this domain.

Big Data. In terms of data volume, audio and video, e.g. those collected by security devices or vehicles, are probably the largest component of Big Data, as shown by the Cisco study forecasting that by 2021 video on the internet will account for more than 80% of total traffic. Moving such large amounts of information from the source to the processing cloud economically requires data compression, and processing them requires standards that allow the data to be processed independently of the information source.

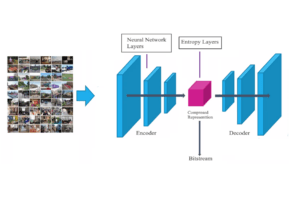

Artificial intelligence uses different types of neural networks, some of which are “large”, i.e. they occupy many Gigabytes and require massive computation. To practically move intelligence across networks, as required by many consumer and professional use scenarios, standard data compression technologies are needed. Compression of neural networks is not only a matter of bandwidth and storage memory, but also of power consumption, timeliness and usability of intelligence.

Healthcare. The healthcare world is already using genomics, but many areas will greatly benefit from a 100-fold reduction in the size of, and the time to access, the data of interest. In particular, compression will accelerate the coming of age of personalised medicine. As healthcare is often a public policy concern, standard data compression technologies are required.

Agriculture and Food. Almost anything related to agriculture and food has a genomic source. The ability to easily process genomic data thanks to compression opens enormous possibilities to have better agriculture and better food. To make sure that compressed data can be exchanged between users, standardised data compression technologies are required.

Automotive. Vehicles are becoming more and more intelligent devices that drive and control their movement by communicating with other vehicles and fixed entities, sensing the environment, and storing the data for future use (e.g., for assessing responsibilities in a car crash). Data compression technologies are required and, especially when security is involved, the technologies must be standard.

Industry 4.0. The 4th industrial revolution is characterised by “Connection between physical and digital systems, complex analysis through Big Data and real-time adaptation”. Collaborative robots and 3D printing, the latter also for consumer applications, are key components of Industry 4.0. Again, data compression technologies are vital to make Industry 4.0 fly and, to support multi-stakeholder scenarios, the technologies should be standard.

Business documents. Business documents are becoming more diverse and include different types of media. Storing and transmitting business documents becomes a concern when they contain bulky data. Standard data compression technologies are the principal way to reduce the size of business documents now, and will be even more so in the future.

Geographic information. Personal devices consume more and more geographic information and, to provide more engaging user experiences, the information itself is becoming “richer”, which typically means “heavier”. To manage this amount of data, data compression technologies must be applied. Global deployment to consumers requires that the technologies be standard.

Blockchains and distributed ledgers enable a host of new applications. Because information is distributed and stored across many nodes of the network, the total amount of stored data grows, hence the need for data compression technologies. These new global distributed scenarios require that the technologies be standard.

Which Data Compression standards?

Data compression is needed if we want to be able to access all the information produced or available anywhere in the world. However, as the amount of data and the number of people accessing it grow, new generations of compression standards are needed. In the case of video, MPEG has already produced five generations of compression standards and one more is under development. In the case of audio, five generations of compression standards have already been produced, with the fifth incorporating extensive use of metadata to support personalisation of the user experience.

MPEG compression technologies have had, and continue to have, extraordinarily positive effects on a range of industries, with billions of hardware devices and software applications that use standards for compressing and streaming audio, video, 3D graphics and associated metadata. The universally recognised MP3 and MP4 acronyms demonstrate the impact that data compression technologies have on consumer perception of digital devices and services around the world.

Non-interoperable silos, however, are the biggest danger in this age of fast industry convergence, and only international standards based on common data compression technologies can avoid them. Point Clouds and Genomics show that data compression technologies can indeed be re-used for different data types from different industries. Managing different industry requirements is an art, and MPEG has developed it over 30 years of dealing with industries such as telecom, broadcasting, consumer electronics, IT, media content and service providers and, more recently, bioinformatics. DCT can safely take up the challenge and do the same for more industries.

How to develop Data Compression standards?

As MPEG has done for the industries it already serves, DCT should only develop “generic” international standards for compression and coded representation of data and related metadata suitable for a variety of application domains so that the client communities can use them as components for integration in their systems.

The process adopted by MPEG should also be adopted by DCT, namely:

- Identification of data compression requirements (jointly with the target industry)

- Development of the data compression standard (in consultation with the target industry)

- Verification that the standard satisfies the agreed requirements (jointly with the target industry)

- Development of test suites and tools (in consultation with the target industry)

- Maintenance of the standards (upon request of the target industry).

Data Compression is a very specialised field that many technical and business communities in specific domains are ill-equipped to master satisfactorily. Even if an industry succeeds in attracting the necessary expertise, the following will likely happen:

- The result is less than optimal compared to what could have been obtained from the best experts;

- The format developed is incompatible with other similar formats with unexpected inter-operability costs in an era of convergence;

- The implementation cost of the format is too high because an industry may be unable to offer sufficient returns to developers;

- Test suites and tools cannot be developed because a systematic approach cannot be improvised;

- The experts who have developed the standard are no longer around to ensure its maintenance.

Building the DCT work plan

The DCT work plan will be decided by the National Bodies joining it. However, the following is a reasonable estimate of what that work plan will be.

Data compression for Immersive Media. This is a major MPEG project that currently comprises systems support for immersive media; video compression; metadata for immersive audio experiences; immersive media metrics; immersive media metadata; and network-based media processing (NBMP). A standard for systems support (OMAF) has already been produced, a standard for NBMP is planned for 2019, a video standard for 2020 and an audio standard for 2021. After completing the existing work plan, DCT should address the promising light field and audio field compression domains to enable truly immersive user experiences.

Data compression for Point Clouds. This is a new, but already quite advanced, area of work for MPEG. It makes use of established MPEG video and 3D graphics technologies to provide solutions for entertainment and other domains such as automotive. The first standard will be approved in 2019, but DCT will also work on new generations of point cloud compression standards for delivery in the early 2020s.

Data compression for Health Genomics. This is the first entirely non-media field addressed by MPEG. In October 2018 the first two parts – Transport and Compression – will be completed, and the other three parts – API, Software and Conformance – will be released in 2019. The work is done in collaboration with ISO/TC 276 Biotechnology. Studies for a new generation of compression formats will start in 2019, and DCT will need to drive those studies to completion, along with work on other data types generated by the “health” domain for which data compression standards can be envisaged.

Data compression for IoT. MPEG is already developing standards in the specific “media” instance of IoT called “Internet of Media Things” (IoMT). This partly relies on the MPEG standard called MPEG-V – Sensors and Actuators Data Coding, which defines a data compression layer that can support different types of data from different types of “things”. The first generation of standards will be released in 2019. DCT will need to liaise with the relevant communities to drive the development of new generations of IoT compression standards.

Data compression for Neural Networks. Several MPEG standards already employ, or will soon employ, neural network technologies to implement certain functionalities. A “Call for Evidence” was issued in July 2018 to assess the state of compression technologies for neural networks; a “Call for Proposals” will then be issued to obtain the necessary technologies and develop a standard. The first neural network compression standard can be estimated for the end of 2021. However, DCT will need to investigate which other compression standards are needed in this extremely dynamic field.

Data compression for Big Data. MPEG has already adapted the ISO Big Data reference model for its “Big Media Data” needs. Specific work has not begun yet and DCT will need to get the involvement of relevant communities, not just in the media domain.

Data compression for health devices. MPEG has considered the need for compression of data generated by mobile health sensors in wearable devices and smartphones to cope with their limited storage, computing, network connectivity and battery. DCT will need to get the involvement of relevant communities and develop data compression standards for health devices that promote their effective use.

Data compression for Automotive. One of the point cloud compression use cases – efficient storage of the environment captured by sensors on a vehicle – is already supported by the Point Cloud Compression standard under development. There are, however, many more types of data that are generated, stored and transmitted inside and outside of a vehicle for which data compression has positive effects. DCT can offer its expertise to the automotive domain to achieve new levels of efficiency, safety and comfort in vehicles.

The list above includes standards that MPEG is already deeply engaged in or already working on. However, the range of industries that can benefit from data compression standards is much broader than those mentioned above (see e.g. Why Data Compression is important to all), and the main role of DCT will be to actively investigate the data compression needs of industries, get in contact with them and jointly explore new opportunities for standard development.

Is DCT justified?

The purpose of DCT is to make data compression standards, the key enabler of devices, services and applications generating digital data, accessible to industries and communities that do not have the extremely specialised expertise needed to develop and maintain such standards on their own.

The following collects the key justifications for creating DCT:

- Data compression is an enabling technology for any digital data. Data compression has been a business enabler for media production and distribution, telecommunication, and Information and Communication Technologies (ICT) in general by reducing the cost of storing, processing and transmitting digital data. Therefore, data compression will also facilitate enhanced use of digital technologies in other industries that are undergoing – or completing – the transition to digital. As happened for media, by lowering the threshold of access to business, in particular for SMEs, data compression will drive industries to create new business models that will change the way companies generate, store, process, exchange and distribute data.

- Data compression standards trigger virtuous circles. By reducing the amount of data required for transmission, data compression will enable more industries to become digital. Being digital will generate more data and, in turn, further increase the need for data compression standards. Because compressed digital data are “liquid” and easily cross industry borders, “horizontal”, i.e. “generic”, data compression standards are required.

- Data compression standards remove closed-ecosystem bottlenecks. In closed environments, industry-specific data compression methods are possible. However, digital convergence is driving an increase in data exchange across industry segments. Therefore, industry-specific standards will result in unacceptable bottlenecks caused by a lack of interoperability. Reliable, high-performance and fully maintained data compression standards will help industries avoid the pitfalls of closed ecosystems that limit long-term growth potential.

- Sophisticated technology solutions for proven industry needs. Data compression is a highly sophisticated technology field with 50+ years of history. Creating efficient data compression standards requires a body of specialists that a single industry can ill afford to establish and, more importantly, maintain. DCT will ensure that the needs for specific data compression standards can always be satisfied by a body of experts who identify requirements with the target industries, develop standards, test for satisfactory support of requirements, produce testing tools and suites, and maintain the standards over the years.

- Data compression standards to keep the momentum growing. The industries that have most intensely digitised their products and services prove that their growth is due to their adoption of data compression standards. DCT will offer other industries and communities the means to achieve the same goal with the best standards, compatible with other formats to avoid interoperability costs in an age of convergence, with reduced implementation costs because suppliers can serve a wide global market, and with the necessary conformance testing and maintenance support.

- Data compression standards with cross-domain expertise. While the nature of “data” differs depending on the source of data, the MPEG experience has shown that compression expertise transfers well across domains. A good example is MPEG’s Genome Compression standard (ISO/IEC 23092), where MPEG compression experts work with domain experts, combining their respective expertise to produce a standard that is expected to be widely used by the genomic industry. This is the model that will ensure sustainability of a body of data compression experts while meeting the requirements of different industries.

- Proven track record, not a leap in the dark. MPEG has 1400 accredited experts, has produced 175 digital media-related standards used daily by billions of people and collaborates with other communities (currently genomics, point clouds and artificial intelligence) to develop non-audiovisual compression standards. Thirty years of successful projects prove that the MPEG-inspired method proposed for DCT works. DCT will have adequate governance and structure to handle relationships with many disparate client industries with specific needs and to develop data compression standards for each of them. With expanding industry support, a track record, a solid organisation and governance, DCT will have the means to accomplish the mission of serving a broad range of industries and communities with its data compression standards.

Conclusion

According to the ISO directives, these are the steps required to establish a new Technical Committee:

- An ISO member body submits a proposal (done by Italy)

- The ISO Central Secretariat releases a ballot (end of August)

- All ISO member bodies vote on the proposal (during 12 weeks)

- If ISO member bodies accept the proposal the matter is brought to the Technical Management Board (TMB)

- The TMB votes on the proposal (26 January 2019)

An (optimistic?) estimate for the process to end is spring 2019.

Send your comments to leonardo@chiariglione.org

Posts in this thread (in bold this post)

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

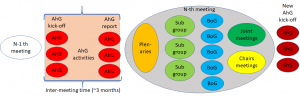

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- **Compression standards for the data industries**

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age

- On my Charles F. Jenkins Lifetime Achievement Award

- Standards for the present and the future