Introduction

Artificial intelligence has caught the attention of the mass media, and the technologies supporting it, Neural Networks (NN), are being deployed in several contexts affecting end users, e.g. in their smartphones.

If a NN is used locally, it is possible to use existing digital representations of NNs (e.g., NNEF, ONNX). However, these formats lack vital features for distributing intelligence, such as compression, scalability and incremental updates.

To appreciate the need for compression, let’s consider the case of adjusting the automatic mode of a camera based on recognition of the scene or object, obtained by using a properly trained NN. As this area is intensely investigated, very soon there will be a new, better trained version of the NN or a new NN with additional features. However, as the process of creating the necessary “intelligence” usually takes time and labor (skilled and unskilled), in most cases the newly created intelligence must be moved from the center to where the user handset is. With today’s NNs reaching a size of several hundred Mbytes and growing, a scenario where millions of users clog the network because they are all downloading the latest NN with great new features looks likely.
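To get a feel for the scale of the problem, the figures can be put into a back-of-the-envelope calculation. The model size (300 Mbytes), the number of users (5 million) and the compression factor (10) are assumptions chosen for illustration only; they do not come from the MPEG documents.

```python
# Back-of-the-envelope estimate of the traffic generated by one NN update.
# All figures are illustrative assumptions, not measurements.

MODEL_SIZE_MB = 300      # assumed size of one NN ("several hundred Mbytes")
USERS = 5_000_000        # assumed number of handsets fetching the update
COMPRESSION_RATIO = 10   # hypothetical compression factor


def total_traffic_tb(model_size_mb: float, users: int, ratio: float = 1.0) -> float:
    """Return the total download volume in terabytes (1 TB = 10**6 MB)."""
    return model_size_mb / ratio * users / 1_000_000


uncompressed_tb = total_traffic_tb(MODEL_SIZE_MB, USERS)
compressed_tb = total_traffic_tb(MODEL_SIZE_MB, USERS, COMPRESSION_RATIO)

print(f"Uncompressed: {uncompressed_tb:.0f} TB")
print(f"Compressed {COMPRESSION_RATIO}x: {compressed_tb:.0f} TB")
```

Under these assumptions a single update moves about 1,500 TB across the network, and a tenfold compression would bring that down to about 150 TB.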

This article describes some elements of the MPEG work plan to develop one or more standards that enable compression of neural networks. Those wishing to know more please read Use cases and Requirements, and Call for Proposals.

About Neural Networks

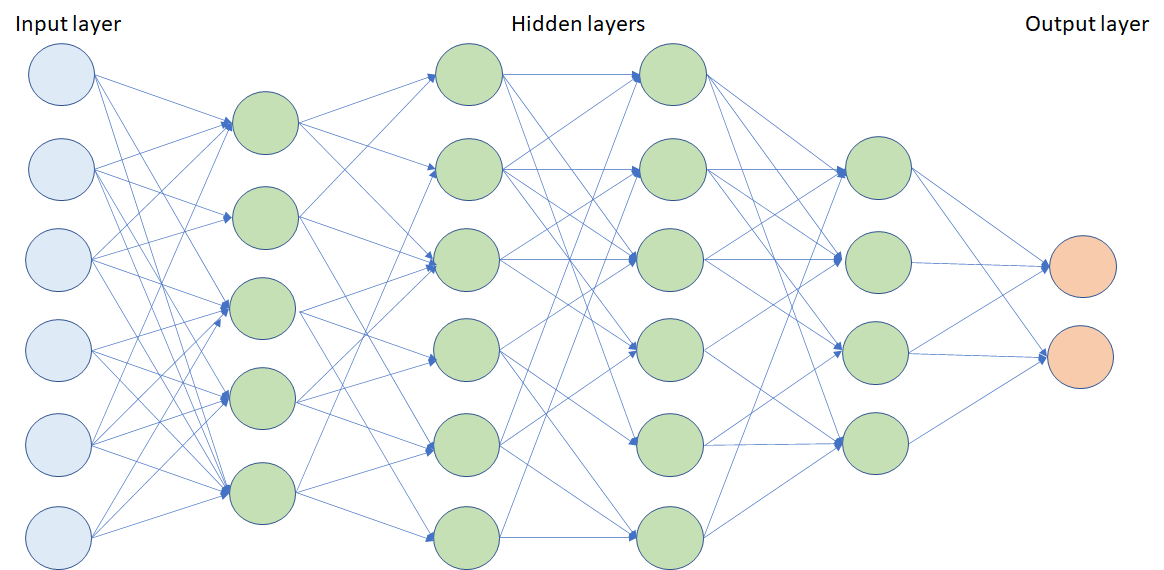

A Neural Network is a system composed of connected nodes, each of which can:

- Receive input signals from other nodes,

- Process them and

- Transmit an output signal to other nodes.
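The three steps above can be sketched for a single node and for a layer of nodes. The processing step used here, a weighted sum followed by a sigmoid, is a common choice assumed for illustration; the names `node_output` and `layer_output` are hypothetical.

```python
import math
from typing import List, Sequence


def node_output(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Receive input signals, process them and return the output signal.

    Processing is assumed to be a weighted sum followed by a sigmoid.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-weighted_sum))


def layer_output(
    inputs: Sequence[float],
    weight_rows: Sequence[Sequence[float]],
    biases: Sequence[float],
) -> List[float]:
    """Apply every node of a layer to the same input signals."""
    return [node_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


# A layer of two nodes fed with three input signals; its outputs would be
# transmitted to the nodes of the next layer.
signals = [0.5, -1.0, 2.0]
hidden = layer_output(signals, [[0.1, 0.2, 0.3], [-0.3, 0.1, 0.0]], [0.0, 0.1])
print(hidden)
```

The weights and biases are exactly the data that a compressed representation of the NN has to carry.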

Nodes are typically aggregated into layers, each performing different functions. Typically the “first layers” are rather specific to the type of signal (audio, video, various forms of text information etc.). Nodes can send signals to subsequent layers but, depending on the type of network, also to preceding layers.

Training is the process of “teaching” a network to do a particular job, e.g. recognising a particular object or a particular word. This is done by presenting to the NN data from which it can “learn”. Inference is the process of presenting to a trained network new data to get a response about what the new data is.

When is NN compression useful?

Compression is useful whenever there is a need to distribute NNs to remotely located devices. Depending on the specific use case, compression should be accompanied by other features. Two major use cases are analysed below.

Public surveillance

In 2009 MPEG developed the Surveillance Application Format. This is a standard that specifies the package (file format) containing audio, video and metadata to be transmitted to a surveillance center. Today, however, it is possible to ask the surveillance network to do more intelligent things by distributing intelligence even down to the level of visual and audio sensors.

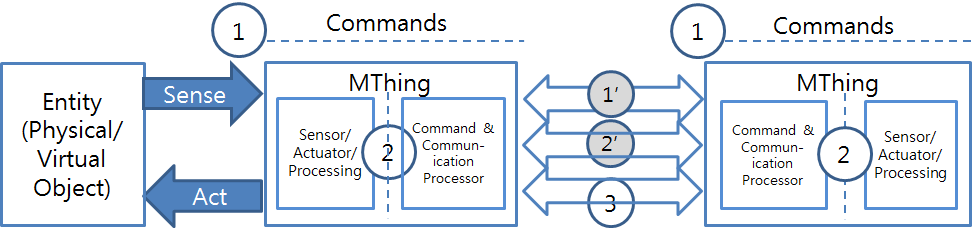

For these more advanced scenarios MPEG is developing a suite of specifications under the title of Internet of Media Things (IoMT), where Media Things (MThing) are the media “versions” of IoT’s Things. The IoMT standard (ISO/IEC 23093) will reach FDIS level in March 2019.

The IoMT reference model is represented in the figure.

IoMT standardises the following interfaces:

1: User commands (setup info.) between a system manager and an MThing

1’: User commands forwarded by an MThing to another MThing, possibly in a modified form (e.g., subset of 1)

2: Sensed data (raw or processed data, either simply compressed or resulting from a semantic extraction) and actuation information

2’: Wrapped interface 2 (e.g. for transmission)

3: MThing characteristics, discovery

IoMT is neutral as to the type of semantic extraction or, more generally, to the nature of the intelligence actually present in the cameras. However, as NNs are demonstrating better and better results for visual pattern recognition, such as object detection, object tracking and action recognition, cameras can be equipped with NNs capable of processing the captured information to achieve a level of understanding and of transmitting that understanding through interface 2.

Therefore, one can imagine that re-trained or brand new NNs can be regularly uploaded to a server that distributes NNs to surveillance cameras. Distribution need not be uniform, since different neural networks may be needed in different areas, depending on the tasks that need to be carried out in each area.

NN compression is a vitally important technology to make the described scenarios real because automatic surveillance systems may use many cameras (e.g. thousands or even millions of units) and because, as the technology to create NNs matures, the time between NN updates will progressively become shorter.

Distribution of NN-based apps to devices

There are many cases where compression is useful to efficiently distribute heavy NN-based apps to a large number of devices, in particular mobile ones. Three cases are considered here.

- Visual apps. Updating a NN-based camera app in one’s mobile handset will soon become commonplace. Ditto for the many conceivable applications where the smartphone understands some of the objects in the world around it. Both will happen at an accelerated frequency.

- Machine translation (speech-to-text, translation, text-to-speech). NN-based translation apps already exist and their number, efficiency, and language support can only increase.

- Adaptive streaming. As AI-based methods can improve the Quality of Experience (QoE), the coded representation of NNs can initially be made available to clients prior to streaming, while updates can be made during streaming to enable better adaptation decisions, i.e. better QoE.

Requirements

The MPEG Call for Proposals identifies a number of requirements that a compressed neural network should satisfy. Even though not all applications need the support of all requirements, the NN compression algorithm must eventually be able to support all the identified requirements.

- Compression shall have a lossless mode, i.e. the performance of the compressed NN is exactly the same as that of the uncompressed NN

- Compression shall have a lossy mode, i.e. the performance of the decompressed NN can differ from that of the uncompressed NN, in exchange for more compression

- Compression shall be scalable, i.e. even if only a subset of the compressed NN is used, a certain level of performance is still obtained

- Compression shall support incremental updates, i.e. as more data are received the performance of the NN improves

- Decompression shall be possible with limited resources, i.e. with limited processing performance and memory

- Compression shall be error resilient, i.e. if an error occurs during transmission, the file is not lost

- Compression shall be robust to interference, i.e. it is possible to detect that the compressed NN has been tampered with

- Compression shall be possible even if there is no access to the original training data

- Inference shall be possible using compressed NN

- Compression shall support incremental updates from multiple providers to improve the performance of a NN
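The difference between the lossless and lossy modes in the first two requirements can be illustrated with a minimal sketch. It is not the technology MPEG is calling for: general-purpose `zlib` coding and uniform 8-bit quantisation are stand-ins assumed here for illustration, the weights are random numbers rather than a trained NN, and the helper names are hypothetical.

```python
import random
import struct
import zlib


def pack_floats(weights):
    """Serialise weights as little-endian 32-bit floats (the 'uncompressed NN')."""
    return struct.pack(f"<{len(weights)}f", *weights)


def lossy_compress(weights):
    """Quantise each weight to one of 256 uniform levels, then apply zlib."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # avoid a zero step when all weights are equal
    codes = bytes(min(255, round((w - lo) / scale)) for w in weights)
    header = struct.pack("<dd", lo, scale)  # needed by the decoder
    return header + zlib.compress(codes, 9)


def lossy_decompress(blob):
    """Rebuild approximate weights from the header and the 8-bit codes."""
    lo, scale = struct.unpack("<dd", blob[:16])
    return [lo + code * scale for code in zlib.decompress(blob[16:])]


# Stand-in for the weights of a trained NN (assumption: random values).
random.seed(0)
weights = [random.gauss(0.0, 0.1) for _ in range(10_000)]
original = pack_floats(weights)

# Lossless mode: the decoded bytes are identical to the original ones.
lossless = zlib.compress(original, 9)
assert zlib.decompress(lossless) == original

# Lossy mode: smaller payload, but the weights are only approximated.
lossy = lossy_compress(weights)
restored = lossy_decompress(lossy)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"original: {len(original)} bytes")
print(f"lossless: {len(lossless)} bytes")
print(f"lossy:    {len(lossy)} bytes, max weight error {max_error:.5f}")
```

In the lossless case the NN behaves exactly as before because its weights are bit-identical. In the lossy case the effect of the weight error on the performance of the NN would have to be measured on the task at hand.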

Conclusions

The currently published Call for Proposals does not request technologies for all the requirements listed above (which are themselves a subset of all identified requirements). It is expected, however, that the responses to the CfP will provide enough technology to produce a base layer standard that will help the industry take its first steps in this exciting field, which will shape the way intelligence is added to things close to all of us.
