So far, communication standards have been handled in an odd way. Standards are meant to serve the needs of millions, if not billions, of people; yet the decision about whether a standard should exist and what it should do is in the hands of people who, however many, are not billions, not millions, not even thousands.

This situation is the end point of the unilateral approach adopted by inventors starting, one can say, from Gutenberg’s movable type and continuing with Niépce-Daguerre’s photography, Morse’s telegraph, Bell-Meucci’s telephone, Marconi’s radio and dozens more.

In retrospect, the times when those people lived were “easy” because each invention satisfied a basic need. They were also “difficult” because technology was so unwieldy. Today the situation is quite different: basic needs are more than satisfied (at least for a significant part of humankind), while the “other needs” can hardly be addressed by the unilateral approach to technology use mentioned above. Today’s technologies are increasingly sophisticated, and their number grows by the day.

This is why MPAI (Moving Pictures and Audio Coding for Artificial Intelligence) likes to call itself a “community”, as its domain extension says. Indeed, MPAI opens its doors to anyone who needs, or wishes to propose, the development of a new standard. Because the MPAI “doors” are virtual (all MPAI activities are conducted online), access to MPAI is all the more immediate, and the dream of developing standards driven directly by users’ needs comes closer.

The MPAI standards development process

This MPAI openness should not be taken as a mere “suggestion box”, because MPAI does more than just ask for ideas.

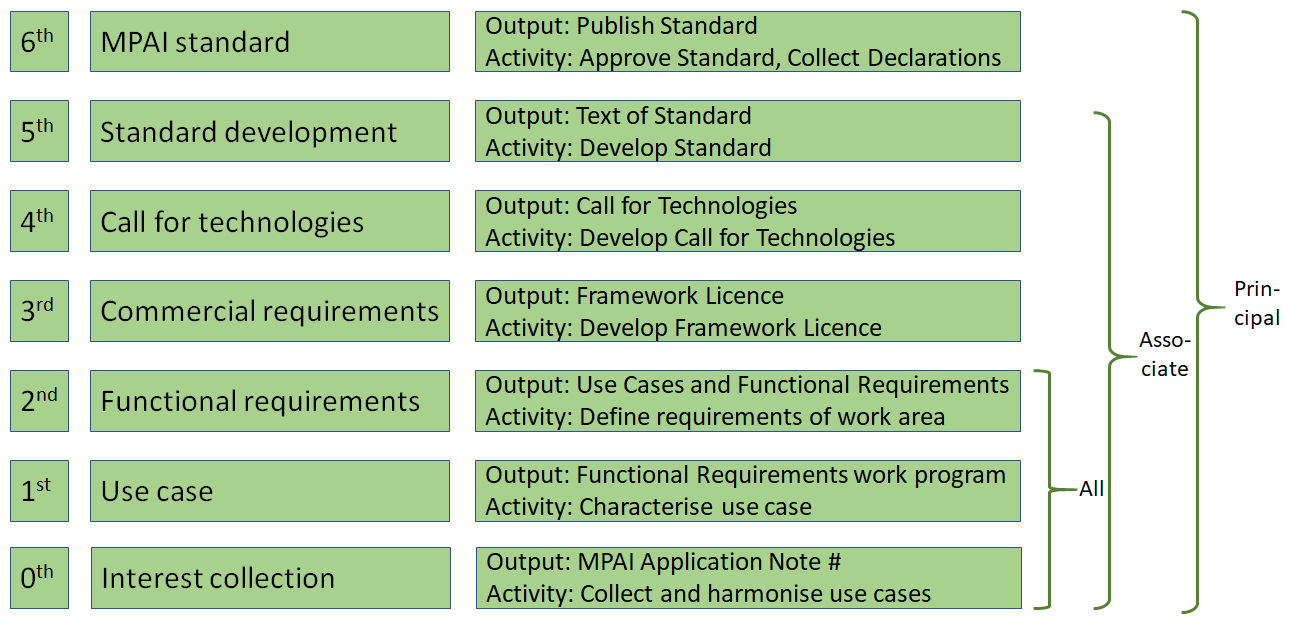

To understand how the MPAI “community” is more than a suggestion box, I need to explain the MPAI process to develop standards, depicted in Figure 1.

Figure 1 – The MPAI standard development stages

Let’s start from the beginning of the process, at the bottom. Members, as well as non-members, submit proposals. These are collected and harmonised: some proposals are merged with other similar proposals and some are split because the harmonisation process so demands. The goal is to identify proposals for standards that reflect the proponents’ needs while making sense as specifications usable across different environments. Non-members can participate fully in this process, on a par with members. The result of this process is the definition of one homogeneous area of work called a “Use Case”, although more than one Use Case may be identified. Each Use Case is described in an Application Note that is made public. An example of an Application Note is Context-based Audio Enhancement (MPAI-CAE).

The 1st stage of the process entails a full characterisation of the Use Case and the description of the work program that will produce the Functional Requirements.

The 2nd stage is the actual development of the Functional Requirements of the area of work represented by the Use Case.

The MPAI “openness” means that anybody may participate in the three stages of Interest Collection, Use Case and Functional Requirements. There is one exception: a Member may make a proposal that s/he wishes to be disclosed to Members only. To learn more, read the Invitation to contribute proposals of AI-based coding standards.

The next stage is Commercial Requirements. Why? Because a standard is like any other supply contract. You describe what you supply with its characteristics (Functional Requirements) and at what conditions (Commercial Requirements).

It should be noted that, from this stage on, non-members are not allowed to participate (although they can become members at any time), because their role of proposing and describing what a standard should do is over.

Antitrust laws do not permit sellers (technology providers) and buyers (users of the standard) to sit together and agree on values such as amounts (of dollars), percentages or dates, but they do permit sellers to state their licensing conditions, provided these contain no values. Therefore, the embodiment of the Commercial Requirements, i.e. the Framework Licence, refrains from including such details.

Once both Functional and Commercial Requirements are available, MPAI is in a position to draft the Call for Technologies (stage 4), review the proposals received and develop the standard (stage 5). This is where the role of Associate Members in MPAI ends: only Principal Members may vote to approve the standard and hence trigger its publication. However, Associate Members may become Principal Members at any time.
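The participation rules described above can be summarised as a small lookup. The sketch below is a hypothetical illustration, not MPAI code: the `Stage` and `Role` names and the `may_participate` function are invented here, the number given to the final approval vote is an assumption, and the member-only proposal exception is not modelled.

```python
from enum import IntEnum


class Stage(IntEnum):
    INTEREST_COLLECTION = 0
    USE_CASE = 1
    FUNCTIONAL_REQUIREMENTS = 2
    COMMERCIAL_REQUIREMENTS = 3
    CALL_FOR_TECHNOLOGIES = 4
    STANDARD_DEVELOPMENT = 5
    APPROVAL = 6  # assumption: numbering of the final approval vote


class Role(IntEnum):
    NON_MEMBER = 0
    ASSOCIATE_MEMBER = 1
    PRINCIPAL_MEMBER = 2


def may_participate(role: Role, stage: Stage) -> bool:
    """Hypothetical helper: who may take part in a given stage."""
    if stage <= Stage.FUNCTIONAL_REQUIREMENTS:
        # Open stages (member-only proposals are not modelled here).
        return True
    if stage <= Stage.STANDARD_DEVELOPMENT:
        # Commercial Requirements through standard development: members only.
        return role >= Role.ASSOCIATE_MEMBER
    # Only Principal Members vote to approve the standard.
    return role == Role.PRINCIPAL_MEMBER


for stage in Stage:
    allowed = [r.name for r in Role if may_participate(r, stage)]
    print(stage.name, allowed)
```

Ordering the enums numerically lets each rule be a single comparison, mirroring how the process narrows participation as it moves up the stages.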

The MPAI projects

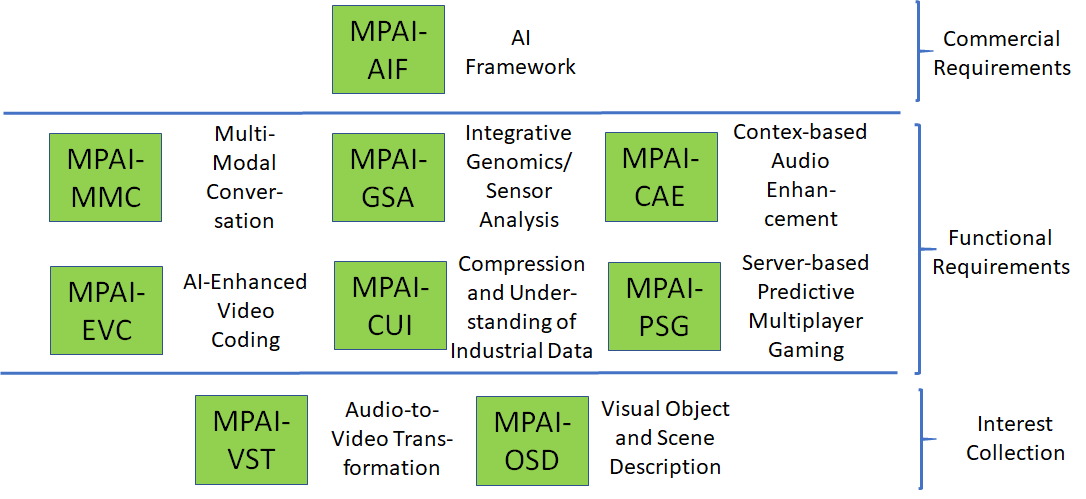

MPAI has officially been in operation for some 50 days, although a group of interested people started working as soon as MPAI was announced in July 2020. The results achieved so far are impressive, as shown by Figure 2.

Figure 2 – The MPAI projects on 2020/11/18

The following is a brief account of the current MPAI projects.

MPAI-AIF

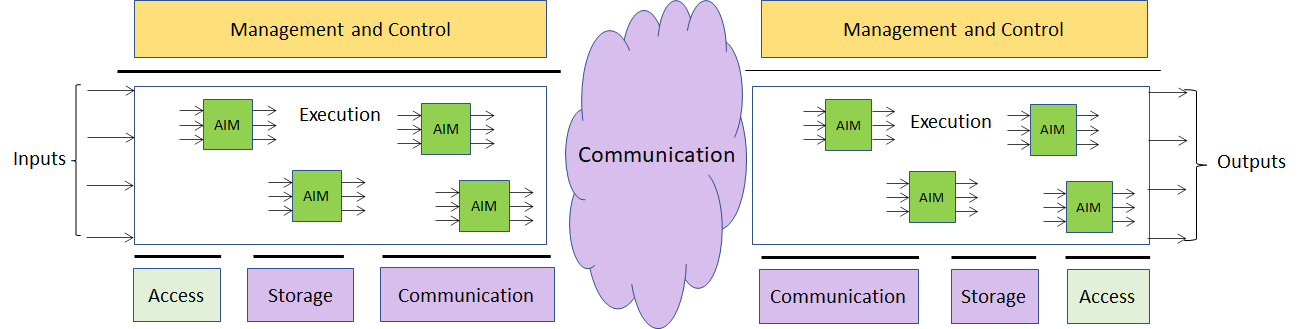

Artificial Intelligence Framework (MPAI-AIF) enables the creation and automation of mixed ML-AI-DP processing and inference workflows at scale for the areas of work currently considered at stage 2 of the MPAI work plan.

These areas of work share the notion of an environment (the Framework) that includes six components: Management and Control, Execution, AI Modules (AIM), Communication, Storage and Access. AIMs are connected in a variety of topologies and executed under the supervision of Management and Control. AIMs expose standard interfaces that make them reusable in different applications.

Figure 3 shows the general MPAI-AIF Reference Model.

Figure 3 – Reference model of the MPAI AI Framework
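To make the idea of re-usable AIMs under Management and Control more concrete, here is a minimal sketch, assuming that an AIM can be modelled as a function with named inputs and outputs. The `AIM` and `ManagementAndControl` classes and the toy “denoise” and “level” modules are hypothetical and are not part of any MPAI specification; a shared dictionary stands in for the Storage component, and the execution order is derived from the data dependencies between AIMs.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Callable, Dict, List


@dataclass
class AIM:
    """Hypothetical AI Module: consumes named inputs, produces named outputs."""
    name: str
    inputs: List[str]
    outputs: List[str]
    fn: Callable[..., Dict[str, object]]


class ManagementAndControl:
    """Toy stand-in for Management and Control: orders and executes AIMs."""

    def __init__(self, aims: List[AIM]):
        self.aims = {a.name: a for a in aims}
        producers = {out: a.name for a in aims for out in a.outputs}
        # An AIM depends on the AIMs that produce the data it consumes;
        # inputs produced by no AIM are treated as external data.
        graph = {
            a.name: {producers[i] for i in a.inputs if i in producers}
            for a in aims
        }
        self.order = list(TopologicalSorter(graph).static_order())

    def run(self, storage: Dict[str, object]) -> Dict[str, object]:
        # 'storage' plays the role of the Storage component: a shared data store.
        for name in self.order:
            aim = self.aims[name]
            result = aim.fn(**{i: storage[i] for i in aim.inputs})
            storage.update({k: result[k] for k in aim.outputs})
        return storage


# Usage: two toy AIMs, declared out of order; the topology fixes the order.
denoise = AIM("denoise", ["audio"], ["clean"],
              lambda audio: {"clean": [x * 0.5 for x in audio]})
level = AIM("level", ["clean"], ["rms"],
            lambda clean: {"rms": (sum(x * x for x in clean) / len(clean)) ** 0.5})
mc = ManagementAndControl([level, denoise])
out = mc.run({"audio": [2.0, -2.0, 2.0, -2.0]})
print(mc.order, out["rms"])
```

Because each AIM only declares what it reads and writes, the same module can be dropped into a different workflow without changes, which is the reusability the standard interfaces aim for.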

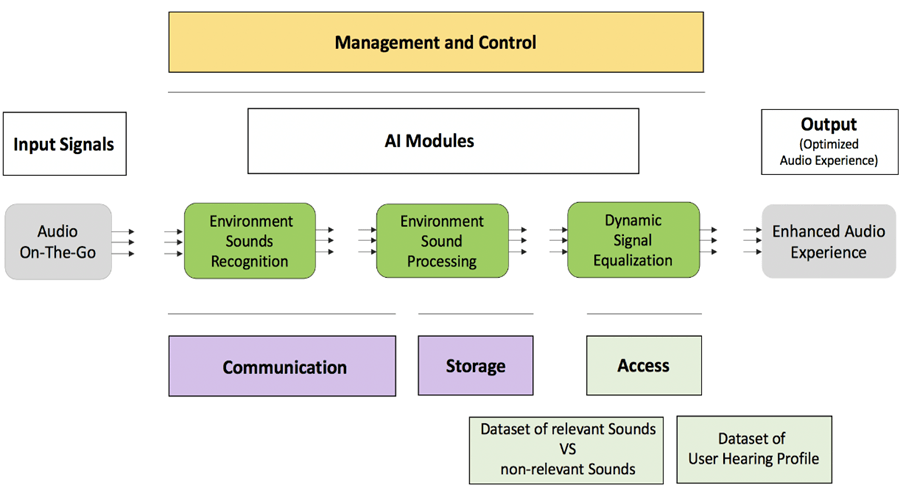

MPAI-CAE

Context-based Audio Enhancement (MPAI-CAE) improves the user experience of several audio-related applications, including entertainment, communication, teleconferencing, gaming, post-production and restoration, in a variety of contexts such as the home, the car, on the go and the studio. It uses context information to act on the input audio content with AI, processes that content via AIMs, and may deliver the processed output via the most appropriate protocol.

So far, MPAI-CAE has been found applicable to 11 usage examples, and the definition of AIM interfaces is under way for 4 of them: Enhanced audio experience in a conference call, Audio-on-the-go, Emotion-enhanced synthesized voice and AI for audio documents cultural heritage.

Figure 4 depicts the operation of the Audio-on-the-go usage example.

Figure 4 – An MPAI-CAE usage example: Audio-on-the-go

MPAI-GSA

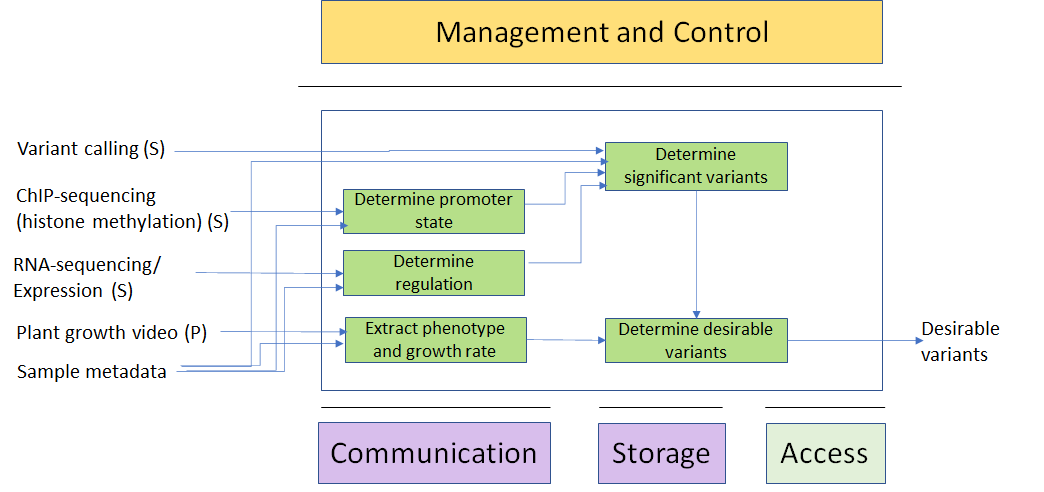

Integrative Genomic/Sensor Analysis (MPAI-GSA) uses AI to understand and compress the result of high-throughput experiments combining genomic/proteomic and other data, e.g. from video, motion, location, weather, medical sensors.

So far, MPAI-GSA has been found applicable to 7 usage examples, ranging from personalised medicine to smart farming.

Figure 5 depicts the operation of the usage example Smart Farming.

Figure 5 – An MPAI-GSA usage example

MPAI-MMC

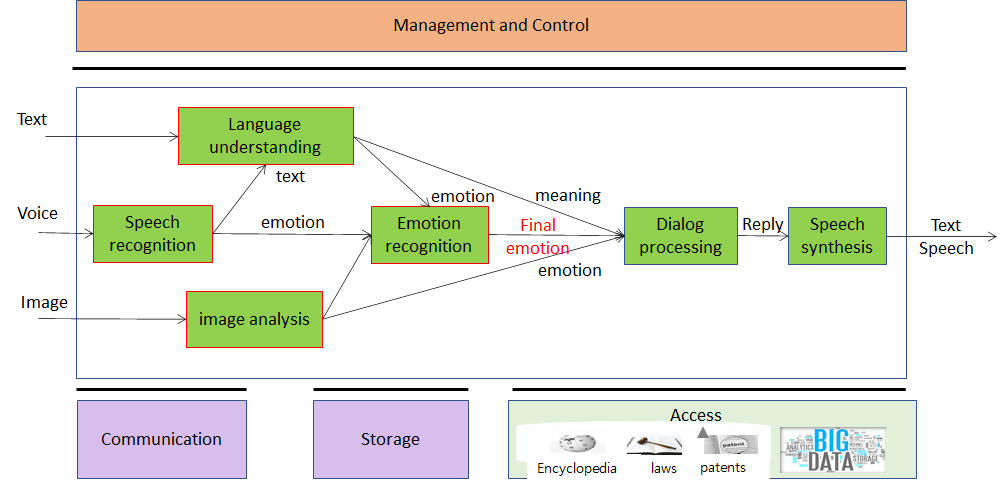

Multi-modal conversation (MPAI-MMC) aims to enable human-machine conversation that emulates human-human conversation in completeness and intensity by using AI.

So far, MPAI-MMC has been found applicable to 3 usage examples: Conversation with emotion, Multimodal Question Answering (QA) and Personalized Automatic Speech Translation.

Figure 6 depicts the operation of the usage example Conversation with emotion.

Figure 6 – An MPAI-MMC usage example: Conversation with emotion

MPAI-EVC

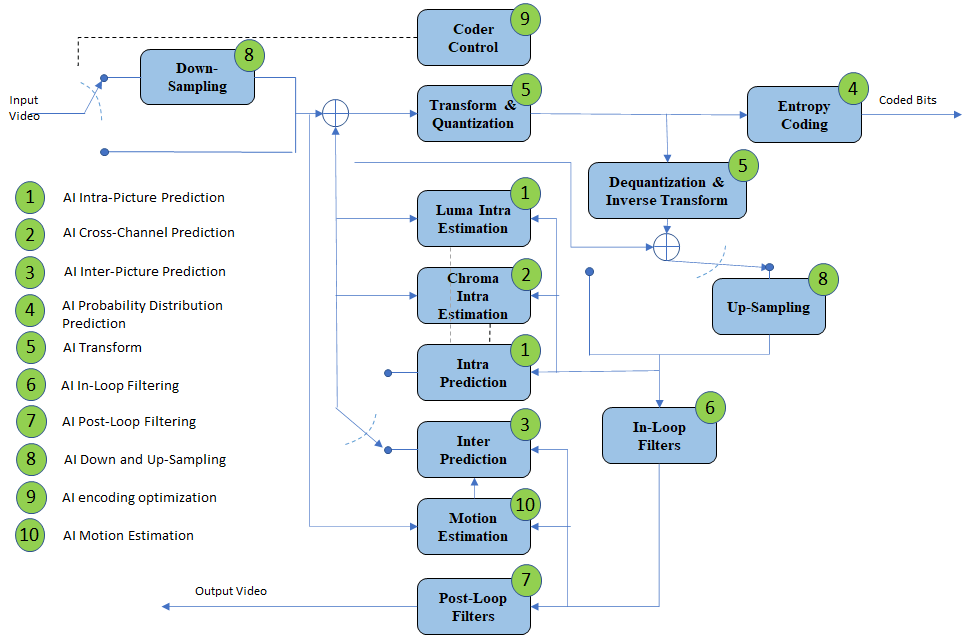

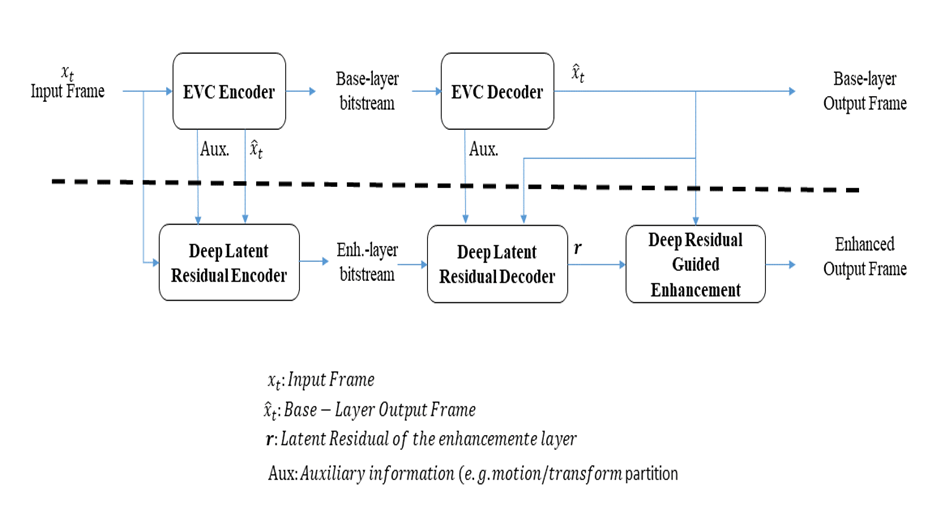

AI-Enhanced Video Coding (MPAI-EVC) is a video compression standard that substantially enhances the performance of a traditional video codec by improving or replacing traditional tools with AI-based tools. Two approaches – Horizontal Hybrid and Vertical Hybrid – are envisaged.

The Horizontal Hybrid approach combines AI-based algorithms with a traditional image/video codec, replacing the blocks of the traditional scheme one by one with AI-based ones. This case is depicted in Figure 7, where green circles represent tools that can be replaced or enhanced with their AI-based equivalents.

Figure 7 – A reference diagram for the Horizontal Hybrid approach
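The “one block at a time” idea behind the Horizontal Hybrid approach can be sketched on a toy one-dimensional signal. This is purely illustrative and assumes that every codec tool shares the same interface (a block of samples in, a block out); the two-tool pipeline, the `horizontal_variants` helper and the fixed 3-tap smoother used as a placeholder for a trained AI-based loop filter are all hypothetical and bear no relation to real codec tools or to the actual Evidence Project setup.

```python
from typing import Callable, Dict, Iterator, List, Tuple

# A "tool" maps a block of samples to a block of samples (toy 1-D signal).
Tool = Callable[[List[float]], List[float]]


def run_pipeline(tools: Dict[str, Tool], order: List[str], block: List[float]) -> List[float]:
    """Apply the codec tools in their fixed order."""
    for name in order:
        block = tools[name](block)
    return block


def horizontal_variants(traditional: Dict[str, Tool],
                        ai_based: Dict[str, Tool]) -> Iterator[Tuple[str, Dict[str, Tool]]]:
    """Yield one codec variant per tool, with only that tool swapped for its AI-based equivalent."""
    for name, ai_tool in ai_based.items():
        variant = dict(traditional)
        variant[name] = ai_tool
        yield name, variant


def mse(a: List[float], b: List[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


ORDER = ["quantise", "loop_filter"]
traditional: Dict[str, Tool] = {
    "quantise": lambda blk: [float(round(x)) for x in blk],  # uniform quantiser, step 1
    "loop_filter": lambda blk: list(blk),                    # no filtering
}
# Placeholder only: a fixed 3-tap smoother standing in for a trained AI-based filter.
ai_based: Dict[str, Tool] = {
    "loop_filter": lambda blk: [
        (blk[max(i - 1, 0)] + 2 * blk[i] + blk[min(i + 1, len(blk) - 1)]) / 4
        for i in range(len(blk))
    ],
}

block = [0.2, 0.9, 2.1, 2.9]
baseline = mse(block, run_pipeline(traditional, ORDER, block))
for name, tools in horizontal_variants(traditional, ai_based):
    swapped = mse(block, run_pipeline(tools, ORDER, block))
    print(f"{name}: baseline MSE {baseline:.5f}, with AI-based tool {swapped:.5f}")
```

Swapping exactly one tool per variant keeps every other block fixed, so any change in distortion can be attributed to the replaced tool alone.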

The Vertical Hybrid approach envisages an AVC/HEVC/EVC/VVC base layer plus a machine learning-based enhancement layer. This case is depicted in Figure 8.

Figure 8 – A reference diagram for the Vertical Hybrid approach

MPAI is engaged in the MPAI-EVC Evidence Project seeking to find evidence that AI-based technologies provide sufficient improvement to the Horizontal Hybrid approach. A second project on the Vertical Hybrid approach is being considered.

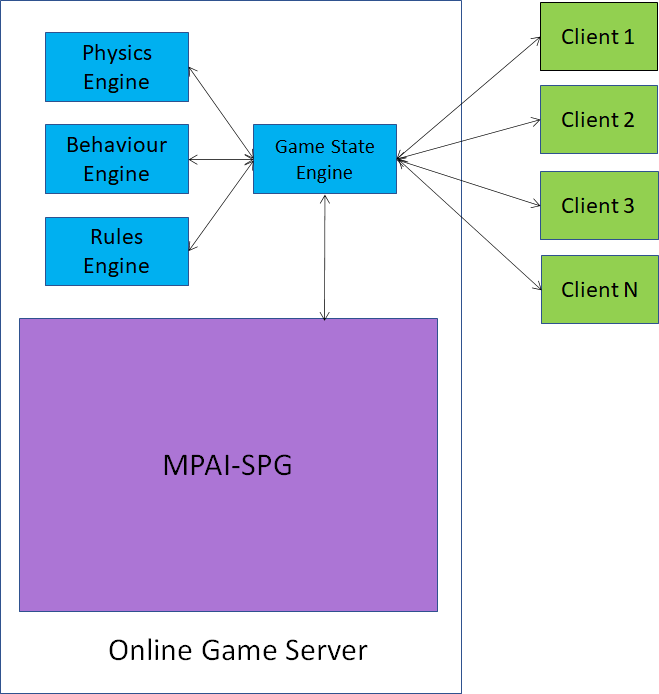

MPAI-SPG

Server-based Predictive Multiplayer Gaming (MPAI-SPG) aims to minimise the audio-visual and game play discontinuities caused by high network latency or packet losses during an online real-time game. In case information from a client is missing, the data collected from the clients involved in a particular game are fed to an AI-based system that predicts the moves of the client whose data are missing.

Figure 9 – Identification of the MPAI-SPG standardisation area
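The server-side behaviour described above can be sketched as follows, assuming positions are 2-D points and updates arrive once per tick. Simple dead reckoning (extrapolating the client’s last velocity) is swapped in here as a stand-in for the AI-based predictor; unlike the system MPAI-SPG envisages, it only looks at the missing client’s own history, even though the predictor receives every client’s data. The function names are hypothetical, and the sketch assumes every client already has at least one position in its history.

```python
from typing import Callable, Dict, List, Optional, Tuple

Pos = Tuple[float, float]
# A predictor sees every client's history and returns a guess for one client.
Predictor = Callable[[Dict[str, List[Pos]], str], Pos]


def dead_reckoning(history: Dict[str, List[Pos]], client: str) -> Pos:
    """Stand-in for the AI-based predictor: extrapolate the client's last velocity."""
    track = history[client]
    if len(track) < 2:
        return track[-1]
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (2 * x1 - x0, 2 * y1 - y0)


def server_tick(history: Dict[str, List[Pos]],
                updates: Dict[str, Optional[Pos]],
                predict: Predictor = dead_reckoning) -> Dict[str, Pos]:
    """Advance the game state one tick, filling in any client whose update was lost."""
    state: Dict[str, Pos] = {}
    for client, pos in updates.items():
        if pos is None:  # update missing (high latency or packet loss)
            pos = predict(history, client)
        state[client] = pos
    # Record the tick only after all predictions, so they all use the same history.
    for client, pos in state.items():
        history.setdefault(client, []).append(pos)
    return state


# Usage: bob's packet is lost, so the server predicts his move.
history = {"alice": [(0.0, 0.0), (1.0, 0.5)], "bob": [(5.0, 5.0), (5.0, 4.0)]}
state = server_tick(history, {"alice": (2.0, 1.0), "bob": None})
print(state)
```

Keeping the predictor as a pluggable parameter reflects the point of the standard: the game logic stays the same while the prediction engine (here trivial) can be replaced by an AI-based one.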

MPAI-CUI

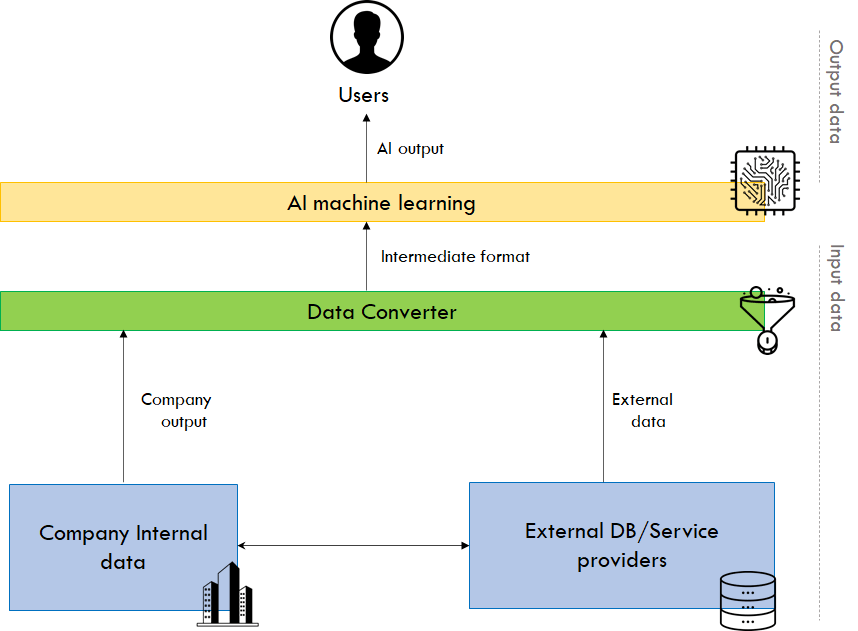

Compression and understanding of industrial data (MPAI-CUI) aims to enable AI-based filtering and extraction of key information from a data flow that combines data produced by companies with external data (e.g., data on vertical risks such as seismic, cyber etc.).

MPAI-CUI requires standardisation of all data formats to be fed into an AI machine to extract the information relevant to the intended use. Because the data formats are so diverse, an intermediate format, to which any type of data generated by companies in different industries and different countries needs to be converted, seems to be the only practical solution.

This is depicted in Figure 10.

Figure 10 – A reference diagram for MPAI-CUI
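The role of the intermediate format can be illustrated with a minimal sketch: each source format needs only one converter, and the downstream AI machine reads a single record type. The `Record` fields, the two source layouts (a semicolon-separated ledger and a JSON risk feed) and the converter names are all hypothetical assumptions made for this example; MPAI-CUI had not defined any such format at the time of writing.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import Callable, Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Record:
    """Hypothetical intermediate format: one observation about one company."""
    company: str
    indicator: str
    value: float
    period: str


def from_csv_ledger(text: str) -> List[Record]:
    # Assumed source layout: company;year;revenue_keur (values in thousands of euros).
    rows = csv.DictReader(io.StringIO(text), delimiter=";")
    return [Record(r["company"], "revenue_eur", float(r["revenue_keur"]) * 1000, r["year"])
            for r in rows]


def from_json_risk_feed(text: str) -> List[Record]:
    # Assumed source layout: {"entity": ..., "risk": {"seismic": 0.3, ...}, "date": ...}
    doc = json.loads(text)
    return [Record(doc["entity"], f"{kind}_risk", float(score), doc["date"])
            for kind, score in doc["risk"].items()]


# One converter per source format; adding a format means adding one entry.
CONVERTERS: Dict[str, Callable[[str], List[Record]]] = {
    "csv-ledger": from_csv_ledger,
    "json-risk": from_json_risk_feed,
}


def to_intermediate(sources: Iterable[Tuple[str, str]]) -> List[Record]:
    """Convert every (format, payload) pair into the single intermediate format."""
    out: List[Record] = []
    for fmt, payload in sources:
        out.extend(CONVERTERS[fmt](payload))
    return out


records = to_intermediate([
    ("csv-ledger", "company;year;revenue_keur\nACME;2019;1250\n"),
    ("json-risk", '{"entity": "ACME", "risk": {"seismic": 0.3, "cyber": 0.7}, "date": "2020"}'),
])
for r in records:
    print(r)
```

With N source formats this needs N converters into one format, rather than every analysis tool having to understand every source format.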

Visual Object and Scene Description (MPAI-OSD)

Visual object and scene description addresses the “scene description” components of several MPAI use cases. Scene description includes the usual description of objects and their attributes in a scene and the semantic description of the objects.

Unlike proprietary solutions, which address the needs of the use cases but lack interoperability or force all users to adopt a single technology or application, a standard representation of the objects in a scene can satisfy those requirements better.

Vision-to-Sound Transformation (MPAI-VST)

It is possible to give a spatial representation of an image that visually impaired people can hear, using a pair of headphones as the localisation and description medium. Vision-to-Sound Transformation is thus a conversion (compression) technique from one space (vision) to a different interpretation space (sound).
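One common way to map image position onto headphone audio is sketched below, purely as an assumption since MPAI-VST does not prescribe any mapping: the horizontal position of an object sets its left/right balance through constant-power panning, and its vertical position sets the pitch on a logarithmic scale (top of the image = highest pitch). The function names and the 200 to 2000 Hz range are hypothetical; the sketch only computes sound parameters and does not render audio.

```python
import math
from typing import Dict, Tuple


def pan_gains(x: float, width: float) -> Tuple[float, float]:
    """Constant-power panning: x = 0 is far left, x = width is far right."""
    p = x / width                  # 0.0 (left) .. 1.0 (right)
    theta = p * math.pi / 2
    return math.cos(theta), math.sin(theta)  # (left gain, right gain)


def row_to_pitch(y: float, height: float,
                 f_low: float = 200.0, f_high: float = 2000.0) -> float:
    """Map vertical position to frequency: top row = f_high, bottom row = f_low."""
    t = 1.0 - y / height           # 1.0 at the top, 0.0 at the bottom
    return f_low * (f_high / f_low) ** t


def sonify_object(x: float, y: float, width: float, height: float) -> Dict[str, float]:
    """Hypothetical helper: sound parameters for one object at pixel (x, y)."""
    left, right = pan_gains(x, width)
    return {"freq_hz": row_to_pitch(y, height), "left": left, "right": right}


# Usage: an object centred horizontally, a quarter of the way down a 640x480 image.
print(sonify_object(320, 120, 640, 480))
```

Constant-power panning keeps left² + right² = 1, so an object does not seem to get louder or softer as it moves across the image, only to change direction.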

Framework Licences

Patents are the engine that ensures and sustains technology innovation. A basic tenet of standardisation, however, is that standards should be developed separately from how standard essential patents (SEP) are monetised. In recent years, the use of standards with highly sophisticated technologies has met with serious difficulties because the traditional system of Fair, Reasonable and Non-Discriminatory (FRAND) declarations has not been able to keep pace with fast-changing technologies. This has led the market to try approaches to standard development where monetisation is replaced by alternative business models. While royalty-free standards have a role to play in high-tech, they can hardly be expected to support sustainable innovation.

MPAI replaces FRAND declarations with Framework Licences (FWL), defined as the set of conditions for using a licence without values, such as the amount or percentage of royalties or the dates due. FWLs are developed in compliance with the generally accepted principles of competition law and are agreed upon before a standard is developed.

In other words, an FWL is the business model adopted by SEP holders to monetise their IP in a standard, expressed without values: no dollars, percentages, dates etc.

The MPAI process of Framework Licences encompasses the following steps:

- Before starting the technical work, active members adopt the FWL with a qualified majority.

- During the technical work, when making a contribution, active members declare that they will make the terms of their SEP licences available, according to the FWL, after the standard is approved.

- After the standard is developed, all members declare that they will enter into a licensing agreement for other Members’ SEPs, if used, within one year after publication of the SEP holders’ licensing terms. Non-members of MPAI shall enter into a licensing agreement with SEP holders to use an MPAI standard.

Conclusions

The last few weeks have been extremely intense but rewarding. MPAI has been able to stimulate dozens of companies and universities, currently from 15 countries, to provide experts for developing a set of leading-edge project proposals. Currently, the first project planned to achieve MPAI Standard status is MPAI-AIF, planned for July 2021, but other projects are accelerating their pace and may reach MPAI Standard status even before that date.
