In Forty years of video coding and counting I presented a short but intense history of ITU and MPEG video compression standards. In this article I focus on how MPEG video compression standards have gained functionalities over the years and how the next generation of standards will add even more.

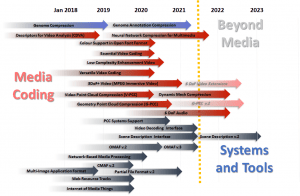

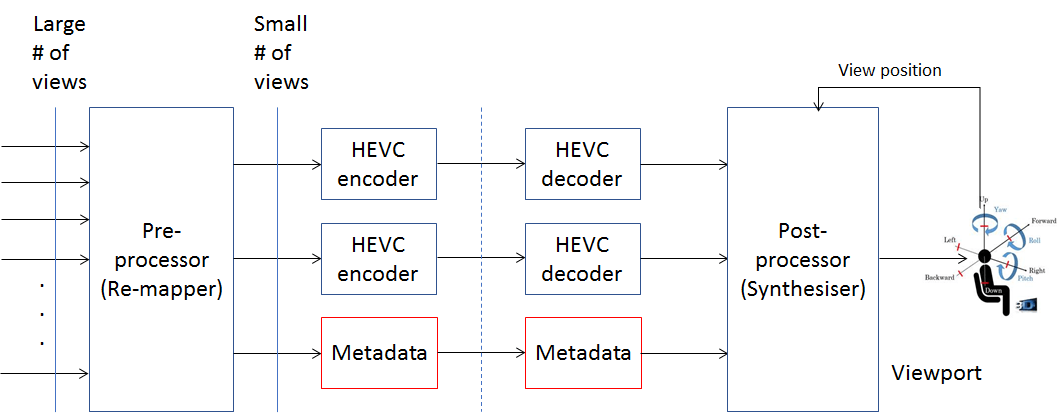

Figure 1 gives an overview of all MPEG video compression standards: past, present and planned. Those in italic have not reached Final Draft International Standard (FDIS) level.

Figure 1 – Video coding standards and functionalities

In 1988 MPEG started its first video coding project, targeting interactive video applications on compact disc (MPEG-1). Input video was assumed to be progressive (25/29.97 Hz, although other frame rates were also supported) and the spatial resolution was Source Input Format (SIF), i.e. 240 or 288 lines x 352 pixels. The syntax supported spatial resolutions up to 4095 x 4095 pixels (about 16 Mpixels). Progressive scanning is a feature that all MPEG video coding standards have supported since MPEG-1. The (obvious) exception is point clouds because there are no “frames”.

In 1990 MPEG started its second video coding project, targeting digital television (MPEG-2). The input was therefore assumed to be interlaced (frame rate of 50/59.94 Hz, although other frame rates were also supported) and the spatial resolution was standard definition, high definition and up. The resolution space was quantised by means of levels, the second dimension after profiles. MPEG-4 Visual and AVC are the last two standards with specific interlace tools. An attempt was made to introduce interlace tools in HEVC, but the technologies presented did not show appreciable improvements compared with progressive tools. HEVC does have some indicators (SEI/VUI) to tell the decoder that the video is interlaced.

MPEG-2 was the first standard to tackle scalability (High Profile), multiview (Multiview Profile) and higher chroma resolution (4:2:2 Profile). Several subsequent video coding standards (MPEG-4 Visual, AVC and HEVC) also support these features. VVC is expected to do the same, though probably not in version 1.
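To make the cost of higher chroma resolution concrete, the sketch below counts the samples in one frame for the common chroma formats and derives the uncompressed bitrate. The function names, the 720 x 576 picture size and the 25 Hz frame rate are illustrative assumptions, not values taken from any MPEG specification.

```python
# Illustrative sketch: how the chroma format changes the amount of raw data.
# Each entry gives the (horizontal, vertical) subsampling factor of the two
# chroma planes relative to the luma plane.
CHROMA_DIVISORS = {
    "4:2:0": (2, 2),  # chroma halved in both directions
    "4:2:2": (2, 1),  # chroma halved horizontally only
    "4:4:4": (1, 1),  # chroma at full resolution
}


def samples_per_frame(width, height, chroma_format):
    """Return the total number of luma and chroma samples in one frame."""
    h_div, v_div = CHROMA_DIVISORS[chroma_format]
    luma = width * height
    chroma = 2 * (width // h_div) * (height // v_div)  # Cb and Cr planes
    return luma + chroma


def raw_bitrate_mbps(width, height, fps, chroma_format, bit_depth=8):
    """Return the uncompressed bitrate in Mbit/s."""
    return samples_per_frame(width, height, chroma_format) * bit_depth * fps / 1e6


if __name__ == "__main__":
    # Hypothetical standard definition picture at 25 frames per second.
    for fmt in CHROMA_DIVISORS:
        print(fmt, raw_bitrate_mbps(720, 576, 25, fmt))
```

Under these assumptions, moving from 4:2:0 to 4:2:2 increases the raw data by one third, which is why the higher chroma resolution was given its own profile.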

MPEG-4 Visual supports coding of video objects and error resilience. The first feature has remained specific to MPEG-4 Visual. Most video codecs allow for some error resilience (e.g. starting from slices in MPEG-1). However, MPEG-4 Visual – mobile communication being one relevant use case – was the first to specifically consider error resilience as a tool.

MPEG-2 first tried to develop 10-bit support and the empty part 8 is what is left of that attempt.

Wide Colour Gamut (WCG), High Dynamic Range (HDR) and 3 Degrees of Freedom (3DoF) are all supported by AVC. These functionalities were first introduced in HEVC, later added to AVC, and are planned to be supported in VVC as well. WCG allows a wider gamut of colours to be displayed, HDR allows pictures to be displayed with brighter regions and more visible detail in dark areas, and 3DoF (also called Video 360) allows pictures projected on a sphere to be represented.

AVC supports more than 8 quantisation bits, up to 14 bits. HEVC even supports 16 bits. VVC, EVC and LCEVC are also expected to support more than 8 quantisation bits.
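As a quick illustration of what the extra bits buy, the following sketch lists the number of distinct sample values available at each bit depth mentioned above. The helper name `quantisation_levels` is an assumption made for this example.

```python
def quantisation_levels(bit_depth):
    """Return the number of distinct sample values at a given bit depth."""
    return 1 << bit_depth  # 2 to the power of bit_depth


if __name__ == "__main__":
    for bits in (8, 10, 14, 16):
        print(f"{bits} bits: {quantisation_levels(bits)} levels")
```

Each additional bit doubles the number of levels, so a 10-bit sample offers four times the gradations of an 8-bit one.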

WebVC was the first MPEG attempt at defining a video coding standard that would not require a licence involving payment of fees (Option 1 in ISO language; the legal language is more complex than this). Strictly speaking, WebVC is not a new standard because MPEG simply extracted what was the Constrained Baseline Profile in AVC (originally, AVC tried to define an Option 1 profile but did not achieve the goal and did not define the profile) with the hope that WebVC could achieve Option 1 status. The attempt failed because some companies confirmed the Option 2 patent declarations (i.e. a licence is required to use the standard) they had already made against the AVC standard. The brackets in the figure convey this fact.

Video Coding for Browsers (VCB) is the result of a proposal made by a company in response to an MPEG Call for Proposals for Option 1 video coding technology. Another company made an Option 3 patent declaration (i.e. unavailability to license the technology). As the declaration did not contain any detail that could allow MPEG to remove the allegedly infringing technologies, ISO did not publish VCB as a standard. The square brackets in the figure convey this fact.

Internet Video Coding (IVC) is the third video coding standard intended to be Option 1. Three Option 2 patent declarations were received, and MPEG has declared its availability to remove patented technology from the standard if specific technology claims are made. The brackets convey this fact.

Finally, Essential Video Coding (EVC), part 1 of MPEG-5 (however, the project has not been formally approved by ISO yet), is expected to be a two-layer video coding standard. The EVC Call for Proposals requested that the technologies provided in response to the Call for the first (lower) layer of the standard be Option 1. Technologies for the second (higher) layer are Option 2. The curly brackets in the figure convey this fact.

Screen Content Coding (SCC) achieves better compression of non-natural (synthetic) material such as characters and graphics. It is supported by HEVC and is planned to be supported in VVC and possibly EVC.

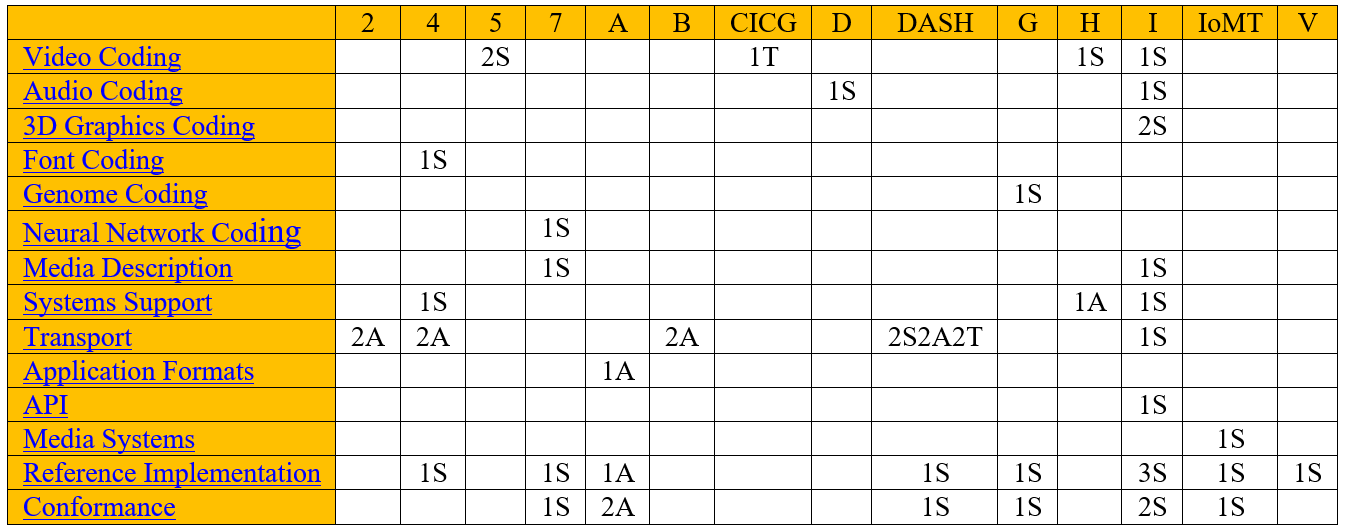

Low Complexity Enhancement Video Coding (LCEVC) is another two-layer video coding standard. Unlike EVC, however, in LCEVC the lower layer is not tied to any specific technology and can be any video codec. The goal of the second layer is to extend the capability of an existing video codec. A typical usage scenario is to give a large number of already deployed standard definition set top boxes, which cannot be recalled, the ability to decode high definition pictures. The LCEVC decoder is depicted in Figure 2.

Figure 2 – Low Complexity Enhancement Video Coding
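The two-layer idea can be sketched conceptually as follows: a picture decoded by any base codec is upsampled, and the enhancement layer supplies corrections that are added on top. This is only a toy model under stated assumptions. The nearest-neighbour upsampler, the residual values and the function names are all hypothetical and do not reproduce the tools actually specified in LCEVC.

```python
def upsample_2x(picture):
    """Nearest-neighbour 2x upsampling of a 2D list of sample values."""
    out = []
    for row in picture:
        wide = [value for value in row for _ in range(2)]  # repeat each sample
        out.append(wide)
        out.append(list(wide))  # repeat each row
    return out


def clip(value, bit_depth=8):
    """Clamp a sample to the valid range for the given bit depth."""
    return max(0, min((1 << bit_depth) - 1, value))


def reconstruct(base_picture, residuals, bit_depth=8):
    """Upsample the base-layer picture and add enhancement-layer residuals."""
    predicted = upsample_2x(base_picture)
    return [
        [clip(p + r, bit_depth) for p, r in zip(p_row, r_row)]
        for p_row, r_row in zip(predicted, residuals)
    ]


# Hypothetical 2x2 base-layer picture, as output by any existing decoder.
base = [[100, 200],
        [50, 250]]

# Hypothetical 4x4 residuals carried by the enhancement layer.
residuals = [[0, 1, -2, 3],
             [4, 0, 0, 10],
             [-60, 0, 5, 5],
             [0, 0, 0, 0]]

enhanced = reconstruct(base, residuals)
```

The point of the design is that the base decoder is left untouched: only the upsampling and the addition of residuals need to run in the added layer.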

Today technologies are available to capture 3D point clouds, typically with multiple cameras and depth sensors, producing up to billions of points for realistically reconstructed scenes. Point clouds can have attributes such as colours, material properties and/or other attributes, and are useful for real-time communications, GIS, CAD and cultural heritage applications. MPEG-I part 5 will specify lossy compression of 3D point clouds employing efficient geometry and attribute compression, scalable/progressive coding, and coding of point cloud sequences captured over time with support for random access to subsets of the point cloud.

Other technologies capture point clouds, potentially with a low density of points, to allow users to freely navigate in multi-sensory 3D media experiences. Such representations require a large amount of data, which is not feasible to transmit on today’s networks. MPEG is developing a second, graphics-based PCC standard, as opposed to the previous one which is video-based, for efficient compression of sparse point clouds.

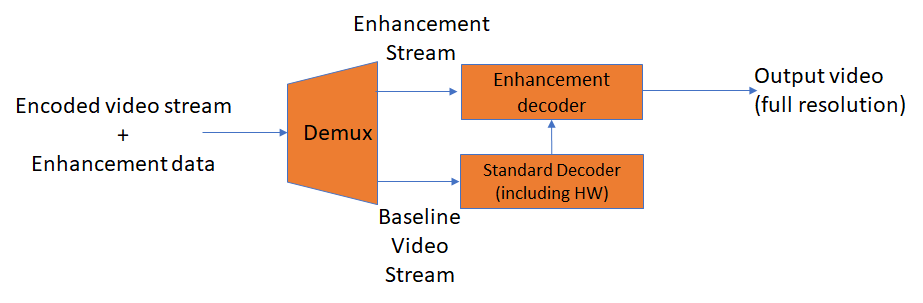

3DoF+ is a term used by MPEG to indicate a usage scenario where the user can make translational movements of the head. In a 3DoF scenario, if the user moves the head too much, an annoying parallax error is felt. In March 2019 MPEG received responses to its Call for Proposals requesting appropriate metadata (see the red blocks in Figure 3) to help the Post-processor present the best image based on the viewer’s position if available, or to synthesise a missing one if not available.

Figure 3 – 3DoF+ use scenario

6DoF indicates a use scenario where the user can freely move in a space and enjoy a 3D virtual experience that matches the one in the real world. Light field refers to new devices that can capture, in one shot, a spatially sampled version of a light field that has both spatial and angular light information. The captured data is not only larger than traditional camera images but also different in nature. MPEG is investigating new and compatible compression methods for potential new services.

In 30 years compressed digital video has made a lot of progress, e.g. bigger and brighter pictures at lower bitrates, along with other features. The end point is nowhere in sight.

Thanks to Gary Sullivan and Jens-Rainer Ohm for useful comments.
