Introduction

In How does MPEG actually work? I described the MPEG process: once an idea is launched, its context and objectives are identified; use cases are submitted and analysed; requirements are derived from the use cases; and technologies are proposed and validated for their effectiveness before eventual incorporation into the standard.

Some people complain that MPEG standards contain too many technologies supporting “non-mainstream” use cases. Such complaints are understandable but misplaced. MPEG standards are designed to satisfy the needs of different industries, and what is a must for some may well not be needed by others.

To avoid burdening a significant group of users of the standard with technologies considered irrelevant, from the very beginning MPEG adopted the “profile approach”. This allows a technology to be retained for those who need it without encumbering those who do not.

It is true that there are a few examples where some technologies in an otherwise successful standard go unused. Was adding such technologies a mistake? In hindsight yes, but at the time a standard is developed the future is anybody’s guess, and MPEG does not want to find out later that one of its standards misses a functionality that was deemed necessary for some use cases and that technology could have supported at the time the standard was developed.

For sure there is a cost in adding the technology to the standard – and this is borne by the companies proposing the technology – but there is no burden to those who do not need it because they can use another profile.

Examples of such “non-mainstream” technologies are provided by those supporting stereo vision. Since as early as MPEG-2 Video, multiview and/or 3D profile(s) have been present in most MPEG video coding standards. Therefore, this article will review the attempts made by MPEG at developing new and better technologies to support what are called today immersive experiences.

The early days

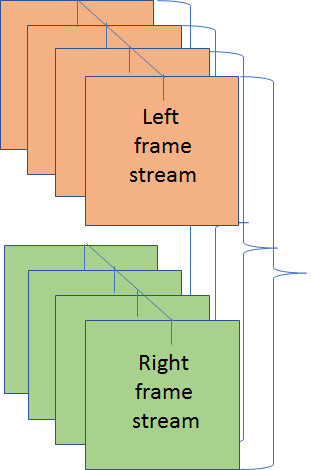

MPEG-1 did not have big ambitions (but the outcome was not modest at all ;-). MPEG-2 was ambitious because it included scalability – a technology that reached maturity only some 10 years later – and multiview. As depicted in Figure 1, multiview was possible because, if you have two closely spaced cameras pointing at the same scene, you can exploit intraframe, interframe and inter-view redundancy.

Figure 1 – Redundancy in multiview
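To make the idea of inter-view redundancy concrete, here is a minimal sketch in Python. It is a toy illustration under stated assumptions, not the MPEG-2 multiview tool: the two views are single rows of pixels, the right view is assumed to be the left view shifted by a known one-pixel disparity, and the function names are hypothetical.

```python
# Toy illustration of inter-view prediction (not an actual MPEG tool).
# Assumption: the right view equals the left view shifted by a known disparity.

def sum_abs(values):
    """Sum of absolute values, used here as a crude measure of signal energy."""
    return sum(abs(v) for v in values)

def inter_view_residual(left_row, right_row, disparity):
    """Predict each right-view pixel from the left view shifted by `disparity`."""
    residual = []
    for i, pixel in enumerate(right_row):
        # Clamp the reference index so it stays inside the row.
        j = min(max(i + disparity, 0), len(left_row) - 1)
        residual.append(pixel - left_row[j])
    return residual

left_row = [10, 10, 50, 90, 90, 50, 10, 10]
right_row = [10, 50, 90, 90, 50, 10, 10, 10]  # same scene, shifted by one pixel

residual = inter_view_residual(left_row, right_row, disparity=1)
print(sum_abs(right_row), sum_abs(residual))
```

In this contrived case the residual carries far less energy than the right view itself, which is what makes coding the second view cheaper than coding it independently. Real content is of course never this well behaved.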

Both MPEG-2 scalability and multiview saw little take-up.

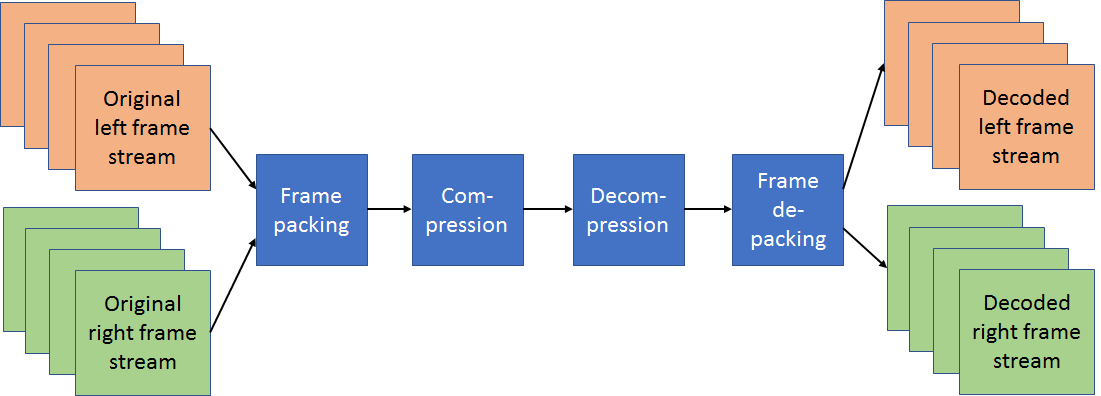

Both MPEG-4 Visual and AVC had multiview profiles. AVC had 3D profiles next to multiview profiles. Multiview Video Coding (MVC) of AVC was adopted by the Blu-ray Disc Association, but the rest of the industry took another turn as depicted in Figure 2.

Figure 2 – Frame packing in AVC and HEVC

If the left and right frames of two video streams are packed in one frame, regular AVC compression can be applied to the packed frame. At the decoder, the frames are de-packed after decompression and the two video streams are obtained.

This is a practical but less than optimal solution. Unless the frame size of the codec is doubled, you compromise either the horizontal or the vertical resolution, depending on the frame-packing method used. Because of this, a host of other more sophisticated, but eventually unsuccessful, frame-packing methods have been introduced into the AVC and HEVC standards. The relevant information is carried by Supplemental Enhancement Information (SEI) messages, because the specific frame-packing method used is not normative.
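The resolution trade-off of frame packing can be sketched in a few lines of Python. This is only an illustration of the side-by-side arrangement, assuming plain column dropping and pixel repetition; it does not reproduce the SEI syntax or any filtering a real system would apply, and the function names are hypothetical.

```python
# Side-by-side frame packing sketch (illustrative only, not the SEI syntax).
# A frame is a list of rows; each row is a list of pixel values.

def pack_side_by_side(left, right):
    """Keep every other column of each view and place the halves side by side."""
    packed = []
    for row_l, row_r in zip(left, right):
        packed.append(row_l[::2] + row_r[::2])  # horizontal resolution is halved
    return packed

def unpack_side_by_side(packed):
    """Split the packed frame and upsample each half by pixel repetition."""
    left, right = [], []
    for row in packed:
        half = len(row) // 2
        left.append([p for p in row[:half] for _ in range(2)])
        right.append([p for p in row[half:] for _ in range(2)])
    return left, right

left = [[1, 2, 3, 4], [5, 6, 7, 8]]
right = [[11, 12, 13, 14], [15, 16, 17, 18]]

packed = pack_side_by_side(left, right)        # same size as one original frame
rec_left, rec_right = unpack_side_by_side(packed)
print(packed)
print(rec_left)
```

The packed frame has the size of a single view, so a regular codec can handle it, but the reconstructed views have lost half of their horizontal detail.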

The HEVC standard, too, supports 3D vision with tools that efficiently compress depth maps and exploit the redundancy between video pictures and their associated depth maps. Unfortunately, the use of HEVC for 3D video has also been limited.

MPEG-I

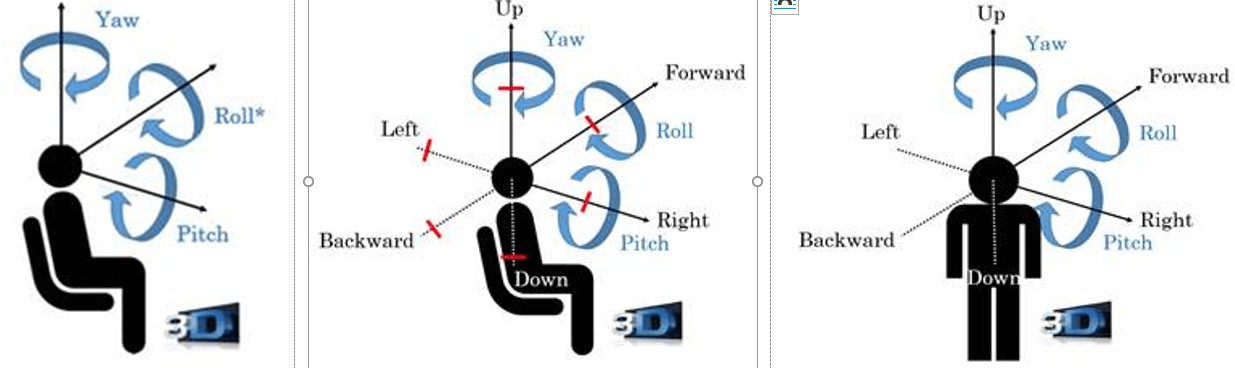

The MPEG-I project – ISO/IEC 23090 Coded representation of immersive media – was launched at a time when the word “immersive” was prominent in many news headlines. Figure 3 gives three examples of immersivity where technology challenges increase moving from left to right.

Figure 3 – 3DoF (left), 3DoF+ (centre) and 6DoF (right)

In 3 Degrees of Freedom (3DoF) the user is static but the head can yaw, pitch and roll. In 3DoF+ the user has the added capability of some head movements in the three directions. In 6 Degrees of Freedom (6DoF) the user can freely walk in a 3D space.
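One way to picture the three cases is as constraints on a viewer pose made of three rotations and three translations. The sketch below is an illustration only: the `Pose` class, the `constrain` function and the 0.3 m head-movement limit for 3DoF+ are all assumptions made for this example, not values taken from any MPEG document.

```python
from dataclasses import dataclass, replace

HEAD_RANGE_M = 0.3  # hypothetical limit for 3DoF+ head movement, in metres

@dataclass(frozen=True)
class Pose:
    yaw: float = 0.0    # rotations, in degrees
    pitch: float = 0.0
    roll: float = 0.0
    x: float = 0.0      # translations, in metres
    y: float = 0.0
    z: float = 0.0

def _clamp(value, limit):
    return max(-limit, min(limit, value))

def constrain(pose, mode):
    """Return the part of `pose` that a given immersion mode can render."""
    if mode == "3DoF":   # rotation only: the viewer position is fixed
        return replace(pose, x=0.0, y=0.0, z=0.0)
    if mode == "3DoF+":  # rotation plus limited head translation
        return replace(pose,
                       x=_clamp(pose.x, HEAD_RANGE_M),
                       y=_clamp(pose.y, HEAD_RANGE_M),
                       z=_clamp(pose.z, HEAD_RANGE_M))
    if mode == "6DoF":   # rotation plus free translation
        return pose
    raise ValueError(f"unknown mode: {mode}")

walker = Pose(yaw=30.0, x=1.0, y=-0.1, z=2.0)
for mode in ("3DoF", "3DoF+", "6DoF"):
    print(mode, constrain(walker, mode))
```

The rotations are untouched in all three modes; only the amount of translation that the system can honour changes.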

Currently there are several activities in MPEG that aim at developing standards that support some form of immersivity. While they had different starting points, they are likely to converge to one or, at least, a cluster of points (hopefully not to a cloud?).

OMAF

Omnidirectional Media Application Format (OMAF) is not a way to compress immersive video but a storage and delivery format. Its main features are:

- Support of several projection formats in addition to the equi-rectangular one

- Signalling of metadata for rendering of 360ᵒ monoscopic and stereoscopic audio-visual data

- Use of MPEG-H video (HEVC) and audio (3D Audio)

- Several ways to arrange video pixels to improve compression efficiency

- Use of the MP4 File Format to store data

- Delivery of OMAF content with MPEG-DASH and MMT.
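As an illustration of what a projection format does, here is a minimal sketch of the equi-rectangular mapping from a viewing direction to a pixel position. The axis conventions (yaw from -180 to 180 degrees mapped left to right, pitch from 90 to -90 degrees mapped top to bottom) and the function name are assumptions chosen for simplicity; they are not the normative conventions of OMAF.

```python
def equirect_pixel(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to a pixel of an equi-rectangular picture.

    Assumed convention: yaw -180..180 spans the picture width,
    pitch +90 (up) .. -90 (down) spans the picture height.
    """
    u = (yaw_deg + 180.0) / 360.0 * width
    v = (90.0 - pitch_deg) / 180.0 * height
    # Wrap horizontally (the picture is periodic in yaw), clamp vertically.
    return int(u) % width, min(int(v), height - 1)

print(equirect_pixel(0.0, 0.0, 3840, 1920))     # looking straight ahead
print(equirect_pixel(-180.0, 90.0, 3840, 1920)) # top-left corner
```

Because every row of the picture covers the full 360 degrees of yaw, the areas near the poles are heavily oversampled, which is one reason other projection formats and pixel arrangements are of interest.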

MPEG released OMAF in 2018, and it is now published as an ISO standard (ISO/IEC 23090-2).

3DoF+

If the current version of OMAF is applied to a 3DoF+ scenario, the user may perceive parallax errors that become more annoying as the movement of the head gets larger.
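A rough feeling for why the error grows with head movement can be had from simple geometry. The sketch below assumes a single object straight ahead and a sideways head shift, and computes the angle by which the object should have moved in the field of view but does not when the picture ignores translation. The model and the function name are assumptions made for this example.

```python
import math

def parallax_error_deg(head_shift_m, object_distance_m):
    """Angular error, in degrees, for an object straight ahead when the
    head moves sideways by `head_shift_m` and the picture is not updated."""
    return math.degrees(math.atan2(head_shift_m, object_distance_m))

for shift in (0.0, 0.05, 0.10, 0.20):
    near = parallax_error_deg(shift, 1.0)    # object 1 m away
    far = parallax_error_deg(shift, 10.0)    # object 10 m away
    print(f"shift {shift:.2f} m: near {near:.2f} deg, far {far:.2f} deg")
```

Under this simple model the error grows with the head shift and is much larger for nearby objects than for distant ones.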

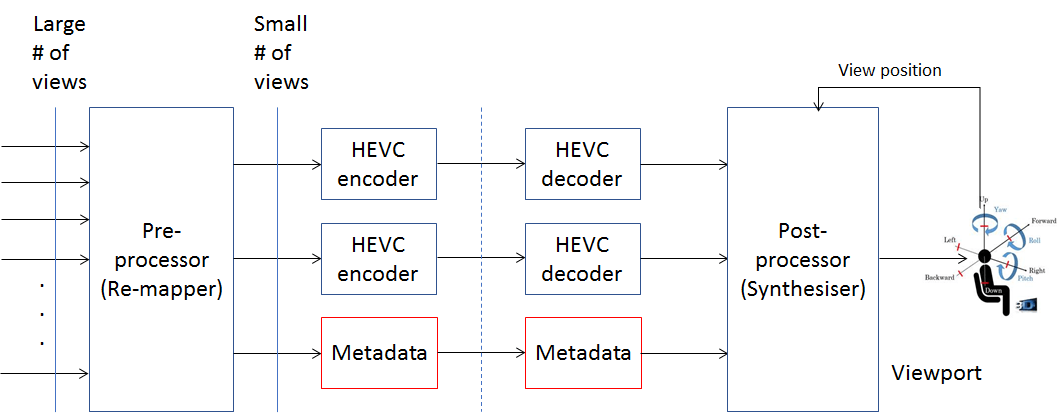

To address this problem, at its January 2019 meeting MPEG issued a call for proposals requesting appropriate metadata (see the red blocks in Figure 4) to help the post-processor present the best image for the viewer’s position if that image is available, or synthesise it if it is not.

Figure 4 – 3DoF+ use scenario


The 3DoF+ standard will be added to OMAF, which will be published as a 2nd edition. Both standards are planned to be completed in October 2020.

VVC

Versatile Video Coding (VVC) is the latest in the line of MPEG video compression standards supporting 3D vision. Currently VVC does not specifically include full-immersion technologies, as it only supports omnidirectional video as in HEVC. However, VVC could not only replace HEVC in Figure 4, but also be the target of other immersive technologies, as will be explained later.

Point Cloud Compression

3D point clouds can be captured with multiple cameras and depth sensors, with a number of points ranging from a few thousand up to a few billion, and with attributes such as colour, material properties etc. MPEG is developing two different standards, and the choice between them depends on whether the points are dense (Video-based PCC) or less so (Geometry-based PCC). The algorithms in both standards are lossy, scalable, progressive and support random access to subsets of the point cloud. See here for an example of a Point Cloud test sequence being used by MPEG for developing the V-PCC standard.
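To give an idea of what a point cloud with attributes looks like as data, and of what “lossy” can mean for it, here is a small sketch that quantises point positions onto a voxel grid and averages the colours that fall into the same voxel. This is a generic illustration with hypothetical names; it is not the algorithm of either of the two PCC standards.

```python
from collections import defaultdict

def voxelize(points, voxel_size):
    """Lossy toy representation of a coloured point cloud.

    points: list of ((x, y, z), (r, g, b)) tuples.
    Returns a dict mapping integer voxel indices to the mean colour
    of the points that fall into that voxel.
    """
    buckets = defaultdict(list)
    for (x, y, z), colour in points:
        key = (int(x // voxel_size), int(y // voxel_size), int(z // voxel_size))
        buckets[key].append(colour)
    return {
        key: tuple(sum(c[i] for c in colours) // len(colours) for i in range(3))
        for key, colours in buckets.items()
    }

points = [
    ((0.1, 0.2, 0.3), (200, 0, 0)),
    ((0.4, 0.1, 0.2), (100, 0, 0)),  # lands in the same voxel as the first point
    ((1.2, 0.1, 0.2), (0, 0, 255)),
]

voxels = voxelize(points, voxel_size=1.0)
print(voxels)
```

A larger voxel size gives fewer, coarser points, and a smaller one gives more points and more data.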

MPEG plans to release Video-based Point Cloud Compression as FDIS in October 2019 and Geometry-based Point Cloud Compression as FDIS in April 2020.

Alongside PCC, MPEG is working on Carriage of Point Cloud Data, with the goal of specifying how PCC data can be stored in ISOBMFF and transported with DASH, MMT etc.

Other immersive technologies

6DoF

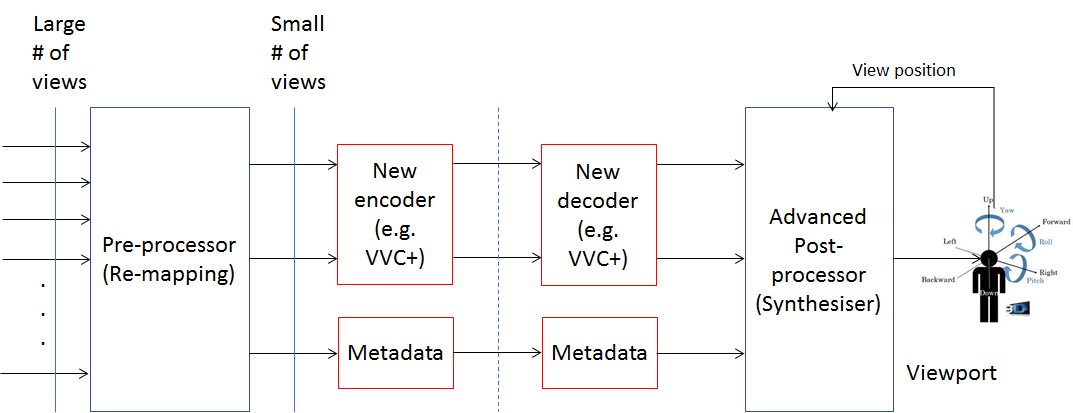

MPEG is carrying out explorations on technologies that enable 6 degrees of freedom (6DoF). The reference diagram for that work (see Figure 5) looks like a minor extension of the 3DoF+ reference model, but it may have huge technology implications.

Figure 5 – 6DoF use scenario

To enable a viewer to freely move in a space and enjoy a 3D virtual experience that matches the one in the real world, we still need some metadata as in 3DoF+ but also additional video compression technologies that could be plugged into the VVC standard.

Light field

The MPEG Video activity is all about standardising efficient technologies that compress digital representations of sampled electromagnetic fields in the visible range captured by digital cameras. Roughly speaking we have 4 types of camera:

1. Conventional cameras with a 2D array of sensors receiving the projection of a 3D scene

2. An array of cameras, possibly supplemented by depth maps

3. Point cloud cameras

4. Plenoptic cameras whose sensors capture the intensity of light from a number of directions that the light rays travel to reach the sensor.

Technologically speaking, #4 is an area that has not been shy in promises and is delivering on some of them. However, economic sustainability for companies engaged in developing products for the entertainment market has been a challenge.

MPEG is currently engaged in Exploration Experiments (EE) to check

- The coding performance of Multiview Video Data (#2) for 3DoF+ and 6DoF, and Lenslet Video Data (#4) for Light Field

- The relative coding performance of Multiview coding and Lenslet coding, both for Lenslet Video Data (#4).
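To see how Lenslet Video Data can be fed to a multiview coder at all, it helps to look at an idealised lenslet picture. In the sketch below each microlens is assumed to cover a square block of n×n pixels, and the pixel at position (a, b) inside every block is assumed to correspond to the same direction of light. Collecting those pixels gives one view per direction. This is a simplified model with hypothetical names, stated here as an assumption and not as the procedure used in the Exploration Experiments; real lenslet data needs calibration, and the microlens grid is often not square.

```python
def lenslet_to_views(lenslet, n):
    """Rearrange an idealised lenslet picture into n*n views.

    lenslet: list of rows of pixel values, where each microlens covers
             an n x n block of pixels (an assumption of this sketch).
    Returns a dict mapping (a, b) to the view built from the pixel at
    offset (a, b) inside every block.
    """
    rows, cols = len(lenslet), len(lenslet[0])
    views = {}
    for a in range(n):
        for b in range(n):
            views[(a, b)] = [
                [lenslet[r][c] for c in range(b, cols, n)]
                for r in range(a, rows, n)
            ]
    return views

# A 4x4 lenslet picture: 2x2 microlenses, each covering 2x2 pixels.
lenslet = [
    [1, 2, 5, 6],
    [3, 4, 7, 8],
    [9, 10, 13, 14],
    [11, 12, 15, 16],
]

views = lenslet_to_views(lenslet, n=2)
print(views[(0, 0)])
print(views[(1, 1)])
```

The same samples can thus be coded either in their lenslet arrangement or as a set of views, which is why comparing the two approaches on the same data is meaningful.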

However, MPEG is not engaged in checking the relative coding performance of #2 data and #4 data because there are no #2 and #4 test data for the same scene.

Conclusion

In the good(?) old times MPEG could develop video coding standards – from MPEG-1 to VVC – by relying on established input video formats. This somehow continues to be true for Point Clouds as well. On the other hand, Light Field is a different matter because the capture technologies are still evolving and the actual format in which the data are provided has an impact on the actual processing that MPEG applies to reduce the bitrate.

MPEG has bravely picked up the gauntlet and its machine is grinding data to provide answers that will eventually lead to one or more visual compression standards to enable rewarding immersive user experiences.

MPEG is planning a “Workshop on standard coding technologies for immersive visual experiences” in Gothenburg (Sweden) on 10 July 2019. The workshop, open to the industry, will be an opportunity for MPEG to meet its client industries, report on its results and discuss industries’ needs for immersive visual experiences standards.
