Introduction

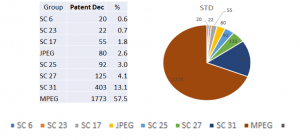

In “Is there a logic in MPEG standards?” I described the first steps in MPEG’s life, steps that look so “easy” now: MPEG-1 (1988) for interactive video and digital audio broadcasting; MPEG-2 (1991) for digital television; MPEG-4 (1993) for digital audio and video on fixed and mobile internet; MPEG-7 (1997) for audio-video-multimedia metadata; and MPEG-21 (2000) for trading of digital content. These 5 standards alone, whose starting dates span 12 years, i.e. 40% of MPEG’s lifetime, include 86 specifications, i.e. 43% of the entire production of MPEG standards.

MPEG-21 was the first to depart from the “one and trine” nature of MPEG standards (Systems, Video and Audio), and that departure continued until MPEG-H. Being one and trine is a good qualification for success but, as this paper will show, MPEG standards do not have to be one and trine to be successful.

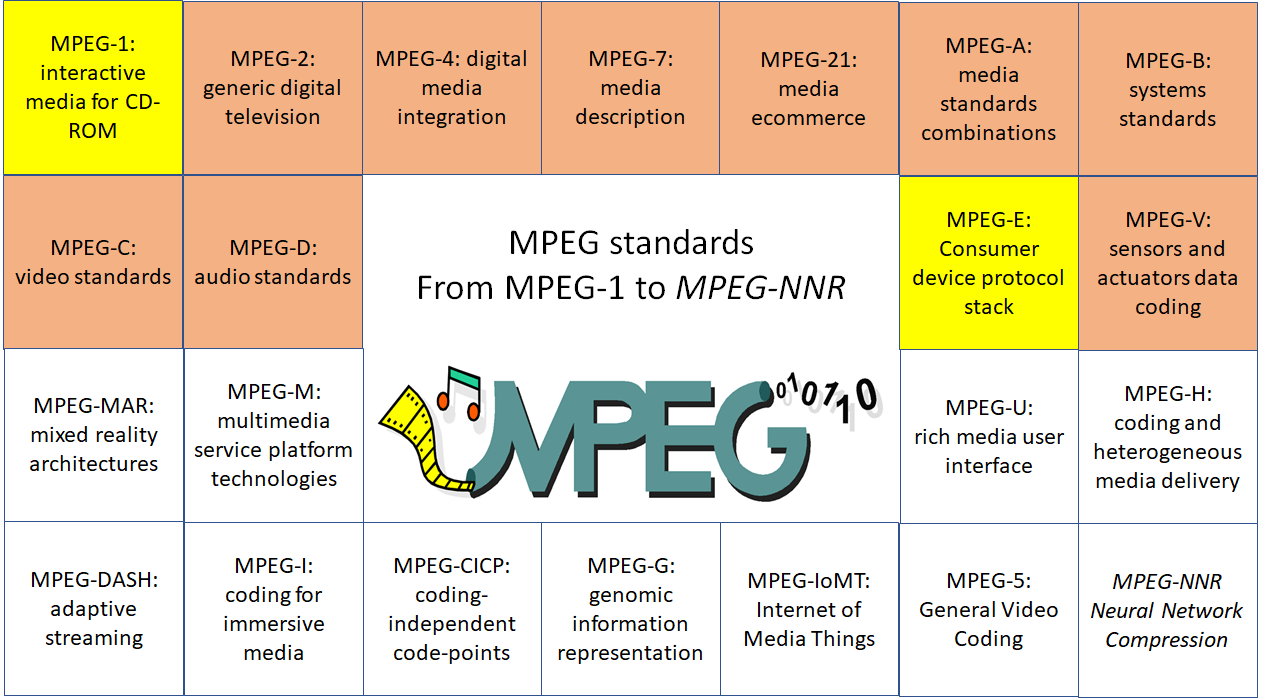

A bird’s eye view of MPEG standards

The figure below presents a view of all MPEG standards, whether completed, under development or planned. Yellow indicates that the standard has been dormant for quite some time, light brown indicates that the standard is still active, and white indicates standards that will be illustrated in the future.

MPEG-A

The official title of MPEG-A is Multimedia Application Formats. The idea behind MPEG-A is kind of obvious: we have standards for media elements (audio, video, 3D graphics, metadata etc.), but what should one do to be interoperable when combining different media elements? MPEG-A is therefore a suite of specifications that define application formats integrating existing MPEG technologies to provide interoperability for specific applications. Unlike the preceding standards, which provided generic technologies for specific contexts, MPEG-A specifications are united by the task of combining MPEG and, when necessary, other technologies for specific needs.

An overview of the MPEG-A standard is available here. Some of the 20 MPEG-A specifications are briefly described below:

- Part 2 – MPEG music player application format specifies an “extended MP3 format” to enable augmented sound experiences

- Part 3 – MPEG photo player application format specifies additional information to be attached to a JPEG file to enable augmented photo experiences

- Part 4 – Musical slide show application format is a superset of the Music and Photo Player Application Formats enabling slide shows accompanied by music

- Part 6 – Professional archival application format specifies a format for carriage of content, metadata and logical structure of stored content and related data protection, integrity, governance, and compression tools

- Part 10 – Surveillance application format specifies a format for storage and exchange of surveillance data, including compressed video and audio, file format and metadata

- Part 13 – Augmented reality application format specifies a format enabling the consumption of 2D/3D multimedia content, both stored and real-time, and both natural and synthetic

- Part 15 – Multimedia Preservation Application Format specifies the Multimedia Preservation Description Information (MPDI) that enables a user to discover, access and deliver multimedia resources

- Part 18 – Media Linking Application Format specifies a data format called “bridget”, a link from a media item to another media item that includes source, destination, metadata etc.

- Part 19 – Common Media Application Format combines and restricts different technologies to deliver and combine CMAF Media Objects in a flexible way to form multimedia presentations adapted to specific users, devices, and networks

- Part 22 – Multi-Image Application Format enables precise interoperability points for creating, reading, parsing, and decoding images embedded in a High Efficiency Image File (HEIF).

MPEG-B

The official title of MPEG-B is MPEG systems technologies. After developing MPEG-1, -2, -4 and -7, MPEG realised that there were specific systems technologies that did not fit naturally into Part 1 of any of those standards. Thus, having already used the letter A for MPEG-A, MPEG decided to use the letter B for this new family of specifications.

MPEG-B is composed of 13 parts, some of which are:

- Part 1 – Binary MPEG format for XML, also called BiM, specifies a set of generic technologies for encoding XML documents, adding to and integrating the specifications developed in MPEG-7 Part 1 and MPEG-21 Part 16

- Part 4 – Codec configuration representation specifies a framework that enables a terminal to build a new video or 3D Graphics decoder by assembling standardised tools expressed in the RVC-CAL language

- Part 5 – Bitstream Syntax Description Language (BSDL) specifies a language to describe the syntax of a bitstream

- Part 7 – Common encryption format for ISO base media file format files specifies elementary stream encryption and encryption parameter storage to enable a single MP4 file to be used on different devices supporting different content protection systems

- Part 9 – Common Encryption for MPEG-2 Transport Streams is a specification similar to Part 7, for MPEG-2 Transport Streams

- Part 11 – Green metadata specifies metadata that enable a decoder to consume less energy while still providing good-quality video

- Part 12 – Sample Variants defines a Sample Variant framework to identify the content protection system used in the client

- Part 13 – Media Orchestration contains tools for orchestrating in time (synchronisation) and space the automated combination of multiple media sources (e.g. cameras, microphones) into a coherent multimedia experience rendered on multiple devices simultaneously

- Part 14 – Partial File Format enables the description of an MP4 file partially received over lossy communication channels by providing tools to describe reception data and the received data, and to document transmission information

- Part 15 – Carriage of Web Resources in MP4 FF specifies how to use MP4 File Format tools to enrich audio/video content, as well as audio-only content, with synchronised, animated, interactive web data, including overlays.

MPEG-C

The official title of MPEG-C is MPEG video technologies. As for systems, MPEG realised that there were specific video technologies, supplemental to video compression, that did not fit naturally into Part 2 of any of the MPEG-1, -2, -4 and -7 standards.

MPEG-C is composed of 6 parts, two of which are:

- Part 1 – Accuracy requirements for implementation of integer-output 8×8 inverse discrete cosine transform was created after IEEE had discontinued a similar standard on which important video coding standards are based

- Part 4 – Media tool library contains modules called Functional Units expressed in the RVC-CAL language. These can be used to assemble some of the main MPEG video coding standards, including HEVC, and 3D Graphics compression standards, including 3DMC.

MPEG-D

The official title of MPEG-D is MPEG audio technologies. Unlike MPEG-C, Parts 1, 2 and 3 of MPEG-D actually specify audio codecs. These are not generic, like those of MPEG-1, MPEG-2 and MPEG-4, but are intended to address specific application targets.

MPEG-D is composed of 5 parts, the first 4 of which are:

- Part 1 – MPEG Surround specifies an extremely efficient method for coding multi-channel sound by transmitting 1) a compressed stereo or mono audio program and 2) a low-rate side-information channel. This retains backward compatibility with the now ubiquitous stereo playback systems while allowing next-generation players to present a high-quality multi-channel surround experience

- Part 2 – Spatial Audio Object Coding (SAOC) specifies an audio coding algorithm capable of efficiently handling individual audio objects (e.g. voices, instruments, ambience) in an audio mix and of allowing listeners to adjust the mix to their personal taste, e.g. by changing the rendering configuration of the audio scene from stereo to surround or possibly binaural reproduction

- Part 3 – Unified Speech and Audio Coding (USAC) specifies an audio coding algorithm that provides consistent quality for mixed speech and music content, with better quality than codecs optimised for either speech or music alone

- Part 4 – Dynamic Range Control (DRC) specifies a unified and flexible format supporting comprehensive dynamic range and loudness control, addressing a wide range of use cases including media streaming and broadcast applications. The DRC metadata attached to the audio content can be applied during playback to enhance the user experience in scenarios such as ‘in a crowded room’ or ‘late at night’
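The actual MPEG Surround algorithm is far more elaborate, but the “downmix plus side information” principle behind Part 1 can be illustrated with a toy sketch, shown here for two channels rebuilt from a mono downmix. It assumes a single channel level difference (CLD) per block of samples; the block size, the function names `encode` and `decode` and the gain rule are hypothetical choices for illustration, not taken from the standard. Reconstruction is exact only when, within each block, the two channels are in-phase scaled copies of the same signal.

```python
import math
from typing import List, Tuple

# Toy "downmix + side information" coder: two channels from one mono downmix.
# NOT the MPEG Surround algorithm: real systems work on time/frequency tiles
# and send further parameters (e.g. inter-channel coherence).

BLOCK = 4  # hypothetical number of samples sharing one side parameter


def energy(x: List[float]) -> float:
    """Sum of squared samples."""
    return sum(s * s for s in x)


def encode(left: List[float], right: List[float]) -> Tuple[List[float], List[float]]:
    """Return a mono downmix plus one channel level difference (dB) per block."""
    mono = [(a + b) / 2 for a, b in zip(left, right)]
    eps = 1e-12  # keeps the ratio finite for silent blocks
    cld_db = []
    for start in range(0, len(mono), BLOCK):
        el = energy(left[start:start + BLOCK])
        er = energy(right[start:start + BLOCK])
        cld_db.append(10 * math.log10((el + eps) / (er + eps)))
    return mono, cld_db


def decode(mono: List[float], cld_db: List[float]) -> Tuple[List[float], List[float]]:
    """Rebuild left/right from the downmix and the per-block side parameters."""
    left, right = [], []
    for i, s in enumerate(mono):
        r = 10 ** (cld_db[i // BLOCK] / 20)  # amplitude ratio left/right
        g_left = 2 * r / (1 + r)
        g_right = 2 / (1 + r)  # g_left + g_right == 2, so (L' + R') / 2 == mono
        left.append(g_left * s)
        right.append(g_right * s)
    return left, right


if __name__ == "__main__":
    left = [1.0, -0.5, 0.25, 2.0, 0.0, 1.0, -1.0, 0.5]
    right = [0.5, -0.25, 0.125, 1.0, 0.0, 2.0, -2.0, 1.0]
    mono, params = encode(left, right)
    print(len(mono), len(params))  # prints "8 2"
    rebuilt_left, rebuilt_right = decode(mono, params)
    print(max(abs(a - b) for a, b in zip(rebuilt_left, left)))
```

A legacy player would simply play `mono` and ignore `params`, which is the backward compatibility the text describes; a newer player calls `decode` to rebuild the channels. Constraining the two gains to sum to 2 keeps the upmix consistent with the downmix, since averaging the rebuilt channels gives back `mono`.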

MPEG-E

This standard is the result of an entirely new direction of MPEG standardisation. Starting from the need to define APIs that applications can call to access key MPEG technologies, MPEG issued a Call for Proposals and received several responses. MPEG reviewed the responses and developed the ISO/IEC standard called Multimedia Middleware.

MPEG-E is composed of 8 parts, the first 7 of which are:

- Part 1 – Architecture specifies the MPEG Multimedia Middleware (M3W) architecture that allows applications to execute multimedia functions without requiring detailed knowledge of the middleware and to update, upgrade and extend the M3W

- Part 2 – Multimedia application programming interface (API) specifies the M3W API that provides media functions suitable for products with different capabilities for use in multiple domains

- Part 3 – Component model specifies the M3W component model and the support API for instantiating and interacting with components and services

- Part 4 – Resource and quality management, Part 5 – Component download, Part 6 – Fault management and Part 7 – System integrity management specify the support API and the technology used for M3W Resource and Quality Management, Component Download, Fault Management and Integrity Management, respectively

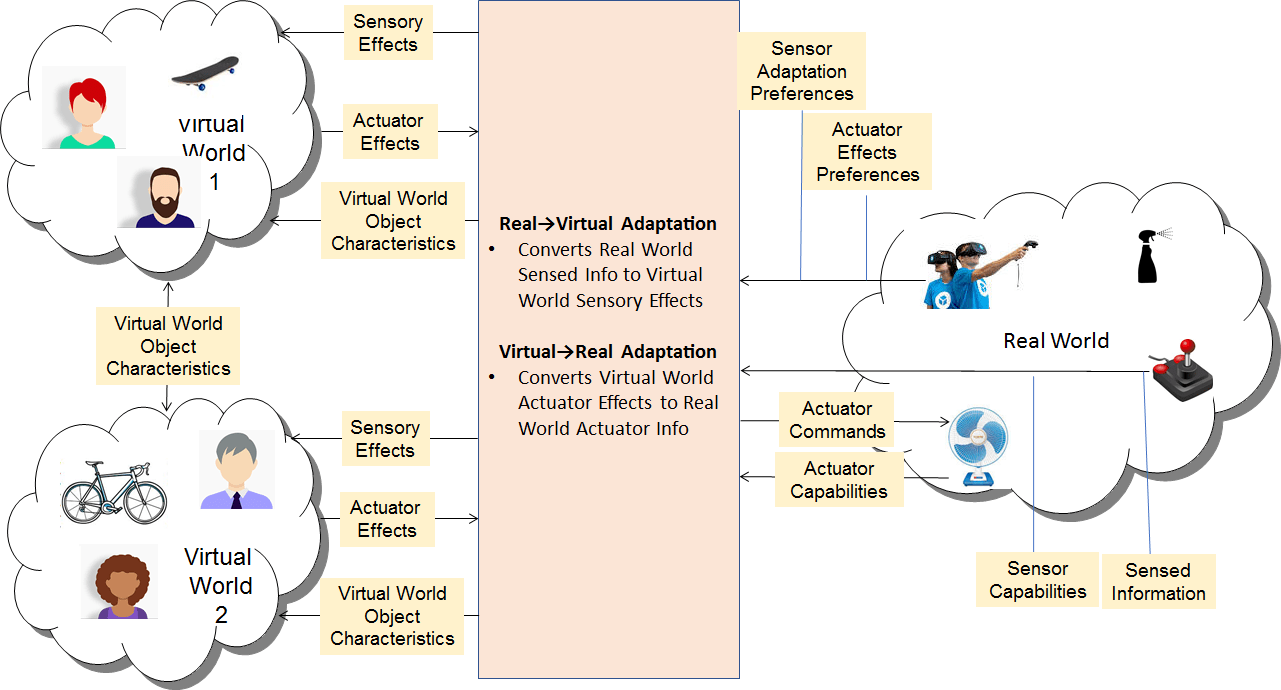

MPEG-V

The development of the MPEG-V standard, Media context and control, started in 2006 from the consideration that MPEG media – audio, video, 3D graphics etc. – offer virtual experiences that may be a digital replica of a real world, a digital instance of a virtual world or a combination of natural and virtual worlds. At that time, however, MPEG could not offer users any means to interact with those worlds.

MPEG undertook the task of providing standard interactivity technologies that allow a user to:

- Map their real-world sensor and actuator context to a virtual-world sensor and actuator context, and vice versa, and

- Achieve communication between virtual worlds.

This is depicted in the figure.

All data streams indicated are specified in one or more of the 7 MPEG-V parts, the first 6 of which are:

- Part 1 – Architecture expands on the figure above

- Part 2 – Control information specifies the interoperability of control devices (actuators and sensors) in real and virtual worlds

- Part 3 – Sensory information specifies the XML Schema-based Sensory Effect Description Language to describe actuator commands such as light, wind, fog, vibration, etc. that trigger human senses

- Part 4 – Virtual world object characteristics defines a base type of attributes and characteristics of the virtual world objects shared by avatars and generic virtual objects

- Part 5 – Data formats for interaction devices specifies syntax and semantics of data formats for interaction devices – Actuator Commands and Sensed Information – required to achieve interoperability in controlling interaction devices (actuators) and in sensing information from interaction devices (sensors) in real and virtual worlds

- Part 6 – Common types and tools specifies syntax and semantics of data types and tools used across MPEG-V parts.

Conclusion

The standards from MPEG-A to MPEG-V include 59 specifications that extend over the entire 30 years of MPEG activity. These standards account for 29% of the entire production of MPEG standards.

In this period MPEG standards have addressed more of the same technologies – systems (MPEG-B), video (MPEG-C) and audio (MPEG-D) – and have covered other features beyond those initially addressed: application formats (MPEG-A), media application life cycle (MPEG-E), and interaction of the real world with virtual worlds and between virtual worlds (MPEG-V).

Media technologies evolve and so do their applications. Sometimes applications succeed and sometimes fail. So do MPEG standards.

Posts in this thread

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age

- On my Charles F. Jenkins Lifetime Achievement Award

- Standards for the present and the future