Communication and standard are synonyms: to communicate, the symbols that convey our messages must be agreed upon, i.e. made standard. Humans have left traces of messages dating back many thousands of years, as in the case of the image of a bison found in the Cave of Altamira.

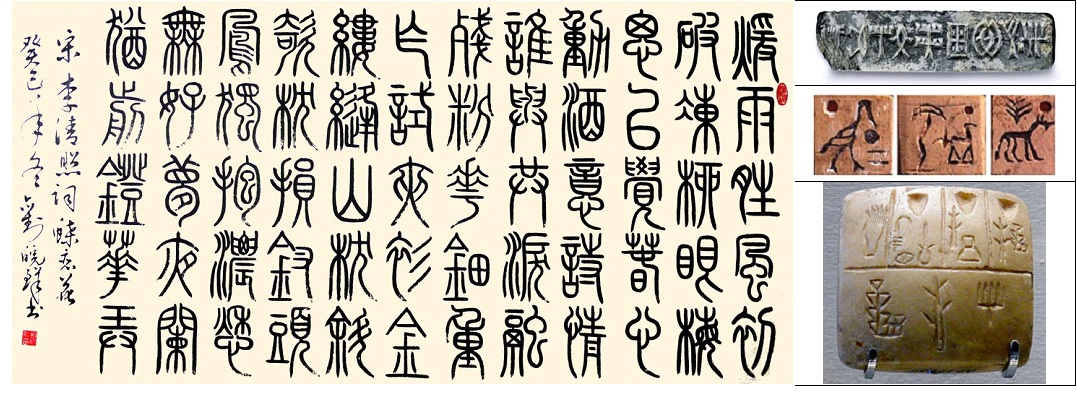

We also have more recent traces of messages, going back several thousand years, whose syntax and semantics we often know or have derived. Examples from China, the Indus Valley, the Nile Valley and Mesopotamia are in the figures below.

Looking back a few hundred years, we notice that symbols have been set in different ways. In the figure below the symbols were, in order, defined by a king, used by their inventor and successful entrepreneur, derived from preceding alphabets, and agreed in a technical committee.

The Morse telegraph was demonstrated in 1844 and its use spread like wildfire. This market reality prompted the establishment of the first modern (and international) standards organisation, the ITU, whose R series of recommendations covers telegraph transmission.

PAL was patented by Telefunken in 1962, presented to EBU in 1963, adopted as a national standard in various countries and became part of ITU-R Recommendation 624. Victor Company of Japan (JVC) released the VHS video cassette recorder in 1976 and IEC developed IEC 60774-1 “Interchangeability of recorded VHS video cassettes”. Philips and Sony released the Compact Disc in 1982 and IEC developed IEC 60908 “Compact disc digital audio system”.

In the early 1980s, the coverage of communication standards was rather complex:

- In ITU-T, Working Party 1 (WP 1) of Study Group XV (SG XV) set standards for speech and WP 2 set standards for video.

- In ITU-R, SG 10 set standards for audio and SG 11 set standards for video.

- In ISO, Technical Committee 36 (TC 36) set standards for Cinematography, TC 42 for Photography and Subcommittee 2 (SC 2) of TC 97 set standards for Character sets.

- In IEC, SC 60A set standards for recording of audio, SC 60B set standards for recording of video, TC 84 for audio-visual equipment, and SC 12A and SC 12G for receivers.

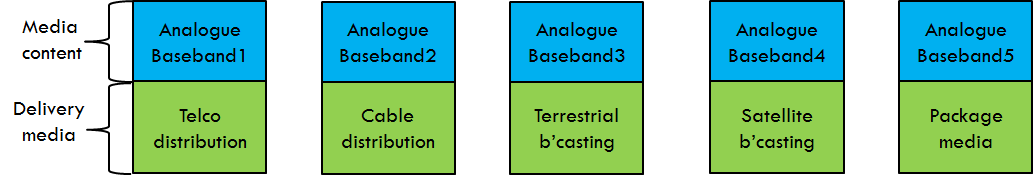

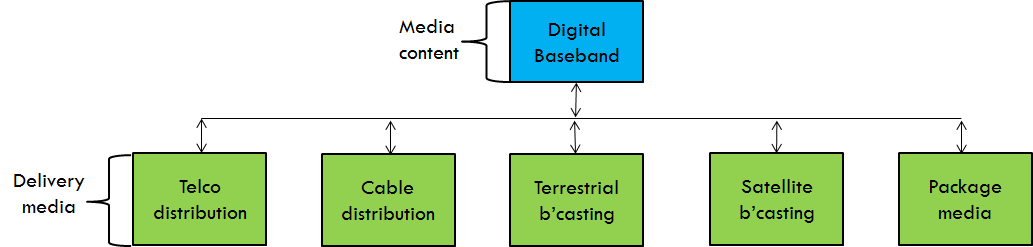

The article “The Mule, Foundation and MPEG” describes how the Mule came and unified disparate activities into one that covered compression of all media, including transport, serving all industries while at the same time being independent of any industry. It turned a market reality made of non-communicating silos into one where content could move across industries. Some called this industry convergence, a phenomenon that was not endogenous but exogenous (because of the Mule).

This was just the first and most visible impact of the arrival of the Mule. However, more revolutions in media standards were in the making.

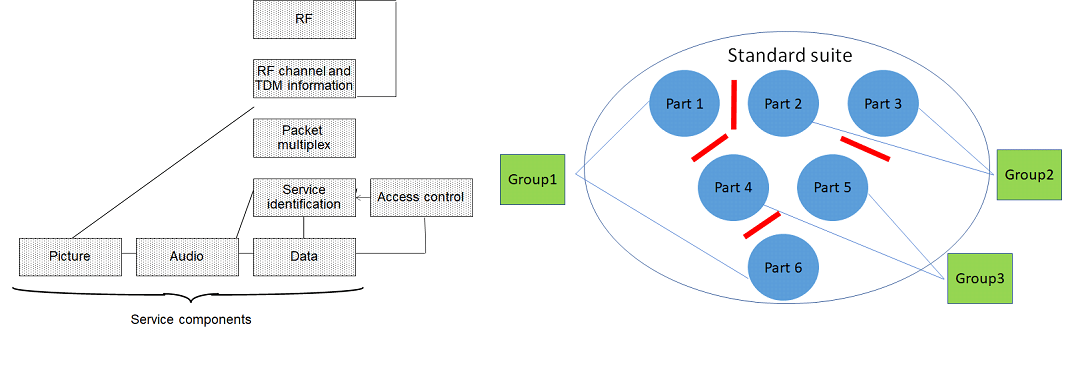

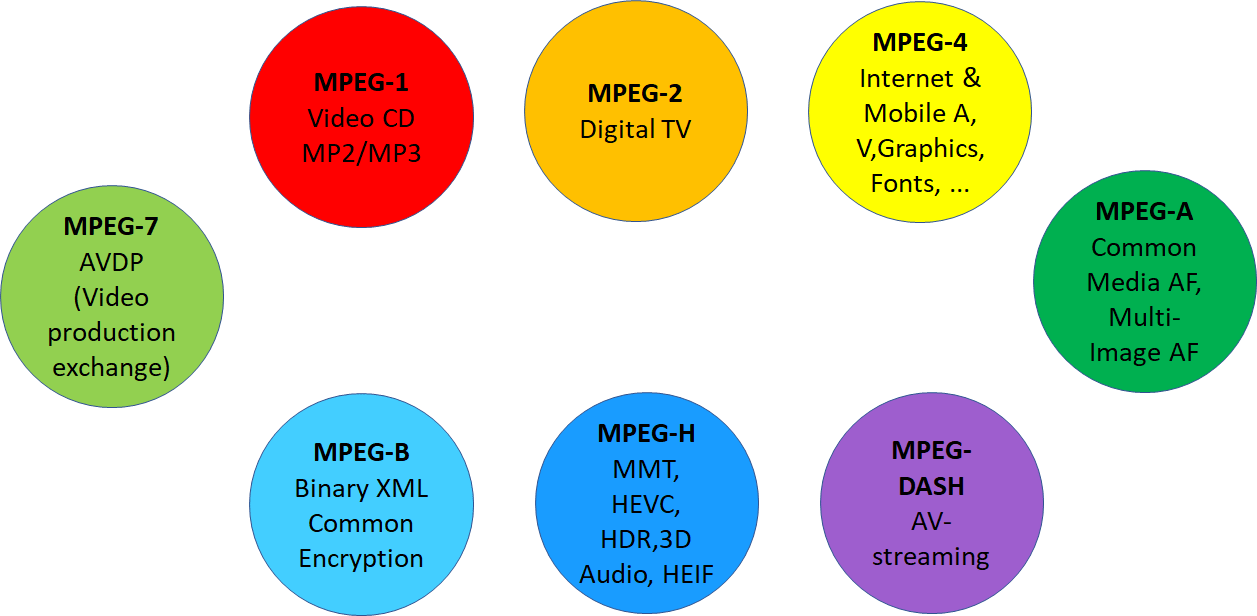

The Multiplexed Analogue Components (MAC) standard was a monolith, as well represented by the left-hand side figure below, where everything is specified in a single document, from radio frequency to the audio, video and data presented to the user. The Mule replaced this monolith with a structure of integrated standards that could be used independently or in conjunction with other standards.

This structure was adopted in the first standard (MPEG-1) and was preserved in most of the standards produced thereafter.

In MPEG-1: Pt. 1 Systems, Pt. 2 Video, Pt. 3 Audio

In MPEG-2: Pt. 1 Systems, Pt. 2 Video, Pt. 3 Audio

In MPEG-4: Pt. 1 Systems, Pt. 2 Video, Pt. 3 Audio, Pt. 10 AVC, Pt. 11 BIFS

In MPEG-7: Pt. 1 Systems, Pt. 3 Video, Pt. 4 Audio, Pt. 5 Multimedia

In MPEG-H: Pt. 1 MMT, Pt. 2 HEVC, Pt. 3 3D Audio

In MPEG-I: Pt. 2 OMAF, Pt. 3 VVC, Pt. 4 Immersive Audio, Pt. 5 V-PCC, Pt. 9 G-PCC, Pt. 12 MIAF

In the same vein, an additional piece of history shows how a middle ground between anarchy and inflexibility was found when a country wanted to enshrine a proprietary audio solution as part of MPEG-2. My objections were accompanied by a solution that accommodated the need that had prompted the request: MPEG-2 Systems now carries the format_identifier field, managed by a Registration Authority, whose value indicates the presence of a non-standard format.
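To make the mechanism concrete, here is a minimal sketch of how a receiver might read a format_identifier. It assumes the layout of the MPEG-2 Systems registration descriptor (a one-byte tag equal to 0x05, a one-byte length, a 32-bit format_identifier, then optional additional bytes). The function and class names are hypothetical, and the sample value `AC-3` is only illustrative.

```python
from typing import NamedTuple

# Assumption: descriptor_tag value of the MPEG-2 Systems registration descriptor.
REGISTRATION_DESCRIPTOR_TAG = 0x05


class RegistrationDescriptor(NamedTuple):
    format_identifier: bytes  # 4 bytes assigned by the Registration Authority
    additional_info: bytes    # optional bytes defined by the registrant


def parse_registration_descriptor(data: bytes) -> RegistrationDescriptor:
    """Parse one descriptor: tag (1 byte), length (1 byte), then the payload."""
    if len(data) < 2:
        raise ValueError("descriptor header is truncated")
    tag, length = data[0], data[1]
    if tag != REGISTRATION_DESCRIPTOR_TAG:
        raise ValueError(f"not a registration descriptor (tag 0x{tag:02X})")
    if length < 4 or len(data) < 2 + length:
        raise ValueError("descriptor payload is truncated")
    payload = data[2:2 + length]
    return RegistrationDescriptor(payload[:4], payload[4:])


# Illustrative descriptor: tag 0x05, length 4, identifier "AC-3".
example = bytes([0x05, 0x04]) + b"AC-3"
desc = parse_registration_descriptor(example)
print(desc.format_identifier.decode("ascii"))
```

A receiver that does not recognise the identifier can simply skip the stream, which is how the field lets non-standard formats coexist with standard ones.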

Another innovation was made in determining the conformity of an implementation to a standard. I like to call this the equivalent of the problem that human societies solved by delegating to the law the definition of what actions are legitimate and to the tribunals the decisions on whether specific actions are legitimate.

In the telecom world, Authorised Testing Laboratories used to ensure that devices from different manufacturers could connect to the network. However, the consumer electronics and IT worlds did not have a comparable notion of conformance testing. The Mule guided the establishment of the means to test an implementation for conformance, based on the following principles:

- An encoder should produce a bitstream that is correctly decoded by the standard software decoder

- A decoder should be able to correctly decode conformance testing bitstreams
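The two principles above can be sketched with a toy codec. This is only an illustration under stated assumptions: the "standard" here is a made-up run-length format of (count, value) byte pairs, not any MPEG syntax, and all function names are hypothetical.

```python
from typing import Callable, Iterable, Tuple

Codec = Callable[[bytes], bytes]


def reference_decoder(bitstream: bytes) -> bytes:
    """Toy 'standard' decoder: the bitstream is a sequence of (count, value) pairs."""
    if len(bitstream) % 2:
        raise ValueError("bitstream must consist of (count, value) pairs")
    out = bytearray()
    for i in range(0, len(bitstream), 2):
        count, value = bitstream[i], bitstream[i + 1]
        if count == 0:
            raise ValueError("a run length of zero is not allowed")
        out.extend([value] * count)
    return bytes(out)


def candidate_encoder(data: bytes) -> bytes:
    """An implementation under test: run-length encode with runs of at most 255."""
    out = bytearray()
    i = 0
    while i < len(data):
        run = 1
        while i + run < len(data) and data[i + run] == data[i] and run < 255:
            run += 1
        out += bytes([run, data[i]])
        i += run
    return bytes(out)


def encoder_conforms(encoder: Codec, sources: Iterable[bytes]) -> bool:
    """Principle 1: the reference decoder must correctly decode the encoder's output.
    The toy format is lossless, so 'correctly' also means the source is recovered."""
    for source in sources:
        try:
            if reference_decoder(encoder(source)) != source:
                return False
        except ValueError:
            return False
    return True


def decoder_conforms(decoder: Codec, vectors: Iterable[Tuple[bytes, bytes]]) -> bool:
    """Principle 2: the decoder must correctly decode the conformance bitstreams."""
    for bitstream, expected in vectors:
        try:
            if decoder(bitstream) != expected:
                return False
        except ValueError:
            return False
    return True


conformance_vectors = [(bytes([3, 65, 1, 66]), b"AAAB")]
print(encoder_conforms(candidate_encoder, [b"", b"AAAB", b"x" * 300]))
print(decoder_conforms(reference_decoder, conformance_vectors))
```

Note the asymmetry: the encoder is judged only through the bitstream it produces, so implementers remain free to compete on how they encode.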

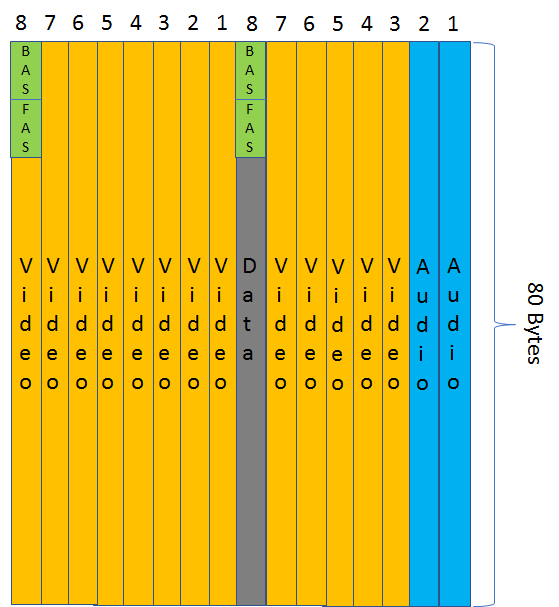

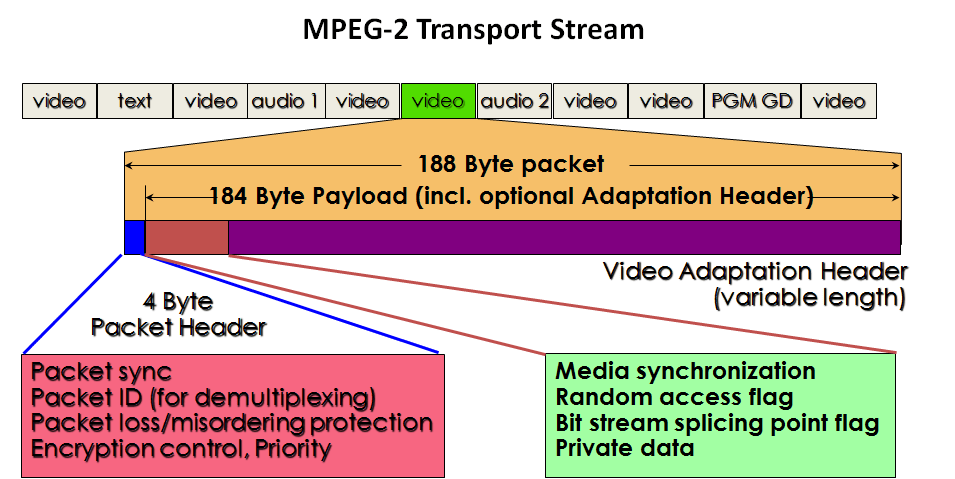

Another fundamental technical innovation was made in the digital transport area. In the early 1980s, ITU started the development of the H.221 recommendation: Frame structure for a 64 to 1920 kbit/s channel in audio-visual teleservices. In this standard the 8th bit in each octet carries the Service Channel. Within the Service Channel, bits 1-8 are for the Frame Alignment Signal (FAS) and bits 9-16 are for the Bit-rate Allocation Signal (BAS). Audio is always carried by the first B channel, e.g. by the first 2 subchannels, while Video and Data are carried by the other subchannels, less the bitrate allocated to FAS and BAS.
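As a rough illustration of this bit-stealing layout, the sketch below collects the Service Channel from a run of frame-aligned octets and splits off the FAS and BAS fields. It assumes that the "8th bit" is the least significant bit of each octet and that the input starts at a frame boundary. The function names and the bit patterns in the example are hypothetical, not taken from the recommendation.

```python
from typing import Iterable, List, Tuple

# Assumption: the "8th bit" of an octet is its least significant bit.
SERVICE_BIT_MASK = 0x01


def service_channel_bits(octets: bytes) -> List[int]:
    """Collect the Service Channel: one bit taken from every octet."""
    return [octet & SERVICE_BIT_MASK for octet in octets]


def bits_to_int(bits: Iterable[int]) -> int:
    """Pack bits into an integer, first bit most significant."""
    value = 0
    for bit in bits:
        value = (value << 1) | bit
    return value


def split_fas_bas(frame: bytes) -> Tuple[int, int]:
    """Return (FAS, BAS) from Service Channel bits 1-8 and 9-16 of an aligned frame."""
    if len(frame) < 16:
        raise ValueError("need at least 16 octets to read FAS and BAS")
    channel = service_channel_bits(frame)
    return bits_to_int(channel[0:8]), bits_to_int(channel[8:16])


# Arbitrary example patterns, chosen only to make the result easy to check.
fas_bits = [1, 0, 1, 0, 1, 0, 1, 0]
bas_bits = [0, 0, 0, 0, 1, 1, 1, 1]
# The upper 7 bits of each octet stand in for audio, video and data payload.
frame = bytes(0xFE | bit for bit in fas_bits + bas_bits) + bytes(64)
fas, bas = split_fas_bas(frame)
print(hex(fas), hex(bas))
```

The sketch shows why this design is rigid: media and signalling share the same octets at fixed bit positions, so changing the mix of services means changing the frame structure itself.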

The Mule took advantage of the new environment and silenced the backward and outdated. The MPEG-1 and MPEG-2 standards put the media world on the right track.

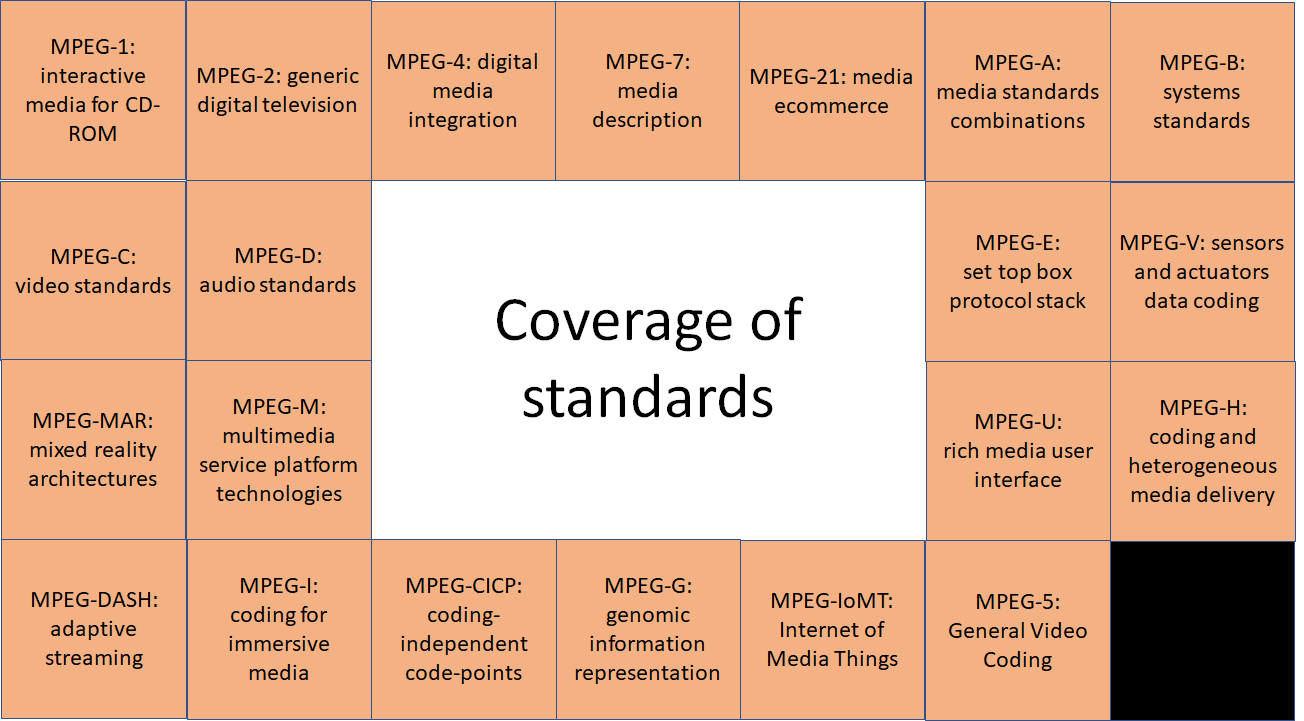

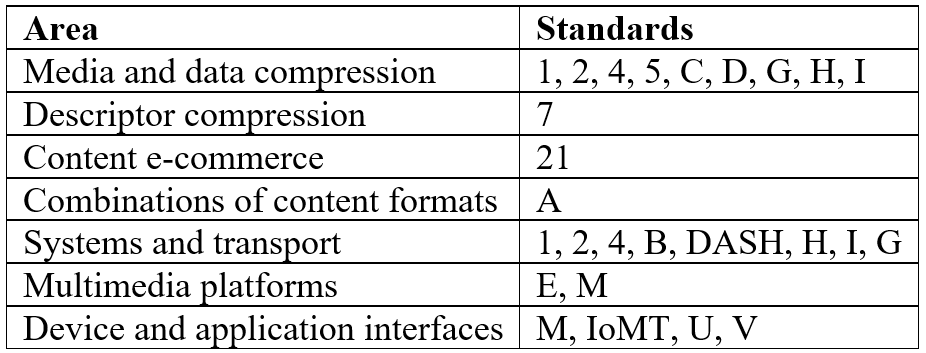

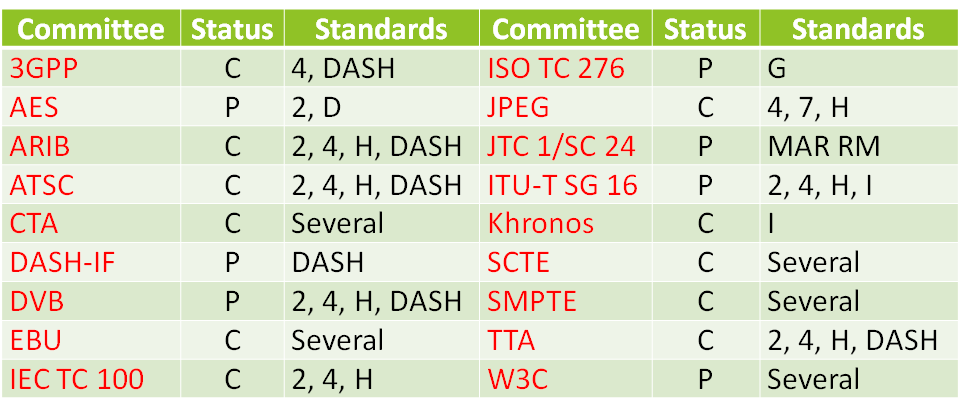

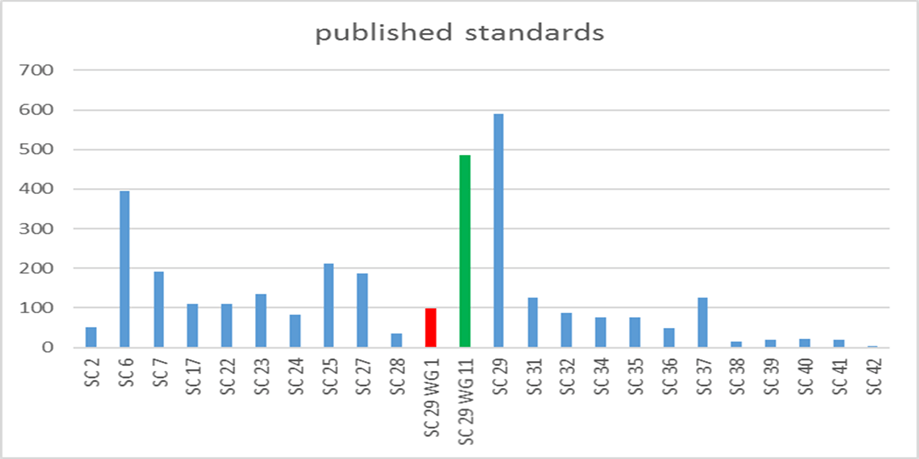

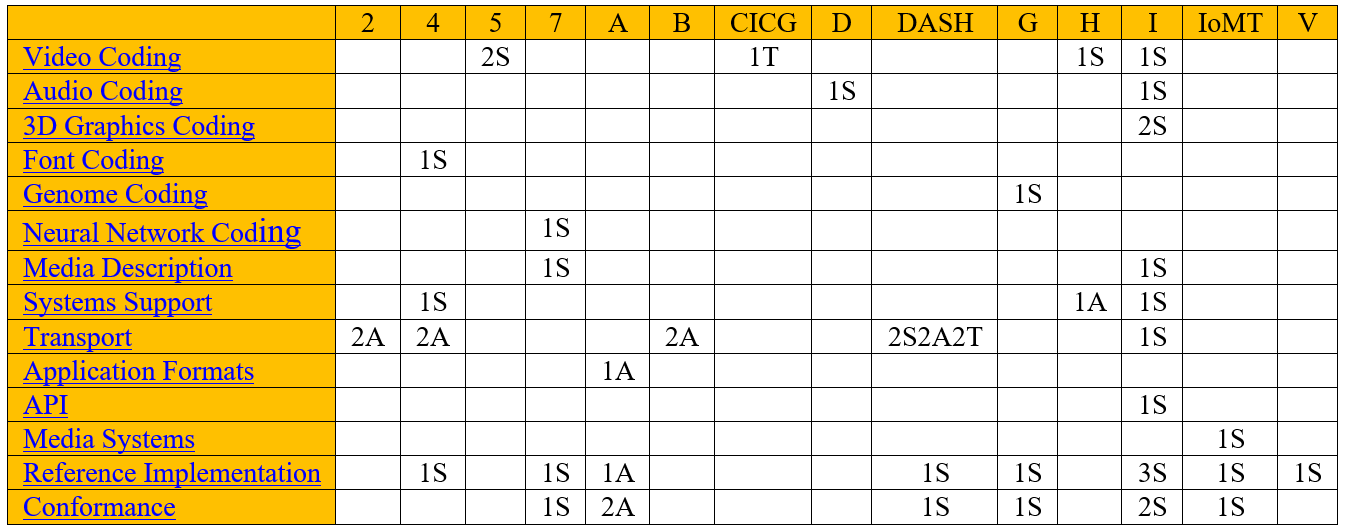

The notable thing is that the large number of standards shown below

applicable to such a wide application areas

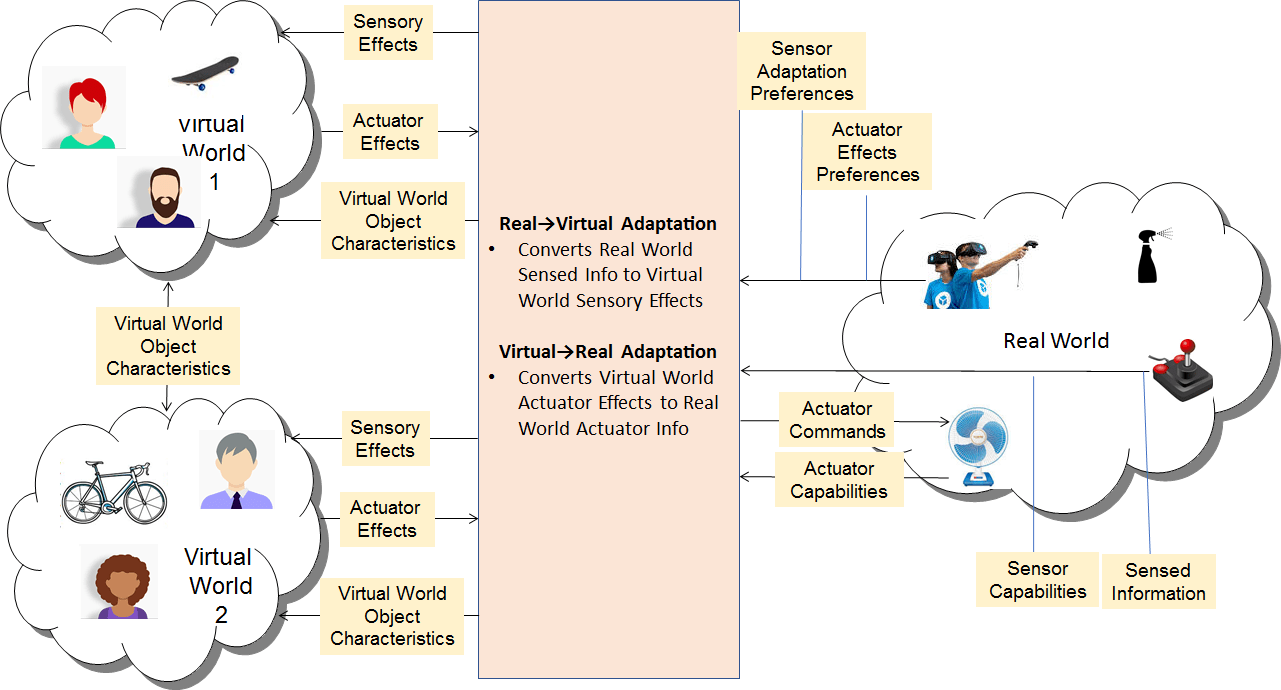

were creates without industry “control” by defining comprehensive models, as in the case of MPEG-V where standards for real-to-virtual and virtual-to-virtual interactions were developed

working in a community of Partners (P) and Customers (C).

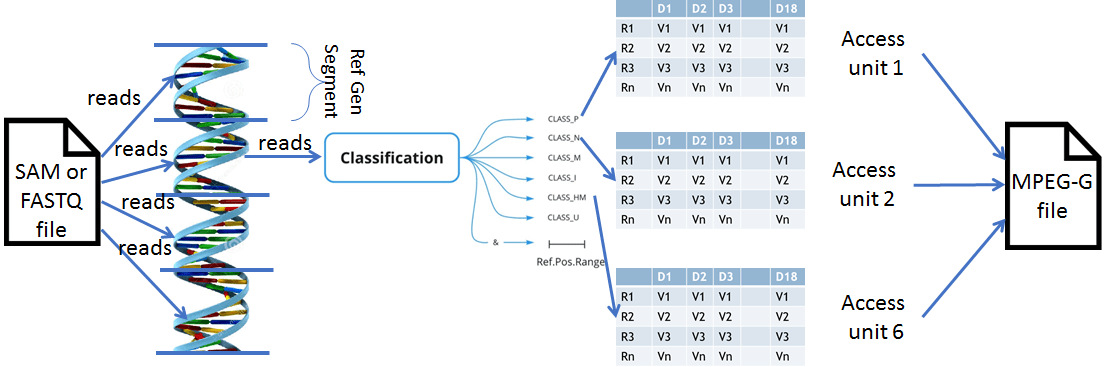

Audio, Video and 3D Graphics were major areas in need of compression standards, but other areas can also benefit from compression. Genomics is one of them, because high-speed sequencing machines can read the DNA of living organisms, but at the cost of storing large amounts of largely repetitive data. By combining data with comparable statistical features, it was possible to compress those data significantly more than is possible with currently used algorithms.

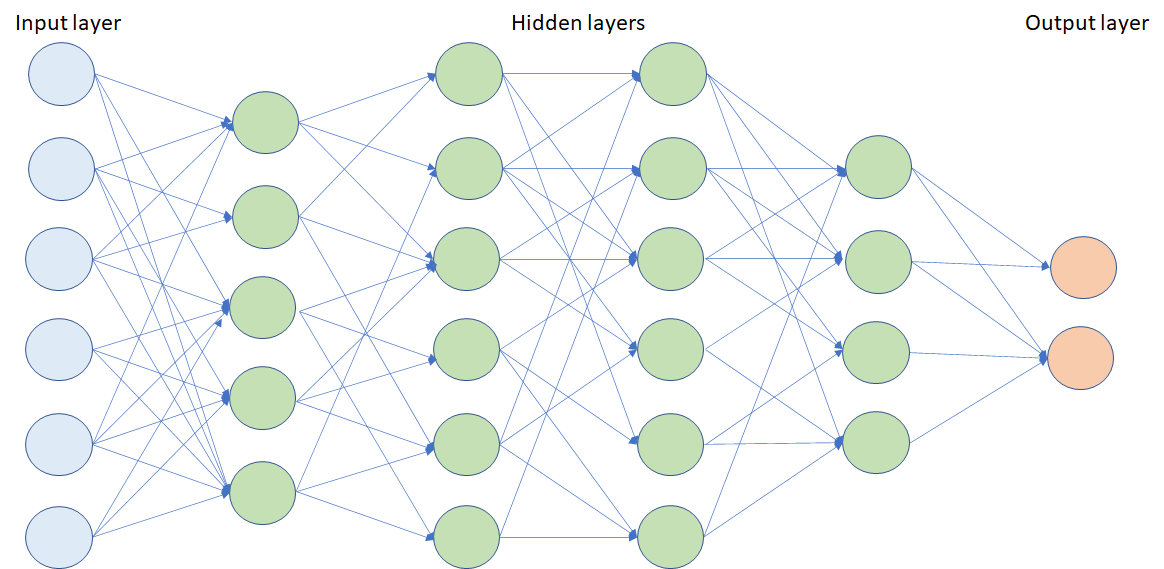

Neural networks, too, are not audio-visual data. Their use is spreading to many areas because of their unique features. However, the better neural networks perform, the bigger they tend to be, and performance is constantly improving. Compression can be applied to neural networks to reduce the time it takes to download a neural network-based application.

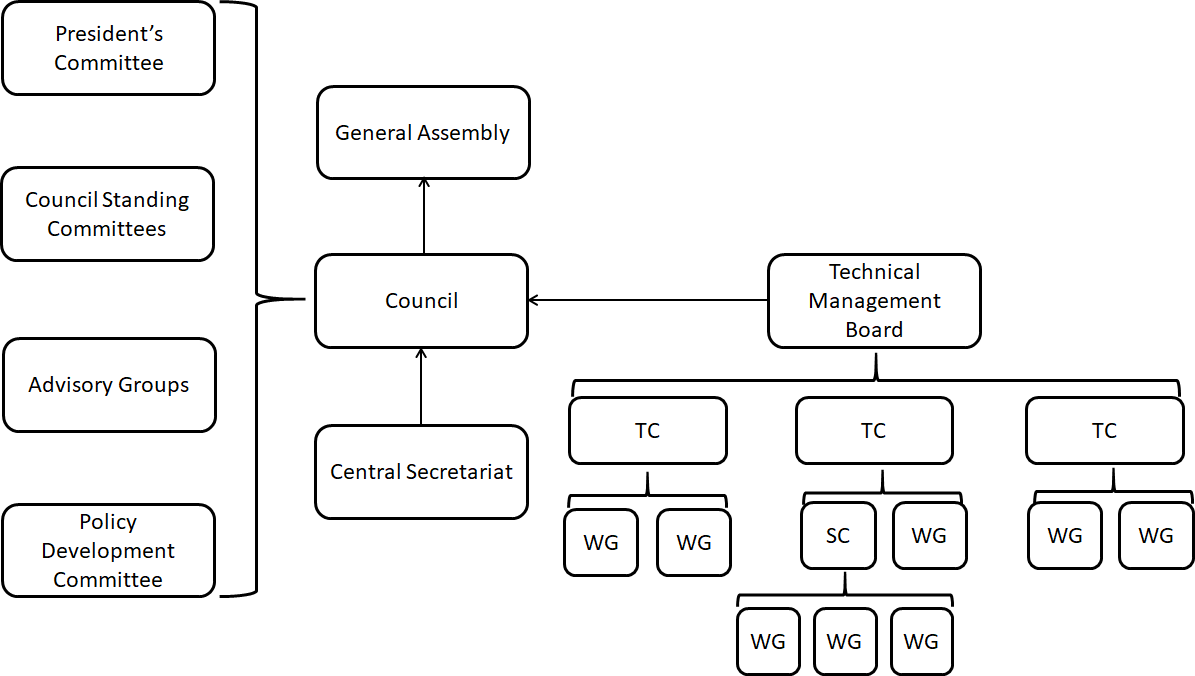

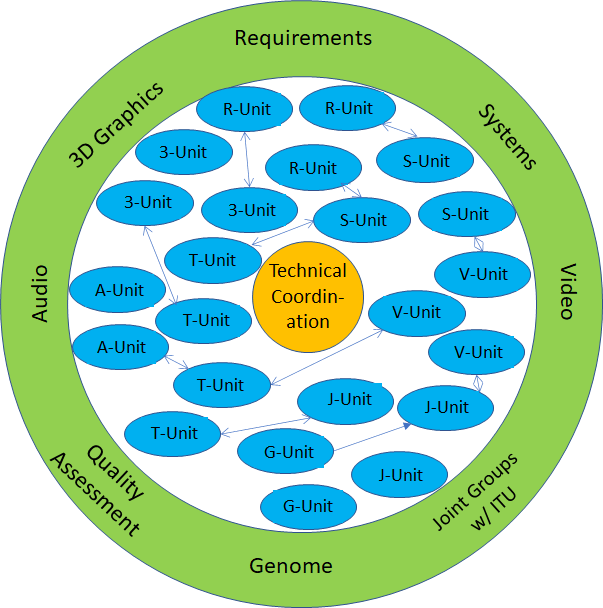

In an organisation where everything was hierarchical (actually, also feudal, chaotic, hypocritical, obtuse and incompetent), the Mule introduced a flat and fluid organisation where work actually happened in small groups combined in an ad hoc fashion to tackle multidisciplinary issues (the green names in the green annulus), coordinated by a group composed of the chairs of the green areas.

The hierarchical, feudal, chaotic, hypocritical, obtuse and incompetent organisation has many high-sounding organisational entities. Still, the organisation created by the Mule was definitely the most productive.

The figure above gives the aggregated result. However, as always in life, success has not been uniform. The figure below depicts the most successful standards and their main application domains.

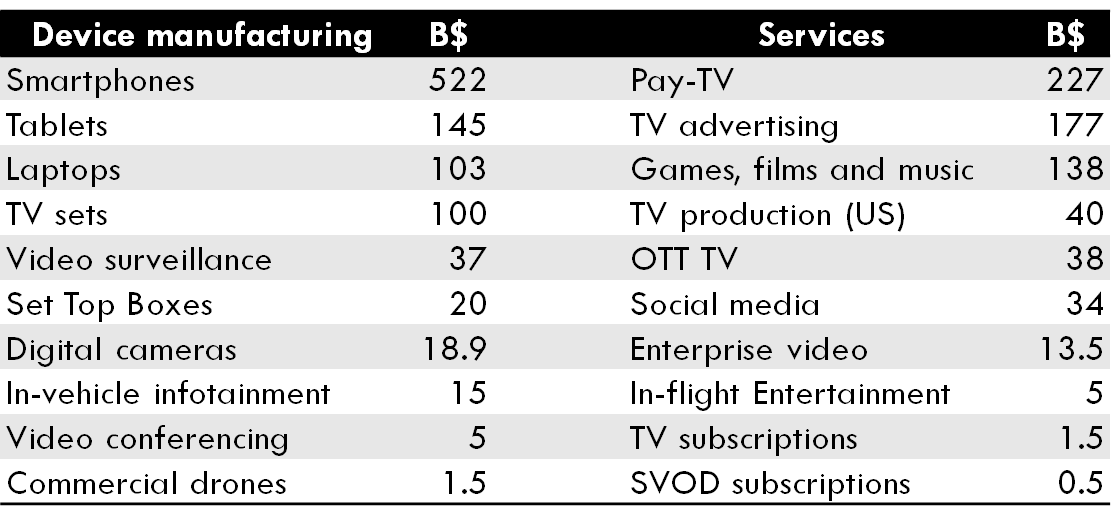

There is no doubt that the Mule’s organisation was a success. But how big was that success? With all its limitations, the market answers this question in the table below where the value of devices and services that have the standards as enablers is given. We are talking of a market value per annum of 1,500 billion USD, close to 2% of the Gross World Product.

Earlier in this paper I talked about the old way of going from products to standards. The table above shows that the Mule’s way of going from standards to products has more value.

But the new way adds a variable to the picture: the patent holders in the standard. This was managed differently for the different standards, using the best information that could be collected:

- MPEG-1

    - Video: no known licence

    - Audio: “enlightened” licence

- MPEG-2

    - Video & Systems: well-intentioned people established a patent pool

- MPEG-4

    - Visual: same patent pool

    - AVC: same patent pool

    - Audio: different patent pool

- MPEG-7

    - Attempt at creating a patent pool thwarted by one patent holder

- MPEG-H

    - HEVC: 3 patent pools, >10 SEP holders not in a patent pool

    - 3D Audio: no published licence

- MPEG-I

    - VVC: no news (good news?)

Anticipating this situation, the Mule thought that, if we don’t act, we risk becoming fossils. Indeed, after 30 years, things have substantially changed: scarcity of bandwidth need not be the constraining factor any longer; not everybody looks for the best, some just look for the good; and the market is mature, with many standard providers.

In response to these changed market conditions, three attempts were made to develop royalty-free standards. The depressing result was:

- Web Video Coding: AVC baseline with FRAND declarations

- Internet Video Coding: performance better than AVC with 3 FRAND declarations

- Video Coding for Browsers: 1 no-licence declaration

Some worked in ISO to put an obligation on those who make declarations that do not allow use of their patents to declare which of their technologies is infringed. This is, unfortunately, the right solution for the wrong problem. What we need is the identification of the claimed Standard Essential Patents (SEP), something that is suggested in the forms but seldom done.

Indeed, there were and are fossils at risk of extinction.

Going back to the main topic, the table below shows that there have been many compression standards for audio-video-3D Graphics, but also a significant number of data compression standards.

Building on that evidence, in July 2018 UNI, the Italian member of ISO, proposed to create a new ISO Technical Committee on Data Compression Technologies. The proposal was rejected.

That was a proposal that lacked a strategic analysis. In many cases the momentum of traditional data compression technologies is wearing off, while Artificial Intelligence plays an important role in more and more applications of industrial interest and improves the coding efficiency of existing data types while benefiting the coding of new data types.

But what is data coding? It is the transformation of data given in one representation to an equivalent representation that is more suitable for specific applications. The semantics of the data must be preserved as much as possible, but we also want to “bring out” the aspects of the semantics that are most important to an application. Traditional “fewer bits for about the same quality” compression, however, continues to be an important area.

AI technologies have a momentum that traditional technologies are losing. Moreover, there is a huge and global research effort that will make it possible to tap into the results of such areas as:

- representation learning: the discovery of data coding effective in solving AI tasks

- transfer learning: the adaptation of an AI model to work with different data

- edge AI: the deployment of AI models to the edge

- model integration: the creation of larger AI models by combining simpler models

- reproducibility of performance: giving an AI model the same level of performance in different contexts.

Thirty years of history have shown that standards are important because they ensure interoperability and integration of applications. As there is no organisation working for standards on data coding focused on AI as core technology, a new organisation should be created.

MPAI – Moving Picture, Audio and Data Coding by Artificial Intelligence – is the organisation built on the strategic analysis above. It is a not-for-profit organisation with the mission to promote the efficient use of Data

- By developing Technical Specifications of

    - Coding and decoding for any type of Data, especially using new technologies such as Artificial Intelligence, and

    - Technologies that facilitate integration of Data Coding and Decoding components in Information and Communication Technology systems, and

- By bridging the gap between Technical Specifications and their practical use through the development of Intellectual Property Rights Guidelines (“IPR Guidelines”), such as Framework Licences and other instruments.

Any legal entity supporting the mission of MPAI may apply for Membership, provided that it is able to contribute to the development of Technical Specifications for the efficient use of Data. Individuals representing technical departments of academic institutions may also apply for Associate Membership, stating their qualification in their application.

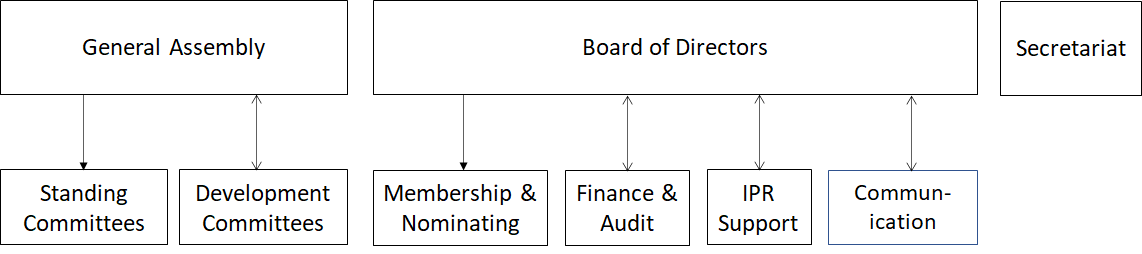

The organisational chart is given by the figure below.

MPAI is an inclusive organisation where

- Non-members may

    - Submit proposals of use cases

    - Contribute to the aggregation of use cases into areas

    - Participate in the development of requirements

- Associate Members may fully participate in and contribute to the development of MPAI standards with their technologies

- Principal Members may

    - Elect and participate in MPAI governance

    - Vote on policy matters

This is done by applying the MPAI Community guidelines:

- MPAI discusses use case proposals from non-members in open meetings, and proposals from members in open meetings if the proposing member agrees.

- Further discussions of technical proposals initiated in open meetings can continue by email, forum etc. with non-member participation.

- Discussion on and access to MPAI documents relevant to technical proposals discussed in open meetings is open during the Use Case stage.

- Discussion on technical proposals in open meetings may continue during the Requirements stage unless the General Assembly decides to bar non-members from participating.

- Participation by non-members in stages beyond Functional Requirements is excluded.

Use cases are where new standards may take shape, and MPAI devotes much attention to making sure that valuable ideas are identified, improved and, if possible, converted to standards. Use cases are collected in a document organised by data types: Still Pictures, Moving Pictures, Audio, Event Sequences and Other Data.

Each data type is subdivided into ten main application areas:

- Media & Entertainment

- Transportation

- Telco

- Information Technology

- Aerospace

- Manufacturing

- Healthcare

- Food & Beverage

- Science & Technology

- Other Domains

Each use case is then described with the following structure:

| Element | What it is about |
| --- | --- |
| Proponent | Proponent’s name and affiliation |
| Description | Explains and delimits the scope of the use case |
| Comments | General comment on why and how AI can support the use case |
| Examples | Illustrate coverage of standard use in different contexts |
| Requirements | Preliminary requirements clarifying the use case (full requirements in a subsequent stage) |
| Object of standard | Identifies normative part of standard |
| Benefits | Advantages offered by standard over existing solutions/new opportunities |
| Bottlenecks | Technical issues limiting or facilitating use of the standard |
| Social aspects | Cases where using the standard may have social impacts (optional) |
| Success criteria | Measure of standard success outcomes & impact |
| Feature table | Table of key features, listed and defined in the table below |

MPAI innovates the traditional standards organisation work plan by introducing a Commercial Requirements stage as follows:

Stage 1 – Use Cases (UC): Anybody, including non-members, may propose use cases. These are collected and aggregated into cohesive areas, possibly applicable across industries.

Stage 2 – Functional Requirements (FR): Functional requirements that the standard should support are identified and documented. In this and the preceding stage, non-members may participate in MPAI meetings, if members affected agree.

Stage 3 – Commercial Requirements (CR): The Framework Licence of the standard is developed. Those intending to contribute technologies to the standard approve the Framework Licence by qualified majority.

Stage 4 – Call for Technologies (CT): A Call is published requesting technologies that satisfy both the functional and the commercial requirements. All contributors to the standard declare they will make available the terms of their SEP licences according to the FWL after the standard is approved.

Stage 5 – Standard Development (SD): The standard is developed in a specific Development Committee on the basis of consensus.

Stage 6 – MPAI Standard (MS): The development of the standard is complete. MPAI members declare they will enter into a licensing agreement for other Members’ SEPs, if used, within 1 year after publication of SEP holders’ licensing terms. Non-MPAI members shall enter into a licensing agreement with SEP holders to use an MPAI standard.
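The six stages and the non-member participation rules stated earlier can be summarised in a small sketch. This is only one reading of the text, not MPAI's own tooling: the enum, the function names and the parameters `members_agree` and `barred_by_general_assembly` are hypothetical.

```python
from enum import IntEnum
from typing import Optional


class Stage(IntEnum):
    """The six stages of the work plan, in order."""
    USE_CASES = 1                # UC
    FUNCTIONAL_REQUIREMENTS = 2  # FR
    COMMERCIAL_REQUIREMENTS = 3  # CR
    CALL_FOR_TECHNOLOGIES = 4    # CT
    STANDARD_DEVELOPMENT = 5     # SD
    MPAI_STANDARD = 6            # MS


def next_stage(stage: Stage) -> Optional[Stage]:
    """Return the stage that follows, or None once the standard is complete."""
    if stage == Stage.MPAI_STANDARD:
        return None
    return Stage(stage + 1)


def non_member_may_participate(
    stage: Stage,
    members_agree: bool,
    barred_by_general_assembly: bool = False,
) -> bool:
    """Non-members may take part only up to Functional Requirements,
    and only if the members affected agree."""
    if stage > Stage.FUNCTIONAL_REQUIREMENTS:
        return False
    if stage == Stage.FUNCTIONAL_REQUIREMENTS and barred_by_general_assembly:
        # Assumption: the General Assembly decision applies at the requirements stage.
        return False
    return members_agree


for stage in Stage:
    print(stage.name, non_member_may_participate(stage, members_agree=True))
```

Placing Commercial Requirements before the Call for Technologies is the point of the ordering: contributors know the Framework Licence before they submit technology.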

MPAI has identified several initial areas covering video, audio and data for a variety of application domains that span from genomics to gaming applications. Five areas have reached the FR stage. Other areas are at the UC stage. A summary description of the areas that have reached the FR stage follows.

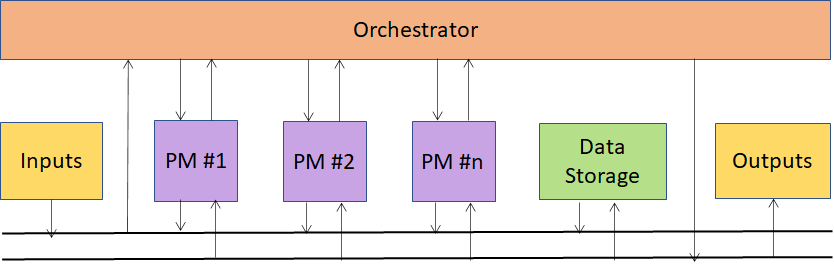

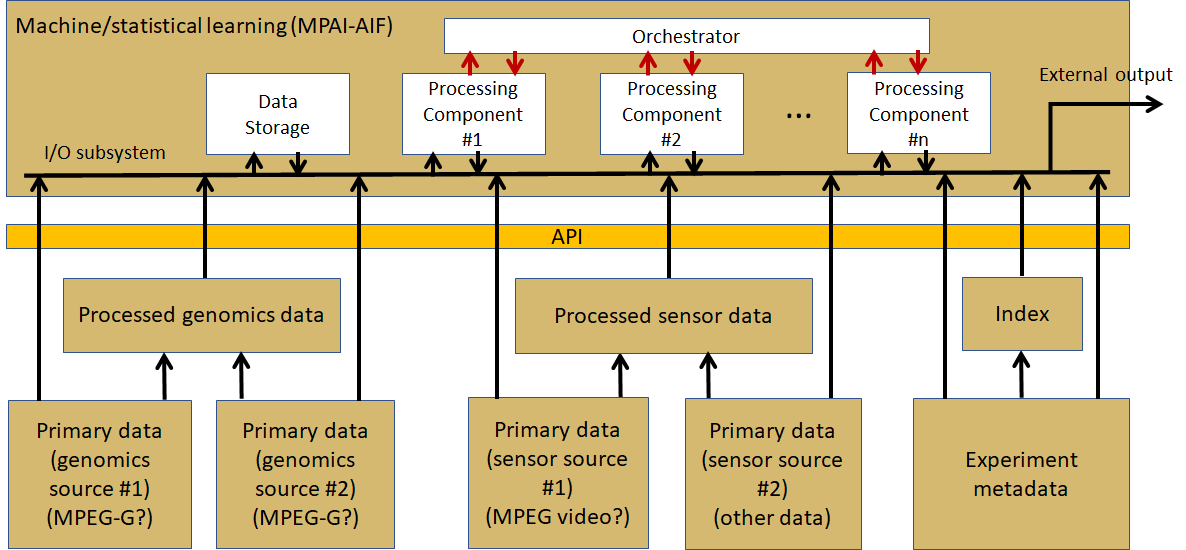

Artificial Intelligence Framework (MPAI-AIF) is an area providing a standard framework populated by AI-based or traditional Processing Modules. MPAI-AIF requirements are being developed to satisfy the requirements of the currently identified application-oriented standards, and possibly of others as the work progresses.

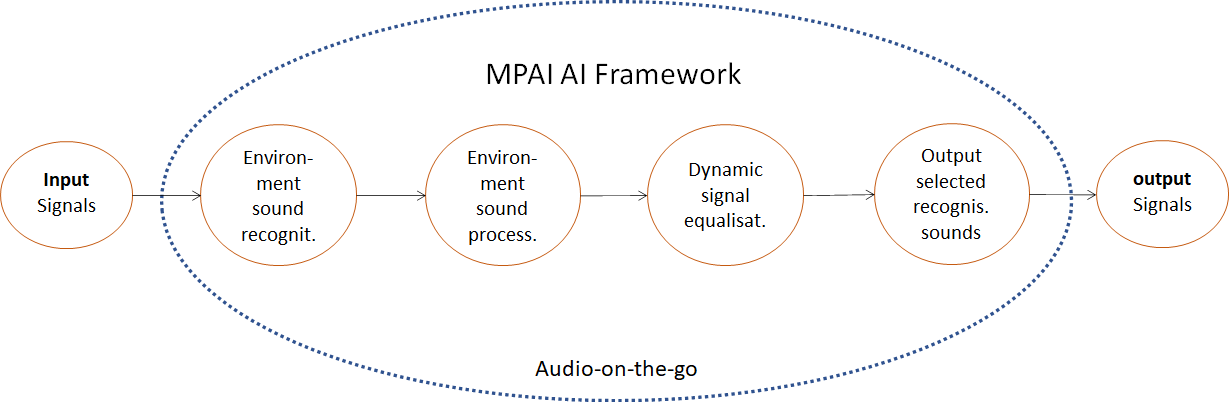

Context-based Audio Enhancement (MPAI-CAE) intends to use AI to improve the user experience for a variety of uses such as entertainment, communication, teleconferencing, gaming, post-production, restoration etc. in a variety of contexts such as in the home, in the car, on-the-go, in the studio etc.

Integrative Genomic/Sensor Analysis (MPAI-GSA) uses AI to understand and compress the results of high-throughput experiments combining genomic/proteomic and other data – for instance from video, motion, location, weather, medical sensors. Use cases range from personalised medicine to smart farming.

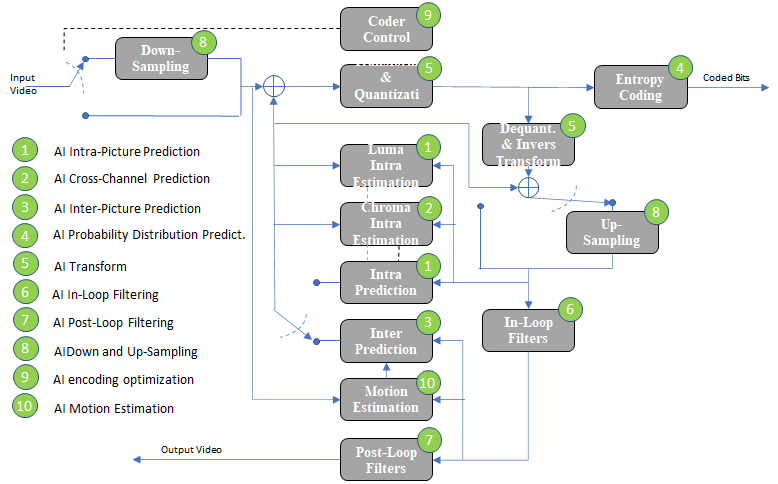

AI-Enhanced Video Coding (MPAI-EVC) is a video compression standard that substantially enhances the performance of a traditional video codec by improving or replacing traditional tools with AI-based tools, as described in the figure below, where green circles represent tools that can be replaced or enhanced with their AI-based equivalent.

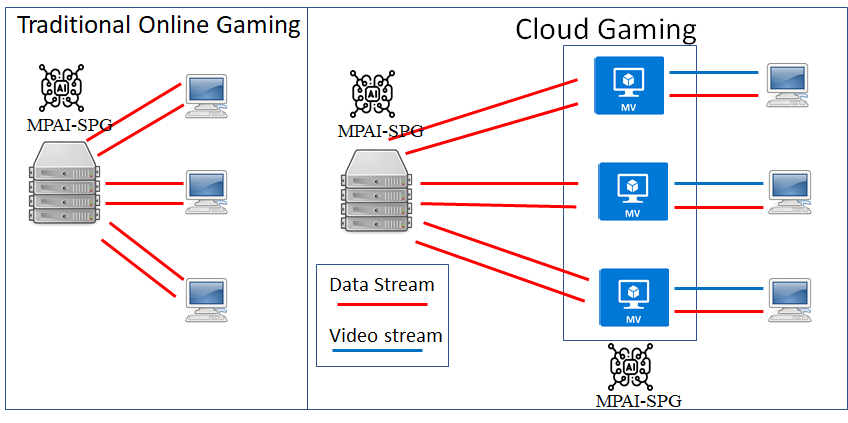

Server-based Predictive Multiplayer Gaming (MPAI-SPG) aims to minimise the audio-visual and gameplay discontinuities caused by high latency or packet losses during an online real-time game. In case information from a client is missing, the data collected from the clients involved in a particular game are fed to an AI-based system that predicts the moves of the client whose data are missing.

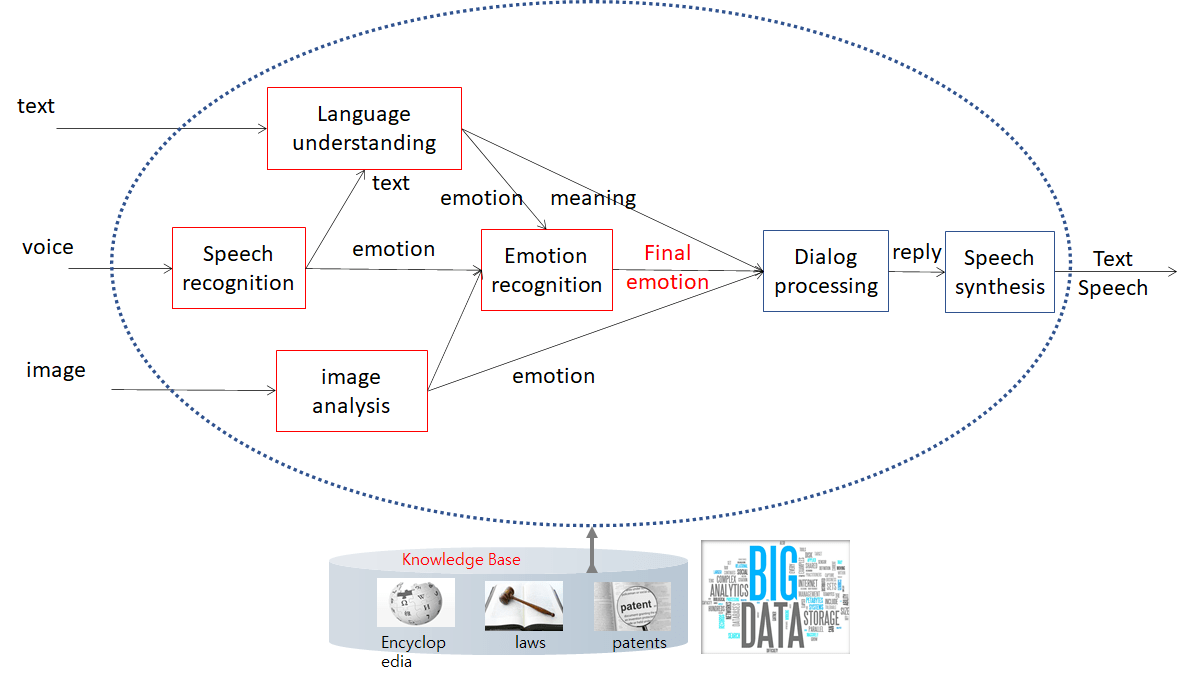

Multi-modal Conversation (MPAI-MMC) aims to enable human-machine conversation that emulates human-human conversation in completeness and intensity by using AI. The figure below depicts a specific configuration of Processing Modules focused on emotion detection.

The second half of the MPAI mission is to bridge the gap between standards and their practical use. The MPAI statutes offer the notion of Framework Licence (FWL), namely the business model adopted by SEP holders to monetise their IP in a standard without values: no $, %, dates etc.

Before starting the technical work, active members (i.e. those who intend to contribute technologies to the standard) adopt the FWL.

During the technical work:

- When they make a contribution, active members declare they will make available the terms of their SEP licences according to the FWL after the standard is approved

- All members declare they will enter into a licensing agreement for other Members’ SEPs, if used, within 1 year after publication of SEP holders’ licensing terms

Non-MPAI members shall enter into a licensing agreement with SEP holders to use an MPAI standard.

An example of a FWL is:

- Licence has worldwide coverage and includes right to make, use and sell

- Royalty paid for products includes right to use encoders/decoders for content

- Products are sold to end users by a licensee

- Vendors of products that contain an encoder/decoder may pay royalties on behalf of their customers

- Royalties of R$/unit apply from date if the licensee sells more than N units/year or the royalties paid are below a cap of C$

- The royalty program is divided in terms, the first of which ends on date

- The percent increase from one term to another is less than x%

The FWL shall not contain actual values for the placeholders (R$, N, C$, x% and the dates).

MPAI is working to create a new world for data compression standards.

Articles in the MPAI thread

- From media compression, to data compression, to AI-enabled data coding

- MPAI launches 6 standard projects

- What is MPAI going to do?

- A new organisation dedicated to data compression standards based on AI

- The MPAI machine has started

- A technology and business watershed

- The two main MPAI purposes

- Leaving FRAND for good

- Better information from data

- An analysis of the MPAI framework licence

- MPAI – do we need it?

- New standards making for a new age
