That video is a high-profile topic for people interested in MPEG is obvious (MP stands for Moving Pictures) and is shown by the most visited article in this blog, Forty years of video coding and counting. Audio is also a high-profile topic, which should not be a surprise given that the official MPEG title is “Coding of Moving Pictures and Audio”. This is confirmed by the fact that Thirty years of audio coding and counting has received almost as many visits as the video article.

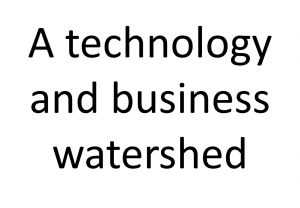

What is less known, but potentially very important, is the fact that MPEG has already developed a few standards for compression of a wide range of other data types. Point Cloud is the data type that is acquiring a higher profile by the day, but there are many more types, as represented by the table in Figure 1.

Figure 1 – Data types and relevant MPEG standards

Video

The articles Forty years of video coding and counting and More video with more features provide a detailed history of video compression in MPEG from two different perspectives. Here I will briefly list the video-coding related standards produced or being produced by MPEG mentioned in the table.

- MPEG-1 and MPEG-2 both produced widely used video coding standards.

- MPEG-4 has been much more prolific.

  - It started with Part 2 Visual.

  - It continued with Part 9 Reference Hardware Description, a standard that supports a reference hardware description of the standard expressed in VHDL (VHSIC Hardware Description Language), a hardware description language used in electronic design automation.

  - Part 10 is the still high-riding Advanced Video Coding standard.

  - Parts 29, 31 and 33 are the result of three attempts at developing Option 1 video compression standards (in a simple but imprecise way, standards that do not require payment of royalties).

- MPEG-5 is currently expected to be a standard with two parts:

  - Part 1 Essential Video Coding will have a base layer/profile which is expected to be Option 1 and a second layer/profile with a performance ~25% better than HEVC. Licensing terms are expected to be published by patent holders within 2 years.

  - Part 2 Low Complexity Enhancement Video Coding (LCEVC) will be a two-layer video coding standard. The lower layer is not tied to any specific technology and can be any video codec; the higher layer is used to extend the capability of an existing video codec.

- MPEG-7 is about Multimedia Content Description. There are different tools to describe visual information:

  - Part 3 Visual is a form of compression as it provides tools to describe Color, Texture, Shape, Motion, Localisation, Face Identity, Image signature and Video signature.

  - Part 13 Compact Descriptors for Visual Search can be used to compute compressed visual descriptors of an image. An application is to get further information about an image captured e.g. with a mobile phone.

  - Part 15 Compact Descriptors for Video Analysis makes it possible to manage and organise large-scale databases of video content, e.g. to find content containing a specific object instance or location.

- MPEG-C is a collection of video technology standards that do not fit with other standards. Part 4 Media Tool Library is a collection of video coding tools (called Functional Units) that can be assembled using the technology standardised in MPEG-B Part 4 Codec Configuration Representation.

- MPEG-H part 2 High Efficiency Video Coding is the latest MPEG video coding standard with an improved compression of 60% compared to AVC.

- MPEG-I is the new standard, mostly under development, for immersive technologies:

  - Part 3 Versatile Video Coding is the ongoing project to develop a video compression standard with an expected 50% more compression than HEVC.

  - Part 7 Immersive Media Metadata is the current project to develop a standard for compressed Omnidirectional Video that allows limited translational movements of the head.

  - Explorations in 6 Degrees of Freedom (6DoF) and Lightfield are ongoing.
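The two-layer idea described above for LCEVC can be illustrated with a toy example on a one-dimensional signal. This is only a sketch under my own assumptions, not the LCEVC algorithm: `base_codec` is a hypothetical stand-in (coarse quantisation) for "any video codec", the signal length is assumed to be even, and the enhancement layer is kept lossless here for simplicity, whereas a real enhancement layer would itself be compressed.

```python
def downsample(signal):
    """Halve the resolution by averaging neighbouring sample pairs."""
    return [(signal[i] + signal[i + 1]) / 2 for i in range(0, len(signal) - 1, 2)]


def upsample(signal):
    """Double the resolution by repeating each sample."""
    return [s for s in signal for _ in range(2)]


def base_codec(signal, step=8):
    """Hypothetical stand-in for an existing codec: coarse, lossy quantisation."""
    return [round(s / step) * step for s in signal]


def encode(signal):
    """Return the low-resolution base layer and the full-resolution residual."""
    base = base_codec(downsample(signal))
    prediction = upsample(base)
    residual = [s - p for s, p in zip(signal, prediction)]
    return base, residual


def decode(base, residual):
    """Upsample the base layer and add the enhancement residual."""
    return [p + r for p, r in zip(upsample(base), residual)]


original = [10, 12, 40, 44, 90, 94, 30, 26]
base, residual = encode(original)
reconstructed = decode(base, residual)
```

A decoder that only understands the base layer can still show `upsample(base)`; a decoder that also reads the residual recovers the finer detail.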

Audio

The article Thirty years of audio coding and counting provides a detailed history of audio compression in MPEG. Here I will briefly list the audio-coding related standards produced or being produced by MPEG mentioned in the table.

- MPEG-1 part 3 Audio produced, among others, the foundational digital audio standard better known as MP3.

- MPEG-2

  - Part 3 Audio extended the stereo user experience of MPEG-1 to Multichannel.

  - Part 7 Advanced Audio Coding is the foundational standard on which MPEG-4 AAC is based.

- MPEG-4 part 3 Advanced Audio Coding (AAC) is currently supported by some 10 billion devices and software applications, growing by half a billion units every year.

- MPEG-D is a collection of different audio technologies:

  - Part 1 MPEG Surround provides an efficient bridge between stereo and multi-channel presentations in low-bitrate applications as it can transmit 5.1 channel audio within the same 48 kbit/s transmission budget.

  - Part 2 Spatial Audio Object Coding (SAOC) allows very efficient coding of a multi-channel signal that is a mix of objects (e.g. individual musical instruments).

  - Part 3 Unified Speech and Audio Coding (USAC) combines the tools for speech coding and audio coding into one algorithm with a performance that is equal to or better than AAC at all bit rates. USAC can code multichannel audio signals, and can also optimally encode speech content.

  - Part 4 Dynamic Range Control is a post-processor for any type of MPEG audio coding technology. It can modify the dynamic range of the decoded signal as it is being played.
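To give a feel for what a dynamic range post-processor such as the one mentioned above does, here is a minimal sketch. It assumes that a gain value in dB is available to the player for each frame of decoded samples; the function name `apply_drc`, the frame size and the gain values are all hypothetical and are not taken from the standard.

```python
def apply_drc(samples, gains_db, frame_size):
    """Scale each frame of decoded samples by its gain, given in dB."""
    shaped = []
    for i, sample in enumerate(samples):
        gain = 10 ** (gains_db[i // frame_size] / 20)  # dB to linear amplitude
        shaped.append(sample * gain)
    return shaped


decoded = [0.1, -0.1, 0.9, -0.8]   # one quiet frame followed by one loud frame
gains_db = [6.0, -6.0]             # boost the quiet frame, attenuate the loud one
shaped = apply_drc(decoded, gains_db, frame_size=2)
```

Because the gains are applied after decoding, the same compressed audio can be played with a wide dynamic range in a quiet room and a narrower one in a noisy environment.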

2D/3D Meshes

Polygon meshes can be used to represent the approximate shape of a 2D image or a 3D object. 3D mesh models are used in various multimedia applications such as computer games, animation and simulation. MPEG-4 provides various compression technologies:

- Part 2 Visual provides a standard for 2D and 3D Mesh Compression (3DMC) of generic, but static, 3D objects represented by first-order (i.e., polygonal) approximations of their surfaces. 3DMC has the following characteristics:

  - Compression: Near-lossless to lossy compression of 3D models

  - Incremental rendering: No need to wait for the entire file to download to start rendering

  - Error resilience: 3DMC has a built-in error-resilience capability

  - Progressive transmission: Depending on the viewing distance, a reduced accuracy may be sufficient

- Part 16 Animation Framework eXtension (AFX) provides a set of compression tools for Shape, Appearance and Animation.

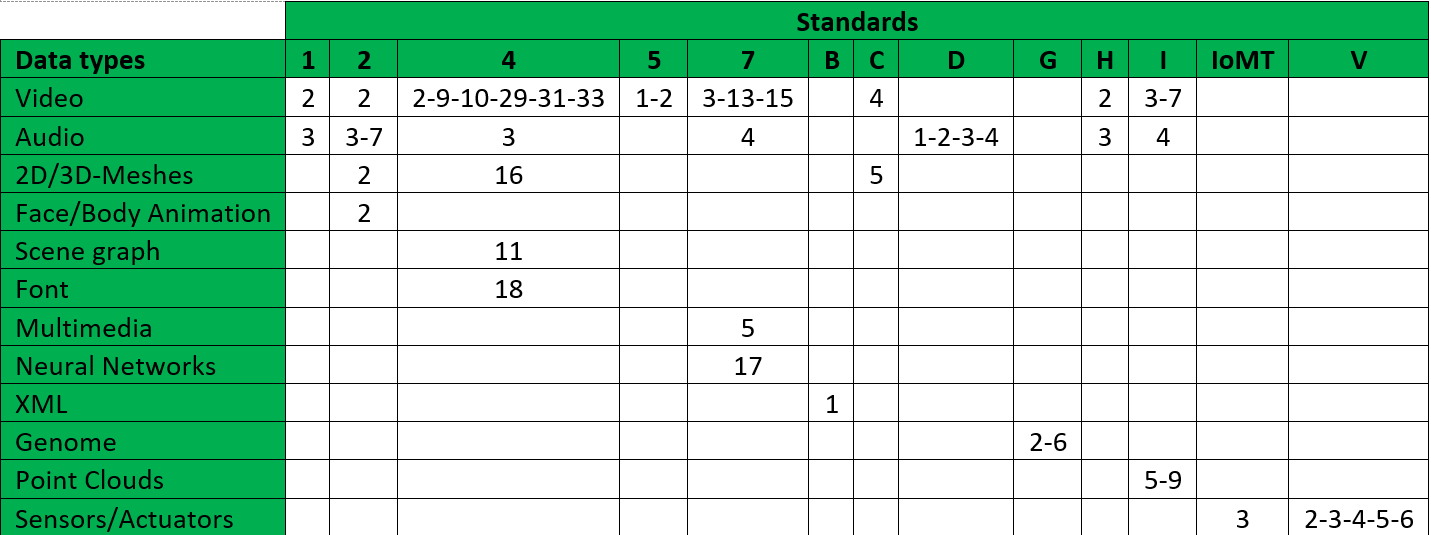

Face/Body Animation

Imagine you have a face model that you want to animate remotely. How do you represent the information that animates the model in a bit-thrifty way? MPEG-4 Part 2 Visual has an answer to this question with its Facial Animation Parameters (FAP). FAPs are defined at two levels.

- High level

  - Viseme (visual equivalent of phoneme)

  - Expression (joy, anger, fear, disgust, sadness, surprise)

- Low level: 66 FAPs associated with the displacement or rotation of the facial feature points.

In the figure, feature points affected by FAPs are indicated as black dots. Other feature points are indicated as small circles.

Figure 2 – Facial Animation Parameters

It is possible to animate a default face model in the receiver with a stream of FAPs or a custom face can be initialised by downloading Face Definition Parameters (FDP) with specific background images, facial textures and head geometry.

MPEG-4 Part 2 uses a similar approach for Body Animation.
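The reason why low-level FAPs are bit-thrifty can be seen in a small sketch: the receiver already holds the face model, so each frame only needs to carry a handful of displacements of feature points. The feature point names, coordinates, units and the `apply_faps` helper below are hypothetical illustrations, not the identifiers or units defined by the standard.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class FeaturePoint:
    x: float
    y: float
    z: float


def apply_faps(face, faps):
    """Return a new face with each (point, axis, amount) displacement applied."""
    moved = dict(face)
    for name, axis, amount in faps:
        point = moved[name]
        moved[name] = replace(point, **{axis: getattr(point, axis) + amount})
    return moved


# Neutral face model already present in the receiver (hypothetical values).
neutral = {
    "left_mouth_corner": FeaturePoint(-2.0, -3.0, 0.0),
    "right_mouth_corner": FeaturePoint(2.0, -3.0, 0.0),
    "chin": FeaturePoint(0.0, -5.0, 0.0),
}

# One animation frame: only two small displacements need to be transmitted.
smile = [("left_mouth_corner", "y", 0.5), ("right_mouth_corner", "y", 0.5)]
frame = apply_faps(neutral, smile)
```

Feature points that are not mentioned in a frame, such as `chin` here, simply keep their position.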

Scene Graphs

So far MPEG has never developed a Scene Description technology. In 1996, when the development of the MPEG-4 standard required it, it took the Virtual Reality Modelling Language (VRML) and extended it to support MPEG-specific functionalities. Of course compression could not be absent from the list. So the Binary Format for Scenes (BiFS), specified in MPEG-4 Part 11 Scene description and application engine was born to allow for efficient representation of dynamic and interactive presentations, comprising 2D & 3D graphics, images, text and audiovisual material. The representation of such a presentation includes the description of the spatial and temporal organisation of the different scene components as well as user-interaction and animations.

In MPEG-I, scene description is again playing an important role. However, this time MPEG does not even intend to pick a scene description technology. Instead, it will define interfaces to scene description parameters.

Font

Many thousands of fonts are available today for use as components of multimedia content. Such content often utilises custom-designed fonts that may not be available on a remote terminal. In order to ensure faithful appearance and layout of content, the font data have to be embedded with the text objects as part of the multimedia presentation.

MPEG-4 part 18 Font Compression and Streaming defines and provides two main technologies:

- OpenType and TrueType font formats

- Font data transport mechanism – the extensible font stream format, signaling and identification

Multimedia

Multimedia is a combination of multiple media in some form. Probably the closest multimedia “thing” in MPEG is the standard called Multimedia Application Formats (MPEG-A). However, MPEG-A is an integrated package of media for specific applications and does not define any specific media format. It only specifies how you can combine MPEG (and sometimes other) formats.

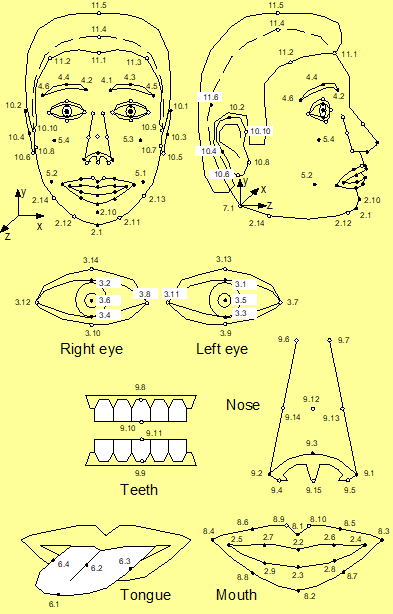

MPEG-7 part 5 Multimedia Description Schemes (MDS) specifies the different description tools that are not visual and audio, i.e. generic and multimedia. By comprising a large number of MPEG-7 description tools from the basic audio and visual structures MDS enables the creation of the structure of the description, the description of collections and user preferences, and the hooks for adding the audio and visual description tools. This is depicted in Figure 3.

Figure 3 – The different functional groups of MDS description tools

Neural Networks

Requirements for neural network compression have been presented in Moving intelligence around. After 18 months of intense preparation, with development of requirements, identification of test material, definition of test methodology and drafting of a Call for Proposals (CfP), MPEG analysed nine technologies submitted by industry leaders at the March 2019 (126th) meeting. The proposed technologies compress neural network parameters to reduce their size for transmission, while not reducing, or only moderately reducing, their performance in specific multimedia applications. The new standard will be MPEG-7 Part 17 Neural Network Compression for Multimedia Description and Analysis.
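As an illustration of how the size of neural network parameters can be reduced at the cost of a small, bounded loss of precision, here is a sketch of uniform scalar quantisation. This is a generic textbook technique chosen by me for illustration; it is not meant to represent any of the technologies submitted to MPEG, and the weight values are made up.

```python
def quantize(weights, bits=8):
    """Map each float weight to an integer code in the range 0 .. 2**bits - 1."""
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    scale = (hi - lo) / levels or 1.0   # avoid a zero step if all weights are equal
    codes = [round((w - lo) / scale) for w in weights]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Approximate the original weights from the integer codes."""
    return [lo + c * scale for c in codes]


weights = [-0.51, 0.02, 0.37, 1.20, -0.08]   # made-up parameters
codes, lo, scale = quantize(weights)
restored = dequantize(codes, lo, scale)
```

Each weight now needs 8 bits instead of the 32 bits of a single-precision float, and the error on each restored weight is at most half a quantisation step.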

XML

MPEG-B part 1 Binary MPEG Format for XML (BiM) is the current endpoint of an activity that started some 20 years ago when MPEG-7 Descriptors defined by XML schemas were compressed in a standard fashion by MPEG-7 Part 1 Systems. Subsequently MPEG-21 needed XML compression and the technology was extended in Part 15 Binary Format.

In order to reach high compression efficiency, BiM relies on schema knowledge shared between encoder and decoder. It also provides fragmentation mechanisms for transmission and processing flexibility, and defines means to compile and transmit schema knowledge information, so that XML documents can be decompressed without a priori schema knowledge at the receiving end.
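Why shared schema knowledge helps can be shown with a toy binary encoding of XML in which element names are replaced by their index in a list known to both encoder and decoder. This is a sketch of the principle only and not the BiM format: the `SCHEMA` list, the one-byte fields and the limits they imply (fewer than 256 element names, children and text bytes; no attributes) are my own simplifying assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical schema knowledge shared by encoder and decoder.
SCHEMA = ["Description", "Title", "Creator"]


def encode(elem, out=None):
    """Serialise an element as: tag code, text length, text, child count, children."""
    out = bytearray() if out is None else out
    out.append(SCHEMA.index(elem.tag))            # element name becomes one byte
    text = (elem.text or "").strip().encode("utf-8")
    out.append(len(text))
    out += text
    out.append(len(elem))                         # number of child elements
    for child in elem:
        encode(child, out)
    return bytes(out)


def decode(data, pos=0):
    """Rebuild an element from the bytes at pos; return it with the next position."""
    elem = ET.Element(SCHEMA[data[pos]])
    length = data[pos + 1]
    elem.text = data[pos + 2:pos + 2 + length].decode("utf-8")
    pos += 2 + length
    count = data[pos]
    pos += 1
    for _ in range(count):
        child, pos = decode(data, pos)
        elem.append(child)
    return elem, pos


xml_text = "<Description><Title>MPEG</Title><Creator>Leonardo</Creator></Description>"
root = ET.fromstring(xml_text)
packed = encode(root)
restored, _ = decode(packed)
```

The element names never travel in `packed`, which is why a decoder without the schema cannot interpret it unless the schema knowledge is transmitted as well.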

Genome

The article Genome is digital, and can be compressed presents the technology used in MPEG-G Genomic Information Representation. Many established compression technologies developed for compression of other MPEG media have found good use in genome compression. MPEG is currently busy developing the MPEG-G reference software and is investigating other genomic areas where compression is needed. More concretely, MPEG plans to issue a Call for Proposals for Compression of Genome Annotation at its July 2019 (128th) meeting.

Point Clouds

3D point clouds can be captured with multiple cameras and depth sensors. The number of points can range from a few thousand up to a few billion, and points can carry attributes such as colour, material properties etc.

MPEG is developing two different standards, and the choice between them depends on whether the point cloud is dense (MPEG-I Part 5 Video-based Point Cloud Compression) or less so (MPEG-I Part 9 Geometry-based Point Cloud Compression). The algorithms in both standards are lossy, scalable, progressive and support random access to subsets of the point cloud.

MPEG plans to release Video-based PCC as FDIS in October 2019 and Geometry-based PCC as FDIS in April 2020.

Sensors/Actuators

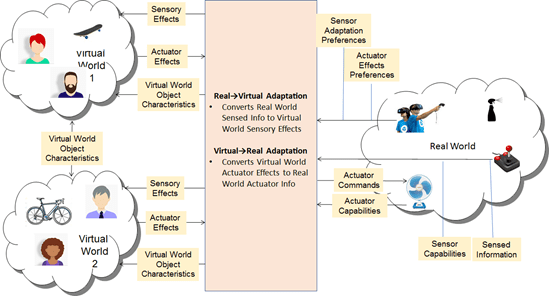

MPEG felt the need to address compression of data from sensors and data to actuators when it considered the exchange of information taking place between the physical world where the user is located and any sort of virtual world generated by MPEG media.

So MPEG undertook the task of providing standard interactivity technologies that allow a user to

- Map their real-world sensor and actuator context to a virtual-world sensor and actuator context, and vice-versa, and

- Achieve communication between virtual worlds.

Figure 4 describes the context of the MPEG-V Media context and control standard.

Figure 4 – Communication between real and virtual worlds

The MPEG-V standard defines several data types and their compression:

- Part 2 – Control information specifies control devices interoperability (actuators and sensors) in real and virtual worlds

- Part 3 – Sensory information specifies the XML Schema-based Sensory Effect Description Language to describe actuator commands such as light, wind, fog, vibration, etc. that trigger human senses

- Part 4 – Virtual world object characteristics defines a base type of attributes and characteristics of the virtual world objects shared by avatars and generic virtual objects

- Part 5 – Data formats for interaction devices specifies syntax and semantics of data formats for interaction devices – Actuator Commands and Sensed Information – required to achieve interoperability in controlling interaction devices (actuators) and in sensing information from interaction devices (sensors) in real and virtual worlds

- Part 6 – Common types and tools specifies syntax and semantics of data types and tools used across MPEG-V parts.

MPEG-IoMT Internet of Media Things is the mapping, developed by MPEG, of the general IoT context to MPEG media. MPEG-IoMT Part 3 IoMT Media Data Formats and API also addresses the compression of data from media-based sensors and to media-based actuators.

What is next in data compression?

In Compression standards for the data industries I reported the proposal made by the Italian ISO member body to establish a Technical Committee on Data Compression Technologies. The proposal was rejected on the grounds that Data Compression is part of Information Technology.

It was a big mistake because it has stopped the coordinated development of standards that would have fostered the move of different industries to the digital world. The article identified a few such industries: Automotive, Industry Automation, Geographic information and more.

MPEG has done some exploratory work and found that quite a few of its existing standards could be extended to serve new application areas. One example is the conversion of MPEG-21 Contracts to Smart Contracts. An area of potential interest is data generated by machine tools in industry automation.

Conclusions

MPEG audio and video compression standards are the staples of the media industry. MPEG continues to develop those standards while investigating compression of other data types in order to be ready with standards when the market matures. Point clouds and DNA reads from high-speed sequencing machines are just two examples of how, by anticipating market needs, MPEG prepares to serve the industry with its compression standards in a timely manner.

Posts in this thread

- Data compression in MPEG

- More video with more features

- Matching technology supply with demand

- What would MPEG be without Systems?

- MPEG: what it did, is doing, will do

- The MPEG drive to immersive visual experiences

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age

- On my Charles F. Jenkins Lifetime Achievement Award

- Standards for the present and the future