Introduction

MPEG has developed standards in many areas. One of the latest is the compression of DNA reads from high-speed sequencing machines, and MPEG is now working on Compression of Neural Networks for Multimedia Content Description and Analysis.

How could a group that was originally tasked with developing standards for video coding for interactive applications on digital storage media (CD-ROM) get to this point?

This article posits that the answer lies in the same driving force that pushed the original settlers on the East Coast of the North American continent to reach the West Coast. It also posits that, unlike the ocean beyond the West Coast, which put an end to the frontier and forced John F. Kennedy to propose the New Frontier, MPEG has an endless series of frontiers in sight. Unless, that is, some mindless industry elements declare that there is no longer a frontier to overcome.

The MPEG “frontiers”

The ideal that has driven MPEG experts over the last 31 years finds its match in the ideal that defined the American frontier. Just as "the frontier", according to Frederick Jackson Turner, was the defining process of American civilisation, so the development of a series of 180 standards for Coding of Moving Pictures and Audio, which extended the capability of compression to deliver better and new services, has been the defining process of MPEG, the Moving Picture Experts Group.

The only difference is that the MPEG frontier is a collection of frontiers held together by the title "Coding of Moving Pictures and Audio". It is difficult to impose a rational order on a field undergoing such tumultuous development, but I count 10 frontiers:

1. Making rewarding visual experiences possible by reducing the number of bits required to digitally represent video while keeping the same visual quality and adding more features (see Forty years of video coding and counting and More video with more features)

2. Making rewarding audio experiences possible by reducing the number of bits required to digitally represent audio while enhancing the user experience (see Thirty years of audio coding and counting)

3. Making rewarding user experiences possible by reducing the number of bits required by other, non-audio-visual data such as computer-generated or sensor data

4. Adding infrastructure components to 1, 2 and 3 so as to provide a viable user experience

5. Making rewarding spatially remote or time-shifted user experiences possible by developing technologies that enable the transport of the compressed data of 1, 2 and 3 (see What would MPEG be without Systems?)

6. Making possible user experiences that combine different media

7. Giving users the means to search for the experiences of 1, 2, 3 and 4 that interest them

8. Enabling users to interact with the experiences made possible by 1, 2, 3 and 4

9. Making possible electronic commerce of the user experiences made possible by 1, 2, 3, 4, 5 and 6

10. Defining interfaces to facilitate the development of services.

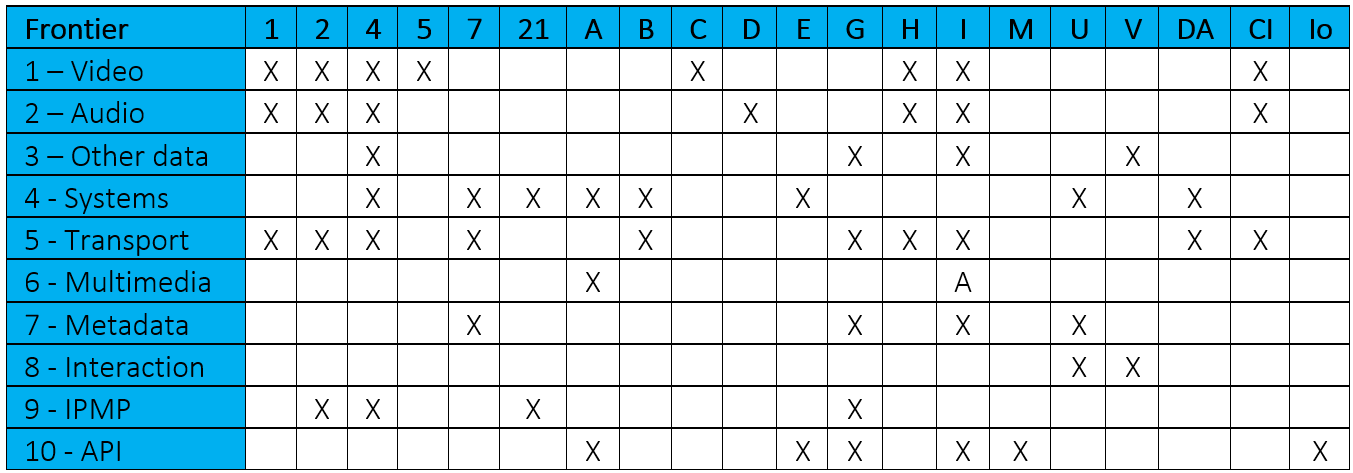

The table below provides a mapping between MPEG frontiers and MPEG standards, both completed and under development.

Legend: DA=MPEG DASH, CI=CICP, Io=IoMT

No end to the MPEG frontier

Thirty-one years and 180 standards later, MPEG has not yet accomplished its mandate. That is not because it has not tried hard enough, but because an unceasing stream of new technologies keeps providing new opportunities to accomplish its mandate better, with improved user satisfaction.

While developing its standards, MPEG has substantially clarified the content of its mandate, still within the title of “Coding of Moving Pictures and Audio”. The following topics highlight what will be the main directions of work in the next few (I must say, quite a few) years to come.

More of the same

The quest for new solutions that do better than, or simply differently from, what has been done in the past 31 years will continue unabated. The technologies that achieve the stated goals will change and new ones will be added. However, old needs are not going to disappear just because solutions exist today.

Immersive media

This is the area that many expect will host the bulk of the MPEG standards due to appear in the next few years. Point Cloud Compression is one of the first standards that will provide immediately usable 3D objects, both static and dynamic, for a variety of applications. But other, more traditional, video-based approaches are also being investigated. Immersive Audio will also be needed to provide complete user experiences. The Omnidirectional MediA Format (OMAF) will probably be, at least for some time, the platform where the different technologies are integrated. Other capture and presentation technologies may require new approaches at later stages.

Media for all types of users

MPEG has almost completed the development of the Internet of Media Things (IoMT) standard (in October 2019 part 1 will become FDIS, while parts 2 and 3 already reached FDIS in March 2019). IoMT is still an Internet of Things, but Media Things are much more demanding because the amount of information transmitted and processed is typically huge (Mbit/s if not Gbit/s) and at odds with the paradigm of distributed Things that are expected to stay unattended, possibly for years. In the IoMT paradigm, information is typically processed by machines. Sometimes, however, human users are also involved. Can we develop standards that provide satisfactory (compressed) information to human and machine users alike?

Digital data are natively unsuitable for processing

The case of Audio and Video compression has always been clear. In 1992/1994 industry could only thank MPEG for providing standards that made it economically feasible to deliver audio-visual services to millions (at that time), and billions (today), of users. Awareness of other data types took time to percolate, but industry now realises that point clouds are an excellent vehicle for delivering content for entertainment and 3D environments for automotive; that DNA reads from high-speed sequencing machines can be compressed and made easier to process; and that large neural networks can be compressed for delivery to millions of devices. There is an endless list of use cases, all subject to the same paradigm: huge amounts of data that can hardly be distributed as they are, but that can be compressed with or without loss of information, depending on the application.
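The distinction between compressing "with or without loss of information" can be illustrated with a small sketch. The code below is not an MPEG technology: it assumes a hypothetical 10-bit sensor trace (a slow sine wave plus a little noise), uses delta coding followed by `zlib` as a stand-in lossless coder, and uses simple bit-dropping quantisation as a stand-in lossy step. The names `synth_signal`, `lossless`, `lossy` and the parameter `drop_bits` are all illustrative.

```python
import math
import random
import struct
import zlib

def synth_signal(n, bits=10, seed=0):
    """Hypothetical sensor trace: slow sine plus small noise, quantised to `bits` bits."""
    rng = random.Random(seed)
    top = (1 << bits) - 1
    out = []
    for i in range(n):
        x = 0.5 + 0.4 * math.sin(2 * math.pi * i / 500) + rng.gauss(0, 0.005)
        out.append(min(top, max(0, round(x * top))))
    return out

def pack(samples):
    # Store each value as a signed 16-bit little-endian integer.
    return struct.pack(f"<{len(samples)}h", *samples)

def unpack(data):
    return list(struct.unpack(f"<{len(data) // 2}h", data))

def lossless(samples):
    # Delta coding: keep the first sample, then successive differences,
    # which are small for slowly varying signals and compress well.
    deltas = [samples[0]] + [b - a for a, b in zip(samples, samples[1:])]
    return zlib.compress(pack(deltas), 9)

def lossless_decode(blob):
    # Undo delta coding with a running sum.
    out, acc = [], 0
    for d in unpack(zlib.decompress(blob)):
        acc += d
        out.append(acc)
    return out

def lossy(samples, drop_bits=3):
    # Quantise by discarding low-order bits, then reuse the lossless coder.
    return lossless([s >> drop_bits for s in samples]), drop_bits

def lossy_decode(blob, drop_bits):
    # Reconstruct each value at the centre of its quantisation bin.
    half = (1 << drop_bits) >> 1
    return [(q << drop_bits) + half for q in lossless_decode(blob)]

sig = synth_signal(20000)
raw = pack(sig)
ll = lossless(sig)
lb, k = lossy(sig)
rec = lossy_decode(lb, k)
print("raw bytes:", len(raw), "lossless:", len(ll), "lossy:", len(lb))
print("lossless exact:", lossless_decode(ll) == sig)
print("lossy max error:", max(abs(a - b) for a, b in zip(sig, rec)))
```

The lossless path reproduces every sample exactly; the lossy path trades a bounded error (at most half a quantisation step, here 4 counts out of 1023) for a smaller payload. Which trade-off is acceptable is exactly the "depending on the application" choice mentioned above.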

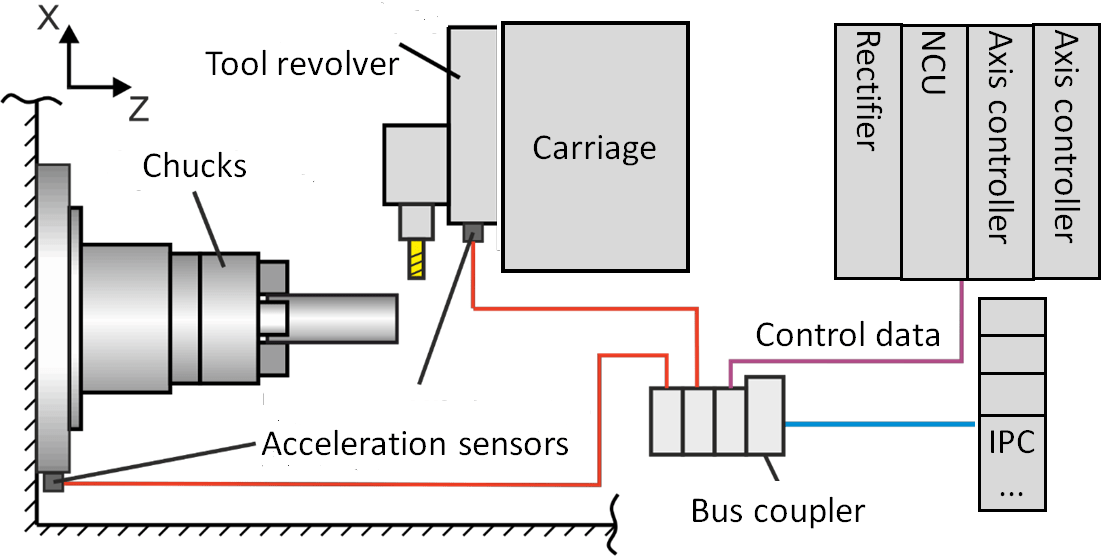

MPEG is now exploring the use case of machine tools, where signals are sampled at a rate above 40 kHz with 10-bit accuracy. Under these conditions a machine tool generates 1 TByte/year. The stored data are valuable resources for machine manufacturers and operators because they can be used to optimise machine operation, to schedule on-demand maintenance and to optimise the factory.
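The order of magnitude of the 1 TByte/year figure can be checked with back-of-the-envelope arithmetic. The sketch below assumes bit-packed samples with no container overhead; the number of channels and the duty cycle are hypothetical parameters, not values given in the text.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s

def raw_bytes_per_year(sample_rate_hz, bits_per_sample, channels=1, duty_cycle=1.0):
    """Uncompressed data volume per year, assuming bit-packed samples and
    the machine running for the given fraction of the year (duty_cycle)."""
    bits = sample_rate_hz * bits_per_sample * channels * SECONDS_PER_YEAR * duty_cycle
    return bits / 8

# One channel at 40 kHz and 10 bits/sample, running around the clock.
volume = raw_bytes_per_year(40_000, 10)
print(f"{volume / 1e12:.2f} TB")  # prints "1.58 TB"
```

A single channel running continuously already yields about 1.6 TB per year, the same order of magnitude as the figure quoted above; more channels multiply the volume, while a lower duty cycle reduces it.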

The watchword here is Industry 4.0. To limit the amount of data stored on the factory floor, a standard for the compression of machine tool data would be invaluable.

If you are interested in this promising new area, please subscribe at https://lists.aau.at/mailman/listinfo/mpeg-mc.at and join the email reflector mpeg-mc@lists.aau.at.

Is MPEG going to change?

MPEG is going to change but, actually, nothing needs to change because the notions outlined above are already part of MPEG’s cultural heritage. In the future we will probably make use of neural networks for different purposes. This, however, is already a reality today because the Compact Descriptors for Video Analysis (CDVA) standard uses neural networks and many proposals to use neural networks in the Versatile Video Coding (VVC) standard have already been made. We will certainly need neural network compression but we are already working on it in MPEG-7 part 17 Neural Networks for Multimedia Content Description and Analysis.

MPEG has been working on MPEG-I Coded representation of immersive media for some time. A standard has already been produced (OMAF), two others (V-PCC and NBMP) have reached DIS level and two others have reached CD level (G-PCC and VVC). Many parallel activities are under way at different stages of maturity.

I have already mentioned that MPEG has produced standards in the IoMT space, but the 25-year-old MPEG-7 notion of describing, i.e. coding, content in compressed form is just a precursor of the current exploratory work on Video Coding for Machines (reflector: mpeg-vcm@lists.aau.at, subscription: https://lists.aau.at/mailman/listinfo/mpeg-vcm).

In the Italian novel The Leopard, better known through its 1963 film version (directed by Luchino Visconti, starring Burt Lancaster, Claudia Cardinale and Alain Delon), the protagonist's nephew says: "Se vogliamo che tutto rimanga come è, bisogna che tutto cambi" (if we want everything to stay as it is, everything needs to change).

The MPEG version of this cryptic sentence is "if we want everything to change, everything needs to stay the same".

Guidance for the future

MPEG is driven by a 31-year-long ideal, pursued by following guidelines that are worth revisiting here as we are about to enter a new phase:

- MPEG standards are designed to serve multiple industries. MPEG does not have, and does not want to have, a "reference industry". MPEG works with the same dedication for all industries, trying to extract the requirements of each without favouring any.

- MPEG standards are provided to the market, not the other way around. At a time when de facto standards are popping up in the market, it is proper to reassert the policy that international standards should be developed by experts in a committee.

- MPEG standards anticipate the future. MPEG standards cannot trail technology development. If they did, MPEG would be forced to adopt solutions that a particular company in a particular industry has already developed.

- MPEG standards are the result of competition followed by collaboration. Competition is at the root of progress. MPEG should continue publishing its work plan so that companies can develop their solutions. MPEG will assess the proposals, select and integrate the best technologies and develop its standards in a collaborative fashion.

- MPEG standards thrive on industry research. MPEG is not in the research business, but MPEG would go nowhere if it were not constantly fed with research, both in responses to Calls for Proposals and in the execution of Core Experiments.

- MPEG standards are enablers, not disablers. As MPEG standards are not "owned" by a specific industry, MPEG will continue assessing and accommodating all legitimate functional requirements, from whichever source they come.

- MPEG standards need a business model. MPEG standards have been successful also because those who contributed good technologies to MPEG standards have been handsomely remunerated and could invest in new technologies. This business model will not be sufficient to sustain MPEG in its new endeavours.

Conclusions

Leonardo da Vinci, an accomplished performer in all arts and probably the greatest inventor of all time, lived in the unique age of history called the Renaissance, when European literati became aware that knowledge was boundless and that they had the capability to know everything. The ancient dictum "Homo sum, humani nihil a me alienum puto" (I am human, and I consider nothing human alien to me), coined by the Roman playwright Terence, well represents the new consciousness of the intellectual power of humans in the Renaissance age.

MPEG does not have the power to know everything – but it knows quite a few useful things for its mission. MPEG does not have the power to do everything – but it knows how to make the best standards in the area of Coding of Moving Pictures and Audio (and in a few nearby areas as well).

It would indeed be a great disservice if MPEG could not continue serving industry and humankind in the challenges to come as it has successfully done in the challenges of the last 31 years.

Posts in this thread

- The MPEG frontier

- Tranquil 7+ days of hard work

- Hamlet in Gothenburg: one or two ad hoc groups?

- The Mule, Foundation and MPEG

- Can we improve MPEG standards’ success rate?

- Which future for MPEG?

- Why MPEG is part of ISO/IEC

- The discontinuity of digital technologies

- The impact of MPEG standards

- Still more to say about MPEG standards

- The MPEG work plan (March 2019)

- MPEG and ISO

- Data compression in MPEG

- More video with more features

- Matching technology supply with demand

- What would MPEG be without Systems?

- MPEG: what it did, is doing, will do

- The MPEG drive to immersive visual experiences

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age