Introduction

A group of people caring about the future of MPEG has established MPEG Future. According to the MPEG Future Manifesto, the group plans to:

- Support and expand the academic and research community which provides the lifeblood of MPEG standards;

- Enhance the value of the intellectual property that makes MPEG standards unique while facilitating their use;

- Identify and promote the development of new compression-related standards benefitting from the MPEG approach to standardisation;

- Further improve the connection between industry and users, and the MPEG standards group;

- Preserve and enhance the organisation of MPEG, the standards group that can achieve the next goals because it brought the industry to where it is today.

In this article I would like to contribute to item 4 “Further improve the connection between industry and users, and the MPEG standards group”. I will examine what MPEG currently does or plans to do in the area of Video and Point Cloud compression and will solicit feedback and comments from the MPEG Future community.

Video and Point Cloud compression in MPEG

Video and Point Cloud compression are two of the most important MPEG work areas. In MPEG, Video and Point Cloud compression is quite articulated because there are 6 ongoing projects. In temporal order of standard approval they are:

- Video-based Point Cloud Compression (V-PCC), part 5 of MPEG-I. This standard, to be approved by MPEG in April 2020, will provide a standard means to compress 3D objects whose points on the surface are “densely” captured with (x,y,z) coordinates, colour and depth (and potentially other attributes as well). V-PCC is the natural response to market needs for a standard that compresses point clouds without requiring the deployment of new hardware. Here you can see an example of a dynamic point cloud suitable for V-PCC compression.

- Essential Video Coding (EVC), part 1 of MPEG-5. This standard, to be approved by MPEG in April 2020, will provide better compression efficiency than HEVC, though lower than VVC’s, with the promise of a simplified IP landscape. EVC is thus designed to provide a solution to those who need compression but are not ready for, or capable of, accessing the powerful but sophisticated VVC technology.

- Versatile Video Coding (VVC), part 3 of MPEG-I. This standard, to be approved by MPEG in July 2020, is expected to provide the usual improvement of an MPEG video coding standard over the previous generation’s. VVC natively supports 3 degrees of freedom (3DoF) viewing (as HEVC already did), especially in conjunction with the Omnidirectional Media Format (OMAF), part 2 of MPEG-I. VVC is the latest entry in the line of MPEG video compression standards and crowns 30 years of efforts by reaching a compression ratio of 1,000 over uncompressed video.

- Low Complexity Enhancement Video Coding (LCEVC), part 2 of MPEG-5. This standard, to be approved by MPEG in July 2020, will specify an enhancement stream, suitable for implementation in software, that enables an existing device to offer higher resolution at competitive quality and with sustainable power consumption.

- Geometry-based Point Cloud Compression (G-PCC), part 9 of MPEG-I. This standard, to be approved by MPEG in October 2020, will also provide a standard means to compress 3D objects whose points on the surface are “sparsely” captured with (x,y,z) coordinates, colour and depth (and potentially other attributes as well). G-PCC is designed to compress point clouds that cannot be compressed satisfactorily with V-PCC. Here you can see an example of a dynamic point cloud suitable for G-PCC compression.

- MPEG Immersive Video Coding (MIV), part 12 of MPEG-I. This standard, to be approved by MPEG in January 2021, will provide an immersive video experience to users navigating the scene with limited displacements (so-called 3DoF+). The encoder sends a reduced number of views, compressed with standard video coding technologies, and the decoder supplements them by synthesising the specific view requested by the user.
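To put the 1,000:1 compression ratio quoted for VVC in perspective, the short sketch below computes the raw bitrate of a video signal and what a 1,000:1 ratio would leave. The signal parameters (1920×1080, 60 frames/s, 8-bit, 4:2:0 chroma subsampling) and the helper names `uncompressed_bitrate` and `compressed_bitrate` are illustrative assumptions, not taken from the article, and the result is back-of-the-envelope arithmetic, not a measurement of VVC performance.

```python
def uncompressed_bitrate(width, height, fps, bit_depth=8, chroma="4:2:0"):
    """Raw bitrate in bit/s of a video signal (hypothetical helper)."""
    # Average samples per pixel: 4:2:0 carries 1 luma sample plus two chroma
    # planes at a quarter of the luma resolution each, i.e. 1 + 2 * 0.25 = 1.5
    samples_per_pixel = {"4:2:0": 1.5, "4:2:2": 2.0, "4:4:4": 3.0}[chroma]
    return width * height * samples_per_pixel * bit_depth * fps


def compressed_bitrate(raw_bps, ratio):
    """Bitrate left after applying a given compression ratio (hypothetical helper)."""
    return raw_bps / ratio


# Assumed example signal: 1080p, 60 frames/s, 8-bit, 4:2:0
raw = uncompressed_bitrate(1920, 1080, 60)
print(f"uncompressed: {raw / 1e9:.2f} Gbit/s")
print(f"at 1,000:1:   {compressed_bitrate(raw, 1000) / 1e6:.2f} Mbit/s")
```

Under these assumptions, roughly 1.5 Gbit/s of raw video becomes roughly 1.5 Mbit/s. Actual ratios depend heavily on content, resolution and target quality.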

Why is MPEG engaged in 6 visual coding projects?

In 30 years, the audio-visual market has evolved a lot and the mantra of “one single video compression (albeit with profiles and levels) fits all needs” no longer holds true. Users have different and often incompatible requirements:

- High quality is desirable, and you get more quality by increasing bitrate or the amount of technology. Bitrate has a cost but technology, too, has a cost. Therefore, users should be able to achieve the quality they need with different combinations of bitrate and technology;

- 2D video has always been the bread and butter of visual applications, but 3D is an attractive mermaid that continuously morphs depending on the capturing technology that becomes available;

- Silicon has always been considered a must for constrained devices. This continues to be true, but software implementing clever technology can now extend the basic silicon capabilities without changing the device (e.g. V-PCC and LCEVC).

More to come

Within 12 months the 6 MPEG projects described above will all gradually reach FDIS (Final Draft International Standard) level and industry will take over. But these are not the only projects MPEG has in store: its exploration pipeline holds 4 more activities that may become projects.

More Point Cloud Compression

The release of V-PCC and G-PCC does not mean that point cloud compression activities will stop. MPEG must listen to what industry and users say about the first release of these standards and add what is required to achieve more satisfactory user experiences.

As MPEG is still on the learning curve in Point Cloud Compression, expect more breakthroughs.

Six Degrees of Freedom (6DoF)

With this standard MPEG expects to be able to provide “unrestricted” immersive navigation where users can not only yaw, pitch and roll their heads (as in 3DoF) but move in the 3D space as well. The project is connected with the current 3DoF+ project in ways that are still to be fully determined.

Light fields

MPEG has been exploring the use of light field cameras to generate light field data. Technology, however, is evolving fast from plenoptic-1 cameras (microlens array placed at the image plane of the main lens) to plenoptic-2 cameras (microlens array placed behind the image plane of the main lens to re-image the captured scene). Read here about the challenges light field compression has to overcome for the technology to be deployable to millions of users.

Video coding for machines (VCM)

This exploration is driven by the increasing number of applications that capture video for (remote) consumption by a machine, such as connected vehicles, video surveillance systems, and video capture for smart cities. The fact that the user is a remote machine does not change the fact that transmission bandwidth is often at a premium and compression is required. However, unlike traditional video coding, where users expect high visual fidelity, in video coding for machines the “users” expect high fidelity of the parameters that the machines will process. The bold idea of VCM is to have a unified video coding system for machines, where one type of machine is human. Technology is expected to be uniformly based on neural networks.

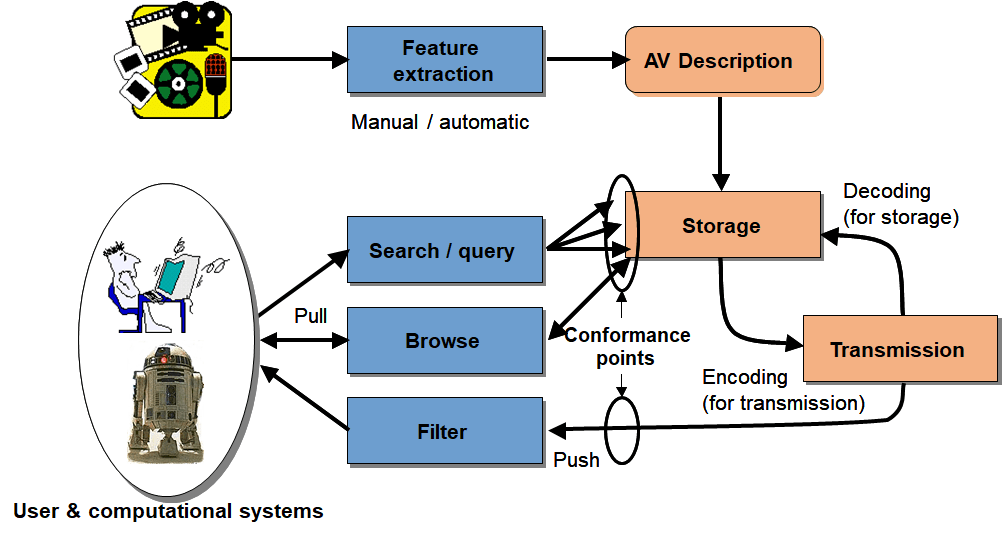

As noted here, however, VCM is not an entirely new field for MPEG. Twenty years ago, MPEG was working on MPEG-7 (multimedia description) and a figure of that time (below) clearly depicts what MPEG had in mind: MPEG-7 was primarily targeted at searching and browsing applications, but the users were both humans and machines.

The same figure, with minor modifications, can be used for VCM. Feature extraction is probably going to be automatic (but manual feature extraction is not excluded because this is an encoder matter). Storage is probably not needed but cannot be ruled out. Search/query and browse are not specifically the target of VCM but cannot be excluded either.

The MPEG Future community should react

MPEG Future participants have a role to play in providing feedback on the future of video and point cloud coding. This feedback can go in 3 different directions:

- Feedback on how well the 6 standards that MPEG will release in the next 12 months suit market needs;

- Comments on the current explorations that will hopefully be converted to MPEG standards. Comments can address 1) the identified applications, 2) their requirements and 3) the technologies most suitable to support them;

- Proposals of new applications.

This will not be the end of the involvement of the MPEG Future community. However, it will be a big step forward.

Posts in this thread

- An action plan for the MPEG Future community

- Which company would dare to do it?

- The birth of an MPEG standard idea

- More MPEG Strengths, Weaknesses, Opportunities and Threats

- The MPEG Future Manifesto

- What is MPEG doing these days?

- MPEG is a big thing. Can it be bigger?

- MPEG: vision, execution, results and a conclusion

- Who “decides” in MPEG?

- What is the difference between an image and a video frame?

- MPEG and JPEG are grown up

- Standards and collaboration

- The talents, MPEG and the master

- Standards and business models

- On the convergence of Video and 3D Graphics

- Developing standards while preparing the future

- No one is perfect, but some are more accomplished than others

- Einige Gespenster gehen um in der Welt – die Gespenster der Zauberlehrlinge

- Does success breed success?

- Dot the i’s and cross the t’s

- The MPEG frontier

- Tranquil 7+ days of hard work

- Hamlet in Gothenburg: one or two ad hoc groups?

- The Mule, Foundation and MPEG

- Can we improve MPEG standards’ success rate?

- Which future for MPEG?

- Why MPEG is part of ISO/IEC

- The discontinuity of digital technologies

- The impact of MPEG standards

- Still more to say about MPEG standards

- The MPEG work plan (March 2019)

- MPEG and ISO

- Data compression in MPEG

- More video with more features

- Matching technology supply with demand

- What would MPEG be without Systems?

- MPEG: what it did, is doing, will do

- The MPEG drive to immersive visual experiences

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age