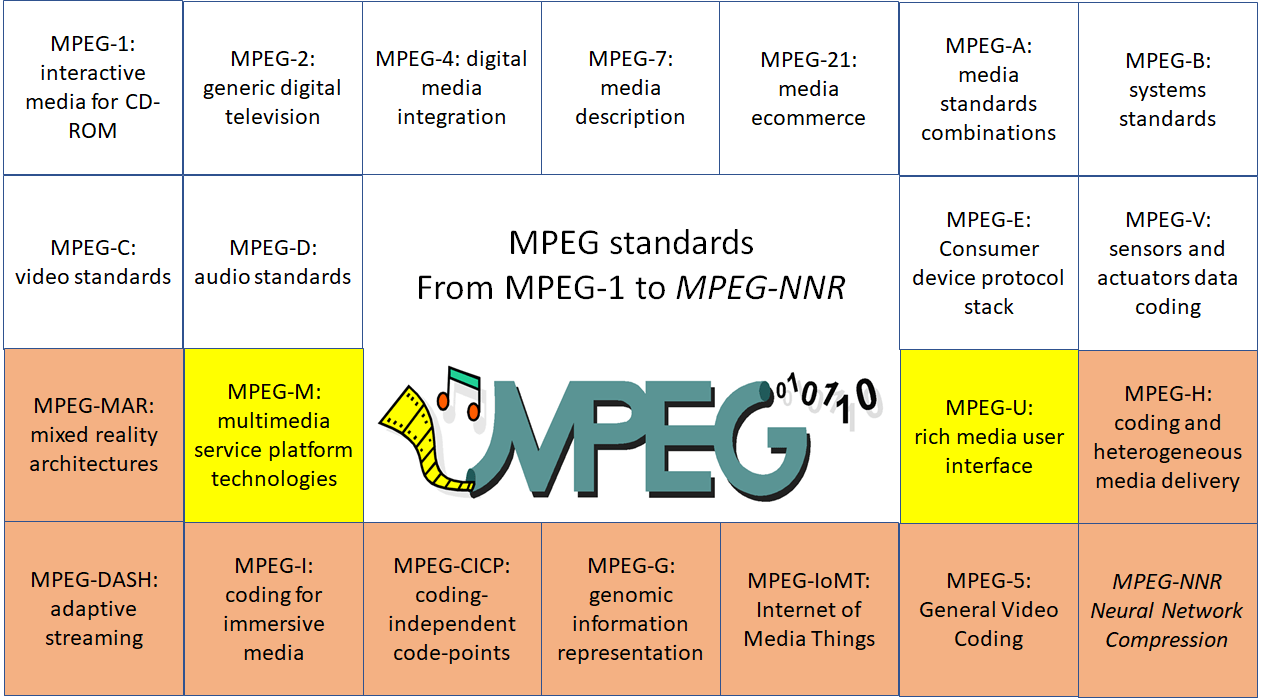

In “Is there a logic in MPEG standards?” and “There is more to say about MPEG standards” I gave an overview of the first 11 MPEG standards (white squares in Figure 1). In this article I would like to continue the overview and briefly present the remaining 11 MPEG standards, including those that are still being developed. Using the same convention as before, those marked yellow are standards on which no work has been done for a few years.

Figure 1 – The 22 MPEG standards. Those in colour are presented in this article

MPEG-MAR

When MPEG began the development of the Augmented Reality Application Format (ARAF) it also started a specification called Augmented Reality Reference Model. Later it became aware that SC 24 Computer graphics, image processing and environmental data representation was doing similar work, and joined forces with it to develop a standard called Mixed and Augmented Reality Reference Model.

In the Mixed and Augmented Reality (MAR) paradigm, representations of physical and computer mediated virtual objects are combined in various modalities. The MAR standard has been developed to enable

- The design of MAR applications or services. The designer may refer to the components specified in the MAR model architecture and select the ones needed, taking into account the given application/service requirements.

- The development of a MAR business model. Value chain and actors are identified in the Reference Model and implementors may map them to their business models or invent new ones.

- The extension of existing or creation of new MAR standards. MAR is interdisciplinary and creates ample opportunities for extending existing technology solutions and standards.

MAR-RM and ARAF paradigmatically express the differences between MPEG standardisation and “regular” IT standardisation. MPEG defines interfaces and technologies while IT standards typically define architectures and reference models. This explains why the majority of patent declarations that ISO receives relate to MPEG standards. It is also worth noting that in the 6 years it took to develop the standard, MPEG developed 3 editions of its ARAF standard.

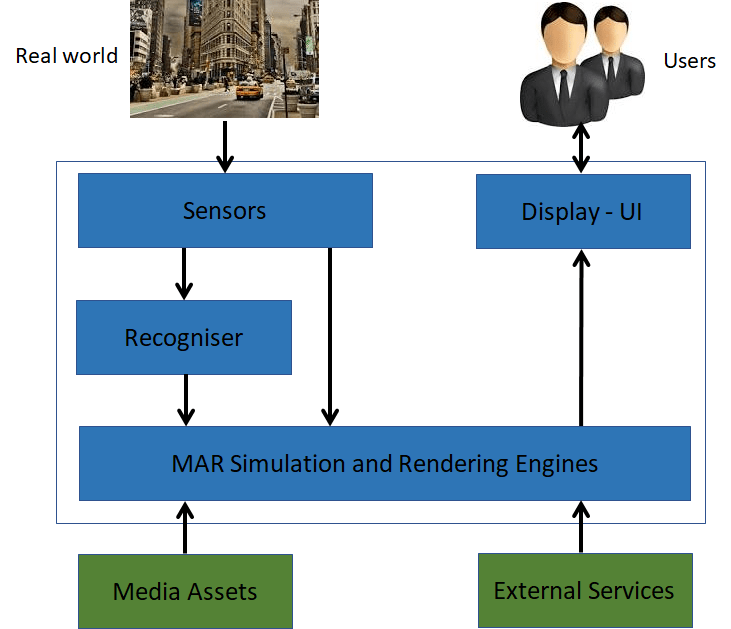

The Reference architecture of the MAR standard is depicted in the figure below.

Information from the real world is sensed and enters the MAR engine either directly or after being “understood”. The engine can also access media assets or external services. All information is processed by the engine which outputs the result of its processing and manages the interaction with the user.

Figure 2 – MAR Reference System Architecture

Based on this model, the standard elaborates the Enterprise Viewpoint with classes of actors, roles, business model and success criteria, the Computational Viewpoint with functionalities at the component level, and the Informational Viewpoint with data communication between components.

MAR-RM is a one-part standard.

MPEG-M

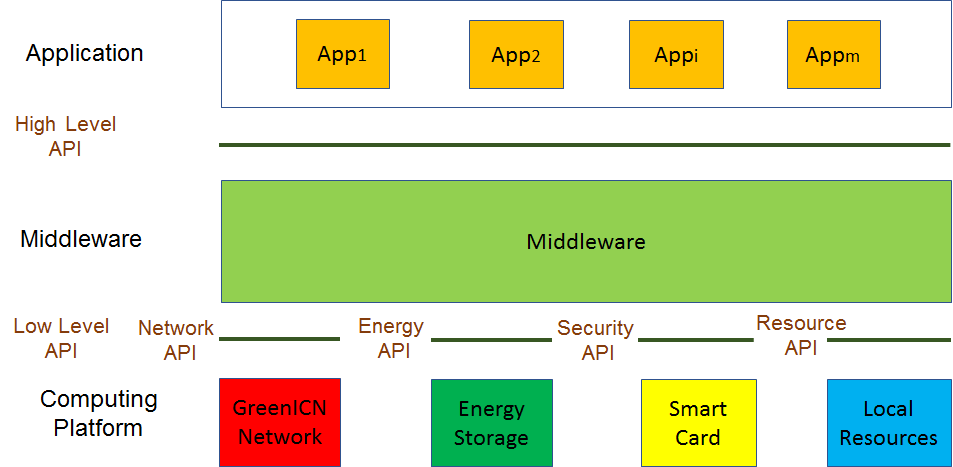

Multimedia service platform technologies (MPEG-M) specifies two main components of a multimedia device, called peer in MPEG-M.

As shown in Figure 3, the first component is API: High-Level API for applications and Low Level API for network, energy and security.

Figure 3 – High Level and Low Level MPEG-M API

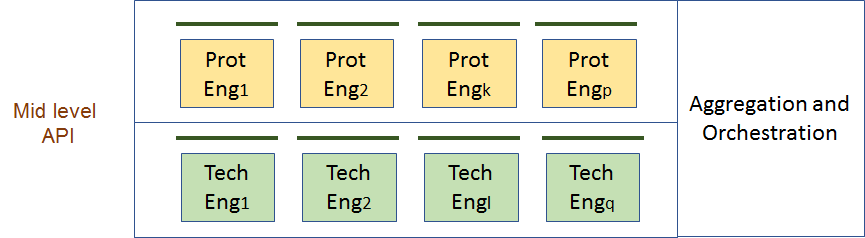

The second component is a middleware called MXM that relies specifically on MPEG multimedia technologies (Figure 4).

Figure 4 – The MXM architecture

The Middleware is composed of two types of engine. Technology Engines are used to call functionalities defined by MPEG standards, such as creating or interpreting a licence attached to a content item. Protocol Engines are used to communicate with other peers, e.g. in case a peer does not have a particular Technology Engine that another peer has. For instance, a peer can use a Protocol Engine to call a licence server to get a licence to attach to a multimedia content item. The MPEG-M middleware has the ability to create chains of Technology Engines (Orchestration) or Protocol Engines (Aggregation).
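The idea of chaining engines can be illustrated with a minimal sketch. Everything in it is hypothetical: the `Peer` class, the engine names and the dictionary used as a content item are illustrative assumptions and do not reproduce the actual MXM API. A lookup on a remote peer stands in for what a Protocol Engine would do over the network.

```python
from typing import Callable, Dict, List, Optional

# Hypothetical engine type: takes a content item (a dict) and returns an updated copy.
Engine = Callable[[dict], dict]


def metadata_engine(item: dict) -> dict:
    """Toy Technology Engine: attaches descriptive metadata to the item."""
    return {**item, "metadata": {"title": item["name"]}}


def licence_engine(item: dict) -> dict:
    """Toy Technology Engine: attaches a licence to the item."""
    return {**item, "licence": "play-only"}


class Peer:
    """Hypothetical peer holding a set of named Technology Engines."""

    def __init__(self, name: str, engines: Dict[str, Engine]):
        self.name = name
        self.engines = engines

    def orchestrate(self, chain: List[str], item: dict,
                    remote: Optional["Peer"] = None) -> dict:
        """Run the named engines in order (a chain of Technology Engines).

        If an engine is missing locally, fall back to the remote peer.
        This direct call is only a stand-in for a Protocol Engine exchange.
        """
        for engine_name in chain:
            engine = self.engines.get(engine_name)
            if engine is None and remote is not None:
                engine = remote.engines.get(engine_name)
            if engine is None:
                raise LookupError(f"no peer offers engine '{engine_name}'")
            item = engine(item)
        return item


device = Peer("device", {"metadata": metadata_engine})
licence_server = Peer("licence-server", {"licence": licence_engine})
result = device.orchestrate(["metadata", "licence"], {"name": "clip"},
                            remote=licence_server)
print(result)
```

Here the device has no licence engine of its own, so the licence step is served by the second peer, mirroring the licence server example in the text.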

MPEG-M is a 5-part standard

- Part 1 – Architecture specifies the architecture, and High and Low level API of Figure 3

- Part 2 – MPEG extensible middleware (MXM) API specifies the API of Figure 4

- Part 3 – Conformance and reference software

- Part 4 – Elementary services specifies the elementary services provided by the Protocol Engines

- Part 5 – Service aggregation specifies how elementary services can be aggregated.

MPEG-U

The development of the MPEG-U standard was motivated by the evolution of User Interfaces that integrate advanced rich media content such as 2D/3D, animations and video/audio clips, and aggregate dedicated small applications called widgets. These are standalone applications that are embedded in a Web page and rely on Web technologies (HTML, CSS, JS) or equivalent.

With its MPEG-U standard, MPEG sought to have a common UI on different devices, e.g. TV, Phone, Desktop and Web page.

Therefore MPEG-U extends W3C recommendations to

- Cover non-Web domains (Home network, Mobile, Broadcast)

- Support MPEG media types (BIFS and LASeR) and transports (MP4 FF and MPEG-2 TS)

- Enable Widget Communications with restricted profiles (without scripting)

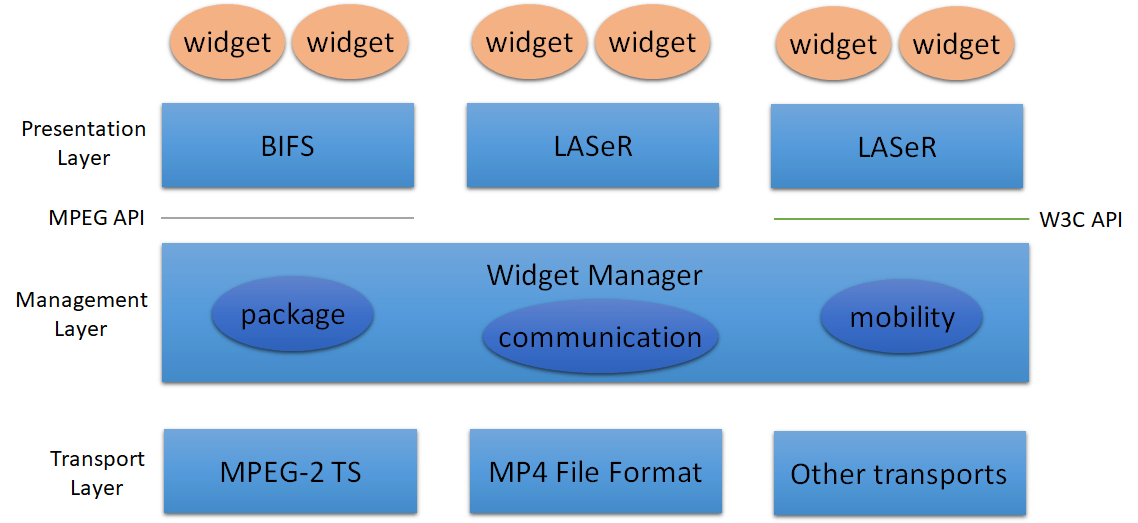

The MPEG-U architecture is depicted in Figure 5.

Figure 5 – MPEG-U Architecture

The normative behaviour of the Widget Manager includes the following elements of a widget

- Packaging formats

- Representation format (manifest)

- Life Cycle handling

- Communication handling

- Context and Mobility management

- Individual rendering (i.e. scene description normative behaviour)

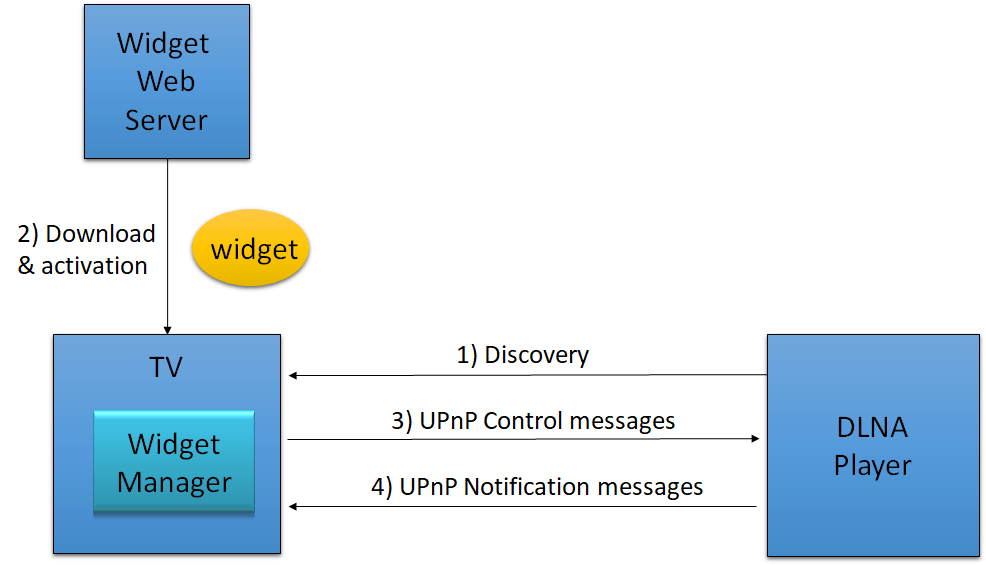

Figure 6 depicts the operation of an MPEG-U widget for TV in a DLNA environment.

Figure 6 – MPEG-U for TV in a DLNA environment

MPEG-U is a 3-part standard

- Part 1 – Widgets

- Part 2 – Additional gestures and multimodal interaction

- Part 3 – Conformance and reference software

MPEG-H

High efficiency coding and media delivery in heterogeneous environments (MPEG-H) is an integrated standard that resumes the original MPEG “one and trine” Systems-Video-Audio standards approach. In the wake of those standards, the 3 parts can be and are actually used independently, e.g. in video streaming applications. On the other hand, ATSC have adopted the full Systems-Video-Audio triad with extensions of their own.

MPEG-H has 15 parts, as follows

- Part 1 – MPEG Media Transport (MMT) is the solution for the new world of broadcasting where delivery of content can take place over different channels each with different characteristics, e.g. one-way (traditional broadcasting) and two-way (the ever more pervasive broadband network). MMT assumes that the Internet Protocol is common to all channels.

- Part 2 – High Efficiency Video Coding (HEVC) is the latest approved MPEG video coding standard supporting a range of functionalities: scalability, multiview, from 4:2:0 to 4:4:4, up to 16 bits, Wider Colour Gamut and High Dynamic Range and Screen Content Coding

- Part 3 – 3D Audio is the latest approved audio coding standard supporting enhanced 3D audio experiences

- Parts 4, 5 and 6 Reference software for MMT, HEVC and 3D Audio

- Parts 7, 8, 9 Conformance testing for MMT, HEVC and 3D Audio

- Part 10 – MPEG Media Transport FEC Codes specifies several Forward Error Correcting Codes for use by MMT.

- Part 11 – MPEG Composition Information specifies an extension to HTML 5 for use with MMT

- Part 12 – Image File Format specifies a file format for individual images and image sequences

- Part 13 – MMT Implementation Guidelines collects useful guidelines for MMT use

- Part 14 – Conversion and coding practices for high-dynamic-range and wide-colour-gamut video and Part 15 – Signalling, backward compatibility and display adaptation for HDR/WCG video are technical reports to guide users in supporting HDR/WCG.

MPEG-DASH

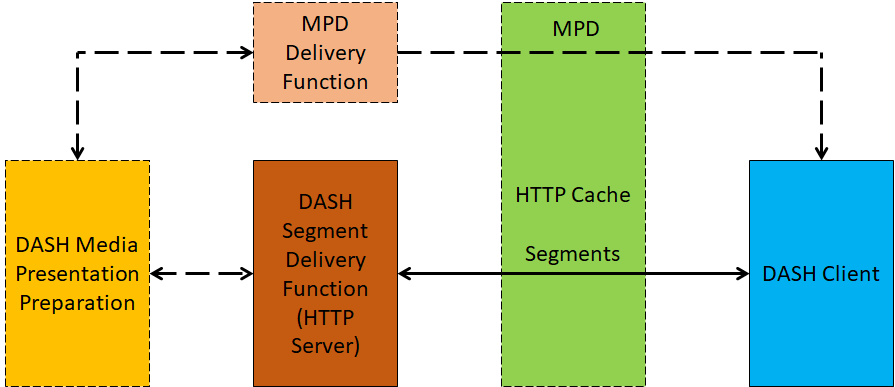

Dynamic adaptive streaming over HTTP (DASH) is a suite of standards for the efficient and easy streaming of multimedia using available HTTP infrastructure (particularly servers and CDNs, but also proxies, caches, etc.). DASH was motivated by the popularity of HTTP streaming and the existence of different protocols used in different streaming platforms, e.g. different manifest and segment formats.

By developing the DASH standard for HTTP streaming of multimedia content, MPEG has enabled a standard-based client to stream content from any standard-based server, thereby enabling interoperability between servers and clients of different vendors.

As depicted in Figure 7, the multimedia content is stored on an HTTP server in two components: 1) Media Presentation Description (MPD) which describes a manifest of the available content, its various alternatives, their URL addresses and other characteristics, and 2) Segments which contain the actual multimedia bitstreams in form of chunks, in single or multiple files.

Figure 7 – DASH model
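The split between MPD and Segments can be made concrete with a small client-side sketch. The manifest below is a hypothetical, heavily simplified MPD (real manifests carry many more elements and attributes), the server URL is a placeholder, and the selection rule (pick the highest bandwidth that fits the measured throughput) is only one simple adaptation strategy, not something the standard prescribes.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified MPD with three alternatives of the same video.
MPD_XML = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static">
  <Period>
    <AdaptationSet mimeType="video/mp4">
      <SegmentTemplate media="video/$RepresentationID$/seg-$Number$.m4s" startNumber="1"/>
      <Representation id="low" bandwidth="500000"/>
      <Representation id="mid" bandwidth="1500000"/>
      <Representation id="high" bandwidth="4000000"/>
    </AdaptationSet>
  </Period>
</MPD>"""

NS = {"dash": "urn:mpeg:dash:schema:mpd:2011"}


def parse_mpd(mpd_xml):
    """Return the segment URL template and the (id, bandwidth) pairs, sorted by bandwidth."""
    root = ET.fromstring(mpd_xml)
    aset = root.find("dash:Period/dash:AdaptationSet", NS)
    template = aset.find("dash:SegmentTemplate", NS).get("media")
    reps = [(r.get("id"), int(r.get("bandwidth")))
            for r in aset.findall("dash:Representation", NS)]
    return template, sorted(reps, key=lambda rep: rep[1])


def pick_representation(reps, throughput_bps):
    """Pick the highest-bandwidth alternative that fits; fall back to the lowest."""
    fitting = [rep for rep in reps if rep[1] <= throughput_bps]
    return fitting[-1] if fitting else reps[0]


def segment_url(base_url, template, rep_id, number):
    """Expand the template identifiers into the URL of one Segment."""
    path = template.replace("$RepresentationID$", rep_id).replace("$Number$", str(number))
    return base_url.rstrip("/") + "/" + path


template, reps = parse_mpd(MPD_XML)
rep_id, _ = pick_representation(reps, throughput_bps=2_000_000)
# Placeholder server; a real client would issue an HTTP GET for this URL.
print(segment_url("https://example.com/content", template, rep_id, 3))
```

Because the client re-evaluates the choice for every Segment, it can switch between alternatives as the available throughput changes, using nothing more than ordinary HTTP requests.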

Currently DASH is composed of 8 parts

- Part 1 – Media presentation description and segment formats specifies 1) the Media Presentation Description (MPD) which provides sufficient information for a DASH client to adaptively stream the content by downloading the media segments from an HTTP server, and 2) the segment formats which specify the formats of the entity body of the request response when issuing an HTTP GET request or a partial HTTP GET.

- Part 2 – Conformance and reference software is the regular component of an MPEG standard

- Part 3 – Implementation guidelines provides guidance to implementors

- Part 4 – Segment encryption and authentication specifies encryption and authentication of DASH segments

- Part 5 – Server and Network Assisted DASH specifies asynchronous network-to-client and network-to-network communication of quality-related assisting information

- Part 6 – DASH with Server Push and WebSockets specifies the carriage of MPEG-DASH media presentations over full duplex HTTP-compatible protocols, particularly HTTP/2 and WebSockets

- Part 7 – Delivery of CMAF content with DASH specifies how the content specified by the Common Media Application Format can be carried by DASH

- Part 8 – Session based DASH operation will specify a method for MPD to manage DASH sessions for the server to instruct the client about some operation continuously applied during the session.

MPEG-I

Coded representation of immersive media (MPEG-I) represents the current MPEG effort to develop a suite of standards to support immersive media products, services and applications.

Currently MPEG-I has 11 parts but more parts are likely to be added.

- Part 1 – Immersive Media Architectures outlines possible architectures for immersive media services.

- Part 2 – Omnidirectional MediA Format specifies an application format that enables consumption of omnidirectional video (aka Video 360). Version 2 is under development

- Part 3 – Immersive Video Coding will specify the emerging Versatile Video Coding standard

- Part 4 – Immersive Audio Coding will specify metadata to enable enhanced immersive audio experiences compared to what is possible today with MPEG-H 3D Audio

- Part 5 – Video-based Point Cloud Compression will specify a standard to compress dense static and dynamic point clouds

- Part 6 – Immersive Media Metrics will specify different parameters useful for immersive media services and their measurability

- Part 7 – Immersive Media Metadata will specify systems, video and audio metadata for immersive experiences. One example is the current 3DoF+ Video activity

- Part 8 – Network-Based Media Processing will specify APIs to access remote media processing services

- Part 9 – Geometry-based Point Cloud Compression will specify a standard to compress sparse static and dynamic point clouds

- Part 10 – Carriage of Point Cloud Data will specify how to accommodate compressed point clouds in the MP4 File Format

- Part 11 – Implementation Guidelines for Network-based Media Processing is the usual collection of guidelines

MPEG-CICP

Coding-Independent Code-Points (MPEG-CICP) is a collection of code points that have been assembled in single media- and technology-specific documents because they are not standard-specific.

Part 1 – Systems, Part 2 – Video and Part 3 – Audio collect the respective code points and Part 4 – Usage of video signal type code points contains guidelines for their use.

MPEG-G

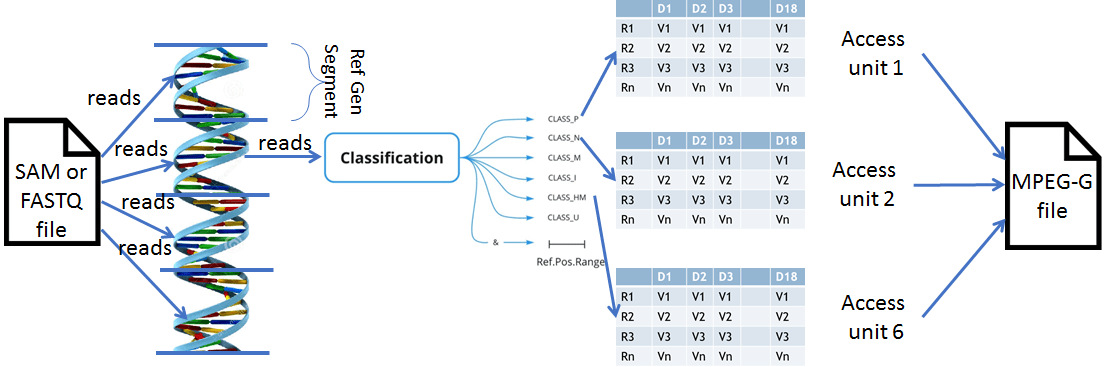

Genomic Information Representation (MPEG-G) is a suite of specifications, developed jointly with TC 276 Biotechnology, that reduces the amount of information required to losslessly store and transmit DNA reads from high speed sequencing machines.

Figure 8 depicts the encoding process

An MPEG-G file can be created with the following sequence of operations:

- Put the reads in the input file (aligned or unaligned) in bins corresponding to segments of the reference genome

- Classify the reads in each bin in 6 classes: P (perfect match with the reference genome), M (reads with variants), etc.

- Convert the reads of each bin to a subset of 18 descriptors specific to the class: e.g., a class P descriptor is the start position of the read etc.

- Put the descriptors in the columns of a matrix

- Compress each descriptor column (MPEG-G uses the very efficient CABAC compressor already present in several video coding standards)

- Put compressed descriptors of a class of a bin in an Access Unit (AU) for a maximum of 6 AUs per bin
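The sequence of operations above can be sketched as a toy pipeline. This is an illustration under strong assumptions, not the MPEG-G algorithm: only two of the six classes are modelled (P and M), the descriptor names `pos`, `len` and `mismatches` are hypothetical, the reference genome and bin size are made up, and `zlib` stands in for the CABAC compressor.

```python
import zlib
from collections import defaultdict

REFERENCE = "ACGTACGTACGTACGTACGTACGTACGTACGT"  # toy reference genome (assumption)
BIN_SIZE = 16                                   # toy bin width in bases (assumption)


def classify(read, pos):
    """Class 'P' if the read matches the reference exactly, otherwise 'M'."""
    return "P" if read == REFERENCE[pos:pos + len(read)] else "M"


def descriptors(read, pos, cls):
    """Turn one aligned read into class-specific descriptors (hypothetical names)."""
    desc = {"pos": str(pos), "len": str(len(read))}
    if cls == "M":
        ref = REFERENCE[pos:pos + len(read)]
        # Record the offset and the substituted base of every mismatch.
        desc["mismatches"] = ";".join(
            f"{i}{base}" for i, (r, base) in enumerate(zip(ref, read)) if r != base
        )
    return desc


def encode(aligned_reads):
    """Bin and classify reads, build descriptor columns, compress each column.

    Returns {(bin_index, class): {descriptor_name: compressed_bytes}}, where each
    value plays the role of one Access Unit.
    """
    groups = defaultdict(list)
    for pos, read in aligned_reads:
        cls = classify(read, pos)
        groups[(pos // BIN_SIZE, cls)].append(descriptors(read, pos, cls))

    access_units = {}
    for key, rows in groups.items():
        columns = defaultdict(list)
        for row in rows:
            for name, value in row.items():
                columns[name].append(value)
        # zlib is only a stand-in here; MPEG-G uses CABAC.
        access_units[key] = {
            name: zlib.compress(",".join(values).encode())
            for name, values in columns.items()
        }
    return access_units


reads = [(0, "ACGTACGT"), (4, "ACGAACGT"), (20, "ACGTACGT")]
for (bin_index, cls), unit in sorted(encode(reads).items()):
    print(bin_index, cls, sorted(unit))
```

Grouping values of the same descriptor into one column is what makes the compression step effective: each column holds data of the same kind, so the compressor can exploit its statistics.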

Figure 8 – MPEG-G compression

MPEG-G currently includes 6 parts

- Part 1 – Transport and Storage of Genomic Information specifies the file and streaming formats

- Part 2 – Genomic Information Representation specifies the algorithm to compress DNA reads from high speed sequencing machines

- Part 3 – Genomic information metadata and application programming interfaces (APIs) specifies metadata and API to access an MPEG-G file

- Part 4 – Reference Software and Part 5 – Conformance are the usual components of a standard

- Part 6 – Genomic Annotation Representation will specify how to compress annotations.

MPEG-IoMT

Internet of Media Things (MPEG-IoMT) is a suite of specifications:

- API to discover Media Things,

- Data formats and API to enable communication between Media Things.

A Media Thing (MThing) is the media “version” of IoT’s Things.

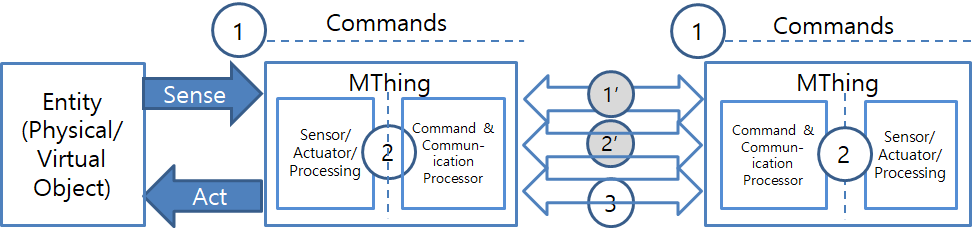

The IoMT reference model is represented in Figure 9

Figure 9: IoT in MPEG is for media – IoMT

Currently MPEG-IoMT includes 4 parts

- Part 1 – IoMT Architecture will specify the architecture

- Part 2 – IoMT Discovery and Communication API specifies Discovery and Communication API

- Part 3 – IoMT Media Data Formats and API specifies Media Data Formats and API

- Part 4 – Reference Software and Conformance is the usual part of MPEG standards

MPEG-5

General Video Coding (MPEG-5) is expected to contain video coding specifications. Currently two specifications are envisaged

- Part 1 – Essential Video Coding is expected to be the specification of a video codec with two layers. The first layer will provide a significant improvement over AVC but significantly less than HEVC and the second layer will provide a significant improvement over HEVC but significantly less than VVC.

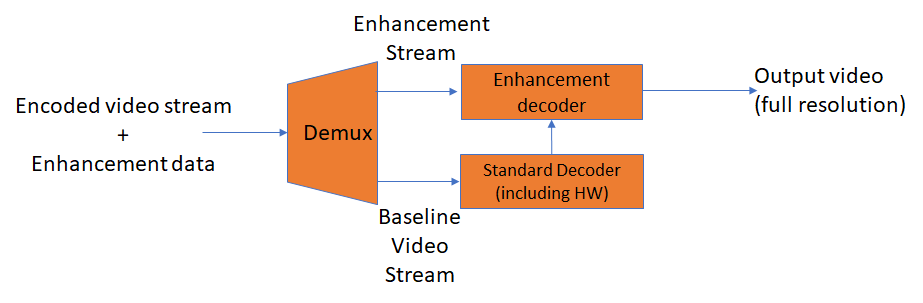

- Part 2 – Low Complexity Video Coding Enhancements is expected to be the specification of a data stream structure defined by two component streams: a base stream decodable by a hardware decoder, and an enhancement stream suitable for software processing implementation with sustainable power consumption. The enhancement stream will provide new features, such as extending the compression capability of existing codecs and lowering encoding and decoding complexity, for on demand and live streaming applications. The LCEVC decoder is depicted in Figure 10.

Figure 10: Low Complexity Enhancement Video Coding
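The two-stream principle can be illustrated with a toy sketch. It assumes a base layer at half resolution, nearest-neighbour upsampling and lossless residuals. These are simplifications chosen for readability, not the actual LCEVC tools, and all function names are hypothetical.

```python
def downsample2x(frame):
    """Average each 2x2 block (integer arithmetic) to get the base-layer picture."""
    return [
        [(frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]) // 4
         for x in range(0, len(frame[0]), 2)]
        for y in range(0, len(frame), 2)
    ]


def upsample2x(frame):
    """Nearest-neighbour upsampling: every sample becomes a 2x2 block."""
    out = []
    for row in frame:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def make_enhancement(original, base):
    """Encoder side: residuals between the original and the upsampled base."""
    up = upsample2x(base)
    return [[o - u for o, u in zip(orow, urow)] for orow, urow in zip(original, up)]


def reconstruct(base, residuals):
    """Decoder side: upsample the decoded base and add the enhancement residuals."""
    up = upsample2x(base)
    return [[u + r for u, r in zip(urow, rrow)] for urow, rrow in zip(up, residuals)]


original = [
    [10, 12, 50, 52],
    [11, 13, 51, 53],
    [90, 92, 20, 22],
    [91, 93, 21, 23],
]
base = downsample2x(original)          # would be coded by an existing (hardware) codec
residuals = make_enhancement(original, base)
print(reconstruct(base, residuals) == original)
```

In this sketch the base picture is all an existing decoder needs to produce, while the addition of residuals is a simple per-sample operation of the kind that suits software processing.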

That’s all?

Well, yes, in terms of standards that have been developed, are being developed or being extended, or for which MPEG thinks that a standard should be developed. Well, no, because MPEG is a forge of ideas and new proposals may come at every meeting.

Currently MPEG is investigating the following topics

- In advance signalling of MPEG containers content is motivated by scenarios where the full content of a file is not available to a player but the player needs to take a decision to retrieve the file or not. Therefore the player needs to have sufficient information to determine if it can/cannot play the entire content or only a part.

- Data Compression continues the exploration in search for non typical media areas that can benefit from MPEG’s compression expertise. Currently MPEG is investigating Data compression for machine tools.

- MPEG-21 Based Smart Contracts investigates the benefits of converting MPEG-21 contract technologies, which can be human readable, to smart contracts for execution on blockchains.
