Introduction

The purpose of this article is to offer some glimpses of 7 (actually 12, counting JVET activity) days of hard work at the 127th MPEG meeting (8 to 12 July 2019) in Gothenburg, Sweden.

MPEG 127 was an interesting conjunction of the stars: the first MPEG meeting in Sweden (Stockholm, July 1989) was #7 (111 binary) and the most recent meeting in Sweden (Gothenburg, July 2019) was #127 (1111111 binary). Will there be a 255th (11111111 binary) meeting in Sweden in July 2049? Maybe not, looking at some odd (one might say suicidal) proposals for the future of MPEG.
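The binary arithmetic is easy to check: 7, 127 and 255 are each one less than a power of two, so every bit is set. The short Python sketch below prints each number's binary form with the built-in `bin()` and tests the all-ones property with a standard bit trick; the helper names are ours.

```python
def binary_digits(n: int) -> str:
    """Return the binary representation of n without the '0b' prefix."""
    return bin(n)[2:]


def is_all_ones(n: int) -> bool:
    """True if n == 2**k - 1 for some k >= 1, i.e. all bits are set."""
    return n > 0 and (n & (n + 1)) == 0


for meeting in (7, 127, 255):
    print(meeting, binary_digits(meeting), is_all_ones(meeting))
# 7 111 True
# 127 1111111 True
# 255 11111111 True
```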

Let’s start with the big news, logistical but emblematic. For a few years the number of MPEG participants has hovered around 500, but in Gothenburg it crossed the 600 mark for the first time. Clearly MPEG remains a highly attractive business proposition if it can mobilise such a large mass of experts.

It is not my intention to talk about everything that happened at MPEG 127. I will concentrate on some major results starting from, guess what, video.

MPEG-I Versatile Video Coding (VVC)

Versatile Video Coding (VVC) reached the first formal stage in the ISO/IEC standards approval process: Committee Draft (CD). This is the stage of a technical document that has been developed by experts but has not undergone any official scrutiny outside the committee.

The VVC standard has been designed to be applicable to a very broad range of applications, with substantial improvements compared to older standards but also with new functionalities. It is too early to announce a definitive level of improvement in coding efficiency, but the current estimate is a 35–60% bitrate reduction compared to HEVC across video types ranging from 1080p HD to 4K and 8K, for both standard and high dynamic range video, and also for wide colour gamut.
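To put the estimated range in concrete terms, the following Python sketch converts a fractional bitrate reduction into the bitrate VVC would need for the same quality. The 20 Mbit/s HEVC figure is a hypothetical input chosen for illustration, not a measured result, and the function name is ours.

```python
def vvc_bitrate_range(hevc_bitrate_mbps: float,
                      min_reduction: float = 0.35,
                      max_reduction: float = 0.60) -> tuple:
    """Return (low, high) VVC bitrate in Mbit/s for the same quality,
    given an HEVC bitrate and a fractional bitrate-reduction range."""
    low = hevc_bitrate_mbps * (1.0 - max_reduction)   # best case: 60% saving
    high = hevc_bitrate_mbps * (1.0 - min_reduction)  # worst case: 35% saving
    return low, high


# Hypothetical example: content that HEVC encodes at 20 Mbit/s.
low, high = vvc_bitrate_range(20.0)
print(f"VVC estimate: {low:.1f} to {high:.1f} Mbit/s")  # VVC estimate: 8.0 to 13.0 Mbit/s
```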

Beyond this “flash news”-style announcement, it is important to highlight that some 800 documents were reviewed at MPEG 127 to produce the VVC CD. Many experts worked until close to midnight to process all input documents.

MPEG-5 Essential Video Coding (EVC)

Another video coding standard reached CD level at MPEG 127. Why did two video coding standards reach CD at the same meeting? The answer is simple: as a provider of digital media standards, MPEG has VVC as its top-of-the-line video compression “product”, but it has other “products” under development that are meant to satisfy different needs.

One of these needs concerns “complexity”, a multi-dimensional entity, and VVC is “complex” in several respects. Therefore the goal of EVC is not to provide the best video quality money can buy, which is what VVC does, but a standard video coding solution for business needs covering cases, such as video streaming, where MPEG video coding standards have hitherto not enjoyed the wide adoption that their technical characteristics suggested they should have.

Currently EVC includes two profiles:

- A baseline profile that contains only technologies that are over 20 years old or are otherwise expected to be obtainable royalty-free by a user.

- A main profile with a small number of additional tools, each providing significant performance gain. All main profile tools are capable of being individually switched off or individually switched over to a corresponding baseline tool.
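The switching rule for main profile tools can be pictured as a small configuration model. In the Python sketch below the tool names and the main-to-baseline mapping are hypothetical placeholders (the actual EVC tool list is not given here); it only illustrates that each main profile tool can be switched off on its own or replaced by a corresponding baseline tool.

```python
# Hypothetical mapping: main profile tool -> baseline counterpart.
# None means the tool is simply switched off, with no baseline replacement.
MAIN_TO_BASELINE = {
    "main_tool_a": "baseline_tool_a",
    "main_tool_b": "baseline_tool_b",
    "main_tool_c": None,
}


def effective_toolset(enabled: dict) -> list:
    """Return the tools actually used, given per-tool on/off switches.

    Tools missing from `enabled` default to switched on.
    """
    used = []
    for main_tool, baseline_tool in MAIN_TO_BASELINE.items():
        if enabled.get(main_tool, True):
            used.append(main_tool)
        elif baseline_tool is not None:
            used.append(baseline_tool)  # switched over to the baseline tool
    return used


# Switch one tool over to its baseline equivalent and another off entirely.
print(effective_toolset({"main_tool_a": False, "main_tool_c": False}))
# ['baseline_tool_a', 'main_tool_b']
```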

Worth noting is the fact that organisations making proposals for the main profile have agreed to publish applicable licensing terms, either individually or as part of a patent pool, within two years of the FDIS stage.

MPEG-5 Low Complexity Enhancement Video Coding (LCEVC)

LCEVC is another video compression technology MPEG is working on. This is still at Working Draft (WD) level, but the plans call for achieving CD level at the next meeting.

LCEVC specifies a data stream structure made up of two component streams: a base stream decodable by a hardware decoder, and an enhancement stream suitable for software processing implementation with sustainable power consumption. The enhancement stream will provide new features, such as extending the compression capability of existing codecs and lowering encoding and decoding complexity. The standard is intended for on-demand and live streaming applications.
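The two-stream idea can be illustrated with a toy reconstruction in Python. It assumes, as a simplification, that the enhancement stream carries residuals at twice the base resolution which are added to an upsampled base picture; the nearest-neighbour upsampling, function names and pixel values are illustrative choices, not the normative LCEVC process.

```python
def upsample_2x(frame):
    """Nearest-neighbour 2x upsampling of a 2-D list of pixel values."""
    out = []
    for row in frame:
        wide = [p for p in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(wide)
        out.append(list(wide))                     # repeat each row vertically
    return out


def reconstruct(base_frame, residuals, max_value=255):
    """Add enhancement residuals to the upsampled base picture and clip."""
    up = upsample_2x(base_frame)
    return [
        [min(max(b + r, 0), max_value) for b, r in zip(up_row, res_row)]
        for up_row, res_row in zip(up, residuals)
    ]


base = [[100, 200]]             # 1x2 picture from the (hardware) base decoder
residuals = [[5, -5, 60, 0],    # 2x4 residuals from the enhancement stream
             [0, 0, -10, 10]]
print(reconstruct(base, residuals))  # [[105, 95, 255, 200], [100, 100, 190, 210]]
```

The point of the split is that the base decoder does not need to change: only the lightweight addition of residuals runs in software.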

It should be noted that LCEVC is not, stricto sensu, a video coding standard like VVC or EVC, but does cover the business need of enhancing a large number of deployed set top boxes with new capabilities without replacing them.

3 Degrees of Freedom+ (3DoF+) Video

This activity, still at an early (WD) stage, is planned to reach CD stage in January 2020. The standard will allow an encoder to send a limited number of views of a scene so that a decoder can display specific views at the request of the user. If the request is for a view that is actually available in the bitstream, the decoder will simply display it. If the request is for a view that is not in the bitstream, the decoder will synthesise it using all available information.
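The decoder behaviour described above (display a transmitted view, otherwise synthesise one) can be sketched in Python. This is a deliberately crude stand-in: views are one-dimensional pixel rows indexed by a camera position, and synthesis is a distance-weighted blend of the two nearest transmitted views, whereas real 3DoF+ rendering relies on far richer information such as depth. All names are hypothetical.

```python
def render_view(requested_pos, views):
    """Return the pixel row for a requested camera position.

    views: dict mapping camera position (float) -> list of pixel values.
    A transmitted view is returned as-is; otherwise the two nearest
    transmitted views are blended, weighted by distance (toy synthesis).
    Positions outside the transmitted range are clamped to the nearest view.
    """
    if requested_pos in views:
        return views[requested_pos]
    positions = sorted(views)
    if requested_pos <= positions[0]:
        return views[positions[0]]
    if requested_pos >= positions[-1]:
        return views[positions[-1]]
    # Neighbouring transmitted views on either side of the request.
    left = max(p for p in positions if p < requested_pos)
    right = min(p for p in positions if p > requested_pos)
    w = (requested_pos - left) / (right - left)
    return [(1 - w) * a + w * b for a, b in zip(views[left], views[right])]


print(render_view(0.25, {0.0: [0, 100], 1.0: [100, 200]}))  # [25.0, 125.0]
```

With fewer transmitted views, requested positions lie farther from real ones and the synthesis has to guess more, which is the trend visible in Figure 1.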

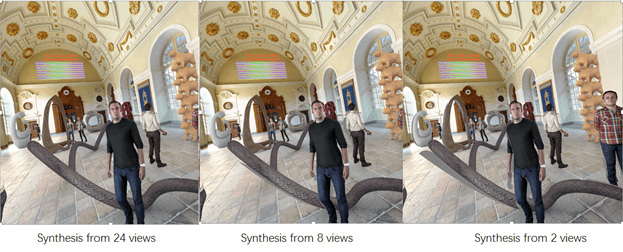

Figure 1 shows the effect of decreasing the number of views available at the decoder. With 32 views the image looks perfect, with 8 views there are barely visible artifacts on the tube on the floor, but with only two views artifacts become noticeable.

Of course this is an early stage result that will further be improved until the standard reaches Final Draft International Standard (FDIS) stage in October 2020.

Figure 1 – Quality of synthesised video as a function of the number of views

Video coding for machines

This is an example of work that looks brand new to MPEG but has roots in its past.

In 1996 MPEG started working on MPEG-7, a standard to describe images, video, audio and multimedia data. The idea was that a user would tell a machine what was being looked for. The machine would then convert the request into some standard descriptors and use them to search the database where the descriptors of all content of interest had been stored.
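The search step of this scenario can be sketched as a nearest-neighbour lookup. In the Python example below the descriptors are made-up three-component vectors and Euclidean distance is an assumed matching criterion; MPEG-7 standardises descriptors, not this particular search procedure.

```python
import math


def search(query, database, k=1):
    """Return the k database keys whose descriptors are closest to the query."""
    ranked = sorted(database, key=lambda key: math.dist(query, database[key]))
    return ranked[:k]


# Hypothetical stored descriptors for three pieces of content.
database = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.8, 0.1],
    "city.jpg": [0.2, 0.2, 0.9],
}

# The machine has converted the user's request into this query descriptor.
print(search([0.15, 0.75, 0.2], database))  # ['forest.jpg']
```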

It probably goes without saying that the descriptors had a compressed representation, because moving data is always “costly”.

Some years ago, MPEG revisited the issue and developed Compact Descriptors for Visual Search (CDVS). The standard was meant to provide a unified and interoperable framework for devices and services in the area of visual search and object recognition.

Soon after CDVS, MPEG revisited the issue for video and developed Compact Descriptors for Video Analysis (CDVA). The standard is intended to enable interoperable applications for object search, minimise the size of video descriptors and ensure high matching performance of objects (both in accuracy and complexity).

As the “compact” adjective in both standards signals, CDVS and CDVA descriptors are compressed, with a user-selectable compression ratio.
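A user-selectable compression ratio can be illustrated with uniform quantisation of descriptor components: fewer bits per component give a smaller descriptor at the cost of precision. The Python sketch below is a generic illustration under that assumption, with a hypothetical 128-component descriptor; it is not the compression scheme actually specified in CDVS or CDVA.

```python
def quantise(descriptor, bits):
    """Uniformly quantise components in [0, 1] to integers on `bits` bits."""
    levels = (1 << bits) - 1
    return [round(min(max(x, 0.0), 1.0) * levels) for x in descriptor]


def dequantise(codes, bits):
    """Map integer codes back to approximate values in [0, 1]."""
    levels = (1 << bits) - 1
    return [c / levels for c in codes]


def size_in_bytes(num_components, bits):
    """Size of the packed descriptor, rounded up to whole bytes."""
    return (num_components * bits + 7) // 8


descriptor = [0.12, 0.5, 0.87, 0.33] * 32  # 128 hypothetical components
for bits in (8, 4, 1):  # the "user-selectable" knob
    print(bits, "bits/component ->", size_in_bytes(len(descriptor), bits), "bytes")
# 8 bits/component -> 128 bytes
# 4 bits/component -> 64 bytes
# 1 bits/component -> 16 bytes
```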

Recently MPEG has defined requirements, issued a call for evidence and a call for proposals, and developed a WD of a standard whose long name is “Compression of neural networks for multimedia content description and analysis”. For simplicity, let’s call it Neural Network Representation (NNR).

Artificial neural networks are already used for descriptor extraction, classification and encoding of multimedia content. A case in point is CDVA, which already uses several neural networks in its algorithm.

The efficient transmission and deployment of neural networks for multimedia applications require methods to compress these large data structures. NNR defines tools for compressing neural networks for multimedia applications and representing the resulting bitstreams for efficient transport. Distributing a neural network to billions of people may be hard to achieve if the neural network is not compressed.
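One generic way to shrink a neural network is uniform scalar quantisation of its weights. The Python sketch below applies it to a hypothetical list of weights; it only illustrates why compression helps and is not the NNR toolset, which this article does not detail.

```python
def quantise_weights(weights, bits=8):
    """Uniform scalar quantisation of a flat list of float weights.

    Returns (codes, scale, offset) such that w is approximately offset + code * scale.
    """
    lo, hi = min(weights), max(weights)
    levels = (1 << bits) - 1
    # Guard against a constant tensor, where hi == lo.
    scale = (hi - lo) / levels if hi > lo else 1.0
    codes = [round((w - lo) / scale) for w in weights]
    return codes, scale, lo


def dequantise_weights(codes, scale, offset):
    """Map integer codes back to approximate float weights."""
    return [offset + c * scale for c in codes]


weights = [-0.42, 0.0, 0.13, 0.77, -0.05, 0.31]  # hypothetical layer weights
codes, scale, offset = quantise_weights(weights, bits=8)
restored = dequantise_weights(codes, scale, offset)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
# float32 weights -> 8-bit codes: about 4x smaller, ignoring the (scale, offset) header.
print(f"max error {max_error:.4f}, size ratio {32 // 8}x")
```

Real network compression typically combines quantisation with entropy coding and other tools, so the 4x figure is only the contribution of this single step.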

I am now ready to introduce the new, but also old, idea behind video coding for machines. The MPEG “bread and butter” video coding technology is a sort of descriptor extraction: DCT (or other transform) coefficients provide the average value of a region and its frequency content, motion vectors describe how certain areas in the image move from frame to frame, etc.
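The “descriptor” reading of transform coefficients can be checked numerically. The Python sketch below computes an orthonormal 2-D DCT-II of an 8×8 block directly from its definition (slow, but easy to follow). For this normalisation the DC coefficient equals N times the block mean, i.e. 8 × 105 = 840 for the hypothetical checkerboard block used here, while the alternating pattern shows up in the higher-frequency coefficients.

```python
import math


def dct_2d(block):
    """Orthonormal 2-D DCT-II of a square block (list of lists), O(N^4)."""
    n = len(block)

    def c(k):
        return math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):
        for v in range(n):
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            out[u][v] = c(u) * c(v) * s
    return out


# Hypothetical 8x8 block: a checkerboard alternating between 100 and 110.
block = [[(x + y) % 2 * 10 + 100 for y in range(8)] for x in range(8)]
coeffs = dct_2d(block)
mean = sum(map(sum, block)) / 64
print(round(coeffs[0][0], 6), 8 * mean)  # 840.0 840.0
```

A smooth block would concentrate almost all its energy in the DC term; the checkerboard instead puts its largest non-DC coefficient at the highest-frequency position (7, 7).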

So far, video coding “descriptors” have been designed to achieve the best visual quality, as assessed by humans, at a given bitrate. The question asked by video coding for machines is: “what descriptors provide the best performance for use by a machine at a given bitrate?”

A tough question, for which there is currently no answer.

If you want to contribute to the answer, you can join the email reflector after subscribing here. MPEG 128 will be eager to know how the question has been addressed.

A consideration

MPEG is a unique organisation with activities covering the entire scope of a standard: idea, requirements, technologies, integration and specification.

How can mindless industry elements think they can do better than a Darwinian process that has shaped the MPEG machine for 30 years?

Maybe they think they are God, because only He can perform better than Darwin.
