It is now a few months since I last talked about the standards being developed by MPEG. As the group's dynamics are fast, I think it is time for an update on the main areas of standardisation: Video, Audio, Point Clouds, Fonts, Neural Networks, Genomic data, Scene description, Transport, File Format and API. You will also find a few words on three explorations that MPEG is carrying out.

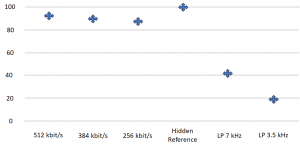

Video continues to be a very active area of work. New SEI messages are being defined for HEVC, while there is high activity in VVC, which is due to reach FDIS in July 2020. Verification Tests for VVC have not been carried out yet, but the expectation is that VVC will bring the overall compression of video to a factor of about 1000, as can be seen from the following table, where the bitrate reduction of each standard is measured with respect to that of the previous standard. The MPEG-1 bitrate reduction is measured with respect to uncompressed video, and the VVC bitrate reduction is estimated.

| Standard | Bitrate reduction | Year |
|---|---|---|
| MPEG-1 Video | -98% | 1992 |
| MPEG-2 Video | -50% | 1994 |
| MPEG-4 Visual | -25% | 1999 |
| MPEG-4 AVC | -30% | 2003 |
| MPEG-H HEVC | -60% | 2013 |
| MPEG-I VVC | -50% | 2020 |

The compression factor of about 1000 is obtained by computing the inverse of 0.02 × 0.5 × 0.75 × 0.7 × 0.4 × 0.5 = 0.00105, which gives roughly 950.
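The arithmetic can be checked with a few lines of Python. This is only a sketch of the calculation described above: the reduction figures are copied from the table (the VVC one being an estimate), while the variable names are mine.

```python
from math import prod

# Bitrate reductions from the table, each relative to the previous standard
# (MPEG-1 relative to uncompressed video; the VVC figure is an estimate).
reductions = {
    "MPEG-1 Video": 0.98,
    "MPEG-2 Video": 0.50,
    "MPEG-4 Visual": 0.25,
    "MPEG-4 AVC": 0.30,
    "MPEG-H HEVC": 0.60,
    "MPEG-I VVC": 0.50,
}

# Fraction of the bitrate that remains after each generation.
remaining = [1.0 - r for r in reductions.values()]

# Multiplying the remaining fractions gives the residual bitrate of VVC
# relative to uncompressed video; its inverse is the compression factor.
residual_fraction = prod(remaining)
compression_factor = 1.0 / residual_fraction

print(f"residual bitrate fraction: {residual_fraction:.5f}")
print(f"overall compression factor: {compression_factor:.0f}")
```

The result is a factor of about 952, which is where the round figure of 1000 comes from.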

SEI messages for VVC are now being collected in MPEG-C Part 7 “SEI messages for coded video bitstreams”. The specification of SEI messages is generic in the sense that SEI messages can be transported either in the video bitstream or at the Systems layer. Care is also taken to make the transport of these messages possible with previous video coding standards.

MPEG CICP (Coding-Independent Code-Points) Part 4 “Usage of video signal type code points” has been released. This Technical Report provides guidance on combinations of video properties that are widely used in industry production practices by documenting the usage of colour-related code points and description data for video content production.

MPEG is also working on two more “traditional” video coding standards, both included in MPEG-5.

- Essential Video Coding (EVC) will be a standard video codec that addresses business needs in some use cases, such as video streaming, where existing ISO video coding standards have not been as widely adopted as might be expected from their purely technical characteristics. EVC is now being balloted as DIS. Experts working on EVC are actively preparing for the Verification Tests to see how much “addressing business needs” will cost in terms of performance.

- Low Complexity Enhancement Video Coding (LCEVC) will be a standard video codec that leverages other video codecs to improve video compression efficiency while maintaining or lowering the overall encoding and decoding complexity. LCEVC is now being balloted as CD.

MPEG-I OMAF already supports (2018) 3 Degrees of Freedom (3DoF), where a user’s head can yaw, pitch and roll, but the position of the body is static. However, rendering flat 360° video, i.e. supporting head rotations only, may generate visual discomfort especially when rendering objects close to the viewer.

6DoF enables translation movements in horizontal, vertical, and depth directions in addition to 3DoF orientations. The translation support enables interactive motion parallax giving viewers natural cues to their visual system and resulting in an enhanced perception of volume around them.
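To see why objects close to the viewer are the critical case, here is a minimal sketch of motion parallax using plain geometry. The function name and the numbers (a 10 cm sideways head movement, objects at 0.5 m and 50 m) are illustrative assumptions of mine, not values taken from any MPEG specification.

```python
import math


def parallax_shift_deg(head_translation_m: float, object_distance_m: float) -> float:
    """Angular shift, in degrees, of an object initially straight ahead
    when the head moves sideways by head_translation_m."""
    return math.degrees(math.atan2(head_translation_m, object_distance_m))


head_move = 0.10  # illustrative sideways head movement, in metres

near = parallax_shift_deg(head_move, 0.5)   # object half a metre away
far = parallax_shift_deg(head_move, 50.0)   # object fifty metres away

print(f"near object shifts by {near:.2f} degrees")
print(f"far object shifts by {far:.2f} degrees")
```

With these assumed numbers the near object should appear to shift by about 11 degrees and the far one by about a tenth of a degree. A 3DoF renderer shows no shift at all in either case, so the mismatch with what the visual system expects is large for near objects and negligible for distant ones.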

MPEG is currently working on a video compression standard (MPEG-I Part 12 Immersive Video – MIV) that enables head-scale movements within a limited space. In the article On the convergence of Video and 3D Graphics I have provided some details of the technology being used to achieve the goal, comparing it with the technology used for Video-based Point Cloud Compression (V-PCC). MIV is planned to reach FDIS in October 2020.

Audio experts are working with the goal of leveraging MPEG-H 3D Audio to provide a full 6DoF Audio experience, viz. one where the user can localise sound objects in the horizontal and vertical planes, and perceive changes in a sound object’s loudness as the user moves around it, sound reverberation as in a real room, and occlusion when a physical object is interposed between a sound source and the user.
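As a rough illustration of the loudness cue, the sketch below applies the textbook inverse-distance law for a point source in free field. This is an assumption chosen for simplicity: it ignores reverberation and occlusion, and it is not the rendering model of MPEG-I Immersive Audio, which is still to be defined.

```python
import math


def level_change_db(ref_distance_m: float, new_distance_m: float) -> float:
    """Level change in dB when the listener moves from ref_distance_m to
    new_distance_m from a point source in free field (inverse-distance law)."""
    return -20.0 * math.log10(new_distance_m / ref_distance_m)


# Walking away from the source: the distance doubles from 1 m to 2 m.
moving_away = level_change_db(1.0, 2.0)

# Walking towards the source: the distance halves from 2 m to 1 m.
moving_closer = level_change_db(2.0, 1.0)

print(f"1 m -> 2 m: {moving_away:+.2f} dB")
print(f"2 m -> 1 m: {moving_closer:+.2f} dB")
```

Under this simple model each doubling of the distance lowers the level by about 6 dB, which is the kind of change a 6DoF renderer has to reproduce as the user walks around.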

The components of the system to be used to test proposals are

- Coding of audio sources: using MPEG-H 3D Audio

- Coding of meta-data: e.g. source directivity or room acoustic properties

- Audio and visual presentations for immersive VR worlds (correctly perceiving a virtual audio space without any visual cues is very difficult)

- Virtual Reality basketball court where the Immersive Audio renderer makes all the sounds in response to the user interaction of bouncing the ball and all “bounce sounds” are compressed and transmitted from server to client.

Evaluation of proposals will be done via

- Full, real-time audio-visual presentation

- Head-Mounted Display for “Unity” visual presentation

- Headphones and “Max 8” for audio presentation

- Proponent technology will run in real time in a Max VST3 plugin.

Currently this is the longest term MPEG-I project as FDIS is planned for January 2022.

MPEG Immersive Video and Audio share a number of features. The most important is the fact that both are not “compression standards”, in the sense that they use existing compression technologies on top of which immersive features are provided by metadata that will be defined by Immersive Video (part 12 of MPEG-I) and Immersive Audio (part 5 of MPEG-I). MPEG-I Part 7 Immersive Media Metadata will specify additional metadata coming from the different subgroups.

Video-based Point Cloud Compression is progressing fast as FDIS is scheduled for January 2020. The maturity of the technology, suitable for dense point clouds (see, e.g. https://mpeg.chiariglione.org/webtv?v=802f4cd8-3ed6-4f9d-887b-76b9d73b3db4) is reflected in related Systems activities that will be reported later.

Geometry-based Point Cloud Compression, suitable for sparse point clouds (see, e.g. https://mpeg.chiariglione.org/webtv?v=eeecd349-61db-497e-8879-813d2147363d) is following with a delay of 6 months, as FDIS is expected for July 2020.

MPEG is extending MPEG-4 Part 22 Open Font Format with an amendment titled “Colour font technology and other updates”.

Neural Networks are a new data type. Strictly speaking, MPEG is addressing the compression of Neural Networks trained for multimedia content description and analysis.

NNR, as MPEG experts call it, has taken shape very quickly. It was first aired and discussed at the October 2017 meeting; a Call for Evidence (CfE) was issued in July 2018 and a Call for Proposals (CfP) in October 2018. Nine responses were received at the January 2019 meeting, which enabled the group to produce the first working draft in March 2019. A very active group is working to produce the FDIS in October 2020.

Read more about NNR at Moving intelligence around.

With MPEG-G parts 1-3, MPEG has provided a file and transport format, compression technology, metadata specifications, protection support and standard APIs for the access of sequencing data in the native compressed format. With the companion parts 4 and 5 (reference software and conformance), due to reach FDIS level in April 2020, MPEG will provide a software implementation of a large part of the technologies in parts 1 to 3 and the means to test an implementation for conformity to MPEG-G.

January 2020 is the deadline for responding to the Call for Proposals on Coding of Genomic Annotations. The call is in response to the need of most biological studies based on sequencing protocols to attach different types of annotations, all associated with one or more intervals on the reference sequences, resulting from so-called secondary analyses. The purpose of the call is to acquire technologies that will make it possible to provide a compressed representation of such annotations.

MPEG’s involvement in scene description technologies dates back to 1996 when it selected VRML as the starting point for its Binary Format for Scenes (BIFS). MPEG’s involvement continued with MPEG-4 LASeR, MPEG-B Media Orchestration and MPEG-H Composition Information.

MPEG-I, too, cannot do without a scene description technology. As in the past, MPEG will start from an existing specification – glTF2 (https://www.khronos.org/gltf/) – selected because it is open, extensible and widely supported, with many loaders and exporters, and because it enables MPEG to extend glTF2 capabilities to audio, video and point cloud objects.

The glTF2-based Scene Description will be part 14 of MPEG-I.
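To give an idea of what extending glTF2 means in practice, here is a minimal sketch that builds a glTF 2.0 document as a Python dictionary and attaches an extension to a node. The `asset`, `scenes`, `nodes`, `extensionsUsed` and `extensions` properties are part of glTF 2.0; the extension name `EXAMPLE_timed_media`, its fields and the file name are purely hypothetical placeholders of mine, not the extensions MPEG will define.

```python
import json

# Hypothetical extension name used only for illustration; it is neither a
# Khronos-registered extension nor one defined by MPEG.
HYPOTHETICAL_EXT = "EXAMPLE_timed_media"

scene = {
    "asset": {"version": "2.0"},           # required by glTF 2.0
    "extensionsUsed": [HYPOTHETICAL_EXT],  # every extension used must be declared here
    "scene": 0,
    "scenes": [{"nodes": [0]}],
    "nodes": [
        {
            "name": "video_screen",
            "translation": [0.0, 1.5, -2.0],
            # Extension data hangs off the object it extends.
            "extensions": {
                HYPOTHETICAL_EXT: {
                    "kind": "video",        # hypothetical field
                    "uri": "example.mp4",   # hypothetical field and file name
                }
            },
        }
    ],
}

gltf_text = json.dumps(scene, indent=2)
print(gltf_text)
```

The point of the sketch is the mechanism, not the content: a loader that does not know the extension can still read the rest of the scene, which is one reason why an extensible format is a convenient starting point.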

Transport is a fundamental function of real-time media and MPEG continues to develop standards, not just for its own standards, but also for JPEG standards (e.g. JPEG 2000 and JPEG XS). This is what MPEG is currently doing in this vital application area:

- MPEG-2 part 1 Systems: a WD of an amendment on Carriage of VVC in MPEG-2 TS. This is urgently needed because broadcasting is expected to be a good user of VVC.

- MPEG-H part 10 MMT FEC Codes: an amendment on Window-based Forward Error Correcting (FEC) code

- MPEG-H part 13 MMT Implementation Guidelines: an amendment on MMT Implementation Guidelines.

The ISO Base Media File Format is an extremely fertile standards area that extends over many MPEG standards. This is what MPEG is doing in this vital application area:

- MPEG-4 part 12 ISO Base Media File Format: two amendments on Compact movie fragments and EventMessage Track Format

- MPEG-4 part 15 Carriage of NAL unit structured video in the ISO Base Media File Format: an amendment on HEVC Carriage Improvements and the start of an amendment on Carriage of VVC, a companion of Carriage of VVC in MPEG-2 TS

- MPEG-A part 19 Common Media Application Format: the start of an amendment on Additional media profile for CMAF. The expanding use of CMAF prompts the need to support more formats

- MPEG-B part 16 Derived Visual Tracks in ISOBMFF: a WD is available as a starting point

- MPEG-H part 12 Image File Format: an amendment on Support for predictive image coding, bursts, bracketing, and other improvements to give HEIF the possibility to store predictively encoded video

- MPEG-DASH part 1 Media presentation description and segment formats: start of a new edition containing CMAF support, events processing model and other extensions

- MPEG-DASH part 5 Server and network assisted DASH (SAND): the FDAM of Improvements on SAND messages has been released

- MPEG-DASH part 8 Session based DASH operations: a WD of Session based DASH operations has been initiated

- MPEG-I part 2 Omnidirectional Media Format: the second edition of OMAF has started

- MPEG-I part 10 Carriage of Video-based Point Cloud Compression Data: currently a CD.

The API area is increasingly being populated with MPEG standards:

- MPEG-I part 8 Network-based Media Processing is on track to become FDIS in January 2020

- MPEG-I part 11 Implementation Guidelines for NBMP is due to reach TR stage in April 2020

- MPEG-I part 13 Video decoding interface is a new interface standard to allow an external application to provide one or more rectangular video windows from a VVC bitstream.

MPEG is carrying out explorations in areas that may give rise to future standards: 6DoF, Dense Light Fields and Video Coding for Machines (VCM). VCM is motivated by the fact that, while traditional video coding aims to achieve the best video/image under certain bit-rate constraints having humans as consumption targets, the sheer quantity of data being/to be produced by connected vehicles, video surveillance, smart cities etc. makes the traditional human-oriented scenario inefficient and unrealistic in terms of latency and scale.

Twenty years ago the MPEG-7 project started the development of a comprehensive set of audio, video and multimedia descriptors. Other parts of MPEG-7 have added other standard descriptions of visual information for search and analysis applications. VCM may leverage that experience and frame it in the new context of expanded use of neural networks. Those interested can subscribe to the Ad hoc group on Video Coding for Machines at https://lists.aau.at/mailman/listinfo/mpeg-vcm and participate in the discussions at mpeg-vcm@lists.aau.at.

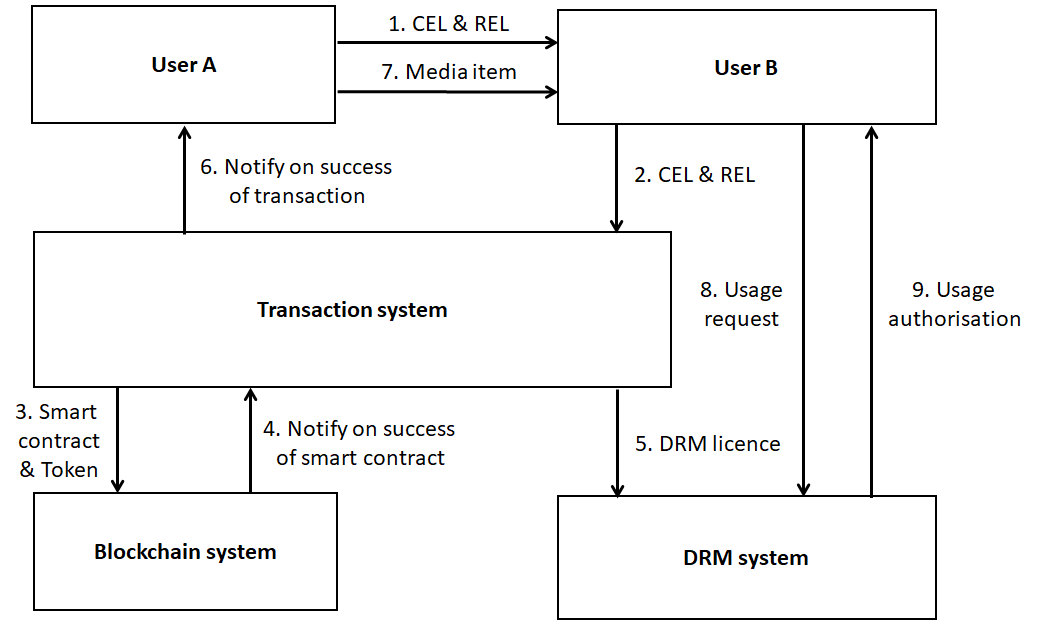

MPEG has developed several standards in the MPEG-21 media ecommerce framework addressing the issue of digital licences and contracts. Blockchain can execute smart contracts, but is it possible to translate an MPEG-21 contract to a smart contract?

Let’s consider the following use case where User A and B utilise a Transaction system that interfaces with a Blockchain system and a DRM system. If the transaction on the Blockchain system is successful, DRM System authorises User B to use the media item.

The workflow is

- User A writes a CEL contract and a REL licence and sends both to User B

- User B sends the CEL and the REL to a Transaction system

- Transaction system translates CEL to smart contract, creates token and sends both to Blockchain system

- Blockchain system executes smart contract, records transaction and notifies Transaction system of result

- If notification is positive Blockchain system translates REL to native DRM licence and notifies User A

- User A sends media item to User B

- User B requests DRM system to use media item

- DRM system authorises User B

In this use case, Users A and B can communicate using the standard CEL and REL languages, while Transaction system is tasked to interface with Blockchain system and DRM system.
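The workflow can be written down as an ordered sequence of (actor, action) events. The sketch below follows the steps exactly as listed; the function and type names are hypothetical, nothing here calls a real blockchain or DRM system, and stopping the workflow on a negative notification is my assumption, since the use case only describes the positive case.

```python
from typing import List, Tuple

Event = Tuple[str, str]  # (actor, action); a hypothetical record type for this sketch


def run_workflow(transaction_ok: bool) -> List[Event]:
    """Return the ordered events of the use case, following the listed steps."""
    events: List[Event] = [
        ("User A", "writes CEL contract and REL licence, sends both to User B"),
        ("User B", "sends CEL and REL to Transaction system"),
        ("Transaction system",
         "translates CEL to smart contract, creates token, sends both to Blockchain system"),
        ("Blockchain system",
         "executes smart contract, records transaction, notifies Transaction system"),
    ]
    if not transaction_ok:
        # Assumption: a negative notification ends the workflow here,
        # so no DRM licence is produced and no media item is sent.
        return events
    events += [
        ("Blockchain system", "translates REL to native DRM licence, notifies User A"),
        ("User A", "sends media item to User B"),
        ("User B", "requests DRM system to use media item"),
        ("DRM system", "authorises User B"),
    ]
    return events


for step, (actor, action) in enumerate(run_workflow(transaction_ok=True), start=1):
    print(f"{step}. {actor}: {action}")
```

Calling `run_workflow(transaction_ok=False)` returns only the first four events, which makes the dependency explicit: User B is authorised by the DRM system only if the transaction on the Blockchain system succeeds.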

A standard way to translate MPEG-21 contracts to smart contracts will assure users that the smart contract executed by a blockchain corresponds to the human-readable MPEG-21 contract.

Those interested in exploring this topic can subscribe to the Ad hoc group on MPEG-21 Contracts to Smart Contracts at https://lists.aau.at/mailman/listinfo/smart-contracts and participate in the discussions at smart-contracts@lists.aau.at.

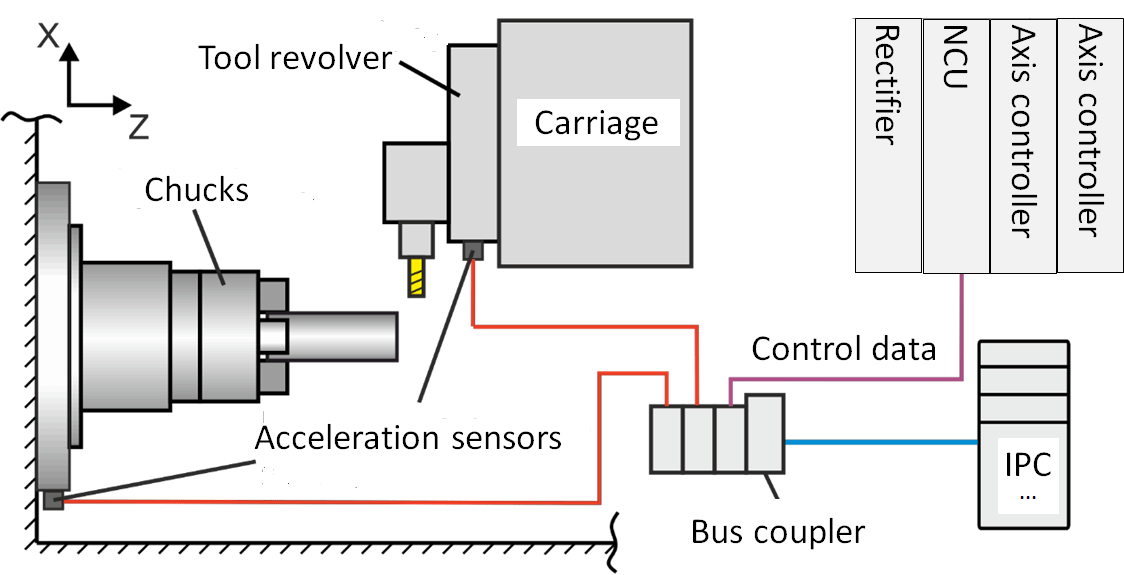

Mechanical systems are becoming more and more sophisticated in terms of functionalities but also in terms of capability to generate data. Virgin Atlantic says that a Boeing 787 may be able to create half a terabyte of data per flight. The diversity of data generated by an aircraft makes the problem rather challenging, but machine tools are less complex machines that may still generate 1 terabyte of data per year. The data are not uniform in nature and can be classified in 3 areas: Quality control, Management and Monitoring.

There are data available to test what it means to process machine tool data.

Other data

MPEG is deeply engaged in compressing two strictly non-media data types: Genomic data and Neural Networks, even though the latter is currently considered as a compression add-on to multimedia content description and analysis. It is also exploring compression of machine tool data.

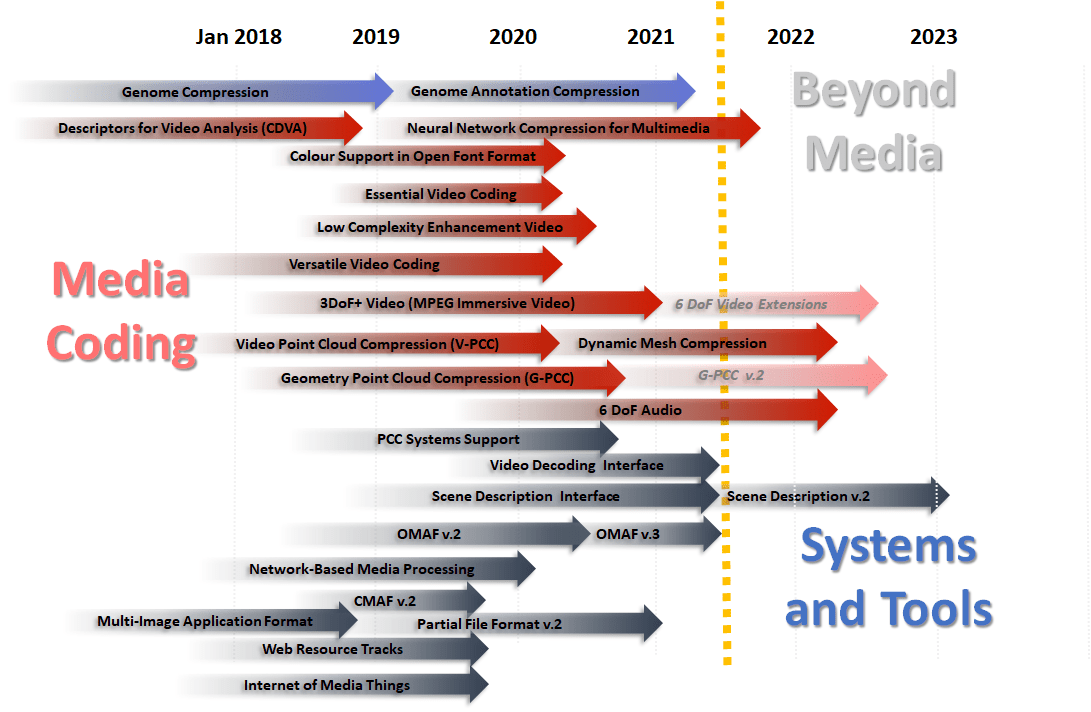

The MPEG work plan

The figure graphically illustrates the current MPEG work plan. Dimmed coloured items are not (yet) firm elements of the workplan.

Posts in this thread

- What is MPEG doing these days?

- MPEG is a big thing. Can it be bigger?

- MPEG: vision, execution, results and a conclusion

- Who “decides” in MPEG?

- What is the difference between an image and a video frame?

- MPEG and JPEG are grown up

- Standards and collaboration

- The talents, MPEG and the master

- Standards and business models

- On the convergence of Video and 3D Graphics

- Developing standards while preparing the future

- No one is perfect, but some are more accomplished than others

- Einige Gespenster gehen um in der Welt – die Gespenster der Zauberlehrlinge

- Does success breed success?

- Dot the i’s and cross the t’s

- The MPEG frontier

- Tranquil 7+ days of hard work

- Hamlet in Gothenburg: one or two ad hoc groups?

- The Mule, Foundation and MPEG

- Can we improve MPEG standards’ success rate?

- Which future for MPEG?

- Why MPEG is part of ISO/IEC

- The discontinuity of digital technologies

- The impact of MPEG standards

- Still more to say about MPEG standards

- The MPEG work plan (March 2019)

- MPEG and ISO

- Data compression in MPEG

- More video with more features

- Matching technology supply with demand

- What would MPEG be without Systems?

- MPEG: what it did, is doing, will do

- The MPEG drive to immersive visual experiences

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age