From what I have published so far on this blog it should be clear that MPEG is an unusual ISO working group (WG). To mention a few reasons: duration (31 years), early use of ICT (online document management system in use since 1995), size (1500 experts registered and 500 attending), organisation (the way 500 experts work on multiple projects), number of standards produced (more than any other JTC 1 subcommittee), and impact on the industry (1 T$ in devices and 0.5 T$ in services per annum).

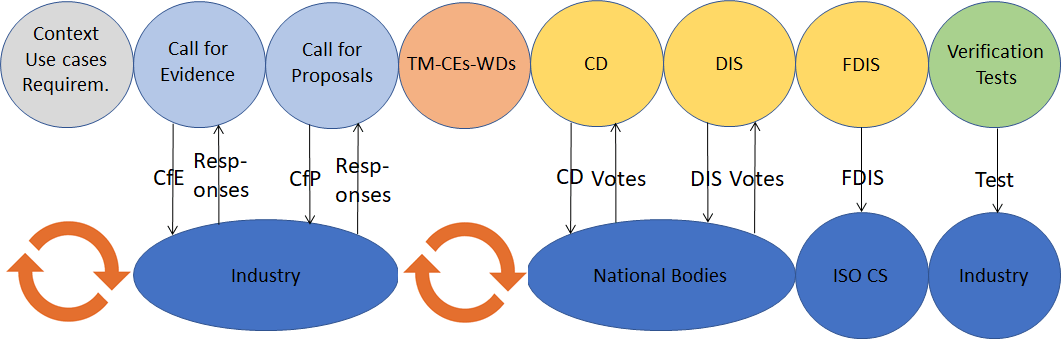

In “How does MPEG actually work?” I have talked about the life cycle of an MPEG standard, depicted in Figure 1.

Figure 1 – The MPEG standard development process

However, that article does not say much about the initial phase of the life cycle, i.e. the moment when the new ideas that will turn into standards are generated, which is not even identified in the figure. By looking into that moment, we will see again how the way new ideas for MPEG standards are generated makes MPEG an unusual WG.

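The structure of this article is: first a roundup of how the idea of each MPEG standard was born, then a look at how the parts of MPEG standards were born.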

The word “standard” is used to indicate both a series of standards (identified by the 5-digit ISO number) and a part of a standard (identified by 5 digits, a dash and a number). In this article we will use “standard” for the former and “part of standard” for the latter.

MPEG-1 and 2

The idea of the first two MPEG standards was generated in 1988 when a new “Moving Picture Experts Group” was created in JTC 1/SC 2/WG 8. The original MPEG work items were (the acronym DSM stands for Digital Storage Media):

- Coding of moving pictures for DSM’s having a throughput of 1-1.5 Mbit/s (1988-1990)

- Coding of moving pictures for DSM’s having a throughput of 1.5-5 Mbit/s (1990-1992)

- Coding of moving pictures for DSM’s having a throughput of 5-60 Mbit/s (to be defined).

I was the one who proposed the first work item (video coding for interactive video on compact disc), later to be named MPEG-1, while Hiroshi Yasuda added the second (later to be named MPEG-2). In MPEG-2 the word DSM was kept in order not to upset other standards groups (but the eventual title of MPEG-2 became “Generic coding of moving pictures and associated audio information”). The third work item was little more than a placeholder. The time assigned to develop the standards was definitely optimistic: it took two more years than planned for both MPEG-1 and MPEG-2 to reach FDIS (and even so it was really a rush).

The third work item was the first, and an early, case of a new standard idea that was “miscarried”: it was combined with the second work item. That is the reason why there is no MPEG-3, although there is MP3, which is something else altogether.

MPEG-4

MPEG-4 was born with a similar process and was motivated by the fact that MPEG-1 and MPEG-2 targeted high bitrates, while low bitrates were also important and not covered by MPEG standards. Eventually MPEG-4 became the container of foundational digital media technologies such as audio for internet distribution, the file format and the open font format.

MPEG-7

MPEG-7 had a different story. It was proposed by Italy to SC 29 in response to the prospect of users having to navigate 500 TV channels to find what they wanted to see. The study in SC 29 went nowhere, so MPEG took it over and developed a complete standard framework for media metadata.

MPEG-21

MPEG-21 was driven by the upheaval brought about by MP3, as exemplified by Napster. The response was to create a complete standard framework for media ecommerce. The framework included the definition of Digital Items, standards for Rights and Contract Expression Languages, and much more.

MPEG-A

MPEG-A was the result of an investigation carried out by the MPEG plenary. As a result of the investigation MPEG realised that, beyond standards for individual media, it was necessary to also develop standard combinations of media encoded according to MPEG standards.

MPEG-B, MPEG-C and MPEG-D

MPEG-B, -C and -D were proposed by MPEG at a time when the Systems-Video-Audio trinity no longer appeared to be the response to standards needs, while individual Systems, Video and Audio standards were still in demand. All three standards include parts that can be classified as Systems, Video and Audio. As a note, the Systems, Video and Audio trinity is alive and kicking. Actually, it has become a quaternity with Point Clouds.

MPEG-E

MPEG-E was driven by the idea of providing the industry with a standard specifying the architecture and software components of a digital media consumption device.

MPEG-V

MPEG-V was probably the first standard that was not the result of a decision by MPEG to propose a new standard but the result of individual proposals coming from two different directions: virtual worlds (the much touted Second Life of the mid-2000s) and the enhanced user experience made possible by existing and new sensors and actuators. MPEG succeeded in developing a comprehensive standard framework for interaction of humans with and between virtual worlds.

MPEG-M

MPEG-M was influenced by work done within the Digital Media Project (DMP) to address a standard middleware for digital media. MPEG-M became an extensible standard framework that specifies the architecture with High Level API, the middleware composed of MPEG technologies with Low Level API, and the aggregation of services leveraging those technologies.

MPEG-U

MPEG-U was probably the first standard whose birth happened in a subgroup – Systems. Eventually MPEG-U became a standard for exchanging, displaying, controlling and communicating widgets with other entities, and for advanced interaction.

MPEG-H and DASH

MPEG-H and DASH were two standards born out of the strongly felt need to overhaul the then 15-year-old MPEG-2 Transport Stream (TS). The result was that the market 1) strongly reaffirmed its confidence in MPEG-2 TS, 2) enthusiastically embraced MPEG-H (integrated broadcast-broadband distribution over IP) and 3) widely deployed DASH (media distribution accommodating unpredictable variations of available bandwidth), inspired by and developed in collaboration with 3GPP.

MPEG-I

MPEG-I was the result of the drive of the industry toward immersive services and the devices enabling them. This is currently the MPEG flagship project, now with 14 parts but certainly designed to compete with the number of parts (34) of MPEG-4.

MPEG-CICP

MPEG-CICP was a “housekeeping” action with the goal of collecting, in a single place (actually 4 documents), code points for media formats that are not specific to one standard.

MPEG-G

MPEG-G resulted from the proposal of a single organisation for a framework standard for storage, compression and access of non-media data (DNA reads). The organisation proposed the activity, but of course the development of the standard was fully open and in line with the MPEG process of Figure 1.

MPEG-IoMT

MPEG-IoMT resulted from a single organisation proposing a framework standard for media-specific Things (as defined in the context of Internet of Things), i.e. cameras and displays.

MPEG-5

MPEG-5 resulted from the proposal of a group of companies who needed a video compression standard that addressed the business needs of some use cases, such as video streaming, where existing ISO video coding standards have not been as widely adopted as might be expected from their purely technical characteristics. This requirement was not met by the state-of-the-art MPEG video coding standards and the proposal was, after much debate, accepted by the MPEG plenary.

Looking at the birth of parts

From the roundup above the reader may have gotten the impression that most MPEG standards are the result of a collective awareness of the need for a standard. As I have described above, this is largely, but not exclusively, true of standards identified by the 5-digit ISO number. But if we look at the parts of MPEG standards, we get a picture that does not contradict the first statement but provides a different view.

In its 31 years of activity MPEG has produced parts of standards in the following areas: Video Coding, Audio Coding, 3D Graphics Coding, Font Coding, Digital Item Coding, Sensors and Actuators Data Coding, Genome Coding, Neural Network Coding, Media Description, Media Composition, Systems support, Intellectual Property Management and Protection (IPMP), Transport, Application Formats, Application Programming Interfaces (API), Media Systems, Reference implementation and Conformance.

In the following we will see how the nature of standard parts influences the birth of MPEG standards.

Video Coding

Video coding standards are mostly driven by the realisation that some existing MPEG standards are no longer aligned with the progress of technology. MPEG-2 Video was developed because it promised more compression than MPEG-1 Video, MPEG-4 Visual because there was no MPEG Video Coding standard for very low bitrates (much below 1 Mbit/s), MPEG-4 AVC because it promised more compression than MPEG-4 Visual, MPEG-H HEVC because it promised more compression than MPEG-4 AVC and MPEG-I VVC because it promised more compression than MPEG-H HEVC.

“Forty years of video coding and counting” tells the full story of the MPEG video compression standards.

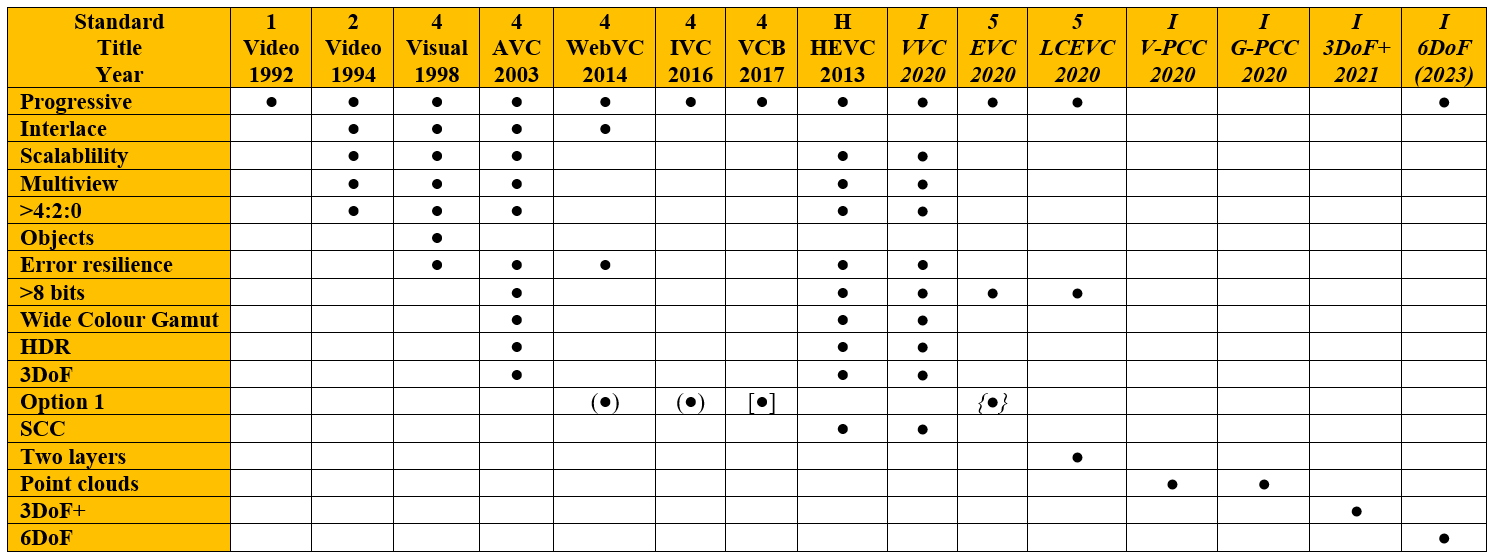

This is not the full story, though. Table 1 is a version of the table in “More video with more features”, slightly edited to accommodate recent evolutions. The table describes the functionalities, beyond the basic compression functionality, that have been added over the years to MPEG Video Coding standards. The birth of each of these proposals for new functionalities has been the result of much wrangling between those who wanted to add the functionalities because they believed the necessary technology was available and those who saw the technology as immature and not ready for a standard.

Table 1 – Functionalities added to MPEG video coding standards

Audio Coding

The companion Audio Coding standards had a much different evolution. Unlike Video, whose bitrate was and is so high and growing as to justify new generations of compression standards, Audio Coding was driven to a large extent by applications and functionality, with compression always playing a role. “Thirty years of audio coding and counting” talks first about MP3, then about MPEG-4 AAC in all its shapes, and then about MPEG Surround, Spatial Audio Object Coding (SAOC), Unified Speech and Audio Coding (USAC), Dynamic Range Control (DRC), MPEG-H 3D Audio and the coming MPEG-I Immersive Audio. MPEG-7 Audio should not be forgotten even though compression is applied to descriptors, not to audio itself. The birth of each of these Audio Coding standards is a story in itself, ranging from being a part of a bigger plan developed at MPEG plenary level, to specific Audio standards agreed by the Audio group, to a specific functionality to be added to a standard either developed or being developed.

3D Graphics Coding

The 3DG group worked on 2D/3D graphic compression, and then on Animation Framework eXtension (AFX). In the case of 2D/3D graphic compression, the birth of the standards was the result of an MPEG plenary decision, but the standards kept on evolving by adding new technologies for new functionalities, most often at the instigation of individual experts and companies.

Talking of 3D Graphics Coding, I could quote Rudyard Kipling’s verse

Oh, East is East, and West is West, and never the twain shall meet

and associate West with Video Coding and East with 3D Graphics Coding (or vice versa). Indeed, it looked like Video and 3D Graphics Coding would comply with Kipling’s verse until Point Cloud Compression (PCC) came to the fore. Proposed by an individual expert and under exploration for quite some time, it suddenly became one of the sexiest MPEG developments, merging Video and 3D Graphics in an intricate way.

We can indeed say with Kipling

But there is neither East nor West, Border, nor Breed, nor Birth,

When two strong men stand face to face, though they come from the ends of the earth!

Font Coding

Font Coding is again a new story. This time the standard was proposed by the 3 companies – Adobe, Apple and Microsoft – who had developed the OpenType specification. The reason was that it had become burdensome for them to maintain and expand the specification in response to market needs. The MPEG plenary accepted the request, took over the task and developed several parts of several standards in multiple editions. As participants in the Font Coding activity do not typically attend MPEG meetings, new functionalities are mostly added at the request of experts or companies on the email reflector of the Font Coding ad hoc group.

Digital Item Coding

The initiative to start MPEG-21 was taken by the MPEG plenary, but the need to develop the 22 parts of the standard was largely identified by the subgroups – Requirements, Multimedia Description Schemes (MDS) and Systems.

Sensors and Actuators Data Coding

The birth of MPEG-V was the decision of the MPEG plenary, but the parts of the standard kept on evolving at the instigation of individual experts and companies. Four editions of the standard were produced.

Genome Coding

Development of Part 1 Storage and Transport and Part 2 Compression of a Genome Coding standard was obviously a major decision of the MPEG plenary. The need for other parts of the MPEG-G standard, namely Part 3 Metadata and API, and Part 6 Compression of Annotations, was identified by the Requirements group working on the standard.

Neural Network Coding

Neural Network Coding was proposed at the October 2017 meeting. The MPEG plenary was in doubt whether to call this “compression of another data type” (neural networks) or something in line with its “media” mandate. Eventually it opted to call it “Compression of neural networks for multimedia content description and analysis”, which is partly what it really is: an extended new version of CDVS and CDVA with embedded compression of descriptors. Neural Network Compression (NNC) is now (October 2019) at Working Draft 2 and is planned to reach FDIS in April 2021. Experts are too busy working on the current scope to have the time to think of more features, but we know there will be more because the technologies in the current draft do not support all the requirements.

Media Description

As mentioned above, the MPEG plenary decided to develop MPEG-7 seeing the inability of SC 29 to jump on the opportunity of a standard that would describe media in a standard way to help users access the content of their interest. The need for the early parts of MPEG-7 was largely identified by the groups working on MPEG-7. The need for later standards, such as CDVS and CDVA, was identified by a company (CDVS) and by a consortium (CDVA). The need for the latest standard (Neural Network Compression) was identified by the MPEG plenary.

Media Composition

Until 1995 MPEG was really a group working mostly for Broadcasting and Consumer Electronics (but MP3 was a sign of things to come) and did not have the need for a standard Media Composition technology. MPEG-4 was the standard that extended the MPEG domain to the IT world, and the “Coding of audio-visual objects” title of MPEG-4 meant that a Media Composition technology was needed.

The MPEG plenary took the decision to extend the Virtual Reality Modeling Language (VRML), establishing contacts with the group responsible for it. The MPEG plenary did the same when a company proposed a new Media Composition technology based on a W3C recommendation. A company proposed to develop the MPEG Orchestration standard. After much investigation and debate, the Requirements and Systems groups have recently come to the conclusion that the MPEG-I Scene Description should be based on an extension to Khronos’ glTF2.

Systems support

Systems support was the first non-video need after audio identified by the MPEG plenary a few months after the establishment of MPEG. Today Systems support standards are mostly, but not exclusively, in MPEG-B. This standard contains parts that were the result of a decision of the MPEG plenary (e.g. Binary MPEG format for XML and Common encryption), the proposal of the Systems group (e.g. Sample Variants and Partial File Format) or the proposal of a company (e.g. Green metadata).

Intellectual Property Management and Protection (IPMP)

IPMP parts appear in MPEG-2, MPEG-4 and MPEG-21. They were triggered by the same context that produced MPEG-21, i.e. how to combine the liquidity of digital content with the need to guarantee a return to rights holders.

The need for MPEG-4 IPMP was identified by the MPEG plenary, but MPEG-2 and MPEG-21 IPMP were proposed by the Systems and MDS groups, respectively.

Transport

Although partly already in MPEG-1, Transport technology in MPEG flourished in MPEG-2 with Transport Stream and Program Stream. The development of MPEG-2 Systems was a major MPEG plenary decision, as was the case for MPEG-4 Systems, which at the time included the Scene description technology. MPEG-2 TS has dominated the broadcasting market (and is even used by AOM). As said above, MPEG-H MPEG Media Transport (MMT) and DASH are two major transport technologies whose development was identified and decided by the MPEG plenary. All 3 standards have been published several times (MPEG-2 Systems 7 times) as a result of needs identified by the Systems group or individual companies.

Application Formats

The first Application Formats were launched by the MPEG Plenary and the following Application Formats by different MPEG groups. Later individual companies or consortia proposed several Application Formats. The Common Media Application Format (CMAF) proposed by Apple and Microsoft is one of the most successful MPEG standards.

Application Programming Interfaces (API)

MPEG Extensible Middleware (MXM) was the first MPEG API standard. The decision to do this was made by the MPEG plenary but the proposal was made by a consortium. The MPEG-G Metadata and API standard was proposed by the Requirements group. The IoMT API standard was proposed by the 3D Graphics group.

Media Systems

This area collects the parts of standards that describe or specify the architecture underpinning MPEG standards. This is the case of part 1 of MPEG-7, MPEG-E, MPEG-V, MPEG-M, MPEG-I and MPEG-IoMT. These parts are typically kicked off by the MPEG plenary.

Reference implementation and Conformance

MPEG takes seriously its statement that MPEG standards should be published in two languages – one that is understood by humans and another that is understood by a machine – and that the two should be equivalent in terms of specification. The reference software – the version of the specification understood by a machine – is used to test conformance of an implementation to the specification. For these reasons the need for reference software and conformance is identified by the MPEG plenary.

With the understanding that all decisions are formally made by the MPEG plenary, the trigger of an MPEG decision happens at different levels. Very often – and more often as MPEG matures and its organisation becomes more solid – the trigger is in the hands of an individual expert, a group of experts, or an MPEG subgroup.

Posts in this thread

- The birth of an MPEG standard idea

- More MPEG Strengths, Weaknesses, Opportunities and Threats

- The MPEG Future Manifesto

- What is MPEG doing these days?

- MPEG is a big thing. Can it be bigger?

- MPEG: vision, execution, results and a conclusion

- Who “decides” in MPEG?

- What is the difference between an image and a video frame?

- MPEG and JPEG are grown up

- Standards and collaboration

- The talents, MPEG and the master

- Standards and business models

- On the convergence of Video and 3D Graphics

- Developing standards while preparing the future

- No one is perfect, but some are more accomplished than others

- Einige Gespenster gehen um in der Welt – die Gespenster der Zauberlehrlinge

- Does success breed success?

- Dot the i’s and cross the t’s

- The MPEG frontier

- Tranquil 7+ days of hard work

- Hamlet in Gothenburg: one or two ad hoc groups?

- The Mule, Foundation and MPEG

- Can we improve MPEG standards’ success rate?

- Which future for MPEG?

- Why MPEG is part of ISO/IEC

- The discontinuity of digital technologies

- The impact of MPEG standards

- Still more to say about MPEG standards

- The MPEG work plan (March 2019)

- MPEG and ISO

- Data compression in MPEG

- More video with more features

- Matching technology supply with demand

- What would MPEG be without Systems?

- MPEG: what it did, is doing, will do

- The MPEG drive to immersive visual experiences

- There is more to say about MPEG standards

- Moving intelligence around

- More standards – more successes – more failures

- Thirty years of audio coding and counting

- Is there a logic in MPEG standards?

- Forty years of video coding and counting

- The MPEG ecosystem

- Why is MPEG successful?

- MPEG can also be green

- The life of an MPEG standard

- Genome is digital, and can be compressed

- Compression standards and quality go hand in hand

- Digging deeper in the MPEG work

- MPEG communicates

- How does MPEG actually work?

- Life inside MPEG

- Data Compression Technologies – A FAQ

- It worked twice and will work again

- Compression standards for the data industries

- 30 years of MPEG, and counting?

- The MPEG machine is ready to start (again)

- IP counting or revenue counting?

- Business model based ISO/IEC standards

- Can MPEG overcome its Video “crisis”?

- A crisis, the causes and a solution

- Compression – the technology for the digital age