Introduction

Quality pervades our life: we talk of quality of life and we choose things on the basis of declared or perceived quality.

A standard is a product, and as such may also be judged, although not exclusively, in terms of its quality. MPEG standards are no exception: quality has been a feature that MPEG has considered of paramount importance since its early days.

Cosmesis is related to quality, but is a different beast. You can apply cosmesis at the end of a process, but that will not give quality to a product issued from that process. Quality must be an integral part of the process, or it will not be there at all.

In this article I will describe how MPEG has embedded quality in all phases of its standard development process and how it has measured quality in some illustrative cases.

Quality in the MPEG process

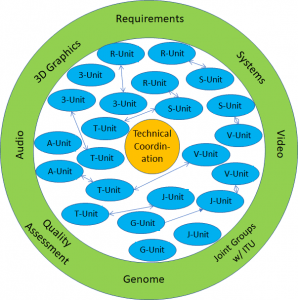

The business of MPEG is to produce standards that process information in such a way that users do not notice, or notice as little as possible, the effect of that processing when the standard is implemented in a product or service.

When MPEG considers the development of a new standard, it defines the objective of the standard (say, compression of video in a particular range of resolutions), the range of bitrates and the functionality. Typically, MPEG makes sure that it can deliver the standard with the agreed functionality by issuing a Call for Evidence (CfE). Industry members are requested to provide evidence that their technology is capable of achieving part or all of the identified requirements.

Quality is an important, if not essential, parameter for making a go/no-go decision at this stage. When MPEG assesses the CfE submissions, it may find that established quality assessment procedures are inadequate. That was the case with the Call for Evidence on High-Performance Video Coding (HVC) of 2009. The high number of submissions received required the design of a new test procedure: the Expert Viewing Protocol (EVP). The EVP test method later became Recommendation ITU-R BT.2095. While running any other ITU recommendation of that time would have required more than three weeks, the EVP allowed all the submissions to be tested in three days.

If MPEG has become confident of the feasibility of the new standard from the results of the CfE, a Call for Proposals (CfP) is issued with attached requirements. These can be considered as the terms of the contract that MPEG stipulates with its client industries.

Testing of CfP submissions allows MPEG to develop a Test Model and initiate Core Experiments (CE). These aim to achieve optimisation of a part of the entire scheme.

In most cases, assessing the results of CEs involves quality evaluation. In the case of CfP responses, subjective testing is necessary because there are typically large differences between the different coding technologies proposed. However, in the assessment of CE results, where smaller effects are involved, objective metrics are typically, but not exclusively, used because formal subjective testing is not feasible for logistical or cost reasons.
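To make "objective metric" concrete, here is a minimal sketch of peak signal-to-noise ratio (PSNR), one widely used objective measure for video. The text above does not say which metrics a given Core Experiment uses, so PSNR is an assumption chosen for illustration, and the sample values are made up.

```python
import math


def psnr(reference, decoded, max_value=255):
    """Return the PSNR in dB between two equally sized lists of samples.

    max_value is the peak sample value (255 assumes 8-bit samples).
    """
    if len(reference) != len(decoded) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error between original and decoded samples.
    mse = sum((r - d) ** 2 for r, d in zip(reference, decoded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical 8-bit luma samples before and after coding.
reference = [52, 55, 61, 66, 70, 61, 64, 73]
decoded = [54, 55, 60, 66, 69, 62, 64, 72]
print(f"PSNR: {psnr(reference, decoded):.2f} dB")
```

A metric of this kind is cheap to compute for every CE run, which is why it can stand in for formal viewing sessions when the differences being measured are small.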

When the development of the standard is completed, MPEG engages in a process called Verification Tests, which produces a publicly available report. This can be considered as the proof on the part of the supplier (MPEG) that the terms of the contract with its customers have been satisfied.

Samples of MPEG quality assessment

MPEG-1 Video CfP

The first MPEG CfP quality tests were carried out at the JVC Research Center in Kurihama (JP) in November 1989. 15 proposals of video coding algorithms operating at a maximum bitrate of 1.5 Mbit/s were tested and used to create the first Test Model at the following Eindhoven meeting in February 1990 (see the Press Release).

MPEG-2 Advanced Audio Coding (AAC)

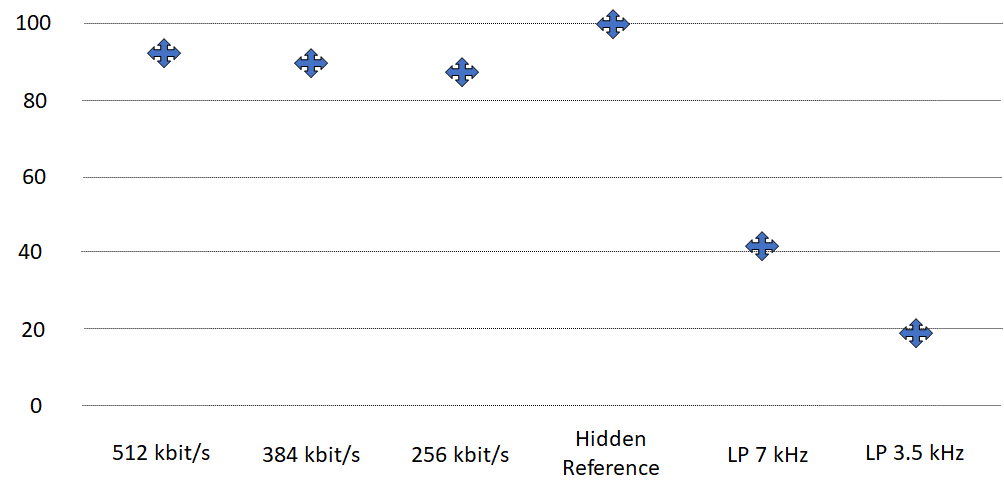

In February 1998 the Verification Test allowed MPEG to conclude that “when auditioning using loudspeakers, AAC coding according to the ISO/IEC 13818-7 standard gives a level of stereo performance superior to that given by MPEG-1 Layer II and Layer III coders” (see the Verification Test Report). This showed that the goal of high audio quality at 64 kbps per channel for MPEG-2 AAC had been achieved.

Of course that was “just” MPEG-2 AAC with no substantial encoder optimisation. More than 20 years of MPEG-4 AAC progress have brought down the bitrate per channel.

MPEG-4 Advanced Video Coding (AVC) 3D Video Coding CfP

The CfP for new 3D (stereo & auto-stereo) technologies was issued in 2012 and received a total of 24 complete submissions. Each submission produced 24 files representing the different viewing angles for each test case. Two sets of two and three viewing angles were blindly selected and used to synthesise the stereo and auto-stereo test files.

The test was carried out on standard 3D displays with glasses and on auto-stereoscopic displays. A total of 13 test laboratories took part, running a total of 224 test sessions and hiring around 5000 non-expert viewers. Each test case was run by two laboratories, making it a fully redundant test.

MPEG-H High Efficiency Video Coding (HEVC) CfP

The HEVC CfP covered 5 different classes of content, with resolutions from WQVGA (416×240) up to 2560×1600. For the first time MPEG introduced two sets of constraints (low delay and random access) for different classes of target applications.

The HEVC CfP was a milestone because it required the biggest testing effort ever performed by any laboratory or group of laboratories until then. The CfP generated a total of 29 submissions and 4205 coded video files, plus the set of anchor coded files. Three testing laboratories took part in the tests, which lasted four months and involved around 1000 naïve (non-expert) subjects allocated to a total of 134 test sessions.

A common test set of about 10% of the total testing effort was included to monitor the consistency of results from the different laboratories. With this procedure it was possible to detect a set of low quality test results from one laboratory.
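One way such a consistency check could work is sketched below. It is an illustration only: the text does not describe the procedure MPEG actually applied, and the laboratory names, scores and the 0.9 threshold are all hypothetical. Each laboratory's mean scores on the common test set are correlated against the per-item median across laboratories, and a laboratory with a low correlation is flagged.

```python
import math
import statistics


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    norm_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    norm_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if norm_x == 0 or norm_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / (norm_x * norm_y)


def inconsistent_labs(common_scores, threshold=0.9):
    """Return the labs whose scores correlate poorly with the cross-lab median.

    common_scores maps a lab name to its mean scores on the common test set,
    listed in the same item order for every lab. The threshold is an
    assumed value, not one taken from any MPEG procedure.
    """
    # Per-item median across labs is robust to a single outlying lab.
    consensus = [statistics.median(item) for item in zip(*common_scores.values())]
    return [
        lab
        for lab, scores in common_scores.items()
        if pearson(scores, consensus) < threshold
    ]


# Hypothetical mean scores from three labs on five common test items.
common_scores = {
    "lab_a": [8.1, 6.9, 5.2, 3.8, 2.1],
    "lab_b": [7.9, 7.2, 5.0, 4.1, 2.4],
    "lab_c": [5.0, 6.5, 4.0, 6.0, 4.8],
}
print(inconsistent_labs(common_scores))
```

With these made-up numbers, the first two laboratories track each other closely while the third does not follow the same trend, so only the third is reported.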

Point Cloud Compression (PCC) CfP

The CfP was issued to assess how well a proposed PCC technology performed, using 2D representations of the content synthesised with PCC techniques. The resulting video could then be evaluated by means of established subjective assessment protocols.

Video clips for each of the received submissions were produced after a careful selection of the rendering conditions. The clips were generated using a video rendering tool. This was used to generate, under the same conditions, two different video clips for each of the received submissions: a rotating view of a fixed synthesised image and a rotating view of a moving synthesised video clip. The rotations were selected in a blind way and the resulting video clips were subjectively assessed to rank the submissions.
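As a rough illustration of how subjective scores can turn into a ranking, the sketch below computes a mean opinion score (MOS) and an approximate 95% confidence interval for each submission, then sorts by MOS. The votes and submission names are hypothetical, and the normal-approximation interval is an assumption rather than the analysis prescribed by any specific test protocol.

```python
import math
import statistics


def mos_with_ci(votes, z=1.96):
    """Return (MOS, half-width of an approximate 95% confidence interval)."""
    mos = statistics.mean(votes)
    # Normal approximation; assumes at least two votes per submission.
    half_width = z * statistics.stdev(votes) / math.sqrt(len(votes))
    return mos, half_width


def rank_submissions(votes_by_submission):
    """Sort submissions from highest to lowest MOS."""
    scored = {name: mos_with_ci(v) for name, v in votes_by_submission.items()}
    return sorted(scored.items(), key=lambda item: item[1][0], reverse=True)


# Hypothetical votes from six viewers per submission.
votes = {
    "submission_1": [7, 8, 6, 7, 8, 7],
    "submission_2": [4, 5, 5, 3, 4, 5],
    "submission_3": [9, 8, 9, 7, 8, 9],
}
for name, (mos, half_width) in rank_submissions(votes):
    print(f"{name}: MOS {mos:.2f} +/- {half_width:.2f}")
```

When the confidence intervals of two submissions overlap, their order in the ranking should not be read as a significant difference.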

Conclusions

Quality is what end users of media standards value as the most important feature. To respond to this requirement, MPEG has designed a standards development process that is permeated by quality considerations.

MPEG has no resources of its own. Therefore, sometimes it has to rely on the voluntary participation of many competent laboratories to carry out subjective tests.

The domain of media is very dynamic and, very often, MPEG cannot rely on established methods – both subjective and objective – to assess the quality of compressed new media types. Therefore, MPEG is constantly innovating the methodologies it uses to assess media quality.
