Introduction

These days MPEG is adapting its working methods, which rely on physical meetings, to cope with the constraints of the Covid-19 pandemic. But this is not the first time that MPEG has done work, and excellent work at that, without the help of physical meetings. This article tells the story of that first and indispensable experience of developing standards outside regular MPEG meetings.

Compression is a basic need

In the early 2000s, MPEG received an unexpected but, in hindsight, obvious proposal. MPEG-4 defines several compressed media types (audio, video and 3D graphics are the obvious choices) but also the technology to compose the different media. Text information, presented in a particular font, is increasingly an essential component of a multimedia presentation, and the font data must be accessible together with the text objects in the presentation. However, while thousands of fonts are available today to content authors, only a few fonts (or just one) may be available on the devices used to consume media content. Hence the need to compress and stream font information.

So MPEG began work on the new “font” media type and, in 2003, produced a new standard, MPEG-4 Part 18 – Font compression and streaming, which relied on OpenType as the input font data format.

Fonts need more from MPEG

Later, MPEG received another proposal, this time from Adobe and Microsoft, the original developers of OpenType, a format for scalable computer fonts. By that time, OpenType was already widely used in the PC world and was seen as an attractive technology for adoption by consumer electronics and mobile devices. Its user community kept requesting support for new features, and many new usage scenarios had emerged. As MPEG knows only too well, responding to such requests from different constituencies requires a significant investment of resources. Adobe and Microsoft asked MPEG whether it could take on that task. I don’t have to tell you what the response was.

Not all technical communities are the same

A new problem arose, though. The overlap between the traditional MPEG membership and the OpenType community was minimal, save for Vladimir Levantovsky of Monotype, who had brought the font compression and streaming proposal to MPEG. So a novel arrangement was devised. MPEG would create an ad hoc group that would do the work through online discussions and, once the group had reached consensus, Vladimir would bring the result of the discussions to MPEG in the form of a “report of the ad hoc group”. The report would be reviewed by the Systems group and the result integrated with the rest of MPEG’s work, as is done for all other subgroups. The report would often be accompanied by the additional material needed to produce a draft standard, which MPEG would then submit to National Body voting. The disposition of comments and the new version of the standard would be developed within the ad hoc group and submitted to MPEG for action.

This working method made it possible to draw on the vast and dispersed community of language and font experts, who would never have been willing or able to attend physical meetings, to develop, maintain and extend the standard for 16 years, with outstanding results. MPEG has produced four editions of the standard, called Open Font Format (OFF). As always, each edition of the standard has been updated by several amendments (extensions to the standard) before a new edition was created.

What does OFF offer to the industry?

High quality glyph scaling

A glyph is a unit of text display that provides the visual representation of a character, such as the Latin letter a, the Greek letter, the Japanese hiragana letter あ or the Chinese character 亞. In an output device, the scaled outline of a glyph is represented by dots (or pixels) in a process called rasterization. Approximating a complex glyph outline with dots can lead to significant distortions, especially when the number of dots per unit length is low.
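To make the distortion concrete, here is a minimal sketch in Python (chosen only for illustration) of a deliberately naive rasterizer that samples a single vertical stem at pixel centres. The function name, the 8-pixel row and the 1.4-pixel stem width are assumptions made for the example, not anything defined by OFF. The same stem, shifted by a fraction of a pixel, comes out either one or two pixels wide.

```python
def rasterize_stem(left, width, pixels=8):
    """Turn on each pixel whose centre (x + 0.5) lies inside [left, left + width)."""
    right = left + width
    return "".join("#" if left <= x + 0.5 < right else "." for x in range(pixels))

# The same 1.4-pixel-wide stem, placed at two different sub-pixel offsets.
for left in (1.0, 1.3):
    row = rasterize_stem(left, 1.4)
    print(f"left={left}: {row}  ({row.count('#')} px wide)")
# left=1.0: .#......  (1 px wide)
# left=1.3: .##.....  (2 px wide)
```

In a real glyph, two stems of identical design width could therefore be drawn with different thicknesses on the same line of text, which is exactly the kind of inconsistency that hinting addresses.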

OFF fonts introduced a programmatic approach: a technology called “hinting” deals with these distortions through instructions encoded in the font. The OFF standard helped overcome the obstacles posed by proprietary font hinting and other patented technologies by making them easily accessible to implementers, and it enabled multiple software and device vendors to obtain Fair, Reasonable and Non-Discriminatory (FRAND) licences.
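The goal of hinting can be illustrated with a minimal sketch. Real OFF hinting uses a bytecode instruction set executed by the rasterizer; the code below does not model that instruction set. It only assumes, for illustration, the simplest possible "grid fitting" rule: snap the stem's left edge to the pixel grid and round its width to a whole number of pixels (at least one) before rasterizing. The function names are hypothetical.

```python
def grid_fit_stem(left, width):
    """Snap the left edge to the pixel grid and round the width to whole pixels (at least 1)."""
    fitted_left = float(round(left))
    fitted_width = float(max(1, round(width)))
    return fitted_left, fitted_width

def rasterize_stem(left, width, pixels=8):
    """Turn on each pixel whose centre (x + 0.5) lies inside [left, left + width)."""
    right = left + width
    return "".join("#" if left <= x + 0.5 < right else "." for x in range(pixels))

# The stem that rendered one or two pixels wide without hinting now renders identically.
for left in (1.0, 1.3):
    print(f"left={left}: {rasterize_stem(*grid_fit_stem(left, 1.4))}")
# left=1.0: .#......
# left=1.3: .#......
```

Adjusting the outline before sampling, rather than post-processing the pixels, is what lets hints keep related features (stems, serifs, heights) consistent with one another.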

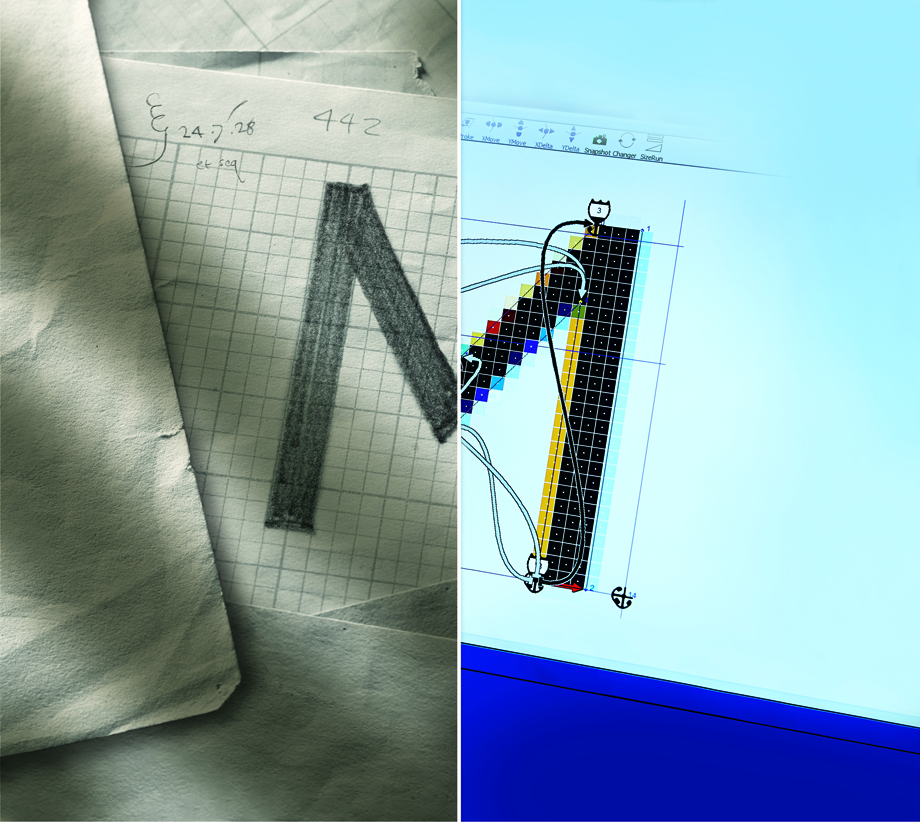

The figure illustrates the connection between the old and new worlds, i.e. the transformation from analog to digital. The curved arrows on the right side of the picture illustrate the effects of applying hinting instructions to glyph control points, and their interdependencies.

Leveraging Unicode

The Unicode Standard is a universal character encoding system developed by the Unicode Consortium to support the interchange, processing and display of written text in many of the world’s languages, both modern (i.e. in use today) and historic (making it possible, e.g., to convert ancient books and document archives into digital form).

The Unicode Standard is built on the fundamental principle of separating text content from text display. This goal is accomplished by introducing a universal repertoire of code points for all characters, and by encoding plain text characters in their logical order, without regard for a specific writing system, language or text direction. As a result, the Unicode Standard enables text sequences to be editable, searchable and machine-readable by a wide variety of applications.
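A small Python sketch, using only the standard `unicodedata` module, shows what "code points in logical order" means in practice. The mixed Latin and Hebrew sample string and the `describe` helper are illustrative choices. The Hebrew word is stored first letter first, even though it is displayed right to left; the character's bidirectional class (`L`, `R`, `WS`) tells the display engine how to order it, while searching and editing work on the stored sequence.

```python
import unicodedata

def describe(text):
    """List (code point, character name, bidirectional class) in stored (logical) order."""
    return [(f"U+{ord(ch):04X}", unicodedata.name(ch), unicodedata.bidirectional(ch))
            for ch in text]

# Latin "abc", a space, then the Hebrew word "shalom" (displayed right to left).
mixed = "abc \u05e9\u05dc\u05d5\u05dd"
for entry in describe(mixed):
    print(entry)

# The first Hebrew letter is stored right after the space, at index 4.
print(mixed.index("\u05e9"))  # 4
```

Turning this logical sequence into the correct visual order is the job of the rendering layer (the Unicode Bidirectional Algorithm) together with the font, not of the stored text.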

In short, the Unicode encoding defines the semantics of text content and the rules for processing it; to render text and make it human-readable, we need fonts that supply all the missing elements required for text display. Glyph outlines, character-to-glyph mapping, text metrics and language-specific layout features for dynamic text composition are only some of the data elements encoded in an OFF font. It is the combination of font information with Unicode-compliant character encoding that makes it possible for all computer applications to “speak” any of the world’s languages without effort!
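Two of those data elements, the character-to-glyph mapping and the text metrics, can be sketched with a hypothetical miniature font. The dictionaries below stand in for the roles of the OFF `cmap`, `hmtx` and `head` tables, but every glyph ID and width is invented for illustration, and real layout would also apply kerning and other features that this sketch ignores. Unmapped characters fall back to glyph 0, which OFF reserves for the `.notdef` glyph.

```python
# A hypothetical miniature font: glyph IDs and advance widths are invented for illustration.
UNITS_PER_EM = 1000                                  # design units per em (role of 'head')
CMAP = {ord("A"): 1, ord("V"): 2, ord(" "): 3}       # code point -> glyph ID (role of 'cmap')
ADVANCE_WIDTHS = {0: 500, 1: 667, 2: 667, 3: 250}    # glyph ID -> advance (role of 'hmtx')

def glyph_ids(text):
    """Map characters to glyph IDs; unmapped characters fall back to glyph 0 (.notdef)."""
    return [CMAP.get(ord(ch), 0) for ch in text]

def text_width(text, point_size):
    """Sum the advance widths in font units and scale them to the requested point size."""
    units = sum(ADVANCE_WIDTHS[g] for g in glyph_ids(text))
    return units * point_size / UNITS_PER_EM

print(glyph_ids("AV A"))         # [1, 2, 3, 1]
print(glyph_ids("A?"))           # [1, 0]
print(text_width("AV A", 12))
```

Keeping metrics in abstract font units and scaling only at the end is what makes the same font data usable at any size and on any device resolution.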

Advanced typography

OFF fonts offer a number of key ingredients for advanced typography, including features that are mandatory for certain languages (e.g. ligatures and contextual glyph substitution) and a number of discretionary features. These include stylistic alternates, colour fonts for emoji, and many other advanced features needed to support bidirectional text, complex scripts and writing systems, and the layout and composition of text that mixes characters from different languages; the list goes on!
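Ligatures are a good example of glyph substitution. In an OFF font they are stored as ligature substitution lookups in the `GSUB` (glyph substitution) table; the Python sketch below is a simplified stand-in for such a lookup, not an implementation of it. The glyph names and the ligature list are hypothetical, and the sketch assumes the common convention of trying the longest candidate first at each position, whereas a real font defines the order of its own ligature sets.

```python
# Hypothetical ligature data: input glyph sequence -> ligature glyph (names are illustrative).
LIGATURES = {
    ("f", "f", "i"): "f_f_i",
    ("f", "i"): "f_i",
    ("f", "l"): "f_l",
}
LONGEST = max(len(seq) for seq in LIGATURES)

def apply_ligatures(glyphs):
    """Replace glyph runs with ligatures, trying the longest candidate first at each position."""
    out, i = [], 0
    while i < len(glyphs):
        for n in range(min(LONGEST, len(glyphs) - i), 1, -1):
            candidate = tuple(glyphs[i:i + n])
            if candidate in LIGATURES:
                out.append(LIGATURES[candidate])
                i += n
                break
        else:  # no ligature starts here: keep the glyph unchanged
            out.append(glyphs[i])
            i += 1
    return out

print(apply_ligatures(list("office")))  # ['o', 'f_f_i', 'c', 'e']
print(apply_ligatures(list("flat")))    # ['f_l', 'a', 't']
```

Because substitution operates on glyphs rather than characters, the underlying text still contains the separate letters and remains searchable, in line with the Unicode separation of content from display.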

More recently, the addition of variable fonts has made it possible both to encode font data more efficiently (e.g. by replacing a multi-font family with a single variable font that offers a range of weight, width and style choices) and to introduce new dynamic typographic tools that have revolutionized the way we use fonts on the web.
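The core idea of a variable font is that one set of outlines plus stored adjustments (deltas) can produce any instance along a design axis. The sketch below, in Python, assumes a single weight axis with made-up limits (100 to 900, default 400), a stem whose default width and bold delta are invented numbers, and only one delta region peaking at the heaviest weight. It follows the general pattern of normalizing the user's axis value to the range -1 to 1 and scaling each delta by how far the instance lies within its region, but it ignores optional axis remapping and intermediate regions.

```python
# Hypothetical 'wght' axis and outline data, for illustration only.
WGHT_MIN, WGHT_DEFAULT, WGHT_MAX = 100.0, 400.0, 900.0
STEM_DEFAULT = 120.0       # stem width in font units at the default (Regular) instance
STEM_DELTA_AT_MAX = 80.0   # extra width stored for the region peaking at the heaviest weight

def normalize(value, minimum, default, maximum):
    """Map a user-space axis value onto [-1, 1], with the default at 0 (no remapping)."""
    value = max(minimum, min(maximum, value))
    if value < default:
        return (value - default) / (default - minimum)
    if value > default:
        return (value - default) / (maximum - default)
    return 0.0

def stem_width(weight):
    """Apply one delta whose region peaks at +1.0; its scalar is the normalized value in (0, 1]."""
    n = normalize(weight, WGHT_MIN, WGHT_DEFAULT, WGHT_MAX)
    scalar = n if 0.0 < n <= 1.0 else 0.0
    return STEM_DEFAULT + scalar * STEM_DELTA_AT_MAX

for w in (300, 400, 650, 900):
    print(w, stem_width(w))
```

With these assumed numbers, weight 650 sits halfway between the default and the maximum, so it receives half the delta (a stem of 160 units). Weights below the default get no change here because the sketch defines no region for them; a real font would normally store deltas for the light end as well.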

Font Embedding

As I mentioned earlier, thousands of fonts are available for use in media presentations, and choosing a particular font is solely the author’s right and responsibility. Media devices and applications must respect the author’s choices to ensure faithful content presentation. By embedding fonts in electronic documents and multimedia presentations, content creators can be sure that text will be reproduced exactly as intended, preserving its appearance, look and feel, original layout and language choices.

OFF supports many different ways of embedding fonts, including embedding font data in electronic documents, streaming font data and encoding it as part of the ISO Base Media File Format, and font linking on the web. The OFF font technology standard developed by MPEG became a fundamental component in the deployment of the Web Open Font Format (WOFF), which has facilitated the adoption of OFF fonts on the web and is now widely supported by browsers.
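Whether a font arrives embedded, streamed or linked from a web page, the receiving software first has to recognize what kind of font data it has. The Python sketch below identifies a font file from its leading four-byte tag and decodes the fixed 44-byte header of a WOFF 1.0 file, whose `flavor` field records the tag of the OFF font packaged inside. The helper names are illustrative, and the sample header is synthetic (no table data follows it); it exists only to exercise the parser.

```python
import struct

# Four-byte tags that open common font files.
SIGNATURES = {
    b"\x00\x01\x00\x00": "OFF/OpenType, TrueType outlines",
    b"OTTO": "OFF/OpenType, CFF outlines",
    b"ttcf": "font collection",
    b"wOFF": "WOFF 1.0",
    b"wOF2": "WOFF 2.0",
}

WOFF_HEADER = ">4sIIHHIHHIIIII"  # 44-byte WOFF 1.0 header, big-endian
WOFF_FIELDS = ("signature", "flavor", "length", "numTables", "reserved",
               "totalSfntSize", "majorVersion", "minorVersion", "metaOffset",
               "metaLength", "metaOrigLength", "privOffset", "privLength")

def identify(data: bytes) -> str:
    """Classify a font file by its leading tag."""
    return SIGNATURES.get(data[:4], "unknown")

def read_woff_header(data: bytes) -> dict:
    """Decode the fixed-size WOFF 1.0 header into a field-name -> value dict."""
    values = struct.unpack(WOFF_HEADER, data[:struct.calcsize(WOFF_HEADER)])
    return dict(zip(WOFF_FIELDS, values))

# A synthetic header wrapping a font with TrueType outlines (flavor 0x00010000).
sample = struct.pack(WOFF_HEADER, b"wOFF", 0x00010000, 44, 0, 0, 12, 1, 0, 0, 0, 0, 0, 0)
print(identify(sample))                         # WOFF 1.0
print(hex(read_woff_header(sample)["flavor"]))  # 0x10000
```

A full WOFF reader would go on to read the table directory and decompress each table; the point here is only that the web format is a wrapper around OFF font data rather than a different font technology.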

What is OFF being used for?

OFF has become the preferred font encoding solution for applications that demand advanced text and graphics capabilities. Fonts are a key component of any media presentation, including web content and applications, digital publishing, newscasts, commercial broadcasting and advertising, e-learning, games, interactive TV and Rich Media, multimedia messaging, subtitling, Digital Cinema, document processing, etc.

It is fair to say that, after many years of OFF development, support for OFF, and for font technology in general, has become ubiquitous in consumer electronics devices and applications, bringing tremendous benefits to font designers, authors and users alike!

Acknowledgement

I gratefully acknowledge the review of, and substantial additions to, this article by Vladimir Levantovsky of Monotype. For 20 years Vladimir has dedicated his energies to coordinating the font community’s efforts, developing font standards in MPEG and promoting them to industry.

Thank you Vladimir!
